By | Ask nextquestion

Throughout humanity's effort to understand nature, new scientific tools have been the main force pushing back the limits of knowledge. The 2024 Nobel Prize in Chemistry marked the arrival of artificial intelligence (AI) at the center of scientific research. Using AI-driven protein structure prediction and design, the three laureates solved a problem that had troubled biology for half a century and achieved de novo protein design. These discoveries deepen our understanding of life and offer practical routes to new drugs, vaccines, and environmentally friendly technologies, as well as to tackling global challenges such as antibiotic resistance and plastic degradation.

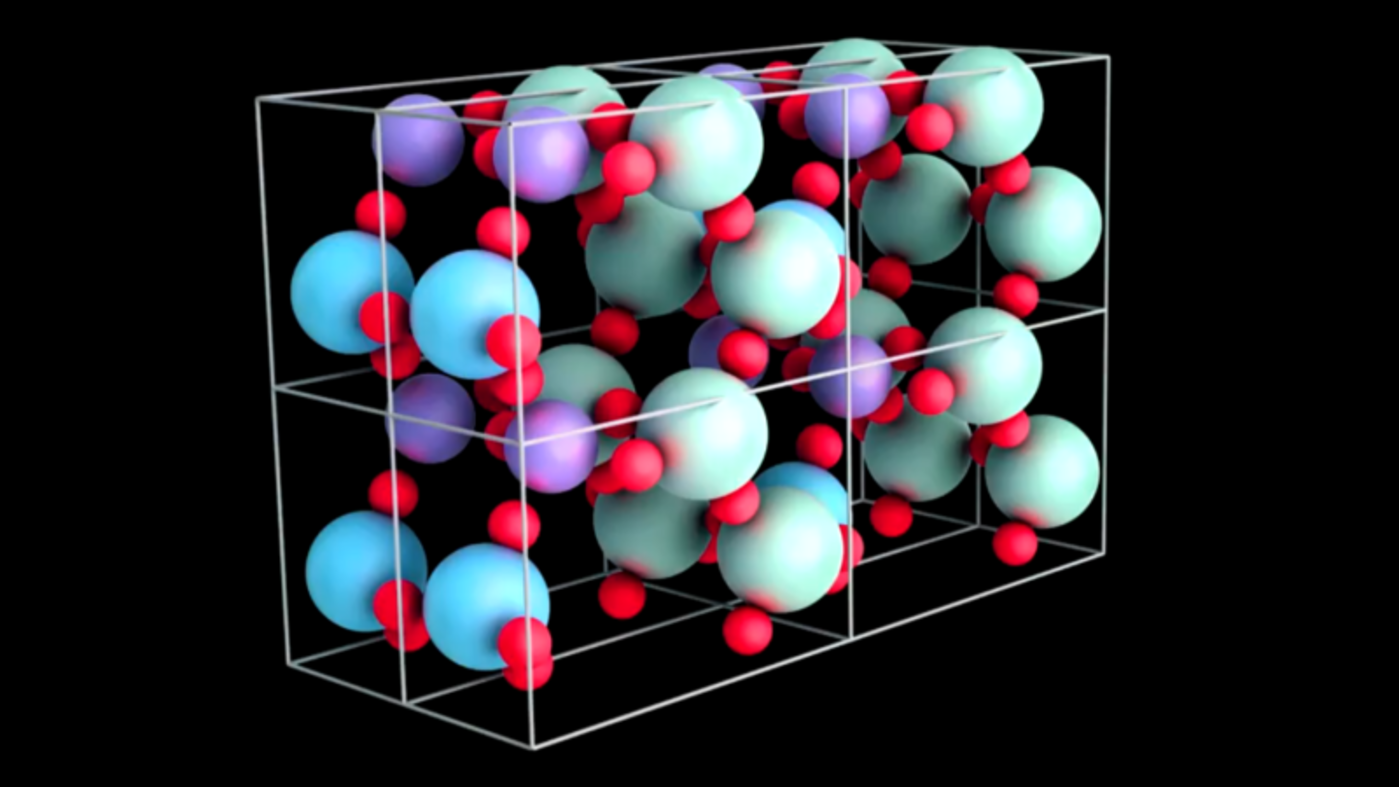

The excitement had barely subsided when MatterGen, a generative AI model released by Microsoft in early 2025, sent shock waves through materials science: for TaCr₂O₆, a new material it produced by inverse design, the experimentally measured bulk modulus differed from the design target by less than 20%, and the development cycle for new materials shrank from years to weeks. These breakthroughs point to an irreversible trend: AI has changed from an auxiliary tool for scientists into a partner in scientific discovery, and it is reshaping the underlying logic of research.

▷ Inorganic materials designed by MatterGen. Source: @satyanadella

This scientific revolution presents researchers with unprecedented opportunities and challenges.

On the one hand, many researchers have solid domain expertise but lack sufficient knowledge of and skill with AI. They may find its applications confusing and constraining, and may not know where to start in order to get the most out of AI for a specific research task. On the other hand, the wet-lab methods that many fields depend on involve costly trial and error and large numbers of repetitive experiments. Together with the heavy demands on staff and materials, this adds considerable uncertainty to the research process.

Along the way, much seemingly useless data is discarded, so potentially valuable information goes unexplored and resources are wasted. From the AI side, data in the natural and social sciences has long been scarce. Even when data can be collected, problems of low confidence or poor interpretability are hard to avoid (for example, researchers may cherry-pick evaluation metrics when assessing model performance). With large language models (LLMs) in particular, fabricated statements and incorrect citations appear from time to time, deepening doubts about the credibility of AI results.

The black-box nature of AI also means that many generated results lack transparency and cannot clearly explain the mechanism and logic behind them, which limits how far AI is trusted and how deeply it is applied in research. More seriously, as AI develops and spreads, some work once done by human scientists is being automated, and some positions are at risk of being replaced. A growing number of researchers worry that widespread use of AI may weaken human creative work. If the direction of AI development is not managed carefully, this technological revolution may bring profound changes to social structure, the job market, and scientific ethics.

This article offers researchers practical guidance and strategic suggestions on how to meet these challenges and use AI to assist their work. It first identifies the key areas in which AI can support scientific research and explores the core elements needed to achieve breakthroughs in them. It then analyzes the common risks of using AI in research, in particular its potential impact on scientific creativity and research reliability, and discusses how sound management and innovation can make AI deliver an overall net benefit. Finally, it proposes three action guidelines to help researchers embrace AI proactively and usher in a golden age of scientific exploration.

01 Key areas where AI supports scientific research

Changes in the way knowledge is acquired, created and disseminated

To make new breakthrough discoveries, scientists must climb ever-higher mountains of existing knowledge. As new knowledge keeps emerging, specialization deepens and the burden of knowledge grows heavier. As a result, scientists who make major innovations are, on average, getting older, lean more toward interdisciplinary research, and are mostly based at top academic institutions. Although small teams are generally better at advancing disruptive scientific ideas, the share of papers written by individuals or small teams has declined year after year. When it comes to sharing results, most scientific papers are dense and jargon-heavy, which hinders exchange among researchers and makes it hard to interest other researchers, the public, businesses, or policymakers in the work.

However, with the development of AI, and large language models in particular, new ways of addressing these challenges are emerging. With LLM-based scientific assistants, researchers can extract the most relevant insights from a vast literature more efficiently, and can also put questions directly to research data, for example to explore associations between behavioral variables in a study. The laborious cycle of analysis, writing, and review is then no longer the only route to new findings, and extracting discoveries directly from data could significantly accelerate the scientific process.

As the technology advances, especially through fine-tuning LLMs on domain-specific scientific data and through breakthroughs in long context windows and cross-literature citation analysis, scientists will be able to extract key information more efficiently and work more productively. Furthermore, if "meta-bot" assistants can be developed to integrate data across research fields, they could answer more complex questions and give people a panoramic view of a discipline's knowledge. A new kind of researcher may well emerge who designs advanced queries for these "paper bots" and pushes the boundaries of science by intelligently combining fragments of knowledge from many fields.

These technologies offer unprecedented opportunities, but they also carry risks. We need to rethink the nature of some scientific tasks. In particular, once scientists can rely on LLMs to critique research, tailor its implications, or turn it into interactive papers, audio guides, and other formats, what it means to read or write a scientific paper may change.

Generate, extract, label and create large scientific datasets

As the volume of scientific data keeps growing, AI is becoming more and more helpful. For example, it can improve the accuracy of data collection by reducing errors and noise in DNA sequencing, cell-type identification, or the recording of animal sounds. Scientists can also use LLMs' growing ability to analyze images, video, and audio to extract scientific data buried in less accessible sources such as publications, archives, and teaching videos, and turn it into structured databases for further analysis. AI can also annotate scientific data with supporting information that helps scientists make better use of it. For example, at least one-third of microbial proteins lack reliable functional annotation. In 2022, DeepMind researchers used AI to predict protein functions, adding new entries to databases such as UniProt, Pfam, and InterPro.

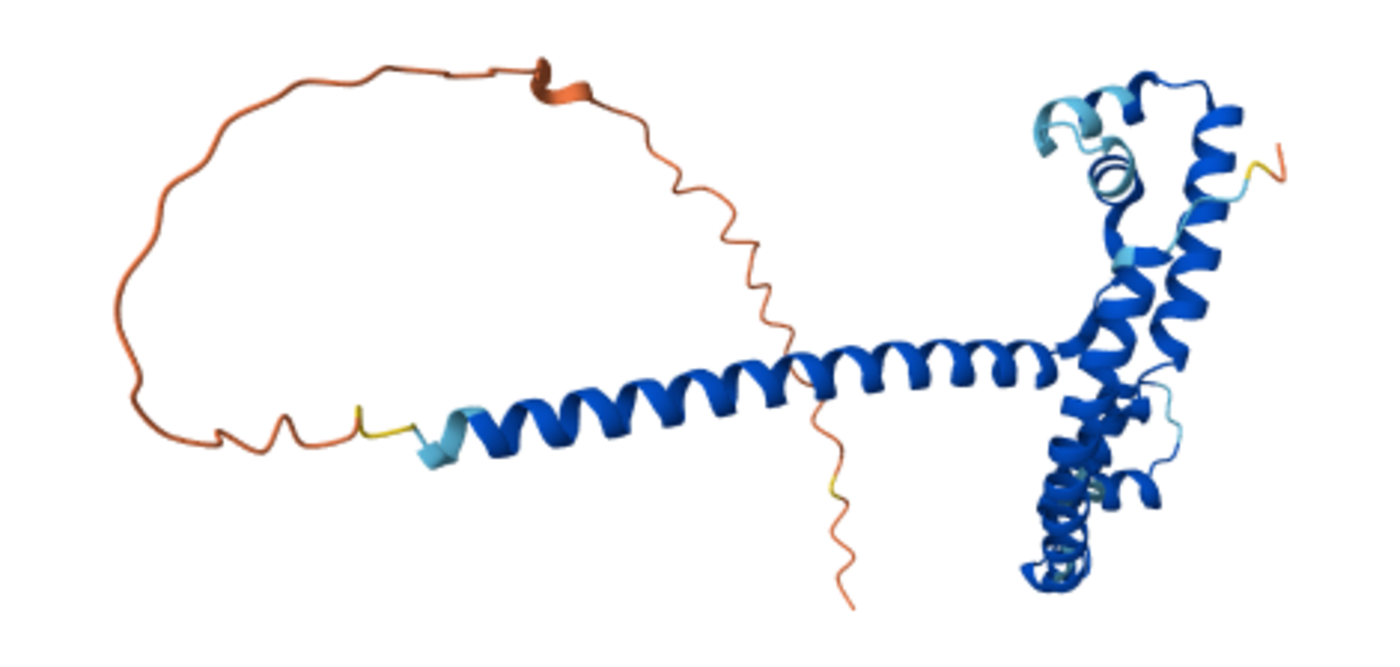

When real data is insufficient, validated AI models can also become an important source of synthetic scientific data. The AlphaProteo protein design model was trained on more than 100 million AI-predicted protein structures generated by AlphaFold 2, together with experimental structures from the Protein Data Bank. Such AI capabilities can complement existing processes for generating scientific data and can significantly raise the return on other research investments, such as digitizing archives or funding new data collection technologies and methods. In single-cell genomics, for example, enormous single-cell datasets are being built with unprecedented precision to drive progress in the field.

▷ The bluer the color, the higher the reliability, and the redder the color, the lower the reliability. Source: AFDB

Simulate, accelerate and inform complex experiments

Many scientific experiments are costly, complex, and time-consuming, and some cannot be carried out at all because researchers lack access to the necessary facilities, participants, or inputs. Nuclear fusion is a typical example. Fusion promises an almost inexhaustible, zero-emission energy source that could enable large-scale use of energy-intensive technologies such as seawater desalination. To achieve fusion, scientists need to create and control plasma, but the facilities required are extremely complex to build. Construction of the prototype tokamak reactor of the International Thermonuclear Experimental Reactor (ITER) began in 2013, but plasma experiments will not begin until the mid-2030s at the earliest. AI can help simulate fusion experiments so that later experimental time is used more efficiently. Researchers can run reinforcement learning agents in simulations of the physical system to control the shape of the plasma. Similar ideas can be extended to other large facilities such as particle accelerators, astronomical telescope arrays, or gravitational wave detectors.

AI-based simulation of experiments will look different from one discipline to another, but one thing is common: the simulations usually inform and guide physical experiments rather than replace them. For example, the AlphaMissense model can classify 89% of the 71 million possible human missense variants, helping scientists focus on the variants most likely to cause disease and so allocate experimental resources more effectively.

▷ The reactor chamber of DIII-D, an experimental tokamak fusion reactor operated by General Atomics in San Diego, which has been used for research since it was completed in the late 1980s. The characteristic ring-shaped chamber is lined with graphite to help withstand extreme temperatures. Source: Wikipedia

Modeling complex systems and interactions between their components

In a 1960 paper, Nobel laureate Eugene Wigner marveled at the unreasonable effectiveness of mathematical equations in modeling natural phenomena such as planetary motion. However, over the past century, models that rely on systems of equations or other deterministic assumptions have struggled to capture the shifting dynamics and chaos of complex systems in biology, economics, weather, and other domains. These systems have large numbers of components that interact closely and can behave randomly or chaotically, making it difficult for scientists to predict or control their responses in complex scenarios.

AI can improve the modeling of these complex systems by ingesting more data about them and learning more powerful patterns and regularities from it. For example, traditional numerical weather prediction is based mainly on carefully defined physical equations, which explain some of the atmosphere's complexity but remain limited in accuracy and expensive to compute. Forecasting systems based on deep learning can predict weather conditions 10 days in advance and outperform traditional models in both accuracy and speed.

In many cases, AI does not replace traditional methods for modeling complex systems but enriches them. For example, agent-based modeling simulates interactions between individual actors, such as companies and consumers, to study how those interactions affect larger, more complex systems like the economy. Traditional methods require scientists to specify the agents' behavior in advance, with rules such as "buy 10% less when the price rises" or "save 5% of salary every month." But the complexity of the real world often stretches these models too thin, making it hard for them to predict emerging phenomena accurately, such as the impact of live streaming on the retail industry.
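A minimal sketch of such a preset-rule agent is shown below. The class name, the starting quantities, and the price series are hypothetical; only the two rules (buy 10% less after a price rise, save 5% of salary each month) come from the text.

```python
from dataclasses import dataclass


@dataclass
class RuleBasedConsumer:
    """Consumer whose behavior is fixed in advance by the modeler."""
    monthly_salary: float
    quantity: float          # units bought per month
    savings: float = 0.0

    def step(self, previous_price: float, price: float) -> float:
        # Rule 1: buy 10% less when the price has risen.
        if price > previous_price:
            self.quantity *= 0.9
        # Rule 2: save 5% of salary every month.
        self.savings += 0.05 * self.monthly_salary
        return self.quantity


def simulate(prices: list[float]) -> RuleBasedConsumer:
    """Run one hypothetical consumer through a series of monthly prices."""
    agent = RuleBasedConsumer(monthly_salary=5000.0, quantity=100.0)
    for previous_price, price in zip(prices, prices[1:]):
        agent.step(previous_price, price)
    return agent


# Illustrative price series: three monthly steps, two of them price rises.
agent = simulate([10.0, 11.0, 11.0, 12.0])
print(round(agent.quantity, 2), round(agent.savings, 2))
```

The agent can only react to events its rules anticipate: a change that is not expressed as a price rise leaves its behavior untouched, which is the rigidity the paragraph describes.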

With AI, scientists can now create more flexible agents. These agents can communicate, take actions (such as searching for information or purchasing goods), and reason about and remember those actions. Reinforcement learning also lets the agents learn and adapt in dynamic environments, and even adjust their behavior in response to changes in energy prices or in pandemic response policies. These new methods make simulations more flexible and efficient and give scientists new tools for increasingly complex research problems.

Find innovative solutions to problems with a broad search space

Many important scientific problems come with an almost incomprehensibly large number of potential solutions. To design a small-molecule drug, scientists must search among more than 10⁶⁰ candidates; to design a protein 400 amino acids long from the 20 standard amino acids, the space of choices is as large as 20⁴⁰⁰. Traditionally, scientists have relied on some combination of intuition, trial and error, iteration, and brute-force computation to find the best molecule, proof, or algorithm. These methods cannot exhaust the search space and often miss the best solution. AI can open up new regions of these search spaces while homing in more quickly on the solutions most likely to be feasible and useful. This is a delicate balance.
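To make these magnitudes concrete, the short Python sketch below counts the sequences of a 400-residue protein built from the 20 standard amino acids and estimates how long a brute-force scan would take. The evaluation rate of one billion candidates per second is a purely illustrative assumption, not a figure from any real system.

```python
import math

AMINO_ACIDS = 20         # standard amino acids
LENGTH = 400             # residues in the protein
RATE_PER_SECOND = 1e9    # assumed (hypothetical) evaluation speed

# Python integers have arbitrary precision, so the count is exact.
n_sequences = AMINO_ACIDS ** LENGTH
exponent = len(str(n_sequences)) - 1   # order of magnitude in base 10

# Work in log space so the estimate does not overflow a float.
seconds_per_year = 365.25 * 24 * 3600
log10_years = (LENGTH * math.log10(AMINO_ACIDS)
               - math.log10(RATE_PER_SECOND * seconds_per_year))

print(f"20^400 is on the order of 10^{exponent}")
print(f"Exhaustive scan would take roughly 10^{log10_years:.0f} years")
```

Even under this generous assumed rate, exhaustive enumeration is out of reach, which is why methods that prioritize promising regions of the space matter.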

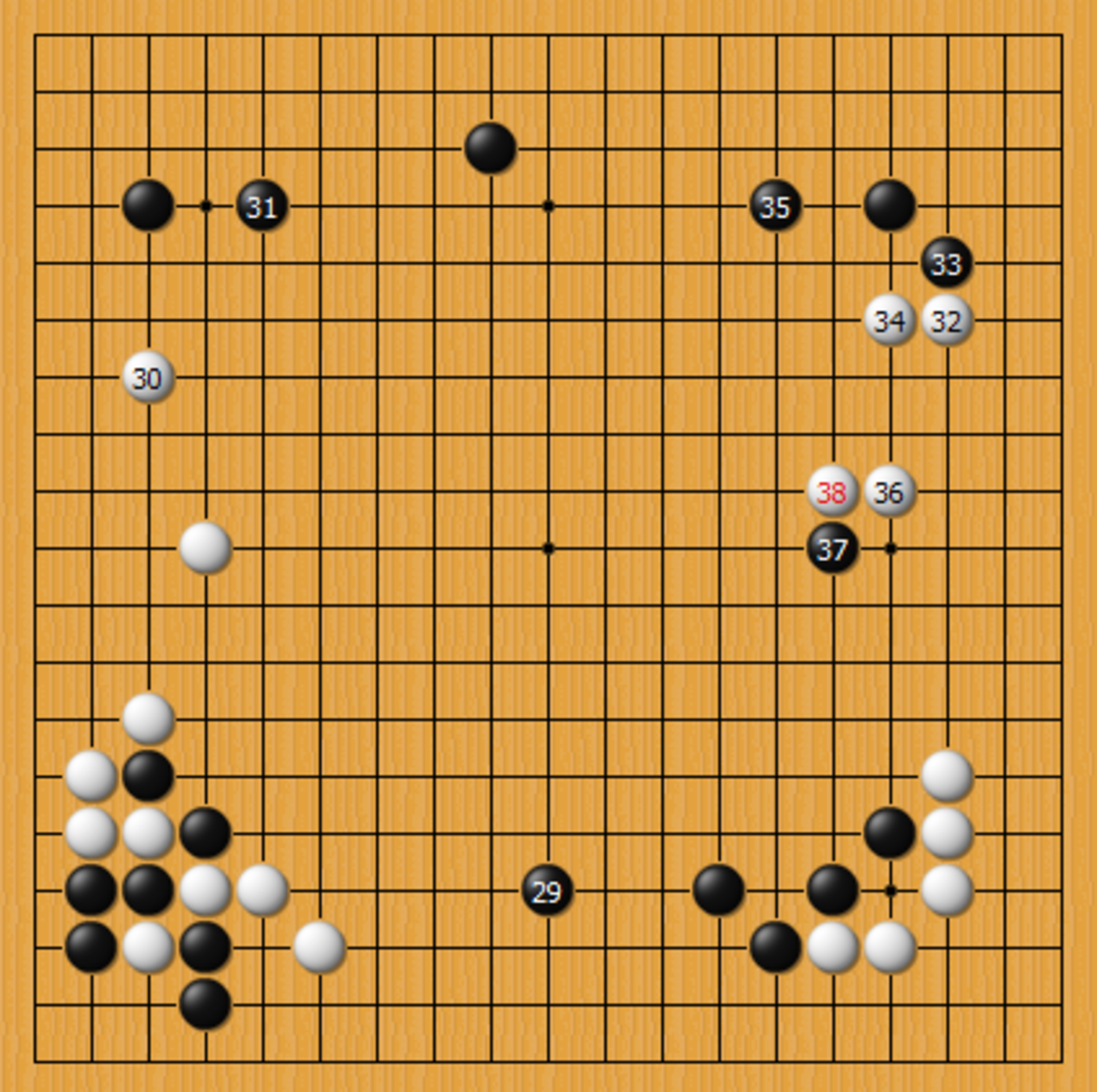

Take AlphaGo's 2016 match against Lee Sedol as an example. One of the AI's moves looked unconventional and lay outside established human Go practice and experience, yet it disrupted Lee Sedol's thinking and made it easier for AlphaGo to control the game. Lee Sedol later said he was shocked by the move. It went entirely beyond the way human Go players traditionally think, and it showed that AI can find solutions humans have never considered within a huge space of possibilities, driving strategic innovation.

▷ AlphaGo (black) against Lee Sedol (white) in the second game, which AlphaGo went on to win. AlphaGo's move 37 was played on the fifth line, surprising most Go players and experts. After the game, many praised the move as a demonstration of AlphaGo's whole-board judgment. Photo source: Mustard Seed View of Meru

02 Core elements of AI-driven scientific breakthroughs

Choice of problem

As Heisenberg, a founder of quantum mechanics, said, asking the right question is often most of the way to solving the problem. So how should the quality of a problem be judged? Demis Hassabis, CEO of DeepMind, has proposed a mental model: if all of science is pictured as a tree of knowledge, the problems to focus on are the "root node" problems, because solving them can unlock whole new research areas and applications. Second, it is necessary to assess whether AI is applicable and can bring benefits. That means looking for problems with particular characteristics, such as a huge combinatorial search space, large amounts of data, and a clear objective function for benchmarking performance.

Often a problem is suitable for AI in theory, but the input data is not yet available, so it may need to be shelved until the time is right. Beyond picking the right problem, it is also crucial to pitch it at the right level of difficulty and feasibility. A good problem statement for AI is usually one that can yield intermediate results. If the chosen problem is too hard, it will not generate enough signal to make progress. Striking this balance takes intuition and experimentation.

Choice of evaluation method

Scientists use a variety of evaluation methods, such as benchmarks, metrics, and competitions, to assess the scientific capabilities of AI models. Usually several methods are needed. For example, the developers of an AI weather forecasting model began with an initial progress metric based on a few key variables, such as surface temperature, and used it to improve the model step by step. Once the model reached a certain level of performance, they ran a more comprehensive evaluation using more than 1,300 metrics (inspired by the evaluation scorecard of the European Centre for Medium-Range Weather Forecasts).

The most influential evaluation methods for AI in science are often community-driven or community-endorsed. Community support also provides the rationale for publishing benchmarks so that researchers can use, criticize, and improve them. One concern needs watching, however: if benchmark data is inadvertently absorbed into a model's training data, the evaluation is no longer accurate. There is currently no perfect solution to this tension, but regularly releasing new public benchmarks, establishing new third-party evaluation bodies, and holding competitions are all feasible ways to keep testing and improving AI's research capabilities.
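One simple way to screen for such leakage is to look for long word sequences shared between a benchmark item and the training corpus. The sketch below is an illustrative heuristic with an assumed n-gram length and made-up example strings, not a standard or complete decontamination procedure.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams in a text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_fraction(benchmark_item: str, training_docs: list[str], n: int = 5) -> float:
    """Fraction of the item's n-grams that also occur in the training documents."""
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        return 0.0
    train_grams: set[tuple[str, ...]] = set()
    for doc in training_docs:
        train_grams |= ngrams(doc, n)
    return len(item_grams & train_grams) / len(item_grams)


# Hypothetical data for illustration only.
corpus = ["notes: the surface temperature over the ocean rose by two degrees last year"]
leaked = "the surface temperature over the ocean rose by two degrees"
clean = "estimate the binding energy of this newly proposed crystal structure"

print(overlap_fraction(leaked, corpus))  # high overlap suggests possible leakage
print(overlap_fraction(clean, corpus))   # no shared 5-grams
```

A high overlap fraction only flags an item for closer inspection. Paraphrased or translated leaks would pass this check, which is one reason fresh benchmarks and third-party evaluation remain necessary.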

Interdisciplinary collaboration

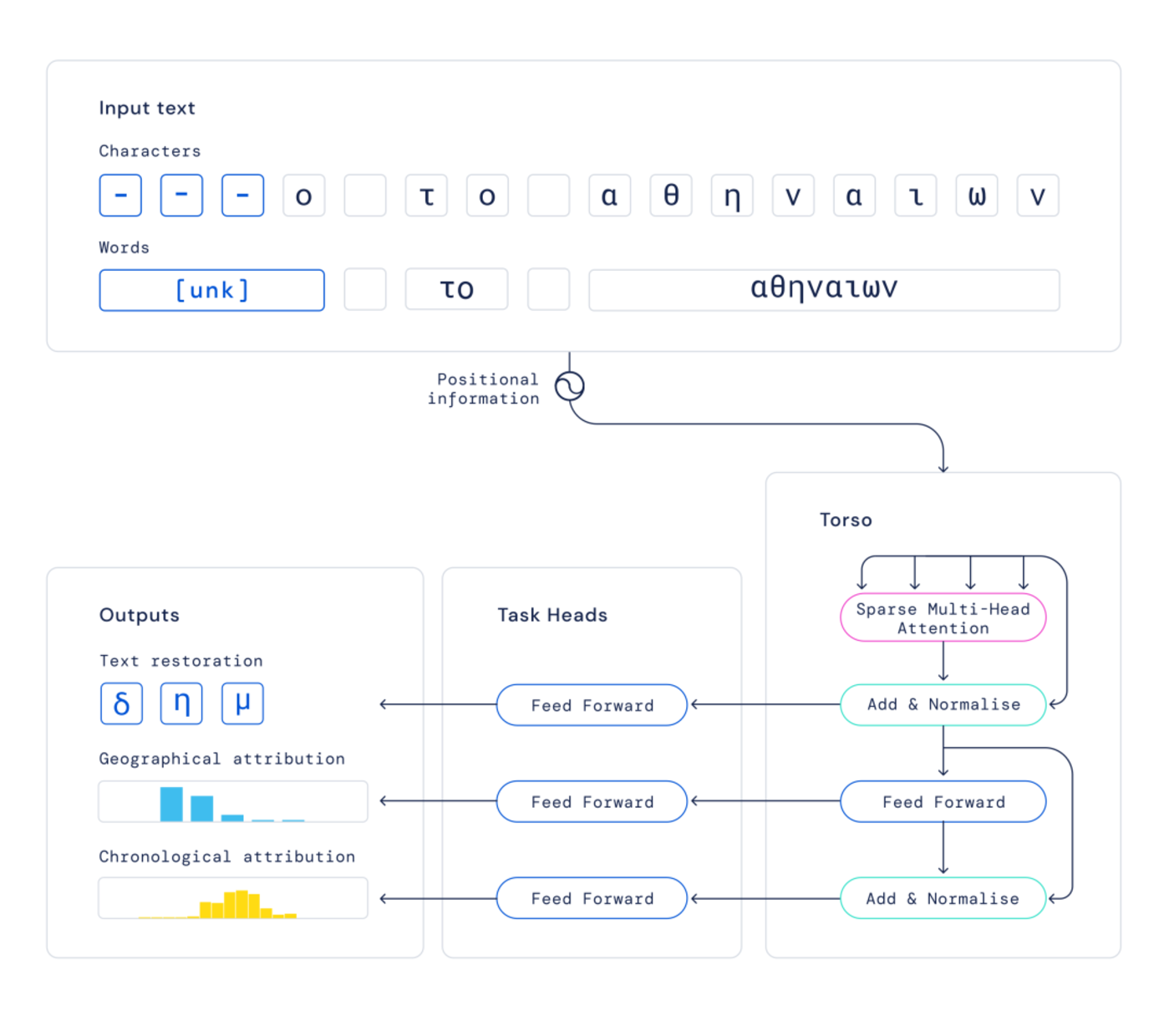

Applications of AI in science are often multidisciplinary by default, but to succeed they need to become genuinely interdisciplinary. An effective starting point is to choose a scientific problem that requires several kinds of expertise, and then give it enough time and attention for a collaborative team spirit to form around it. For example, DeepMind's Ithaca project uses AI to restore and classify damaged ancient Greek inscriptions, helping scholars study the thought, language, and history of past civilizations. To make it work, project co-lead Yannis Assael had to learn epigraphy, the study of ancient inscriptions. The project's epigraphers, in turn, had to learn how AI models work so that they could combine their professional intuition with the model's output.

Cultivating this kind of team spirit depends on the right incentives. Empowering a small, close-knit team to focus on solving the problem rather than on authorship credit was key to the success of AlphaFold 2. Such focus may be easier to achieve in industrial laboratories, but it also underlines the importance of long-term public research funding, especially funding that is not overly tied to publication pressure.

Similarly, organizations need to create positions and career paths for people who can bridge disciplines. At Google, for example, DeepMind's research engineers play a key role in fostering productive interaction between research and engineering, while project managers help build team culture and promote communication and collaboration between teams. More value should be placed on people who can spot and make connections between disciplines and quickly build skills in new fields. In addition, to stimulate the exchange of ideas, organizations should encourage scientists and engineers to rotate between projects regularly, build a culture of curiosity, humility, and critical thinking, and enable practitioners from different fields to offer constructive opinions and feedback in open discussion.

Of course, building partnerships is no easy task. Partners should reach consensus early in their discussions, clarify the overall goal, and resolve potentially thorny issues such as who holds rights to the results, whether to publish the research, whether to open-source models or datasets, and what type of license to use. Differences are inevitable, but if public and private organizations with different incentives can find a clear and fair exchange of value, they can succeed together while each plays to its strengths.

▷ The architecture of Ithaca. Damaged parts of the text are marked with dashes (-). In this example, the project team artificially corrupted the characters. From these inputs, Ithaca was able to restore the text and identify when and where it was written. Source: DeepMind

03 Manage AI risks, improve scientific creativity and research reliability

An in-depth survey published by Nature in 2023 found that 62% of research teams worldwide had used machine learning tools for data analysis, yet 38% of them could not adequately justify their choice of algorithm. That such gaps are so widespread is a warning: while AI reshapes the research paradigm, it also creates new cognitive traps.

Although AI can help us extract useful regularities from massive amounts of information, its inferences usually rest on existing data and knowledge rather than on creative thinking from a new perspective. This imitative kind of innovation may make research increasingly dependent on existing data and models and narrow the breadth of researchers' thinking. By relying too heavily on AI, we may overlook original, unconventional research methods that could open up new scientific fields. Especially in unknown and cutting-edge areas, human intuition and independent thinking remain crucial.

Beyond its impact on scientific creativity, the spread of AI may also threaten the reliability and understanding of research. When AI makes predictions and analyses, it usually relies on probability and pattern recognition rather than direct causal reasoning. Its conclusions may therefore reflect only a statistical correlation and not a true causal relationship. In addition, the black-box nature of AI algorithms makes their decision-making opaque. It is therefore crucial for researchers to understand the logic behind AI's conclusions, especially when results need to be interpreted or applied to practical problems. Accepting AI results blindly, without review, may lead to misleading conclusions and damage the credibility of the research.
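The gap between correlation and causation can be illustrated with a small simulation. In the sketch below, a hidden factor `z` drives both `x` and `y`, so the two are strongly correlated even though `x` has no effect on `y`; once `x` is set independently (an intervention), the correlation vanishes. The variable names, noise levels, and sample size are assumptions chosen only for illustration.

```python
import random


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(0)   # fixed seed so the run is repeatable
n = 5000

# Observational data: the hidden confounder z influences both x and y.
z = [rng.gauss(0, 1) for _ in range(n)]
x_obs = [zi + rng.gauss(0, 0.5) for zi in z]
y = [zi + rng.gauss(0, 0.5) for zi in z]

# Intervention: x is set at random, independently of z.
# y is unchanged because x never influenced it.
x_do = [rng.gauss(0, 1) for _ in range(n)]

r_observed = pearson(x_obs, y)
r_intervened = pearson(x_do, y)
print(f"observed: {r_observed:.2f}, under intervention: {r_intervened:.2f}")
```

A model trained only on the observational data would learn to predict `y` from `x` quite well, yet acting on `x` would change nothing, which is the risk the paragraph describes.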

On the other hand, we believe that if the risks of AI are managed sensibly, there is an opportunity to integrate the technology deeply into scientific exploration, help address more challenges, and achieve far-reaching impact.

Creativity

Scientific creativity is the ability of individuals or teams to advance science by proposing novel hypotheses, theories, methods, or solutions through distinctive ways of thinking, methodologies, or perspectives. In practice, scientists usually judge whether a new idea, method, or result is creative on subjective grounds, such as its simplicity, counter-intuitiveness, or beauty. Some scientists worry that large-scale use of AI could undermine the more intuitive, serendipitous, or eclectic ways of doing science. This problem may show up in several forms.

One concern is that AI models are trained to minimize outliers in their training data, whereas scientists often respond to puzzling data points by following their intuition and amplifying the anomaly. Another is that AI systems are usually trained for specific tasks, so relying on them may mean missing more serendipitous breakthroughs, such as solutions to problems nobody was studying. At the community level, some worry that if scientists adopt AI at scale, research outputs may gradually become homogeneous. After all, large language models may give similar suggestions in response to questions from different scientists, and scientists may over-focus on the disciplines and problems best suited to AI.

To mitigate these risks, researchers can adapt how they use AI while preserving the depth of exploratory research, for example by fine-tuning large language models to offer more personalized research ideas or to help scientists draw out their own.

AI can also enable scientific creativity that might not otherwise occur. The first kind of AI creativity is interpolation, in which AI systems identify new ideas within their training data, especially where human capacity to do so is limited. An example is using AI to detect anomalies in the huge datasets produced by Large Hadron Collider experiments.

The second is extrapolation, in which AI models generalize beyond their training data and propose more novel solutions.

The third is invention, in which AI systems propose entirely new theories or scientific systems that break completely from their training data, comparable to the original development of general relativity or the creation of complex numbers. AI systems have not yet shown this kind of creativity, but new approaches could unlock it. Examples include multi-agent systems in which agents optimize for different goals, such as novelty and counter-intuitiveness, and scientific question generation models trained specifically to pose new scientific questions that inspire innovative solutions.

Reliability

Reliability is the degree to which scientists can trust the research results of others, with confidence that those results are not due to chance or error. Some poor practices exist in AI research today, and researchers should stay highly vigilant. For example, researchers may choose the criteria for evaluating model performance according to their own preferences. AI models, especially LLMs, are also prone to hallucination: false or misleading output, including fabricated scientific citations. LLMs may also fuel a flood of low-quality papers similar to the output of paper mills.

A number of solutions to these problems already exist, including checklists of good practice for researchers to follow and various strands of AI factuality research, such as training AI models to ground their output in trusted sources or to help verify the output of other AI models.

On the other hand, researchers can also use AI to improve the reliability of the wider research base. For example, if AI could help automate parts of data annotation or experimental design, it would bring much-needed standardization to these areas. As AI models get better at grounding their output in citations, they can also help scientists and policymakers review the evidence base more systematically. Researchers can also use AI to help detect manipulated or fabricated images or to identify misleading scientific claims; the journal Science, for example, recently trialed an AI image analysis tool. AI may even play a role in peer review, given that some scientists already use LLMs to help review their own papers and to verify the output of AI models.

Interpretability

In a recent Nature survey, scientists said the biggest risk of using AI in research is relying on pattern matching at the expense of deeper understanding. One worry about AI undermining scientific understanding is that modern deep learning methods are theory-free: they neither contain nor provide a theoretical explanation of the phenomena they predict. Scientists are also concerned that AI models are not interpretable, in the sense that they are not based on explicit sets of equations and parameters. There is a further concern that any explanation of an AI model's output will not be useful or intelligible to scientists. After all, AI models may predict protein structure or the weather, but they do not necessarily tell us why a protein folds in a particular way or how atmospheric dynamics cause climate change.

In fact, the worry that low-level computation will displace "real" theoretical science is not new; past techniques such as the Monte Carlo method drew similar criticism. Fields that combine engineering and science, such as synthetic biology, have also been accused of prioritizing useful applications over deep scientific understanding. But history has shown that these methods and techniques ultimately advance scientific understanding. What is more, most AI models are not truly theory-free. Their datasets and evaluation criteria are usually built on prior knowledge, and some models have a degree of interpretability.

Interpretability techniques are developing steadily, and researchers try to understand an AI model's reasoning by identifying the concepts or internal structures it has learned. Although these techniques have many limitations, they have already allowed scientists to derive new scientific hypotheses from AI models. For example, studies have predicted the relative contribution of each base in a DNA sequence to the binding of different transcription factors, and explained the result using concepts familiar to biologists. In addition, the superhuman strategies AlphaZero learned while playing chess can be taught to human chess players after being analyzed by another AI system. This suggests that new concepts learned by AI may feed back into human understanding.

Even without interpretability techniques, AI can improve scientific understanding by opening research directions that would otherwise be impossible. For example, by making it possible to generate large numbers of predicted protein structures, AlphaFold allows scientists to search across protein structures, not just protein sequences. This approach was used to discover an ancient member of the Cas13 protein family with potential for RNA editing, especially in diagnosing and treating disease. The discovery also challenges earlier assumptions about how Cas13 evolved. Conversely, attempts to modify the AlphaFold architecture to incorporate more prior knowledge led to worse performance. This highlights the trade-off between accuracy and interpretability. The opacity of AI models stems from their ability to operate in high-dimensional spaces that may be incomprehensible to humans but are necessary for scientific breakthroughs.

04 Conclusion: Seize the opportunity with an action plan for AI-empowered research

Clearly, researchers should take seriously the potential of AI to accelerate the scientific process. So where should they start? Making full use of AI-driven scientific opportunities requires embracing change proactively. The following suggestions may help.

First, master the language of AI tools: understand the principles behind techniques such as generative models and reinforcement learning, and become proficient in using open-source codebases for customized exploration. Second, close the loop between data and experiments: verify AI-generated results quickly through automated laboratories such as the A-Lab at Lawrence Berkeley National Laboratory, forming an iterative chain of hypothesis, generation, and verification. Most importantly, reshape the scientific imagination: when AI can design proteins or superconductors beyond the range of human experience, scientists should turn to more fundamental questions, such as using AI to reveal the hidden relationships between material properties and microstructure, or exploring multi-scale coupling mechanisms across physical fields. As Nobel laureate David Baker said: “AI does not replace scientists, but gives us a ladder to touch the unknown.” In this exploration of human-machine collaboration, only by deeply integrating human creative thinking with AI's brute computational power can the boundless possibilities of scientific discovery be fully realized.

References

https://www.aipolicyperspectives.com/p/a-new-golden-age-of-discovery

https://mp.weixin.qq.com/s/_LOoN785XhnXao9s9jTSVQ