By GeeTech

Tesla’s Optimus robot sorted parts on a factory floor, robots from Unitree (Yushu Technology) performed precisely choreographed dance moves at the Spring Festival Gala, and BYD set out to bring smart driving to the mass market. These landmark events signal that artificial intelligence is entering a pivotal year in its technological evolution.

Recently, Alibaba Group CEO Wu Yongming announced that over the next three years Alibaba will invest more than 380 billion yuan in cloud and AI infrastructure, more than its total spending in this area over the past ten years. This is also the largest investment a private Chinese company has ever made in cloud and AI infrastructure. According to IDC forecasts, AI will contribute US$19.9 trillion to the global economy by 2030 and drive 3.5% of global GDP in that year.

From the industrial revolution to the information revolution, every technological transition has been accompanied by an overhaul of infrastructure. If AGI is an expedition to the stars, AI infrastructure is the open road that leads there. Steam locomotives needed railway networks, electricity needed power grids, and the Internet needed optical fiber and base stations. The AI boom calls for a new infrastructure network of its own. It is not just a pipe for moving data but a nerve center that connects physical entities with intelligence, coordinates the global with the local, and balances efficiency with security. It is a new kind of network that lets machine intelligence and the physical world resonate at the same frequency.

On this network, agents such as robots, autonomous vehicles, and low-altitude aircraft will carry out real-time dialogue and human-machine interaction again and again through independent decision-making and collaborative control, and the door to the new world of AGI will open.

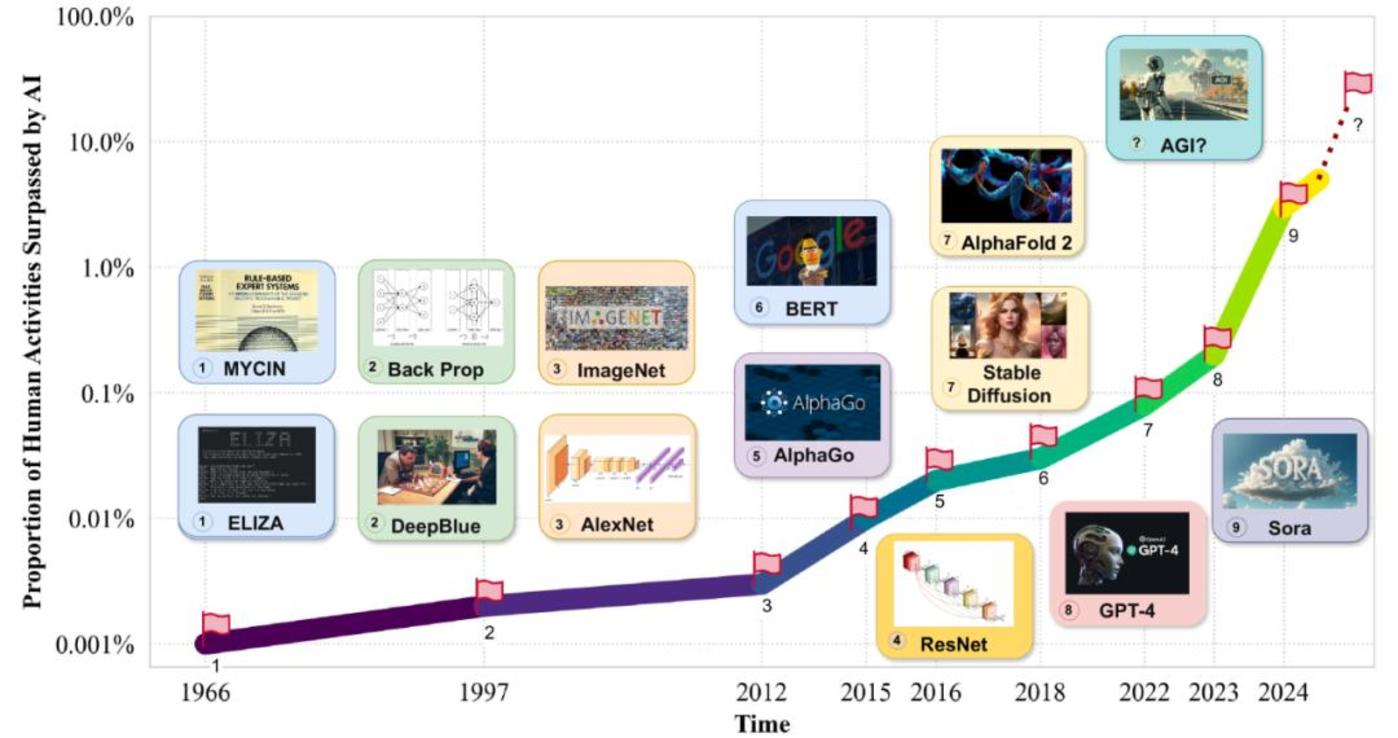

The inevitable path of AGI, from perceptual AI to physical AI

Voice assistants can accurately recognize dialect commands, and mobile phones can automatically capture the brightest smiles. These masterpieces of perceptual AI have built the digital senses of modern society. They are like invisible eyes and ears, transforming light signals and sound waves into computable data streams.

But when self-driving cars face sudden road collapses, or service robots cannot find a charging interface in a messy living room, pure environmental awareness immediately exposes fatal shortcomings.

When Boston Dynamics’ humanoid robot Atlas performs a somersault on a balance beam, it demonstrates not only precise movement but also the essence of physical intelligence: gravitational acceleration calculations must be synchronized with joint torque control, and the visual information captured by the camera must be turned instantly into a mechanical response, like muscle memory. This millisecond-level closed loop of perception and action reflects the essential characteristics of intelligence far better than AlphaGo’s victory over the human champion.

The continuous evolution of large models is like a butterfly flapping its wings, upending people’s traditional understanding of artificial intelligence. From first attempts at new architectures to the discovery of new universal laws, from capability generalization to seamless integration across modalities, these breakthroughs keep pushing back the boundaries of machine intelligence.

Large models bring a comprehensive upgrade in perception and cognition, giving machines a richer and more fine-grained understanding. At the same time, artificial intelligence is moving into another critical dimension: simulating and adapting to the real physical world.

From perception to decision-making to control and execution, end-to-end intelligent systems are emerging. Machines’ adaptability and flexibility keep improving. They can not only perceive and reason about complex scenarios on their own, but also proactively plan actions and make decisions. The accelerating deployment of embodied intelligence and autonomous driving is further shaping the physical form of machines.

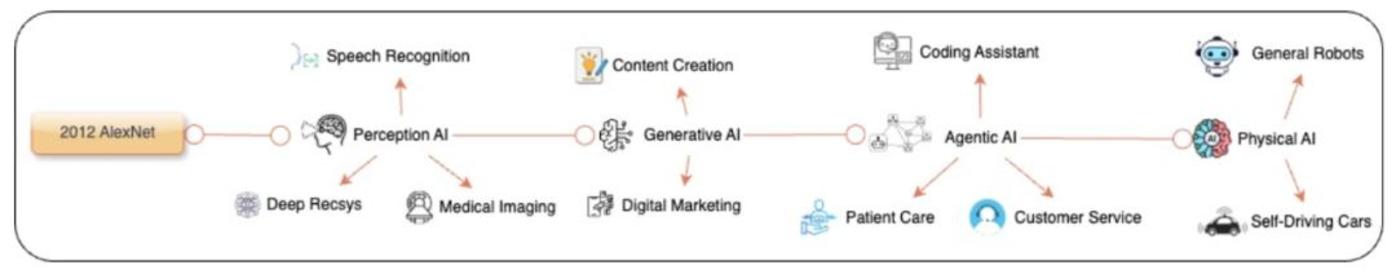

As the initial stage in the development of artificial intelligence, perceptual AI focuses on a machine’s ability to perceive its environment: the machine obtains information through vision, hearing and other senses, and produces a basic understanding and response. Perceptual AI lets machines begin interacting with the outside world, opening up possibilities for more complex intelligent behavior. Typical applications at this stage include speech recognition, image processing, and recommendation systems.

In 2012, a neural network called AlexNet took the AI research community by storm, outperforming all other types of models and winning that year’s ImageNet competition. Since then, neural networks have taken off. In the 13 years since, computer vision researchers have mastered object recognition and moved on to image and video generation, laying the foundation for generative AI.

Building on perceptual AI, generative AI extends machines’ abilities to generating content. This stage marks the point at which artificial intelligence can not only understand information but also create new content such as text, images and audio. It is regarded as a productivity amplifier, offering unprecedented tools and possibilities for marketing and creative work.

This year, DeepSeek’s popularity has once again pushed generative AI to the forefront. However, DeepSeek’s underlying logic is still statistical machine learning: feed in data, train, and output results. This means the technical ceiling of generative AI is clearly visible. Indeed, because its deep-thinking process is transparent, people can see its nature all the more clearly: a trained model rather than a real agent.

An interesting example: when asked how many r’s there are in “strawberry”, DeepSeek has to deliberate back and forth for 50 seconds before giving the correct answer. It can solve complex problems yet exposes its limitations in simple scenarios, because it relies on statistical correlation rather than causal logic. It is like a supermarket discovering a positive correlation between diaper sales and beer sales: AI can find the pattern, but it cannot understand the chain of cause and effect behind it, namely that dads buying diapers pick up some beer along the way. Even a company as strong as OpenAI, which is experimenting with reflective reasoning (such as GPT-4o’s multipath thinking), is in essence still doing data-driven optimization.
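What makes the letter-counting example striking is that the task is trivial for a deterministic program. The short Python sketch below is purely illustrative: the function name is made up for this article, and it says nothing about how DeepSeek or any other model works internally.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    if len(letter) != 1:
        raise ValueError("letter must be a single character")
    return word.lower().count(letter.lower())


print(count_letter("strawberry", "r"))  # prints 3
```

A rule-based counter gets this right every time, whereas a statistical model has to arrive at the same answer through learned patterns over tokens.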

Recently, Yann LeCun, Meta’s chief AI scientist, said at the 2025 Artificial Intelligence Action Summit that AI needs to understand the physical world. Only on this basis can AI truly approach human intelligence.

Although current large models perform well on tasks such as passing the bar exam and solving mathematical problems, they are unable to perform basic tasks in daily life, such as doing housework. For artificial intelligence, many seemingly simple actions, such as washing dishes or cleaning tables, are still complex problems that cannot be solved. These models do not really understand the physical world, but only simulate phenomena through pattern recognition and data generation.

To further strengthen AI’s ability to understand the real world, physical AI has been proposed. It enables AI systems not only to understand information but also to operate in the physical world. It combines an understanding of physical phenomena with intelligent decision-making, so that intelligent systems can respond flexibly to complex situations.

Physical AI gives embodied intelligence and autonomous driving systems stronger capabilities for perceiving, understanding and interacting with their environment, so that they can make better sense of their surroundings and respond in line with physical laws. For example, AI can directly control warehouse robots that transport goods, or optimize the driving strategies of autonomous vehicles.

The progression from perceptual AI to generative AI and finally to physical AI reflects the continuous evolution of artificial intelligence technology. Each stage builds on the technical achievements of the one before it, allowing machines not only to see and hear but also to understand and act. This gradual evolution lays the foundation for Artificial General Intelligence (AGI) and is having a profound impact on every industry.

AI and physical intelligence rise in a double helix

Traditional artificial intelligence is like a brain in a vat. It can solve equations and write poems, but it cannot truly touch reality. What makes physical AI disruptive is that it injects intelligence into physical entities, giving machines a closed loop of perception, decision-making and execution. From self-driving vehicles to smart grids, from flexible robots to molecular manufacturing equipment, these systems are no longer content to understand the world. They aim to change it.

Generative AI takes one- or two-dimensional information such as text, pictures, audio or video as input and outputs the same kinds of information. Physical AI, by contrast, must understand information from a three-dimensional or even four-dimensional perspective (space plus time). This makes it fundamentally different from information intelligence.

At the input level, physical AI systems can draw on many sensors, such as cameras, inertial sensors, radar and lidar. They process this data to sense and understand the world, including sensory information such as vision and touch, and can learn directly from sensor data. Learning and understanding the environment allows artificial intelligence to move from simple perception and generation to reasoning, planning and acting.

At the output level, physical AI generates time-series data (TSD). This data can be used directly to control embodied intelligence, giving it a brain that can operate flexibly under real physical rules.
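To make the idea of time-series output more concrete, here is a minimal sketch of what such a control stream could look like. The `JointCommand` type, its field names, the joint name and the 10 ms step are all assumptions made for illustration. The article does not specify any data format.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class JointCommand:
    """One timestamped sample in a hypothetical control stream."""
    t_ms: int                 # time offset from the start of the motion, in ms
    joint: str                # hypothetical joint name
    target_angle_deg: float   # commanded joint angle at this instant


def linear_trajectory(joint: str, start_deg: float, end_deg: float,
                      duration_ms: int, step_ms: int = 10) -> List[JointCommand]:
    """Interpolate linearly between two angles, one command per time step."""
    if duration_ms <= 0 or step_ms <= 0:
        raise ValueError("duration_ms and step_ms must be positive")
    steps = duration_ms // step_ms
    commands = []
    for i in range(steps + 1):
        # If the duration is shorter than one step, jump straight to the end angle.
        fraction = i / steps if steps else 1.0
        angle = start_deg + (end_deg - start_deg) * fraction
        commands.append(JointCommand(i * step_ms, joint, angle))
    return commands


stream = linear_trajectory("elbow", 0.0, 90.0, duration_ms=100)
print(len(stream), stream[0], stream[-1])
```

Each element pairs a point in time with a target state, which is the essential difference from generative AI output such as a block of text: the order and timing of the samples are part of the meaning.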

In addition, generative AI and physical AI differ in product form and application scenarios. Generative AI is not time-sensitive and does not require real-time feedback. For example, some of ChatGPT’s knowledge may only extend to September of last year. Physical AI systems must process input in real time, sensing and reasoning about their environment as it changes, so that embodied intelligence can respond in a timely manner.

Currently, most physical AI systems can handle only specific tasks or small environments, and results are uneven. In terms of real-world deployment, a currently popular example is the quadruped robot dog from Unitree (Yushu Technology), which can climb hills and wade through water, and can perform a series of difficult gymnastic moves, including two rotations on the spot followed by three and a half inverted rotations, as well as a smooth set of Thomas flares, side somersaults and a 360-degree jumping twist.

Just as big models revolutionized generative AI, physical AI has become the key to entering a new stage in fields such as embodied intelligence and autonomous driving.

First, the problem of putting large models into vehicles will be solved.

At present, large models are applied in the automotive field in two main areas: the smart cockpit and autonomous driving. The former is a natural fit for large model technology, because today’s smart cockpits focus on entertainment and interaction, which matches the language processing capabilities of large models well. The difficulty lies in the latter.

For autonomous driving, how to achieve efficient and safe vehicle control in complex and dynamic traffic environments has become a core problem. Existing autonomous driving systems generally lack multi-agent collaboration capabilities, efficient decision-making and interpretation capabilities, and it is difficult to effectively understand the behaviors and intentions of surrounding traffic participants when faced with complex traffic environments.

The other difficulty is data. In autonomous driving, large models need to be trained on large amounts of real-world data to make their driving more human-like. How to make this data serve model training more effectively is another challenge facing car companies in general.

Second, humanoid robots are accelerating toward their ChatGPT moment.

Last year, AI robotics startup Figure AI attracted a great deal of market attention when it released Figure 02. Figure 02 uses OpenAI’s GPT-4o multimodal model as its brain, allowing it to better understand and respond to complex instructions.

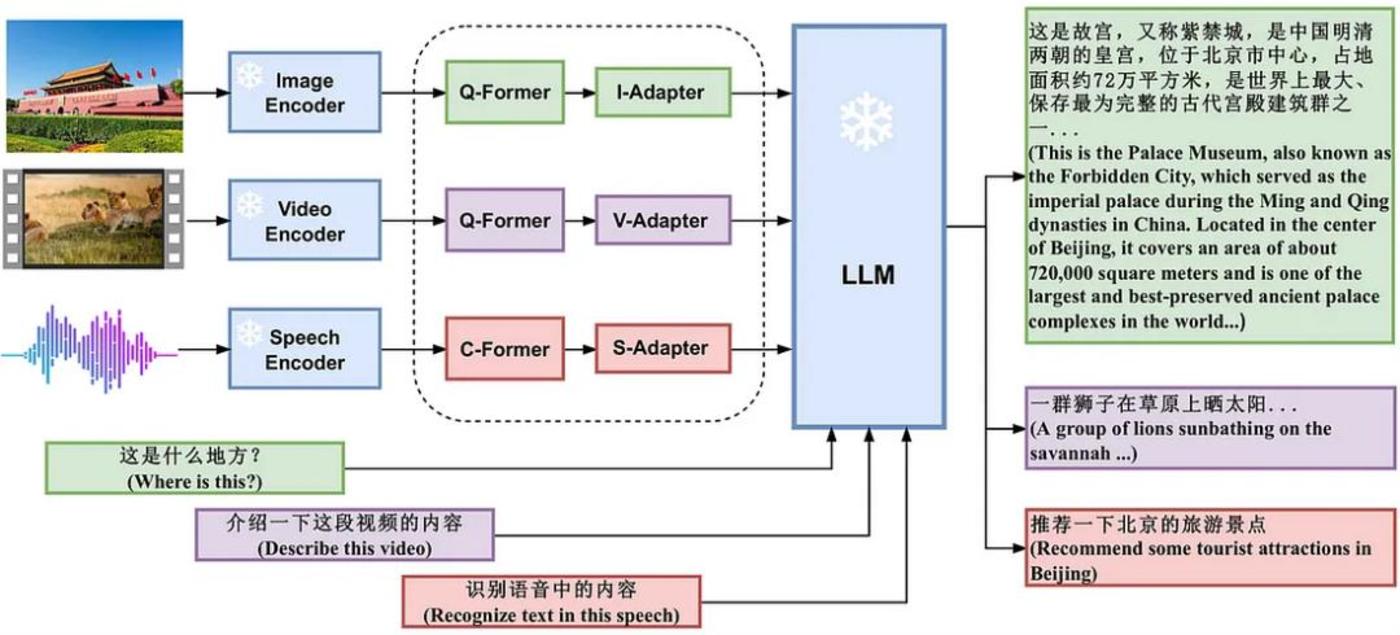

Multimodal large models are not merely a stacking of technologies. They are an important technical underpinning for the development of physical AI. The essence of large model capability is the compression and reprocessing of information. Multimodal large models expand the range of input modes and raise the ceiling of model capability.

The technical path of multimodal large models runs from the fusion of image and language modalities to the fusion of three or more modalities. Training on the language modality gives the model the capacity for logical thinking and information generation. The visual modality has a higher information density and is more closely tied to the real world, which greatly expands the range of application scenarios, so it has become the information carrier of first choice for multimodal technology. On this basis, the model can go on to develop further modalities such as action, sound, and touch to cope with more complex scenes.

The core advantage of multimodal large models lies in their excellent information fusion capabilities. Through simultaneous processing and deep integration of different modal data, the model can mine the intrinsic correlations between cross-modal information, thereby generating more comprehensive, accurate and insightful understandings and responses.

For example, in an image captioning task, the model can combine the visual elements of an image with relevant text to generate accurate, fluent natural language descriptions. The machine can then not only see what is in the image but also tell its story in language that humans understand, which better meets the needs of machines deployed widely in the physical world.

Infrastructure path to AGI

The rise of physical AI is pushing the development of artificial intelligence to a critical point: can we build a network that is smart, resilient and inclusive enough not only to release the full potential of the technology but also to protect its core values? This is a challenge for engineers and also a question for society as a whole.

The rules of the physical world are far harsher than those of digital space: a flawed decision causes not a program error but a bloody traffic accident, and model inference demands not probabilistic optimization but precise control at the millisecond level. As an AI network in which agents interact with the physical world in real time, the vehicle-road cloud network is the key to breaking through this glass barrier. It injects digital intelligence into the capillaries of the physical world through the large-scale deployment of roadside sensing units and edge computing nodes that cover urban roads and process massive amounts of data every second.

The technical core of this huge network is a breakthrough in integrated communication, sensing and computing architecture. Optical fiber acts as a nervous system that transmits each car’s change in acceleration within 0.1 seconds, lidar arrays act as optic nerves that capture the gait of a pedestrian 200 meters away, and cloud supercomputing clusters weave a digital twin of urban transportation across space and time.

When heavy rain causes visibility at an intersection to plummet, the roadside base station can predict driving trajectories in the lane within 100 milliseconds and send graded braking instructions to vehicles within 800 meters over the vehicle-road cloud network, giving autonomous vehicles a collective decision-making ability that exceeds the limits of human reaction.
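The heavy-rain scenario can be sketched roughly as follows. Only the 100 ms prediction budget and the 800 m range come from the text. The grade names, the 100 m and 300 m cutoffs, and every identifier in the code are assumptions chosen for illustration, not a description of any deployed system.

```python
from dataclasses import dataclass
from typing import Dict, List

BROADCAST_RADIUS_M = 800.0  # broadcast range mentioned in the article
LATENCY_BUDGET_MS = 100.0   # prediction budget mentioned in the article


@dataclass(frozen=True)
class Vehicle:
    vehicle_id: str
    distance_to_hazard_m: float


def braking_grade(distance_m: float) -> str:
    """Map distance to a braking grade. The 100 m and 300 m cutoffs are assumptions."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m <= 100.0:
        return "emergency"
    if distance_m <= 300.0:
        return "firm"
    return "gentle"


def graded_instructions(vehicles: List[Vehicle],
                        prediction_latency_ms: float) -> Dict[str, str]:
    """Return a braking grade for every vehicle inside the broadcast radius."""
    if prediction_latency_ms > LATENCY_BUDGET_MS:
        raise TimeoutError("prediction missed the 100 ms budget")
    return {
        v.vehicle_id: braking_grade(v.distance_to_hazard_m)
        for v in vehicles
        if v.distance_to_hazard_m <= BROADCAST_RADIUS_M
    }


fleet = [Vehicle("A", 50.0), Vehicle("B", 250.0),
         Vehicle("C", 700.0), Vehicle("D", 900.0)]
print(graded_instructions(fleet, prediction_latency_ms=80.0))
```

In this sketch the closest vehicle brakes hardest, vehicles farther away slow more gently, and vehicle D, which is outside the 800 m radius, receives no instruction at all.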

This AI network, which reaches beyond the digital into physical reality, is reconstructing the underlying logic of technological evolution. Once the vehicle-road cloud architecture shifts 70% of sensing and computing tasks to roadside equipment, vehicles need to keep only basic computing modules. It is as if ordinary drivers gained a God’s-eye view through the intelligent transportation system: the collective intelligence of municipal facilities makes up for the physical limits of single-vehicle perception.

Deeper changes are taking place at the level of models and algorithms. AI in the digital world can get by with 99% accuracy, but a model that controls the braking system must reach six nines (99.9999%) of reliability. Using digital twin technology, the vehicle-road cloud network clones the real road network into a virtual sandbox where trial and error can go on indefinitely. This closed loop between the virtual and the real lets artificial intelligence predict trajectories like a driver with 30 years of experience when an electric bicycle suddenly changes lanes, without being swayed by the emotions that affect human drivers.
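The gap between 99% and six nines is easier to appreciate as a count of failures. The short calculation below assumes, purely for illustration, one million independent decisions. The function name and the workload size are not from the article.

```python
def expected_failures(nines: int, decisions: int) -> float:
    """Expected failures at a success rate with `nines` nines (2 means 99%, 6 means 99.9999%)."""
    if nines < 0 or decisions < 0:
        raise ValueError("nines and decisions must be non-negative")
    # A success rate with n nines corresponds to a failure rate of 10 ** -n.
    return decisions / 10 ** nines


for nines in (2, 6):
    print(nines, expected_failures(nines, 1_000_000))
# prints:
# 2 10000.0
# 6 1.0
```

At 99%, a million braking decisions would be expected to produce ten thousand failures. At six nines the expected count falls to one, a gap of four orders of magnitude.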

Viewed from the perspective of technological evolution, the value of vehicle-road cloud networks goes far beyond the improvement of transportation efficiency. It proves a more universal paradigm: when AI breaks through the boundaries of the digital world, its evolutionary trajectory must be deeply integrated with physical entities.

This fusion is not simply a matter of controlling and being controlled, but the formation of autonomous evolution capabilities through continuous environmental interaction. Just like the evolutionary history of the biological nervous system, from the stress response of single-celled organisms to the complex cognition of the human brain, the leap in intelligence is always accompanied by the expansion of the dimension of interaction with the real world.

In the vehicle-road cloud network, vehicles are not only recipients of information but also producers of it. Data collected by each vehicle’s sensors, cameras and other devices is transmitted to the cloud in real time. This data not only helps optimize the current vehicle’s driving decisions but also feeds back into the operation of the entire intelligent transportation system. By sharing information, multiple vehicles and traffic management systems can sense the road collaboratively, improving overall safety and traffic flow.
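One simple way to picture collaborative sensing is several vehicles reporting the position of the same obstacle, each with its own measurement uncertainty. The sketch below combines such reports with inverse-variance weighting, a standard textbook fusion technique. It is chosen here as an assumption for illustration. The article does not say which fusion method real vehicle-road cloud systems use, and the function name and numbers are hypothetical.

```python
from typing import Iterable, Tuple


def fuse_estimates(estimates: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and the fused variance.
    """
    total_weight = 0.0
    weighted_sum = 0.0
    for value, variance in estimates:
        if variance <= 0:
            raise ValueError("variance must be positive")
        weight = 1.0 / variance  # more certain reports count for more
        total_weight += weight
        weighted_sum += weight * value
    if total_weight == 0.0:
        raise ValueError("need at least one estimate")
    return weighted_sum / total_weight, 1.0 / total_weight


# Two vehicles report the obstacle's position along the lane (meters, variance).
position, variance = fuse_estimates([(10.0, 1.0), (12.0, 1.0)])
print(position, variance)  # prints 11.0 0.5
```

The fused variance is smaller than either individual variance, which is the quantitative sense in which shared perception beats what any single vehicle can see alone.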

The awakening of physical AI shows that the turning point of the intelligent revolution has arrived. When cities become flowing neural networks, every robot and every car can become an intelligent agent that makes autonomous decisions. As DeepSeek founder Liang Wenfeng said: “The future of AI is not to replace humans, but to become infrastructure like water and electricity, so that everyone can enjoy the convenience brought by technology.”

A robotic arm in the laboratory is learning to predict the tremor frequency just before a coffee cup slips, while a meteorological AI system adjusts the blade angle of a wind turbine in step with the weather. These seemingly scattered breakthroughs are in fact weaving an intelligent collaborative network that spans the world. When the network reaches critical mass, perhaps we will finally understand the ultimate question Turing asked in 1950: can machines think? The answer may be hidden in the sparks generated by the continuous dialogue between machines and the physical world.