(Photo source: Photo taken by Zhijia Lin, editor of GuShiio.comAGI)

Nvidia has just posted the best annual results in its history.

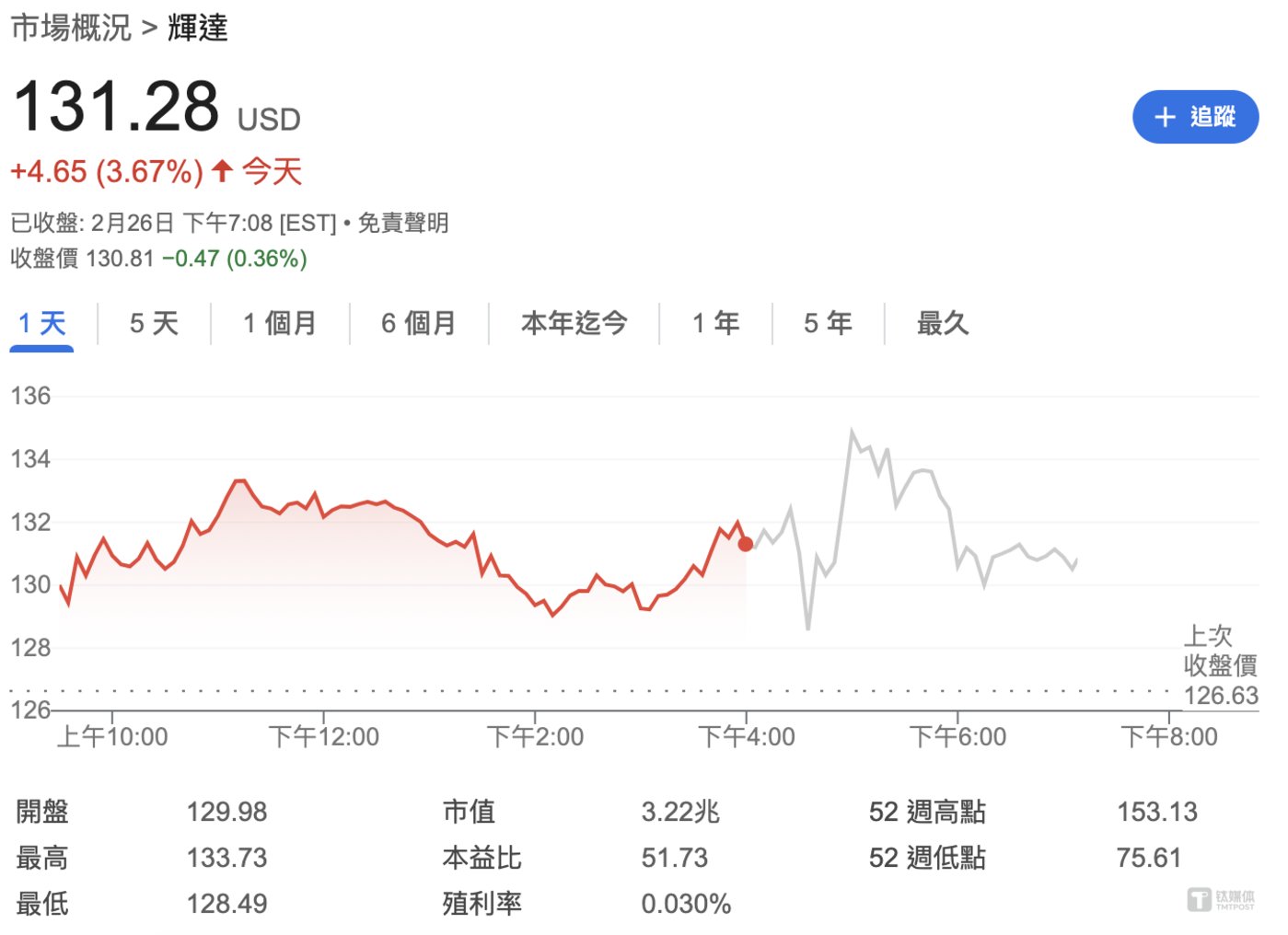

On the morning of February 27, Beijing time, NVIDIA, the world’s largest AI chip company by market capitalization, released its results for the fourth quarter of fiscal year 2025 (roughly the fourth quarter of calendar 2024) and for the full fiscal year, both ending January 26 this year.

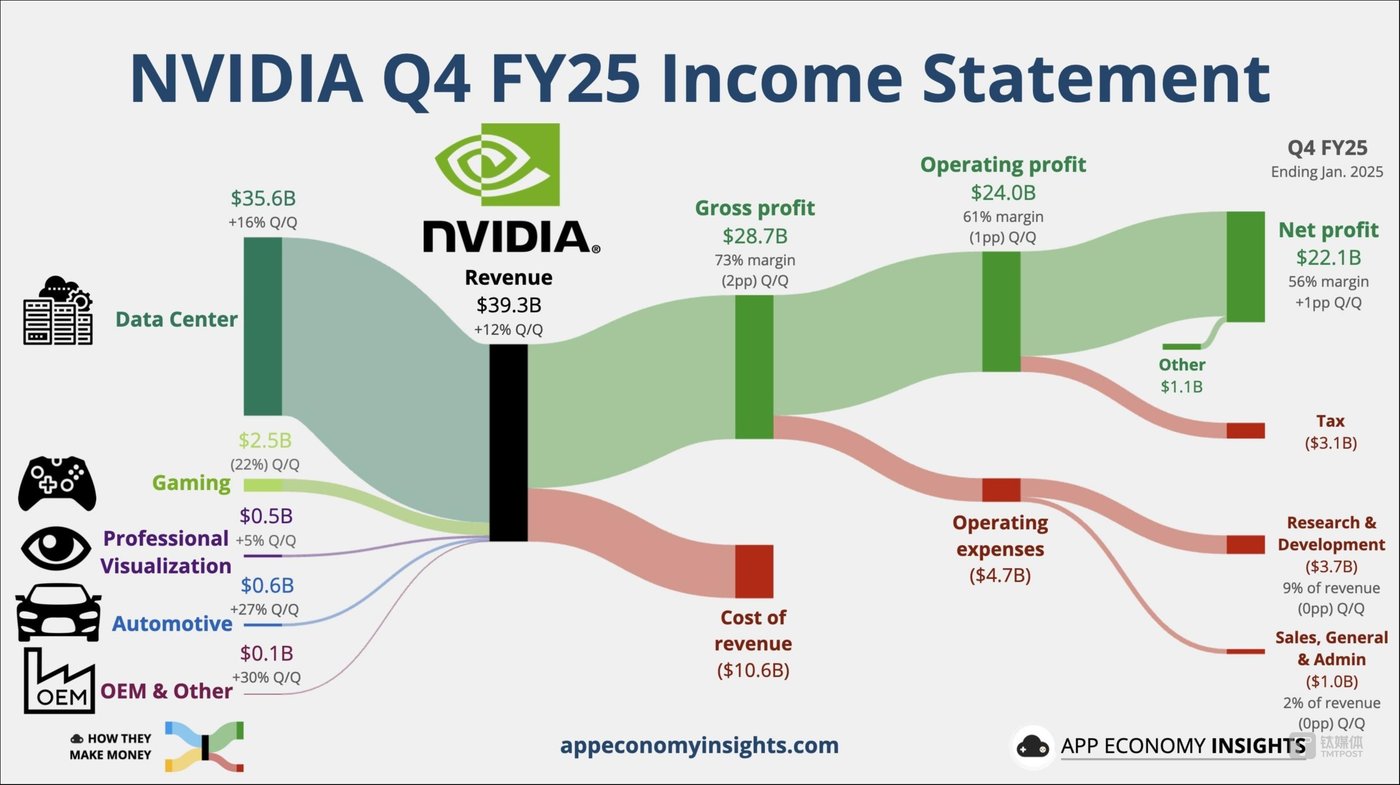

The report shows that in the fourth quarter, Nvidia’s total revenue was US$39.331 billion, up 78% year on year and 12% quarter on quarter, ahead of analysts’ expectations of US$38.25 billion. Adjusted gross margin was 73.5%, down 3.2 percentage points year on year and in line with analyst expectations. Under GAAP, quarterly net profit was US$22.091 billion, up 80% year on year and 14% quarter on quarter; on a non-GAAP basis, net profit rose 72% year on year. Adjusted earnings per share (EPS) were US$0.89, up 71% year on year.

In fiscal year 2025, Nvidia’s revenue exceeded US$100 billion for the first time, reaching US$130.5 billion, up 114% year on year. Non-GAAP net profit reached US$74.265 billion, up 130% year on year; gross margin was 75.5%, up 1.7 percentage points; and adjusted EPS was US$2.99.

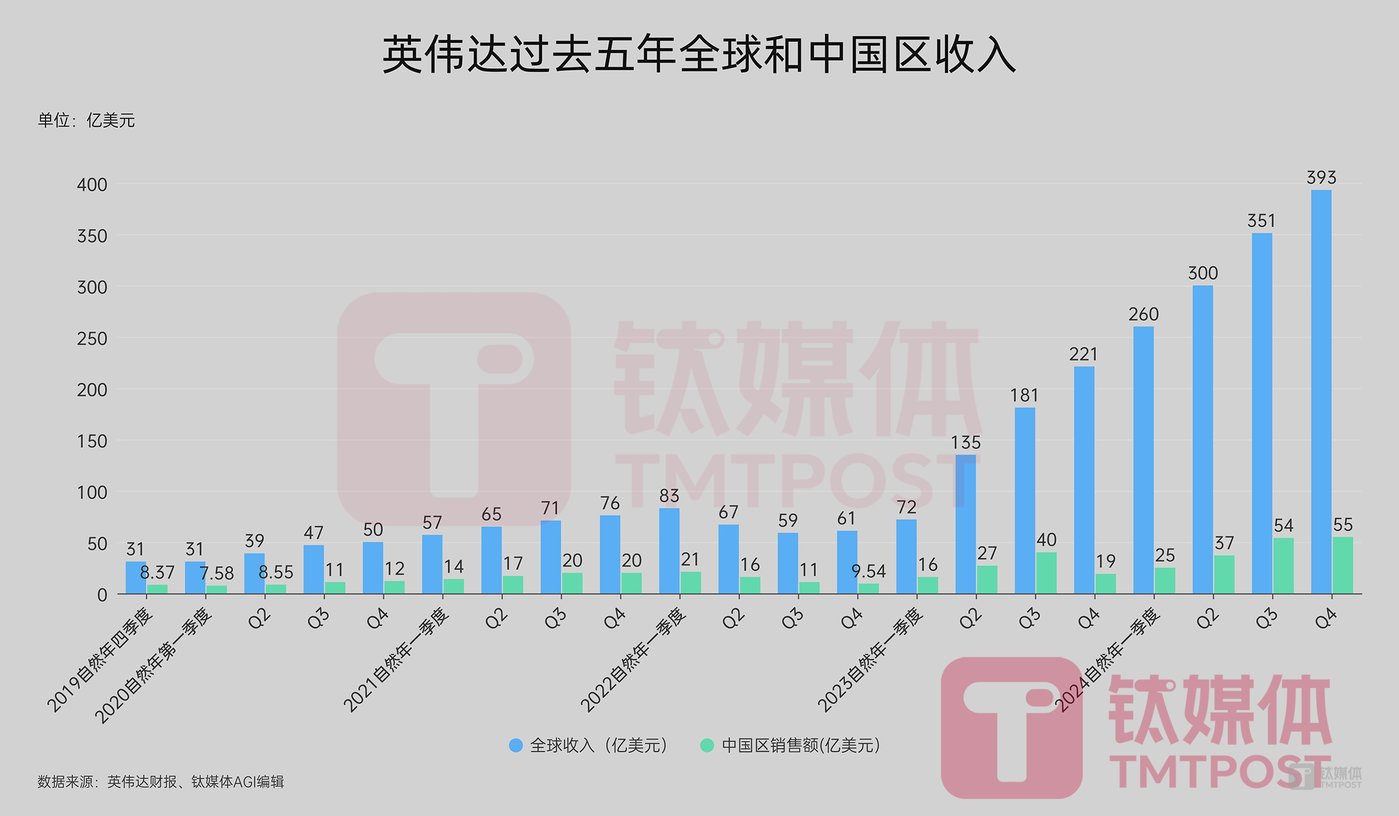

Within that total, Nvidia’s revenue in China last year was US$17.108 billion, a record high and up 66% from US$10.306 billion the year before. In fiscal year 2025, 53% of Nvidia’s revenue came from regions outside the United States.
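The 66% growth figure can be checked directly from the two China revenue numbers quoted above. The following is a minimal sketch; the helper name `yoy_growth` and the variable names are ours, chosen purely for illustration.

```python
def yoy_growth(current: float, previous: float) -> float:
    """Return year-on-year growth as a percentage."""
    if previous == 0:
        raise ValueError("previous-period value must be non-zero")
    return (current - previous) / previous * 100.0


# China revenue in US$ billions, as quoted in the article
china_latest = 17.108
china_prior = 10.306

growth = yoy_growth(china_latest, china_prior)
print(f"China revenue growth: {growth:.1f}%")
```

The same helper applies to any of the year-on-year comparisons in the report, provided both periods are stated in the same units.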

Jensen Huang (Huang Renxun), founder and CEO of Nvidia, said that demand for the Blackwell AI chips is staggering. The company has successfully ramped up large-scale production of Blackwell AI supercomputers, with sales reaching billions of dollars in their first quarter. Blackwell Ultra is expected to be released in the second half of 2025. Once the Blackwell production ramp is complete, margins will improve; the company reiterated that its gross margin should be in the mid-70% range by the end of 2025.

“The potential demand created by reasoning is exciting. It will require more computation than large language models do, possibly (at least) millions of times more than today.” Huang emphasized that the AI industry is advancing at the speed of light, as agentic AI and physical AI lay the foundation for the next wave of the AI revolution and will completely transform the largest industries.

Nvidia shares closed up nearly 3.7% ahead of the earnings release. After the release, the stock first fell nearly 2% in after-hours trading, then turned higher and at one point gained more than 2%; at the time of writing it was down 0.36%. Some commentators said the after-hours dip shows investors still felt that the surprise in Nvidia’s results was not big enough.

DeepSeek still needs computing power, and Nvidia keeps surging

Wall Street has questioned whether, after DeepSeek’s phenomenal rise, the more than US$300 billion that U.S. technology giants are spending on computing power is still worthwhile, shaking expectations for the development of the U.S. AI industry. On January 27, Nvidia’s share price plunged 17% to close at US$118.58, wiping nearly US$600 billion (approximately 4.3 trillion yuan) off its market value.

CNBC said this was the largest loss of market value by a U.S. company in history.

Huang later stressed that investors had misjudged the impact of DeepSeek-R1: in his view, the market had reduced AI development to two separate stages, pre-training and inference, and wrongly concluded that R1’s efficiency would end the demand for computing power. Nvidia has since steadily embraced DeepSeek. Even so, the market remains cautious about Nvidia shares. The stock’s gains are far smaller than in the same period of 2024, and it is down 2.2% so far this year.

Apart from two quarters of decline in 2022, Nvidia’s revenue was not particularly affected by market fluctuations before 2023. After the release of ChatGPT, however, Nvidia’s quarterly revenue exceeded US$10 billion for the first time in the second quarter of calendar 2023 and has since grown to more than US$35 billion, with full-year revenue exceeding US$130 billion.

In 2024, Nvidia’s sales in China exceeded US$17 billion, the highest level since the company was founded.

The report breaks Nvidia’s latest fourth-quarter and full-year revenue into four main segments: data center, gaming and AI PC, professional visualization, and automotive and robotics.

In the data center segment, fourth-quarter revenue reached a record US$35.6 billion, up 16% from the previous quarter and 93% from the same period last year; full-year revenue rose 142% to a record US$115.2 billion. The main reasons cited include Nvidia’s role as a key technology partner in the US$500 billion U.S. Stargate project, and the fact that more than 75% of the systems on the TOP500 list of the world’s most powerful supercomputers use Nvidia technology.

Colette Kress, Nvidia’s CFO, said on the earnings call that the strong year-on-year and quarter-on-quarter growth was driven by market demand for accelerated computing. Blackwell chip revenue reached US$11 billion in the fourth quarter, the fastest product ramp in NVIDIA’s history. The chips were sold mainly to large cloud providers, which accounted for approximately 50% of NVIDIA’s data center revenue in the quarter.

Nvidia’s total sales in China in the fourth quarter were approximately US$5.5 billion, most of which came from the data center business. Huang said on the earnings call that shipments to China are expected to remain at roughly the same share of data center revenue as in the fourth quarter, about half the level seen before export controls.

Huang added that, across geographies, the picture is roughly the same. AI is software, modern software, and it has gone mainstream. AI is used in delivery services and shopping services everywhere. If you have a quart of milk delivered to you, AI is involved; it sits at the core of almost everything consumer services provide.

“I think it’s safe to say that almost all software in the world will be infused with AI. All software and all services will ultimately be based on machine learning. The data flywheel will be part of improving software and services, and the computers of the future will be accelerated and AI-based. Modern computers took decades to build, and we are really only two years into this shift. I am quite certain that we are at the beginning of this new era,” Huang said.

Huang said that Nvidia has quite clear visibility into capital investment in data center construction. In the future, most software will be based on machine learning, and accelerated computing, generative AI and reasoning AI will all need to run in the data center. In both the short and the long term, therefore, Nvidia GPUs and CUDA computing systems remain the future. “We also know that there are a lot of innovative and truly exciting startups still developing new opportunities for AI breakthroughs. So far we have really only tapped consumer AI and search, along with some consumer generative AI, advertising and recommendations, and we are still in the early stages of software.”

In the gaming and AI PC segment, fourth-quarter revenue from the consumer business was US$2.5 billion, down 22% quarter on quarter and 11% year on year; full-year revenue was US$11.4 billion, up 9%. The segment’s highlights include the launch of the GeForce RTX 5090 and 5080 graphics cards, which deliver up to 2x the performance of the previous generation, and the accelerating rollout of GeForce RTX 50 series graphics cards and AI PC laptops.

Kress emphasized that the gaming business is still growing rapidly and was held back by supply constraints in the fourth quarter. The company expects strong sequential growth in the first quarter as supply increases.

In the professional visualization segment, fourth-quarter revenue was US$511 million, up 5% quarter on quarter and 10% year on year; full-year revenue rose 21% to US$1.9 billion. This is attributed mainly to the launch of NVIDIA Project DIGITS, billed as the world’s first personal AI supercomputer, and to the accelerating integration of NVIDIA Omniverse into physical AI applications, including robots, autonomous vehicles and visual AI.

In the automotive and robotics segment, fourth-quarter automotive revenue was US$570 million, up 27% quarter on quarter and 103% year on year; full-year revenue rose 55% to US$1.7 billion.

Huang said that all software and all services will ultimately be based on machine learning. The data flywheel will be part of improving software and services, and future computers will be accelerated and AI-based. “Modern computers took decades to build, and we are really only two years into this shift. I’m pretty sure we’re at the beginning of this new era.”

For the first quarter of calendar 2025, Nvidia expects revenue of US$43 billion, plus or minus 2%, and a non-GAAP gross margin of 71.0%, plus or minus 50 basis points. That would again be the highest single-quarter revenue in its history.
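To make the guidance concrete, the sketch below converts the quoted midpoints and tolerances into explicit low and high bounds. The function `guidance_range` is a hypothetical helper written for this illustration, not anything Nvidia publishes; it assumes the 2% applies relative to the revenue midpoint and that 50 basis points equals 0.5 percentage points of margin.

```python
from typing import Tuple


def guidance_range(midpoint: float, tolerance: float, relative: bool = True) -> Tuple[float, float]:
    """Return (low, high) bounds around a guided midpoint.

    relative=True treats `tolerance` as a fraction of the midpoint (0.02 means 2%).
    relative=False treats `tolerance` as an absolute offset in the midpoint's units.
    """
    delta = midpoint * tolerance if relative else tolerance
    return midpoint - delta, midpoint + delta


# Revenue guidance: US$43 billion, plus or minus 2% (relative tolerance)
rev_low, rev_high = guidance_range(43.0, 0.02)

# Gross margin guidance: 71.0%, plus or minus 50 basis points (0.5 percentage points, absolute)
gm_low, gm_high = guidance_range(71.0, 0.5, relative=False)

print(f"Revenue: US${rev_low:.2f}B to US${rev_high:.2f}B")
print(f"Non-GAAP gross margin: {gm_low:.1f}% to {gm_high:.1f}%")
```

Under these assumptions the revenue range works out to roughly US$42.14 billion to US$43.86 billion, and the margin range to 70.5% to 71.5%.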

Huang emphasized that the next wave is coming. Agentic AI for enterprises, physical AI for robotics, and sovereign AI built by different regions for their own ecosystems are each just getting off the ground. “It is clear that we are at the center of this development.”

Arm wants to challenge Nvidia, while Nvidia wants to embrace China’s DeepSeek

Nvidia and Huang have become synonymous with the AI revolution and the most important indicators of its progress. Huang has spent most of the past two years traveling the world as an evangelist for AI technology, and he believes it is still in the early stages of adoption across the economy.

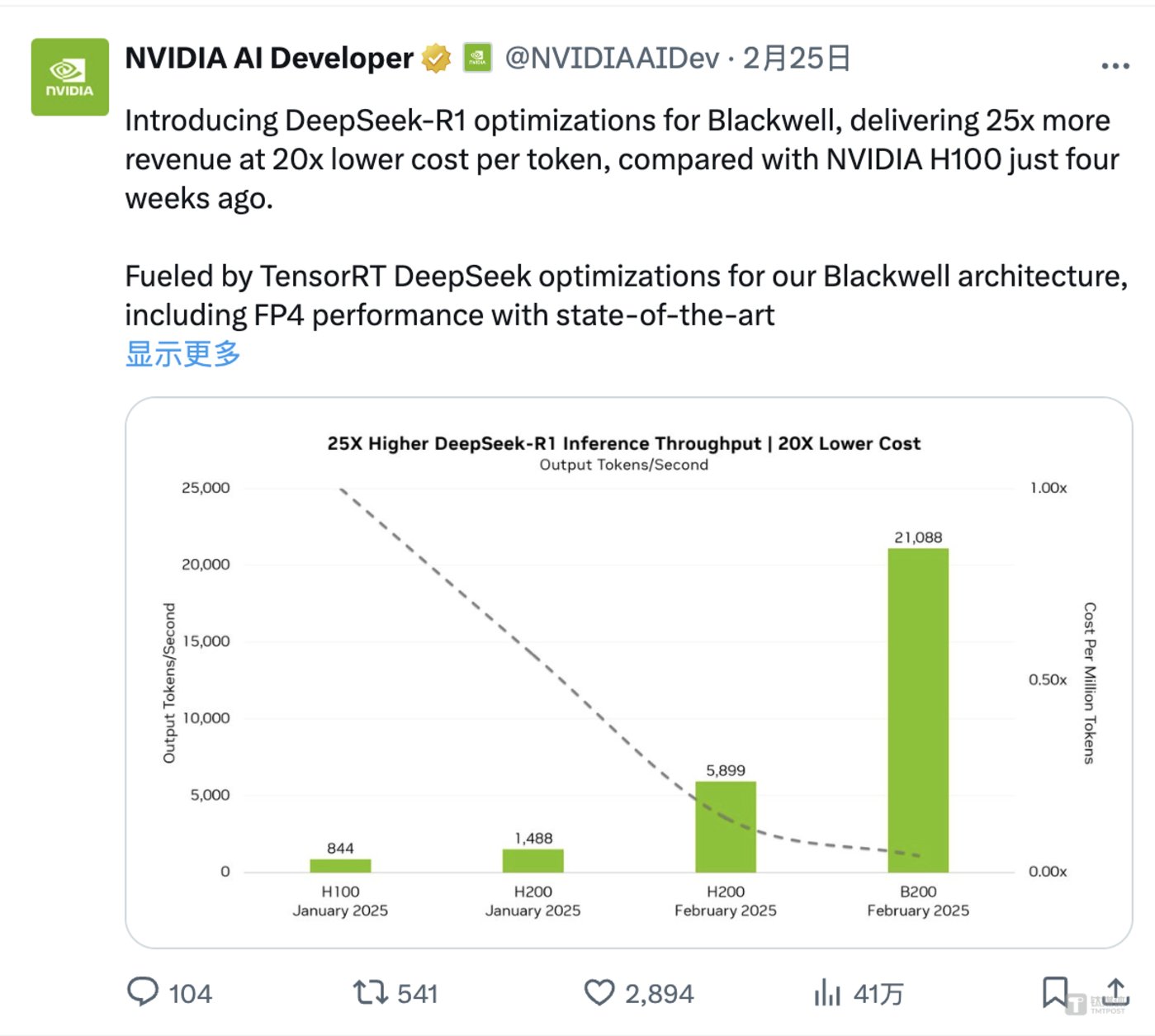

So far, DeepSeek’s rise has not caused Nvidia’s revenue to decline. Over the past night and this morning, three pieces of Nvidia-related news drew attention: Chinese companies have bought Nvidia H20 chips in large quantities; Arm CEO Rene Haas said bluntly that Nvidia’s competitors still have room to grow; and Nvidia has open-sourced the first DeepSeek-R1 model optimized for the Blackwell architecture.

According to a report released by Nvidia, the company has introduced a DeepSeek-R1 optimization for Blackwell. With the new model, the B200 achieved an inference throughput of up to 21,088 tokens per second, compared with 844 tokens per second for the H100 four weeks earlier. That is a 25-fold increase in inference speed, and the cost per token is reduced 20-fold.
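The 25-fold figure follows from the two throughput numbers quoted above. The sketch below reproduces it and, under one loudly stated assumption, shows what the two claims would jointly imply about system cost. The assumption, which is ours and not Nvidia’s, is that cost per token equals running cost per second divided by tokens per second; all variable names are illustrative.

```python
# Throughput figures quoted in the article (tokens per second)
b200_tokens_per_s = 21088
h100_tokens_per_s = 844

# Speedup is the ratio of the two throughputs
speedup = b200_tokens_per_s / h100_tokens_per_s
print(f"Inference speedup: {speedup:.1f}x")

# ASSUMPTION (ours, not from Nvidia): cost_per_token = cost_per_second / tokens_per_second.
# Under that simple model, a 20x lower cost per token combined with the speedup above
# implies the ratio of B200 to H100 running cost per second computed here.
cost_per_token_reduction = 20
implied_cost_ratio = speedup / cost_per_token_reduction
print(f"Implied B200/H100 running-cost ratio: {implied_cost_ratio:.2f}")
```

In other words, if both quoted claims hold under this simplified cost model, the B200 configuration would cost about a quarter more to run per unit of time than the H100 configuration while producing roughly 25 times as many tokens.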

This is exciting news for the market.

Nvidia also said that, by applying TensorRT optimizations for DeepSeek on the Blackwell architecture, the FP4 model reached 99.8% of the FP8 model’s score on the MMLU general-intelligence benchmark, delivering FP4 performance with state-of-the-art production accuracy.

During its Open Source Week, DeepSeek released the MLA decoding kernel FlashMLA, the expert-parallel (EP) communication library DeepEP, and the FP8 GEMM (general matrix multiplication) library DeepGEMM, all designed specifically for Nvidia Hopper GPUs. Nvidia GPUs and DeepSeek are thus tightly integrated, enabling efficient computation, deployment and optimization of AI models on Nvidia GPUs and clusters.

However, Arm, the chip design leader controlled by Masayoshi Son’s SoftBank, takes a different view.

According to The Information, Rene Haas said that Nvidia still has many competitors, such as makers of general-purpose computing, inference and edge AI chips, and that it is only a matter of time before sales of other AI chips also grow significantly.

“You will start to see some specialized chips for running AI, as many of the largest AI companies and cloud providers are developing alternatives to NVIDIA chips,” Haas said. He is not sure which challengers will be most successful, but he believes that chip startups, cloud vendors such as Amazon, new entrants such as OpenAI, and traditional chip companies all have opportunities, because they are all beginning to devote more resources to AI inference.

Founded in 1990 and headquartered in Cambridge, England, Arm is the world’s largest provider of semiconductor intellectual property (IP). It mainly supplies the underlying architecture for the mobile-device CPUs (central processing units) of companies such as Apple, Samsung and Qualcomm, and earns royalties when those designs are commercialized. Arm technology is used in 99% of high-end smartphones. In addition, the CPU in NVIDIA’s newly released Grace Blackwell data center chip also uses the Arm architecture.

Since its listing in 2023, Arm’s share price has more than doubled on the back of the AI boom. Although licensing designs to Nvidia accounts for only a small part of Arm’s business, Haas may not be a neutral observer of Nvidia: Arm is reportedly developing its own AI chips.

Haas believes that over the next few years, as models grow larger and larger, the AI computing market will split. More and more money will go into specialized GPUs and training solutions, but general-purpose CPU chips used for AI inference also have strong prospects.

“I put all my money into GPUs for training, and my data center also has sunk costs in hardware, and I’m not sure I can use it for inference (chips made by other companies),” Haas said. Arm is a participant in the Stargate joint venture alongside SoftBank, OpenAI and Oracle. The Stargate project aims to build AI data centers for the United States and has committed to investing US$500 billion over the next four years.

Speaking of Musk’s Colossus supercomputer and its training cluster of 200,000 Nvidia accelerator cards, Haas said that perhaps the most worrying thing is how quickly Musk brought the product online. “I think this puts tremendous pressure on the entire ecosystem.”

On chip development in China and the United States, Haas told The Verge earlier that the two economies are inseparable, so it is difficult to engineer a separation of supply chains and technology. When weighing policies such as export controls, the United States should recognize that a forced break is not as easy as it looks on paper, and that there are many levers to consider. In his view, China’s technology investment is slowing down, but the country is very pragmatic in building systems and products, and relies heavily on the global open source software ecosystem. “So our automotive business in China is very strong.”

Demand for Nvidia chips from China’s AI model developers is currently rising. According to reports, orders from Chinese companies for Nvidia’s H20 AI chips have surged; buyers of products equipped with H20 chips include companies in fields such as healthcare and education, as well as smaller firms. However, U.S. President Donald Trump is planning to further tighten export controls on semiconductors to China and may consider restricting sales of H20 chips to China.

Huang recently said that despite rising geopolitical tensions, Nvidia will retain its business in China. “China has unique advantages in developing AI, especially the Guangdong-Hong Kong-Macao Greater Bay Area, which holds huge potential and is an extraordinary opportunity for China.”

Earlier, a blog post on Nvidia’s official website argued that these regulations will not help enhance U.S. security. The new rules would control technologies around the world, including technologies already widely used in mainstream gaming computers and consumer hardware. “Biden’s new rules will only weaken the United States’ global competitiveness and undermine the innovations that allow the United States to maintain its leading position.”

While Nvidia’s share price remains high, Wall Street analysts are increasingly divided over the company.

Wedbush analysts said that most AI companies still use Nvidia’s CUDA environment to train and deploy models, and that demand for its Blackwell GPUs remains strong. The launch of the H200 GPU in 2025 will further consolidate Nvidia’s leading position in AI acceleration. While DeepSeek may lengthen the GPU upgrade cycle and put price pressure on Nvidia, it does not pose a direct threat to Nvidia’s business.

Circle Squared Alternative analyst Jeff Sica believes that Nvidia may burst the AI bubble.

Sica pointed out that Nvidia’s investors have made huge profits since 2018. Now, however, Nvidia faces the curse of high expectations: because market expectations are so high, the company needs to beat forecasts for both earnings and revenue by a wide margin. At the same time, Nvidia is under pressure from China’s DeepSeek technology, which is cheaper and more efficient and may prompt companies to cut capital expenditures, which would hurt Nvidia’s business.

Sica said that if Nvidia fails to meet expectations, AI stocks will fall across the board. Nvidia’s share price has always been very fragile, and reports that companies such as Microsoft are cutting data center spending must be taken seriously.

For now, however, Nvidia’s unique value and its position in the ecosystem remain solid. Almost all full-scale models run on Nvidia GPUs, which should help Nvidia remain the shovel seller that profits handsomely from today’s gold rush.

“We are observing another scaling law: the more compute is spent at inference time, or test time, the more the model thinks and the smarter its answers become. For example, OpenAI’s Deep Research can consume more than a hundred times the compute, and future reasoning models can consume even more. DeepSeek has ignited global enthusiasm. It is a good innovation, but more importantly, it is an open source AI model, and it requires more than 100 times more computation for a single query. AI is moving at the speed of light. We are at the beginning of reasoning AI and inference-time scaling, and we are only at the start of the AI era. Multimodal AI, enterprise AI, sovereign AI and physical AI are coming soon. We will grow strongly in 2025 and beyond. AI data centers will become AI factories, and every company will have them, whether rented or self-operated,” Huang said.

According to the latest report released by Deloitte, the global chip market was worth US$576 billion in 2024, of which AI chip sales accounted for 11%, or more than US$57 billion. The market for new-generation AI chips is estimated to exceed US$150 billion in 2025, and the global AI chip market could grow to as much as US$400 billion by 2027.