Image source: Generated by AI

While DeepSeek was holding its Open Source Week, others were not idle either.

On February 25, Anthropic released Claude 3.7 Sonnet in the early morning. During the day, DeepSeek open-sourced its DeepEP codebase. At night, Alibaba unveiled its latest video generation model, Wan 2.1. What a lively day!

Compared with a language model with stronger coding abilities, or an underlying codebase that excites developers, a video generation model is obviously more exciting for ordinary people.

Sticking to its approach of "open-sourcing as much as possible", Tongyi Wanxiang has this time open-sourced all inference code and weights for both its 14B and 1.3B parameter models, which support both text-to-video and image-to-video tasks. Developers around the world can download and try them on GitHub, Hugging Face, and the ModelScope community.

It also adopts the permissive Apache 2.0 license, which means the copyright of generated content belongs entirely to the developer, who can use it freely or commercially.

On the VBench benchmark, Wanxiang 2.1 outperforms open-source and closed-source models from China and abroad, such as Sora, Luma, and Pika.

So how well does it actually work? Without further ado, let's test it!

#01. Hands-on test

We tried the 2.1 Speed Edition and Professional Edition on the Tongyi Wanxiang platform. Both are 14B models. The Speed Edition generates a video in about 4 minutes; the Professional Edition is slower, taking about an hour, but its results are more stable.

Compared with the Speed Edition, the 2.1 Professional Edition understands text-to-video prompts more accurately and produces relatively sharper images. However, videos from both editions show obvious distortions and lack an understanding of some details of the physical world.

Prompt: In the style of the rotating-corridor shots in Inception, a wide-angle lens shoots from above as the hotel corridor keeps rotating at 15 degrees per second. Two agents in suits roll and fight between the wall and the ceiling, their ties floating at 45 degrees under centrifugal force, while debris from the ceiling light scatters chaotically as the direction of gravity shifts.

Professional Edition

Speed Edition

Prompt: A girl in a red dress hops up the steps of Montmartre, and at each step a box of vintage collectibles (wind-up toys, old photos, glass marbles) pops up. Under a warm filter, pigeons fly in a heart-shaped trajectory, and the accordion scales sync precisely with her footsteps. Fisheye tracking shot.

Professional Edition

Speed Edition

Prompt: A wolf-hair brush glides across rice paper. As the ink spreads, the characters for "Destiny" appear one by one, their edges glowing with golden light.

The videos here are relatively stable, with high character consistency and no obvious distortion, but the model's understanding of the prompts is incomplete and misses details. For example, in the sample videos there are no pearls in the pearl milk tea, and the stone figure of Lady Shiji has not turned into a chubby girl.

Prompt: In an oil-painting style, a girl in plain clothes takes out a cup of pearl milk tea, gently parts her red lips, and slowly takes a sip, her movements elegant and calm. The background is deep and dark, and the only light falls on the girl's face, creating a mysterious, peaceful atmosphere. Close-up, side profile.

Prompt: The stone figure's arms swing naturally with each step, and the background light gradually shifts from bright to dim, conveying the passage of time. The camera stays still and focuses on the stone figure as it changes. The small stone figure in the first frame gradually grows as the video progresses, and in the final frame it has become a round, lovely stone girl.

Overall, Wanxiang 2.1's semantic understanding and physical realism still need work, but its overall aesthetics are solid, and open-sourcing may speed up optimization and updates. We look forward to better results in the future.

#02. Low cost, high quality, and high controllability

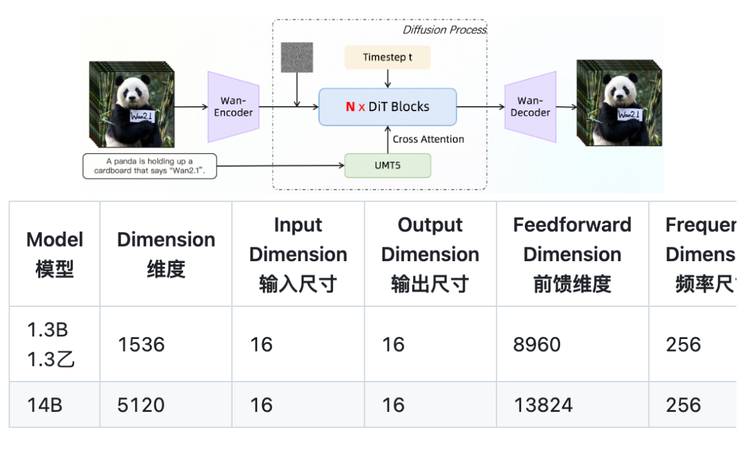

In terms of algorithm design, Wanxiang is still built on the mainstream DiT (Diffusion Transformer) architecture and on Flow Matching with a linear noise trajectory. This sounds complicated, but the underlying idea is shared by almost every current model.

The idea is to keep adding noise to an image (like the "snow" on an old TV screen) until it becomes pure noise; the model then learns to "denoise", putting each bit of noise back in the right place, and produces a high-quality picture over multiple iterations.
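To make the "linear noise trajectory" concrete, here is a minimal toy sketch of flow matching in plain Python. It is an illustration under stated assumptions, not Wan's training code: the function names are hypothetical, we assume the convention that t=0 is clean data and t=1 is pure noise, and an "oracle" velocity stands in for the DiT, which in a real model would learn to predict `noise - x0` from the noisy input.

```python
import random

def interpolate(x0, noise, t):
    """Point on the straight (linear) path from data x0 (t=0) to noise (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, noise)]

def velocity_target(x0, noise):
    """Flow-matching regression target on a linear path: d x_t / d t = noise - x0."""
    return [b - a for a, b in zip(x0, noise)]

def euler_sample(velocity_fn, noise, steps=10):
    """Integrate from pure noise (t=1) back to data (t=0) with fixed Euler steps."""
    x = list(noise)
    dt = 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt            # current time, never exactly 0 inside the loop
        v = velocity_fn(x, t)
        x = [xi - dt * vi for xi, vi in zip(x, v)]
    return x

if __name__ == "__main__":
    random.seed(0)
    x0 = [0.5, -1.0, 2.0]           # a toy "clean image" with three pixels
    noise = [random.gauss(0.0, 1.0) for _ in x0]

    # Hypothetical oracle that knows x0; a trained DiT would predict this velocity.
    def oracle(x_t, t):
        return [(xi - ai) / t for xi, ai in zip(x_t, x0)]

    print(euler_sample(oracle, noise, steps=10))  # approximately x0
```

Because the path is a straight line, the ideal velocity is constant along it, which is why even a few Euler steps land back on the data here; a learned model only approximates this velocity, so real samplers still need multiple steps.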

The catch, however, is that traditional diffusion models need an enormous amount of computation to generate video, with many rounds of iterative refinement. This causes two problems: first, generation takes a long time while the resulting videos are still short; second, it occupies a lot of memory and consumes a lot of computing power.

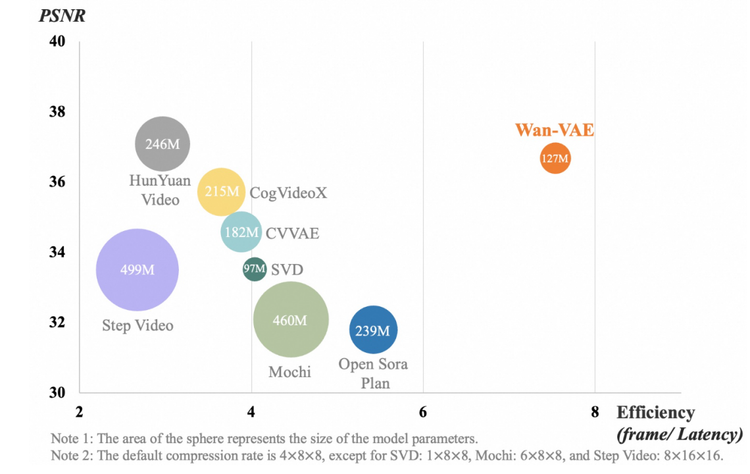

To address this, Wanxiang introduced Wan-VAE, a novel 3D spatio-temporal variational autoencoder (VAE) that combines multiple strategies to improve spatio-temporal compression and reduce memory usage.

This technology is somewhat like the "dual-vector foil" in The Three-Body Problem trilogy, which flattens a person from three dimensions into two. Spatio-temporal compression means compressing a video along its spatial and temporal dimensions, for example by decomposing it into low-dimensional representations: instead of producing a three-dimensional cube directly, the model first generates a flattened, two-dimensional version and then restores it to three dimensions, or it uses hierarchical generation to improve efficiency.
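A quick shape calculation shows how much a video VAE shrinks the data the DiT has to handle. The sketch below assumes a causal VAE that keeps the first frame on its own, compresses time 4x and each spatial side 8x, and outputs 16 latent channels, followed by DiT patches of size (1, 2, 2). These figures are commonly cited for Wan 2.1 but are treated here as assumptions, and the function names are hypothetical.

```python
def latent_shape(frames, height, width, t_stride=4, s_stride=8, z_channels=16):
    """Latent shape (C, T, H, W) for an assumed causal video VAE.

    The first frame is encoded on its own; the remaining frames are grouped
    t_stride at a time, and each spatial side shrinks by s_stride.
    """
    if (frames - 1) % t_stride or height % s_stride or width % s_stride:
        raise ValueError("frames-1, height and width must be divisible by the strides")
    return (z_channels, 1 + (frames - 1) // t_stride, height // s_stride, width // s_stride)

def num_elements(shape):
    """Total number of values in a tensor of the given shape."""
    n = 1
    for d in shape:
        n *= d
    return n

def num_tokens(latent, patch=(1, 2, 2)):
    """Number of DiT tokens after cutting the latent into (t, h, w) patches."""
    _, t, h, w = latent
    return (t // patch[0]) * (h // patch[1]) * (w // patch[2])

if __name__ == "__main__":
    pixels = (3, 81, 480, 832)                          # RGB, 81 frames at 480x832
    latent = latent_shape(81, 480, 832)
    print(latent)                                       # (16, 21, 60, 104)
    print(num_elements(pixels) / num_elements(latent))  # about 46.3x fewer values
    print(num_tokens(latent))                           # 32760
```

Under these assumptions the model works on roughly 46 times fewer values than the raw pixels, and the transformer sees about 33 thousand tokens instead of tens of millions of pixel values.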

To give a simple example, Wan-VAE is like compressing the whole of Romance of the Three Kingdoms into an outline while keeping the means to restore the full text from it, which greatly reduces memory consumption. Using the same approach, it can also remember much longer novels.

Solving the memory problem also solves the problem of long video generation. Traditional video models can only handle fixed lengths, and beyond a certain length they stall or crash. If, however, only the outline is stored and the connections between parts are remembered, then when generating each frame the model can temporarily cache key information from the previous frames instead of recomputing everything from the first frame. In theory, this lets it encode and decode 1080P videos of unlimited length without losing historical information.
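The "cache the previous frames" idea can be illustrated with a causal temporal convolution processed chunk by chunk. In the toy sketch below (our illustration, not Wan-VAE's code; names are hypothetical and each "frame" is a single number), only the last `len(kernel) - 1` frames are carried between chunks, yet the result matches processing the whole video at once, so memory per step stays constant regardless of video length.

```python
def causal_conv(frames, kernel, cache=None):
    """Causal 1-D temporal convolution: output[t] depends only on frames <= t.

    `cache` holds the last len(kernel) - 1 input frames from the previous chunk;
    zeros are used at the very start of the video. Returns (outputs, new_cache).
    """
    k = len(kernel)
    history = list(cache) if cache is not None else [0.0] * (k - 1)
    padded = history + list(frames)
    outputs = []
    for t in range(len(frames)):
        window = padded[t : t + k]        # k consecutive frames ending at frame t
        outputs.append(sum(w * x for w, x in zip(kernel, window)))
    # Keep only the most recent k - 1 frames for the next chunk.
    return outputs, padded[len(padded) - (k - 1):]

def encode_in_chunks(frames, kernel, chunk_size):
    """Process a long video chunk by chunk, carrying only a small cache forward."""
    cache, outputs = None, []
    for start in range(0, len(frames), chunk_size):
        out, cache = causal_conv(frames[start : start + chunk_size], kernel, cache)
        outputs.extend(out)
    return outputs

if __name__ == "__main__":
    video = [float(i % 7) for i in range(100)]   # toy "frames", one value each
    kernel = [0.25, 0.5, 0.25]
    full, _ = causal_conv(video, kernel)
    chunked = encode_in_chunks(video, kernel, chunk_size=4)
    print(full == chunked)                       # True: chunking with a cache changes nothing
```

The design choice that makes this work is causality: because no output looks at future frames, a chunk never needs anything beyond the small cached tail of the previous one.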

This is why Wanxiang can run on consumer-grade graphics cards. Traditional high-definition video (such as 1080P) contains so much data that ordinary graphics cards run out of memory. Wanxiang, however, first reduces the resolution before processing, for example scaling 1080P down to 720P to cut the amount of data, and after generation uses a super-resolution model to bring the image quality back up to 1080P.
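The saving from working at 720P instead of 1080P is easy to quantify. The short arithmetic below compares pixel counts per frame; the squared figure is only a rough rule of thumb for full self-attention, where cost grows with the square of the token count, and is not a measured number for Wan.

```python
def pixels(width, height):
    """Number of pixels in one frame."""
    return width * height

full_hd = pixels(1920, 1080)   # 1080P
hd = pixels(1280, 720)         # 720P
ratio = full_hd / hd
print(full_hd, hd, ratio)      # 2073600 921600 2.25
# Rule of thumb: if attention cost scales with tokens squared, the gap widens further.
print(ratio ** 2)              # 5.0625
```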

According to Wanxiang's calculations, moving spatial downsampling earlier in the pipeline further reduces memory consumption during inference by 29% without any loss of performance, so generation is fast and image quality does not suffer.

These technical innovations address the engineering problems that previously kept video generation models from being deployed at scale. At the same time, Wanxiang has also further improved generation quality.

Wanxiang 2.1 converts the motion trajectory entered by the user into a mathematical model, which serves as an additional condition guiding the model during video generation. But that alone is not enough: the motion of objects must also obey real-world physics, so on top of this mathematical model, results calculated by a physics engine are introduced to make the motion more realistic.
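The article does not say how the trajectory is encoded. One generic way to turn a user-drawn path into a per-frame condition is sketched below: resample the clicked points to one position per frame along the path, then render each position as a Gaussian heatmap that a model could take as an extra input. Everything here (function names, the heatmap encoding, `sigma`) is a hypothetical illustration rather than Wan 2.1's actual method, and the physics-engine refinement is not modeled.

```python
import math

def resample_trajectory(points, num_frames):
    """Piecewise-linear resampling of user-drawn (x, y) points to one point per frame,
    spaced evenly by distance travelled along the path."""
    if len(points) == 1 or num_frames == 1:
        return [points[0]] * num_frames
    # Cumulative arc length at each user point.
    lengths = [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        lengths.append(lengths[-1] + math.hypot(x1 - x0, y1 - y0))
    total = lengths[-1]
    out, seg = [], 0
    for f in range(num_frames):
        target = total * f / (num_frames - 1)
        # Advance to the segment that contains the target distance.
        while seg < len(points) - 2 and lengths[seg + 1] < target:
            seg += 1
        span = lengths[seg + 1] - lengths[seg]
        a = 0.0 if span == 0 else (target - lengths[seg]) / span
        (x0, y0), (x1, y1) = points[seg], points[seg + 1]
        out.append((x0 + a * (x1 - x0), y0 + a * (y1 - y0)))
    return out

def gaussian_heatmap(center, width, height, sigma=2.0):
    """One condition map per frame: a Gaussian blob centred on the trajectory point."""
    cx, cy = center
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(width)] for y in range(height)]

if __name__ == "__main__":
    clicks = [(0, 0), (10, 0), (10, 10)]   # user-drawn path in pixel coordinates
    per_frame = resample_trajectory(clicks, num_frames=5)
    print(per_frame)  # [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0), (10.0, 5.0), (10.0, 10.0)]
    condition = [gaussian_heatmap(p, width=16, height=16) for p in per_frame]
    print(len(condition), len(condition[0]), len(condition[0][0]))  # 5 16 16
```

Resampling by arc length rather than by point index keeps the object moving at a steady speed even when the user clicks unevenly spaced points.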

Overall, Wanxiang's core strength lies in using engineering capability to solve problems in real production scenarios, while its modular design leaves room for future iterations. For ordinary users, it genuinely lowers the barrier to video creation.

Its fully open-source strategy has also dealt a heavy blow to the paid business model for video models. With the arrival of Wanxiang 2.1, the video generation race in 2025 promises another great show!