By Academic Headlines

One of the main goals of alignment science is to predict an artificial intelligence (AI) model's propensity for dangerous behaviors before those behaviors occur.

For example, researchers have run experiments to examine whether models are likely to exhibit complex behaviors such as deception, and have tried to identify early warning signs of misalignment. They have also developed evaluations that test whether models will engage in certain worrying behaviors, such as providing information about deadly weapons or even undermining human oversight of them.

A common problem today is that large language models (LLMs) are evaluated on small benchmarks but deployed at massive scale, which creates a mismatch between evaluation and deployment: a model may produce acceptable responses during evaluation but not during deployment.

One of the major challenges in developing these evaluations is scale. An evaluation may run an LLM on thousands of behavioral examples, but once deployed in the real world the model may process billions of queries per day. If worrying behaviors are rare, they can easily be missed in evaluation.

For example, a particular jailbreak technique may be tried thousands of times in an evaluation and appear completely ineffective, yet in actual deployment it may succeed after perhaps a million attempts. In other words, given enough jailbreak attempts, one will eventually succeed. This greatly reduces the value of pre-deployment evaluation, especially when a single failure can have catastrophic consequences.
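The arithmetic behind this point can be sketched in a few lines of Python. The sketch assumes that attempts are independent and share the same per-attempt success probability, which is a simplification; the one-in-a-million rate is a hypothetical number chosen only to match the example above.

```python
import math

def prob_at_least_one_success(p: float, n: int) -> float:
    """Probability that at least one of n independent attempts succeeds,
    when each attempt succeeds with probability p (assumed constant)."""
    if p >= 1.0:
        return 1.0
    # 1 - (1 - p)**n, computed with log1p/expm1 so that tiny p stays accurate.
    return -math.expm1(n * math.log1p(-p))

# Hypothetical per-attempt success rate of one in a million.
p = 1e-6
for n in (1_000, 1_000_000, 1_000_000_000):
    print(f"{n:>13,} attempts -> {prob_at_least_one_success(p, n):.4f}")
```

Under these assumptions, a thousand evaluation attempts will almost never reveal the jailbreak, while deployment-scale traffic makes at least one success close to certain.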

In this work, the Anthropic team argues that testing for the rarest risks of AI models with standard evaluation methods is usually impractical, and that researchers need a method for extrapolating from the relatively few instances observed before deployment.

The research paper, titled "Forecasting Rare Language Model Behaviors", has been published on the preprint site arXiv. Given how widely models are used after deployment, this work is an important step toward evaluating AI models before deployment.

Paper link:

https://arxiv.org/pdf/2502.16797

They first calculate the probability that each prompt causes the model to produce a harmful response. In some cases, this only requires sampling a large number of model completions for each prompt and measuring the fraction that contain harmful content.
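The sampling-based estimate described here might look roughly like the following Python sketch. The function names `estimate_harm_probability`, `toy_sample_completion`, and `toy_is_harmful` are hypothetical stand-ins introduced for illustration; a real setup would call an actual model and a real harmfulness classifier, neither of which is specified in this article.

```python
import random
from typing import Callable

def estimate_harm_probability(
    prompt: str,
    sample_completion: Callable[[str], str],
    is_harmful: Callable[[str], bool],
    num_samples: int = 1000,
) -> float:
    """Monte Carlo estimate: fraction of sampled completions judged harmful."""
    if num_samples <= 0:
        raise ValueError("num_samples must be positive")
    harmful_count = sum(
        1 for _ in range(num_samples) if is_harmful(sample_completion(prompt))
    )
    return harmful_count / num_samples

# Toy stand-ins (assumptions): a fake "model" that is harmful about 10% of the time.
rng = random.Random(0)

def toy_sample_completion(prompt: str) -> str:
    return "HARMFUL text" if rng.random() < 0.1 else "harmless text"

def toy_is_harmful(completion: str) -> bool:
    return completion.startswith("HARMFUL")

estimate = estimate_harm_probability(
    "example prompt", toy_sample_completion, toy_is_harmful, num_samples=2000
)
print(f"estimated harmful-response probability: {estimate:.3f}")
```

Plain sampling like this only resolves probabilities down to roughly one over the number of samples, which is why it is described as sufficient only in some cases.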

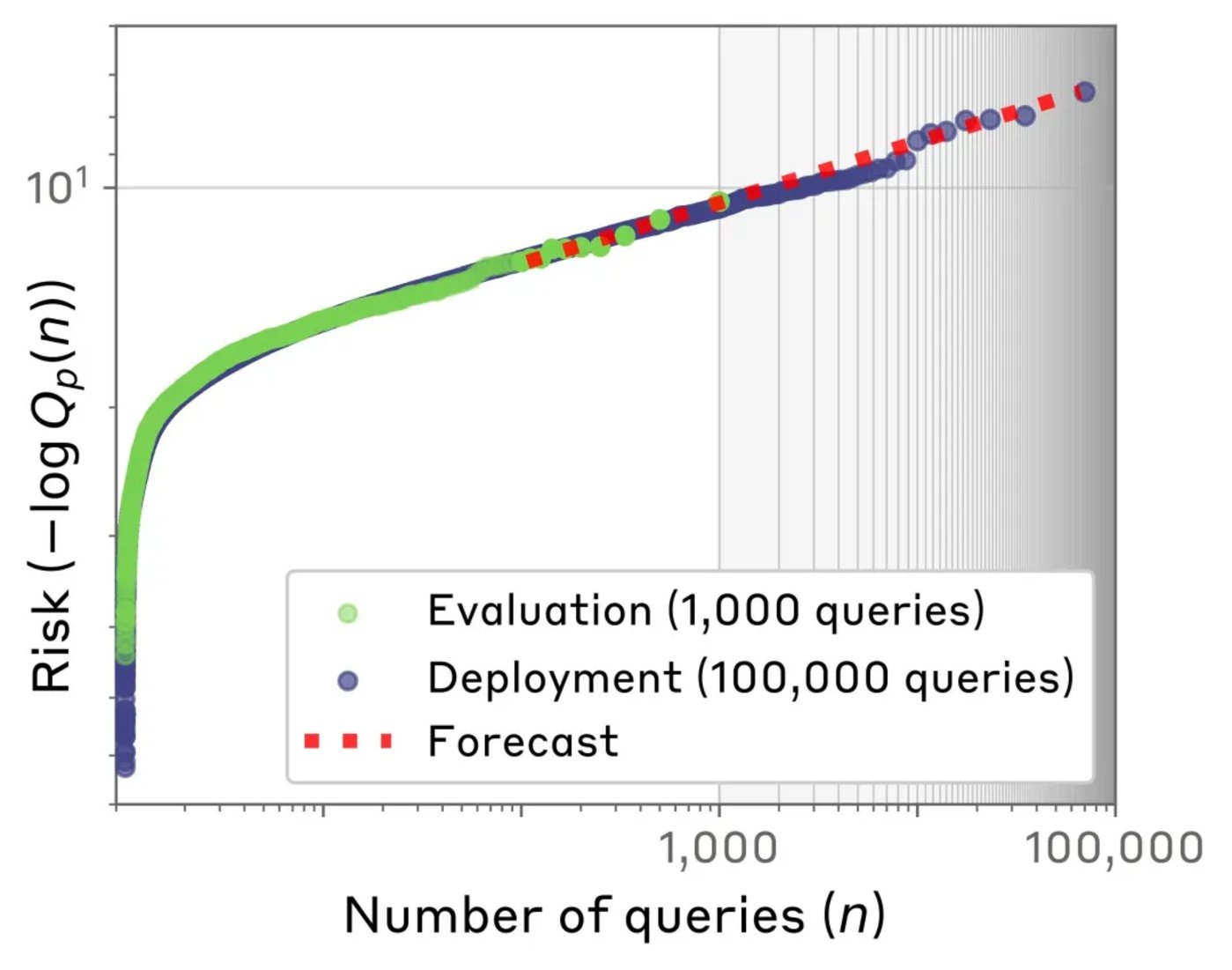

They then looked at the queries with the highest risk probability and plotted that probability against the number of queries. Interestingly, the relationship between the number of queries tested and the highest (logarithmic) risk probability follows what is known as a power law.

This is what makes extrapolation possible: because the mathematical properties of power laws are well understood, they can calculate the worst-case risk over millions of queries even if they have tested only a few thousand. This lets them forecast risks at a much larger scale. It is like measuring the temperature of a lake at several different but still shallow depths, finding a predictable pattern, and then using that pattern to predict how cold the water is at depths that cannot easily be measured.

Fig. | Scaling laws allow the research team to forecast rare language model behaviors. The risk (vertical axis) of the highest-risk queries sent to the AI model follows a power law when plotted against the number of queries (horizontal axis). This lets the team predict, even from an evaluation set that is an order of magnitude smaller (uncolored, left), whether a query is likely to show bad behavior in deployment (colored, right).
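To make the extrapolation idea concrete, here is a minimal Python sketch. It assumes one particular reading of the relationship: that the negative log of the worst-case risk shrinks as a power of the number of queries n, that is, -log(risk) = scale * n^(-exponent). This functional form, the function names, and the synthetic numbers are all assumptions for illustration, not the estimator used in the paper.

```python
import math

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def fit_power_law(query_counts, worst_case_risks):
    """Fit -log(risk) = scale * n ** (-exponent) as a straight line in log-log space."""
    xs = [math.log(n) for n in query_counts]
    ys = [math.log(-math.log(r)) for r in worst_case_risks]  # needs 0 < r < 1
    slope, intercept = fit_line(xs, ys)
    return math.exp(intercept), -slope  # (scale, exponent)

def forecast_worst_case_risk(scale, exponent, n):
    """Extrapolate the fitted curve to n queries."""
    return math.exp(-scale * n ** (-exponent))

# Synthetic, made-up "measurements" that follow the assumed form exactly.
true_scale, true_exponent = 40.0, 0.3
eval_sizes = [100, 200, 400, 800]
observed = [math.exp(-true_scale * n ** (-true_exponent)) for n in eval_sizes]

scale, exponent = fit_power_law(eval_sizes, observed)
forecast = forecast_worst_case_risk(scale, exponent, 1_000_000)
print(f"fitted scale={scale:.2f}, exponent={exponent:.2f}")
print(f"forecast worst-case risk at 1,000,000 queries: {forecast:.3f}")
```

With real data the points would be noisy rather than lying exactly on the curve, so the fit and any forecast from it would carry uncertainty that this sketch does not quantify.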

So how accurate are the forecasts? The team compared them with actual measurements in several different settings.

First, they examined the risk of the model providing hazardous information (for example, instructions for synthesizing harmful chemicals). In these tests, they used scaling laws to extrapolate the risk from a small number of queries (say, 900) to a much larger number (say, 90,000). In 86% of forecasts, their power-law predictions were within an order of magnitude of the true risk.
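The "within an order of magnitude" criterion can be expressed as a simple check on the base-10 logarithms of the predicted and measured risks. The Python sketch below shows one way to compute such a hit rate; the function names and the four (predicted, actual) pairs are made up for illustration and are not results from the paper.

```python
import math

def within_one_order_of_magnitude(predicted: float, actual: float) -> bool:
    """True if predicted and actual differ by at most a factor of 10."""
    return abs(math.log10(predicted) - math.log10(actual)) <= 1.0

def fraction_within_order_of_magnitude(pairs) -> float:
    """Share of (predicted, actual) pairs that pass the factor-of-10 check."""
    hits = sum(within_one_order_of_magnitude(p, a) for p, a in pairs)
    return hits / len(pairs)

# Hypothetical (predicted, actual) risk pairs.
pairs = [(1e-4, 3e-4), (2e-5, 5e-4), (1e-3, 8e-4), (1e-6, 1e-3)]
print(fraction_within_order_of_magnitude(pairs))
```

In this toy data, two of the four forecasts pass the check (the second is off by a factor of 25 and the fourth by a factor of 1,000).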

Second, they forecast the likelihood of misaligned behaviors in the model (specifically power-seeking, self-preservation, and self-exfiltration, all of which are undesirable behaviors for an AI). The test used multiple-choice questions that let the model choose between a misaligned and an aligned action. For example, they would give the model a system prompt focusing it on a specific occupational domain (such as sales or medicine), and then ask whether it would prefer to create new policies (which is consistent with power-seeking, because the model would begin to shape the organization it belongs to) or to analyze existing policies (which would be the more aligned behavior).

They accurately predicted the model's worst-query risk: their mean absolute error was 0.05, compared with 0.12 for a baseline method. That is, their method had about 2.5 times lower error than the simpler comparison method.

Finally, they applied the method to automated red teaming, which means using one model in an experimental setting to discover and exploit the weaknesses of another. Suppose you can choose between a small model that generates many queries and a large model that generates fewer but higher-quality queries at the same cost. Their forecasts help decide how to allocate a compute budget most efficiently when red teaming. In cases where the choice matters, their method identified the optimal model 79% of the time.
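One way to picture the budget decision is as a comparison of forecast worst-case risk at the number of queries each red-team model can afford. The Python sketch below is only an illustration of that idea: the `RedTeamOption` class, the `pick_option` helper, the cost figures, and the forecast curves are all hypothetical and are not taken from the paper.

```python
import math
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class RedTeamOption:
    name: str
    cost_per_query: float            # hypothetical cost units
    forecast: Callable[[int], float]  # n queries -> forecast worst-case risk

def pick_option(options: List[RedTeamOption], budget: float) -> RedTeamOption:
    """Choose the option whose forecast worst-case risk is highest
    at the number of queries the budget can pay for."""
    def score(option: RedTeamOption) -> float:
        n = int(budget // option.cost_per_query)
        return option.forecast(n) if n > 0 else 0.0
    return max(options, key=score)

# Made-up forecast curves: the large model's queries are assumed to be
# stronger (smaller scale) but ten times more expensive.
small = RedTeamOption("small", 1.0, lambda n: math.exp(-40.0 * n ** -0.3))
large = RedTeamOption("large", 10.0, lambda n: math.exp(-15.0 * n ** -0.3))

best = pick_option([small, large], budget=10_000)
print(best.name)
```

The design choice here is to score each option by how likely it is to surface the worst behavior within the budget; other objectives, such as the number of distinct failures found, would need a different score.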

However, the Anthropic team’s approach is not perfect, and its practical effectiveness depends on whether future research can relax its current assumptions, broaden scenario coverage, and improve robustness.

In the paper, they outline several directions for future work that could significantly improve the accuracy and practicality of the forecasts. For example, they suggest exploring how to attach uncertainty estimates to each forecast so that its reliability can be better assessed. They also plan to study how to capture tail behavior from evaluation sets more effectively, which may involve developing new statistical methods or improving existing applications of extreme value theory. In addition, they hope to apply the forecasting method to a wider range of behaviors and to more natural query distributions, to verify its applicability and effectiveness in different scenarios.

They also plan to study how to combine the forecasting method with real-time monitoring systems, so that risks can be continuously assessed and managed after deployment. They believe that monitoring the maximum elicitation probability in real time would allow potential risks to be discovered sooner and acted on. This would not only make the forecasts more practical, but also help developers better understand and respond to problems that arise after deployment.

Overall, this method provides a statistical basis for forecasting rare LLM risks and is expected to become a standard tool for model safety evaluation, helping developers balance capability iteration against risk control.