Article source: Fenghuang Technology

Image source: Generated by AI

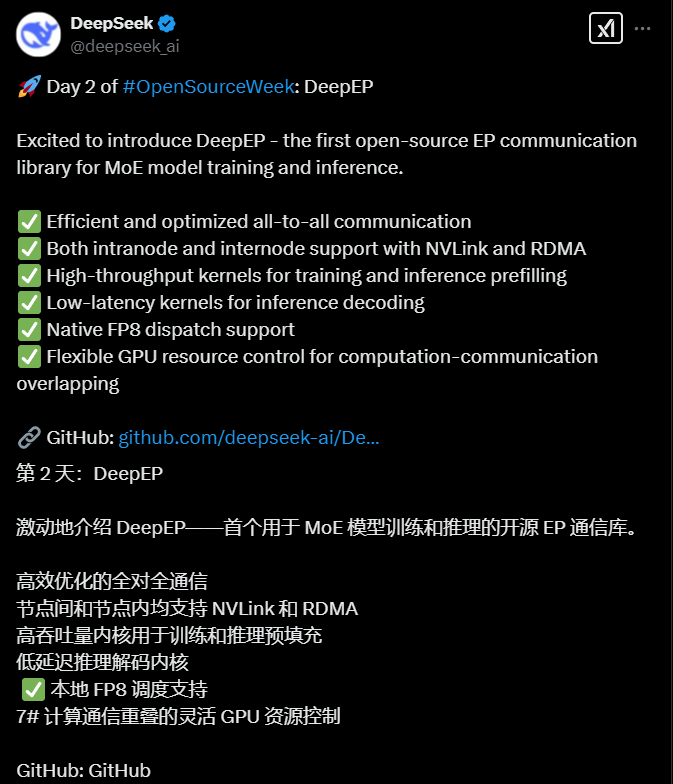

On February 25, DeepSeek, the well-known open-source AI company, served up another treat: DeepEP, billed as the world’s first full-stack communication library for MoE (mixture-of-experts) models. Because it speaks directly to the industry’s anxiety over AI computing power, its GitHub repository quickly shot up to 1,500 stars (the number of users who have bookmarked it), a sign of how much weight it carries in the community.

Many people are curious about what DeepEP actually does. Imagine a courier depot on Singles’ Day (November 11): 2,048 couriers (GPUs) frantically shuttle packages (AI data) among 200 warehouses (servers). A traditional transport system is like having the couriers deliver on tricycles, while DeepEP equips every one of them with a “maglev plus quantum teleportation” kit so that information moves stably and efficiently.

Feature 1: Rewriting the rules of transport

On NVIDIA’s earnings call on August 29, 2024, Jensen Huang (Huang Renxun) specifically stressed the importance of NVLink for low latency, high throughput, and large language models, and called it one of the key technologies driving the development of large models. NVLink is NVIDIA’s technology for direct GPU-to-GPU interconnection, with bidirectional bandwidth of up to 1.8 TB/s.

This much-hyped NVLink technology has now been taken to a new level by a Chinese team. The secret of DeepEP lies in its NVLink optimization: couriers in the same warehouse move goods on a maglev track at up to 158 containers per second (GB/s), which is like cutting the trip from Beijing to Shanghai down to the time it takes to sip some water.

The second piece of black tech is its set of low-latency kernels built on RDMA. Imagine goods being “quantum teleported” directly between warehouses in different cities. Each aircraft (network card) carries 47 containers per second (GB/s), and an aircraft can keep flying while it is being loaded: computation and communication overlap, so there is no more idle time spent waiting.
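To put the two bandwidth figures and the overlap idea in concrete terms, here is a minimal back-of-the-envelope sketch. It is not DeepEP code: the 1 GB payload and the millisecond timings are made-up examples, and the model ignores latency and protocol overhead.

```python
# Bandwidth figures quoted in the article, in GB/s.
NVLINK_GBPS = 158.0  # same-warehouse (intranode) transfers
RDMA_GBPS = 47.0     # cross-city (internode) transfers, per network card


def transfer_time_ms(payload_gb: float, bandwidth_gbps: float) -> float:
    """Idealised time in milliseconds to move payload_gb at bandwidth_gbps."""
    return payload_gb / bandwidth_gbps * 1000.0


def step_time_ms(compute_ms: float, comm_ms: float, overlapped: bool) -> float:
    """Without overlap the phases run back to back; with ideal overlap the longer one dominates."""
    return max(compute_ms, comm_ms) if overlapped else compute_ms + comm_ms


if __name__ == "__main__":
    payload_gb = 1.0  # hypothetical payload size
    print("NVLink ms:", round(transfer_time_ms(payload_gb, NVLINK_GBPS), 2))
    print("RDMA ms:", round(transfer_time_ms(payload_gb, RDMA_GBPS), 2))
    # Hypothetical step: 10 ms of computation and 6 ms of communication.
    print("serial ms:", step_time_ms(10.0, 6.0, overlapped=False))
    print("overlapped ms:", step_time_ms(10.0, 6.0, overlapped=True))
```

In this idealised model, overlap hides the communication time entirely whenever computation takes longer than the transfer, which is the “flying while loading” picture above.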

Feature 2: Smart-sorting black tech, the AI version of the “strongest brain”

When goods need to be distributed to different experts (the sub-networks in an MoE model), a traditional sorter has to unpack and inspect them one by one, while DeepEP’s “dispatch and combine” system seems to know in advance where everything goes. In training and inference prefill mode, 4,096 data packets travel along the smart conveyor belt at once, with same-city and cross-city items identified automatically. In inference decoding mode, 128 urgent packages take the VIP channel and arrive in 163 microseconds, far faster than a human blink. Dynamic lane switching also lets the system change transmission mode within seconds when traffic peaks, so it adapts to the needs of different scenarios.
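The dispatch and combine steps can be illustrated with a toy, single-process sketch in plain Python. This is not DeepEP's API: the function names, the two toy experts, and the routing weights are all hypothetical, and the real library performs these steps as GPU communication kernels across many devices.

```python
from collections import defaultdict


def dispatch(tokens, routes):
    """Group tokens by destination expert.

    tokens: list of vectors (lists of floats)
    routes: for each token, a list of (expert_id, weight) pairs
    Returns {expert_id: [(token_index, weight, vector), ...]}.
    """
    buckets = defaultdict(list)
    for idx, (vec, choices) in enumerate(zip(tokens, routes)):
        for expert_id, weight in choices:
            buckets[expert_id].append((idx, weight, vec))
    return dict(buckets)


def run_experts(buckets, experts):
    """Apply each expert to the tokens that were dispatched to it."""
    return {
        eid: [(idx, w, experts[eid](vec)) for idx, w, vec in items]
        for eid, items in buckets.items()
    }


def combine(expert_outputs, num_tokens, dim):
    """Sum the weighted expert outputs back into the original token order."""
    out = [[0.0] * dim for _ in range(num_tokens)]
    for results in expert_outputs.values():
        for idx, weight, vec in results:
            for d in range(dim):
                out[idx][d] += weight * vec[d]
    return out


def moe_layer(tokens, routes, experts):
    """Dispatch, run experts, then combine."""
    dim = len(tokens[0])
    return combine(run_experts(dispatch(tokens, routes), experts), len(tokens), dim)


# Two hypothetical experts and hand-written routing decisions.
EXPERTS = {0: lambda v: [2.0 * x for x in v], 1: lambda v: [x + 1.0 for x in v]}
TOKENS = [[1.0, 2.0], [3.0, 4.0]]
ROUTES = [[(0, 0.5), (1, 0.5)], [(1, 1.0)]]

if __name__ == "__main__":
    print(moe_layer(TOKENS, ROUTES, EXPERTS))
```

In a multi-GPU setting each bucket would live on a different device, so `dispatch` and `combine` become all-to-all transfers; that traffic is what the article's courier analogy describes.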

Feature 3: FP8 “bone-shrinking” compression

Ordinary cargo travels in standard boxes (FP32/FP16 format), while DeepEP can compress cargo into microcapsules (FP8 format), so a truck can hold three times more. Better still, the capsules are automatically unpacked when they reach their destination, saving both postage and time.
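The space saving comes down to bytes per element: FP32 uses 4 bytes, FP16 uses 2, and FP8 uses 1. The sketch below works through the arithmetic for a batch of 4,096 tokens, the batch size mentioned earlier. The hidden size of 7,168 is an assumption chosen for illustration, and the calculation ignores any scaling metadata a real FP8 scheme would send alongside the data.

```python
# Storage width of each number format, in bytes per element.
BYTES_PER_ELEMENT = {"FP32": 4, "FP16": 2, "FP8": 1}


def payload_bytes(num_tokens: int, hidden_size: int, fmt: str) -> int:
    """Raw size in bytes of a num_tokens x hidden_size activation tensor."""
    return num_tokens * hidden_size * BYTES_PER_ELEMENT[fmt]


def shrink_factor(src: str, dst: str = "FP8") -> float:
    """How many times smaller the payload is after converting src to dst."""
    return BYTES_PER_ELEMENT[src] / BYTES_PER_ELEMENT[dst]


if __name__ == "__main__":
    num_tokens = 4096   # batch size quoted in the article
    hidden_size = 7168  # assumed hidden dimension, for illustration only
    for fmt in ("FP32", "FP16", "FP8"):
        mib = payload_bytes(num_tokens, hidden_size, fmt) / 2**20
        print(fmt, "MiB:", mib)
    print("FP32 to FP8 shrink:", shrink_factor("FP32"))
    print("FP16 to FP8 shrink:", shrink_factor("FP16"))
```

By this count a truck holds twice as much as with FP16 and four times as much as with FP32, which brackets the article's rough figure.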

The system has been measured in DeepSeek’s own warehouse (an H800 GPU cluster): same-city freight speed tripled and cross-city delay fell to a level humans can barely perceive. Most disruptive of all, transmission becomes truly seamless. It is like a courier slipping packages into a parcel locker while still riding past on a bicycle, with the whole process running smoothly from start to finish.

Now DeepSeek has open-sourced this trump card, which is like SF Express publishing the blueprints of its unmanned sorting system. Heavy workloads that once needed 2,000 GPUs can now be handled with a few hundred.

Earlier, DeepSeek released the first result of its “Open Source Week”: the code for FlashMLA (short for fast multi-head latent attention), which is also one of the key technologies for cutting the cost of training large models. To ease cost anxiety up and down the industrial chain, DeepSeek is sharing everything it has.

Previously, You Yang, founder of Luchen Technology, posted on social media that “in the short term, China’s MaaS model may be the worst business model.” His rough estimate: at a daily output of 100 billion tokens, a DeepSeek-based service has a monthly machine cost of 450 million yuan and loses 400 million yuan a month. With AMD chips, monthly revenue is 45 million yuan against a monthly machine cost of 270 million yuan, so the loss still exceeds 200 million yuan.
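The arithmetic behind these figures can be checked with a short sketch. The function names are illustrative, all amounts are the article's rounded numbers in millions of yuan, and the implied revenue for the first scenario is derived here rather than stated in the source.

```python
def monthly_loss(machine_cost_m: float, revenue_m: float) -> float:
    """Monthly loss in millions of yuan: machine cost minus revenue."""
    return machine_cost_m - revenue_m


def implied_revenue(machine_cost_m: float, loss_m: float) -> float:
    """Monthly revenue in millions of yuan implied by a quoted cost and loss."""
    return machine_cost_m - loss_m


if __name__ == "__main__":
    # AMD scenario: revenue 45, machine cost 270 (millions of yuan per month).
    print("AMD scenario loss:", monthly_loss(270, 45))
    # First scenario: machine cost 450, quoted loss 400.
    print("Implied revenue, first scenario:", implied_revenue(450, 400))
```

The AMD case works out to a loss of 225 million yuan, consistent with “exceeds 200 million”. The first case implies revenue of about 50 million yuan, in line with the 45 million quoted for the AMD case once rounding is allowed for.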