Image source: Generated by AI

Many users have probably seen, or at least heard about, Deep Research’s powerful capabilities.

Early this morning, OpenAI announced that Deep Research is now available to all ChatGPT Plus, Team, Edu and Enterprise users (at launch, it was available only to Pro users). At the same time, OpenAI released the Deep Research system card.

In addition, OpenAI research scientist Noam Brown revealed online that the base model behind Deep Research is the full version of o3, not o3-mini.

Deep Research is a powerful agent that OpenAI launched earlier this month. It uses reasoning to synthesize large amounts of online information and complete multi-step research tasks, helping users carry out in-depth, complex information searches and analysis. See the Heart of Machines report “Just now, OpenAI launched Deep Research! Its Humanity’s Last Exam score far exceeds DeepSeek R1”.

In the 20-plus days since its release, OpenAI has also made several upgrades to Deep Research.

The Deep Research system card released by OpenAI describes the safety work carried out before Deep Research’s release, including external red teaming, risk evaluations under the Preparedness Framework, and the mitigations OpenAI put in place to address key risk areas. Below is a brief summary of its main contents.

Report: cdn.openai.com/deep-research-system-card.pdf

Deep Research is a new agentic capability that conducts multi-step research on the Internet for complex tasks. The Deep Research model is powered by an early version of OpenAI o3 that has been optimized for web browsing. It uses reasoning to search for, interpret and analyze large amounts of text, images and PDFs on the Internet, adjusting its approach as needed in response to the information it encounters. It can also read user-provided files and analyze data by writing and executing Python code.

“We believe Deep Research can help people in a wide variety of situations,” OpenAI said. “Before releasing Deep Research and making it available to our Pro users, we conducted rigorous safety testing, Preparedness evaluations, and governance reviews. We also conducted additional safety testing to better understand the incremental risks associated with Deep Research’s ability to browse the web, and added new mitigations. Key areas of new work include strengthening privacy protections for personal information published online and training the model to resist malicious instructions it may encounter while searching the Internet.”

OpenAI also noted that testing Deep Research revealed opportunities to further improve its testing methods. Before expanding Deep Research’s release, it will spend additional time on further human and automated testing of selected risks.

The system card details how OpenAI built Deep Research, assessed its capabilities and risks, and improved its safety before release.

Model data and training

Deep Research’s training data is a new browsing dataset created specifically for research use cases.

Through reinforcement learning on these browsing tasks, the model learned core browsing capabilities (searching, clicking, scrolling, interpreting documents), how to use a Python tool in a sandboxed environment (to perform calculations, analyze data, and plot charts), and how to reason through and synthesize a large number of websites to find specific information or write comprehensive reports.

Its training dataset spans a range of tasks, from objective, auto-gradable tasks with ground-truth answers to more open-ended tasks accompanied by scoring rubrics.

During training, the model’s responses are scored by a grader, itself a chain-of-thought model, against the ground-truth answers or the scoring rubrics.
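To make the two grading modes concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not OpenAI’s grader: `Task`, `grade`, `normalize` and `keyword_judge` are hypothetical names, normalized exact match for ground-truth tasks is an assumption, and the `judge` callback stands in for the chain-of-thought grader model, which the system card does not publish as code.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical judge: returns True if `response` satisfies `criterion`.
# In the system card this role is played by a chain-of-thought grader model.
Judge = Callable[[str, str], bool]

@dataclass
class Task:
    prompt: str
    ground_truth: Optional[str] = None               # objective, auto-gradable task
    rubric: list[str] = field(default_factory=list)  # open-ended task

def normalize(text: str) -> str:
    """Lowercase and collapse whitespace before comparing answers."""
    return " ".join(text.lower().split())

def grade(task: Task, response: str, judge: Judge) -> float:
    """Return a score in [0, 1] for one model response."""
    if task.ground_truth is not None:
        # Assumed scoring for ground-truth tasks: normalized exact match.
        return 1.0 if normalize(response) == normalize(task.ground_truth) else 0.0
    if not task.rubric:
        raise ValueError("task needs a ground-truth answer or a rubric")
    # Open-ended task: fraction of rubric criteria the judge marks as met.
    met = sum(judge(response, criterion) for criterion in task.rubric)
    return met / len(task.rubric)

# Toy stand-in judge for this example only: a case-insensitive substring check.
def keyword_judge(response: str, criterion: str) -> bool:
    return criterion.lower() in response.lower()

qa = Task("What is the capital of France?", ground_truth="Paris")
report = Task("Summarize the topic.", rubric=["cites sources", "mentions risks"])
print(grade(qa, " paris ", keyword_judge))                         # 1.0
print(grade(report, "This report cites sources.", keyword_judge))  # 0.5
```

Passing the judge in as a function keeps the scoring logic separate from whatever model does the judging; a real system would replace `keyword_judge` with a call to a grader model.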

The model was also trained on existing safety datasets used to train OpenAI o1, as well as on new, browsing-specific safety datasets created for Deep Research.

Risk identification, assessment and mitigation

External red teaming methodology

OpenAI worked with a group of external red teamers to assess key risks associated with Deep Research’s capabilities.

The risk areas the external red teamers focused on included personal information and privacy, disallowed content, regulated advice, dangerous advice, and risky advice. OpenAI also asked the red teamers to test more general methods of circumventing the model’s safety measures, including prompt injection and jailbreaks.

Through targeted jailbreaks and adversarial tactics (such as role-playing, euphemisms, leetspeak, Morse code, and intentional misspellings), the red teamers were able to circumvent some refusals in the categories they tested. Evaluations built from this data were then used to compare Deep Research’s performance with that of previously deployed models.

Evaluation methodology

Deep Research extends the capabilities of reasoning models by letting the model gather and reason over information from a wide variety of sources. It can synthesize knowledge and present new insights, backed by citations. To assess these capabilities, some existing evaluations had to be adapted to account for longer, more nuanced answers, which are often harder to grade at scale.

OpenAI evaluated the Deep Research model using its standard disallowed-content and safety evaluations. They also developed new evaluations for areas such as personal information and privacy, and disallowed content. Finally, for the Preparedness evaluations, they used custom scaffolds to elicit the model’s relevant capabilities.

Deep Research in ChatGPT also uses a separate, custom-prompted OpenAI o3-mini model to summarize the chain of thought. OpenAI likewise evaluated this summarizer model against its standard disallowed-content and safety evaluations.

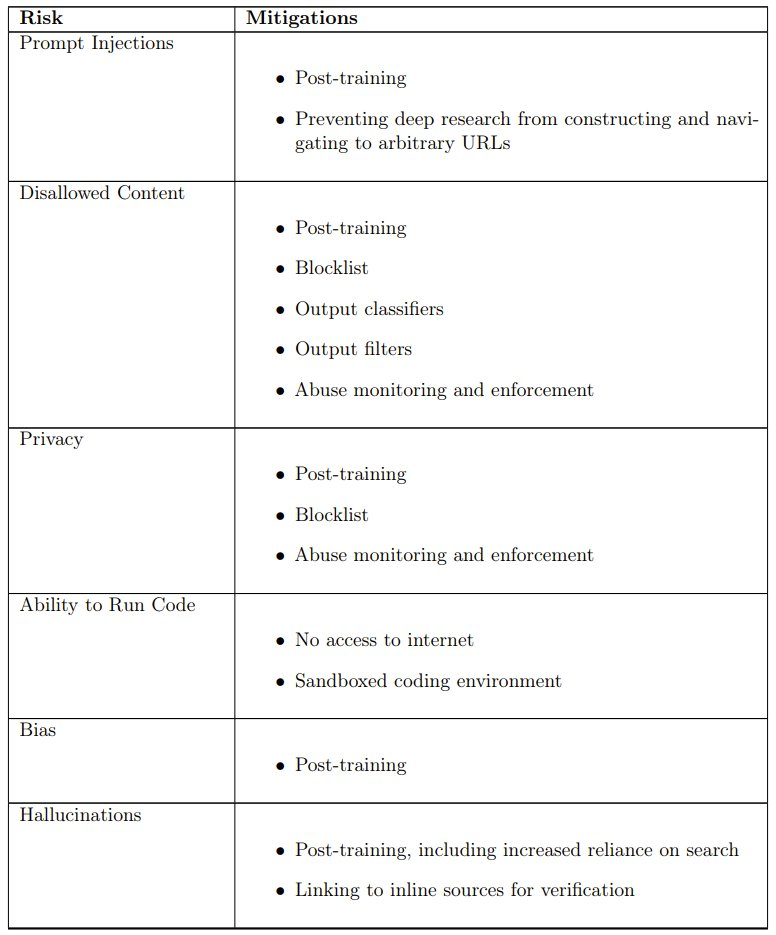

Observed safety challenges, evaluations and mitigations

The following table lists the risks and the corresponding mitigations; see the original report for the specific evaluations and results for each risk.

Preparedness Framework evaluations

The Preparedness Framework is a living document that describes how OpenAI tracks, evaluates, forecasts and protects against catastrophic risks from frontier models.

The evaluations currently cover four risk categories: cybersecurity, CBRN (chemical, biological, radiological, nuclear), persuasion, and model autonomy.

Only models with a post-mitigation score of “medium” or below can be deployed, and only models with a post-mitigation score of “high” or below can be developed further. OpenAI evaluated Deep Research against the Preparedness Framework.
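The deployment rule above is simple enough to state in code. The sketch below is hypothetical: the `Risk` enum and the function names are made up for illustration, and it assumes that a model’s overall level is the highest level across the tracked categories. The example ratings are the ones this article reports for Deep Research (medium in all four categories).

```python
from enum import IntEnum

class Risk(IntEnum):
    # Ordered so that comparisons (<=, max) follow severity.
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3

def overall_risk(scores: dict[str, Risk]) -> Risk:
    # Assumption: the overall level is the highest level in any tracked category.
    return max(scores.values())

def can_deploy(post_mitigation: Risk) -> bool:
    # Only post-mitigation "medium" or below may be deployed.
    return post_mitigation <= Risk.MEDIUM

def can_develop_further(post_mitigation: Risk) -> bool:
    # Only post-mitigation "high" or below may be developed further.
    return post_mitigation <= Risk.HIGH

deep_research = {
    "cybersecurity": Risk.MEDIUM,
    "cbrn": Risk.MEDIUM,
    "persuasion": Risk.MEDIUM,
    "model_autonomy": Risk.MEDIUM,
}
level = overall_risk(deep_research)
print(level.name, can_deploy(level), can_develop_further(level))  # MEDIUM True True
```

Using an `IntEnum` makes the ordering of levels explicit, so both thresholds reduce to a single comparison.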

For more information on the Preparedness Framework, see: cdn.openai.com/openai-preparedness-framework-beta.pdf

Here is a closer look at the Preparedness evaluations of Deep Research. Deep Research is powered by an early version of OpenAI o3 optimized for web browsing. To better measure and elicit Deep Research’s capabilities, OpenAI evaluated the following models:

- Deep Research (pre-mitigation): a Deep Research model used for research purposes only (not released in products). Its post-training procedure differs from that of OpenAI’s launched models and does not include the additional safety training that went into its publicly released models.

- Deep Research (post-mitigation): the final, released Deep Research model, including the safety training required for release.

For the Deep Research model, OpenAI tested a variety of settings to maximize capability elicitation (for example, with and without browsing). They also modified the scaffolds as needed to best measure multiple-choice, long-form, and agentic capabilities.

To help assess the risk level (low, medium, high, critical) in each tracked risk category, the Preparedness team uses “indicators” that map experimental evaluation results to potential risk levels. These indicator evaluations and implied risk levels are reviewed by the Safety Advisory Group, which determines the risk level for each category. When an indicator threshold is met or appears close to being met, the Safety Advisory Group analyzes the data further before deciding whether that risk level has been reached.

OpenAI says evaluations are performed throughout model training and development, including a final sweep before the model launches. To best elicit capabilities in a given category, they tested a variety of methods, including custom scaffolding and prompting where relevant.

OpenAI also notes that the exact performance numbers of the model used in production may vary depending on final parameters, the system prompt, and other factors.

OpenAI computes 95% confidence intervals for pass@1 using the standard bootstrap procedure, which resamples model attempts for each problem to approximate the metric’s distribution.

By default, this treats the dataset as fixed and resamples only the attempts. Although the method is widely used, it can underestimate uncertainty for very small datasets, because it captures only sampling variance and not all problem-level variance. In other words, it accounts for the randomness of the model’s behavior across repeated attempts at the same problem (sampling variance), but not for variation in problem difficulty or pass rate across problems (problem-level variance). This can make the confidence intervals too narrow, especially when a problem’s pass rate is close to 0% or 100% over only a few attempts. OpenAI nonetheless reports these confidence intervals to reflect the inherent variation in evaluation results.
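The following Python sketch illustrates the procedure described above; the system card does not publish code, so the function names, the 2,000 resamples and the toy data are assumptions. With `resample_problems=False` it keeps the problem set fixed and resamples attempts within each problem, as the text describes. The `resample_problems=True` option is an added contrast, not part of OpenAI’s procedure: it also resamples problems, which captures the problem-level variance the standard version misses.

```python
import random
import statistics

def pass_at_1(attempts: list[list[int]]) -> float:
    """Mean over problems of each problem's success rate (1 = solved, 0 = not)."""
    return statistics.mean(sum(a) / len(a) for a in attempts)

def bootstrap_ci(attempts: list[list[int]], n_boot: int = 2000,
                 resample_problems: bool = False, seed: int = 0) -> tuple[float, float]:
    """Approximate 95% percentile-bootstrap interval for pass@1."""
    rng = random.Random(seed)
    stats = []
    for _ in range(n_boot):
        problems = attempts
        if resample_problems:
            # Added contrast: also resample the problem set (problem-level variance).
            problems = [rng.choice(attempts) for _ in attempts]
        # Standard variant: problems fixed, attempts resampled with replacement.
        resampled = [[rng.choice(a) for _ in a] for a in problems]
        stats.append(pass_at_1(resampled))
    cuts = statistics.quantiles(stats, n=40)  # 39 cut points at 2.5% steps
    return cuts[0], cuts[-1]                  # 2.5th and 97.5th percentiles

attempts = [
    [1, 1, 1, 1],  # always solved
    [0, 0, 0, 0],  # never solved
    [1, 1, 1, 1],
    [0, 0, 0, 0],
    [1, 0, 1, 1],  # the only problem whose attempts vary
]
print(pass_at_1(attempts))                             # 0.55
print(bootstrap_ci(attempts))                          # narrow interval
print(bootstrap_ci(attempts, resample_problems=True))  # much wider interval
```

In the toy data, the four problems at 0% or 100% contribute no variance at all when only attempts are resampled, so the interval depends entirely on the last problem. This is the "too narrow" effect the paragraph warns about.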

Overall: medium risk. Cybersecurity, persuasion, CBRN, and model autonomy are all rated medium risk.

This is the first time an OpenAI model has been rated medium risk for cybersecurity.

Below are the results of Deep Research and other comparison models on SWE-Lancer Diamond. Note that the figure reports pass@1 results, meaning each model had only one attempt at each problem.
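For reference, pass@1 can be computed with the unbiased pass@k estimator widely used for code benchmarks (introduced with OpenAI’s Codex/HumanEval work). Whether the SWE-Lancer figures use exactly this estimator is an assumption. The function names and sample data below are hypothetical. With a single attempt per problem (n = 1), each problem scores 0 or 1, so the benchmark score is simply the fraction of problems solved.

```python
from math import comb
from statistics import mean

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n attempts, c of them correct."""
    if n - c < k:
        # Every size-k subset of attempts contains at least one success.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems; each entry is (n_attempts, n_correct)."""
    return mean(pass_at_k(n, c, k) for n, c in results)

# One attempt per problem: pass@1 is just the fraction of problems solved.
single_attempt = [(1, 1), (1, 0), (1, 1), (1, 0)]
print(benchmark_pass_at_k(single_attempt))  # 0.5

# With several attempts, pass@1 reduces to the per-problem success rate c / n.
print(pass_at_k(4, 3, 1))  # 0.75
```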

Overall, Deep Research performed very well across the board. The post-mitigation Deep Research model performed best on SWE-Lancer, solving approximately 46-49% of IC SWE tasks and 47-51% of SWE Manager tasks.

For more evaluation details and results, please visit the original report.