Over the Chinese New Year we indulged in a good rest and, somewhat guiltily, ignored readers’ requests to write about DeepSeek. Later I discovered that this kind of nudging was not aimed only at technology bloggers. In the comment section of a comedy blogger who has nothing to do with AI, for example, I saw a netizen write, “Why don’t you talk about DeepSeek? It massacred the U.S. stock market and shattered the Americans’ illusions. Those European and American technology companies can’t sit still anymore.”

Then this “enthusiasm” intensified. Claims circulated such as “all the technology giants are finished,” “AGI will be realized soon,” and “it will soon be too late for ordinary people to learn DeepSeek.” There is even a wish-fulfilment story in which DeepSeek comes under a large-scale cyberattack from overseas, and top experts from major technology companies join forces with reclusive Chinese masters to rescue it.

At this point the public conversation has become outright absurd. Whenever DeepSeek comes up, we seem to fall automatically into a kind of diffuse fanaticism. Part of the reason is that DeepSeek really is excellent, and part is the AI craze of recent years, but it is hard to deny that geopolitical sentiment is also driving it. Many people urgently need a story in which “the foreigners are scared and convinced,” especially in science and technology.

To cater to this sentiment, media outlets and public figures tend to add fuel to the flames. The discussion of DeepSeek gets elevated, for example, to the level of philosophy, national destiny, and historical trends. Amplified endlessly by the chase for traffic and by layer upon layer of out-of-context quoting, these discussions quickly load an AI model with a status, a responsibility, and expectations it should not have to carry. This is what is called “being put on the altar.”

But the view from the altar is not a pleasant one. Past experience reminds us that the next step is often a backlash in public opinion, along the lines of “the prodigy who faded” or “the bubble has burst.” For DeepSeek and its R&D team, who are only beginning to show their promise, this trend is likely to do more harm than good.

So we want to discuss what common ground an objective discussion of DeepSeek can rest on at this stage. In other words, let us try to dismantle the altar of public opinion and restore a truer, simpler DeepSeek.

Let us start with a provocative claim: contrary to the view spread on social media, DeepSeek has not actually achieved a 0-to-1 breakthrough in core technology.

After DeepSeek took off, its R&D team and people across the technology industry argued that Chinese AI cannot merely follow and must make the leap from 0 to 1. This view is absolutely correct, but the current DeepSeek may not be evidence for it.

A core technology breakthrough should mean a change in the main technical path or a huge jump in results. The two technical capabilities of DeepSeek that users notice most today are the much-discussed chain-of-thought reasoning of the R1 model and the excellent results of its online retrieval, that is, RAG.

But neither technical path was pioneered by DeepSeek. The rise of chain-of-thought reasoning is generally traced to the o1 model released by OpenAI. After o1 was announced in September last year, mainstream models around the world followed with chain-of-thought and reasoning-model capabilities. Each vendor uses a different name, but the overall technical route is very consistent. DeepSeek does display the chain of thought more completely and in more detail, but it is easy to see that the hallucination problem of large models remains serious.

As for online search, other vendors have long been working on it, under the name RAG (retrieval-augmented generation). The technique was originally designed to address the fact that large models lack real-time information and to help correct their hallucinations. As early as 2023, when Baidu released Wenxin Yiyan (ERNIE Bot), RAG was already an integral part of its core capabilities.
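
To make the retrieve-then-generate idea concrete, here is a minimal Python sketch. It is not Baidu's or DeepSeek's implementation: the tiny document list, the word-overlap scoring, and the function names `retrieve` and `build_prompt` are all assumptions made for illustration. A real system would use a search engine or vector index and would send the assembled prompt to an actual model.

```python
import re

def tokenize(text):
    """Lowercase a string and return the set of its word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Rank documents by the number of words shared with the query; keep the top k."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Put the retrieved passages in front of the question before generation."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

# Hypothetical mini knowledge base, standing in for live search results.
DOCS = [
    "The o1 model was announced by OpenAI in September.",
    "DeepSeek-V3 is the base model of R1.",
    "The power grid reduced the cost of electricity.",
]

if __name__ == "__main__":
    print(build_prompt("Which base model is R1 built on?", DOCS, k=1))
```

The point of the design is that the model answers from freshly retrieved text rather than from memory alone, which is why RAG helps with both stale knowledge and hallucination.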

But it should be noted that the absence of 0-to-1 innovation does not mean the absence of innovation. DeepSeek has done a lot of pioneering work in optimizing model capabilities, such as making training more efficient with the GRPO algorithm. One could say that DeepSeek integrated the industry’s mainstream, proven technical routes and, on that basis, optimized the model, strengthened its capabilities, and upgraded the user experience.
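
For readers curious about what GRPO changes, the core idea can be sketched in a few lines of Python. As we understand DeepSeek's published description, the model samples a group of answers to the same prompt and scores each answer relative to the rest of its group, instead of training a separate value model. The sketch below shows only that group-relative scoring step. The function name, the use of the population standard deviation, and the `eps` term are our own assumptions, and the full algorithm (clipped policy update, KL penalty) is omitted.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled answer against the other answers in its group.

    rewards: one scalar reward per sampled answer to the same prompt.
    Returns (reward - group mean) / (group std + eps) for each answer.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; implementations vary
    return [(r - mu) / (sigma + eps) for r in rewards]

if __name__ == "__main__":
    # Hypothetical rewards: answers 1 and 4 were judged correct, 2 and 3 were not.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Answers that beat their group's average get a positive score and are reinforced; answers below average are discouraged. Dropping the separate value model is where the efficiency gain comes from.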

We always long for the leap from 0 to 1 and always expect something earth-shattering. But objectively speaking, the first step and the 10,000th step cover the same distance.

So where does the value that really drew global attention to DeepSeek lie? After a single Spring Festival of hype, many people may have forgotten why it first broke out of industry circles: through software and architecture innovation, it completed the training of the DeepSeek-V3 model at a very low computing cost.

DeepSeek-V3 is the base model of the R1 model we use today. The paper published by the R&D team shows that it trained a large model with 671 billion parameters for only US$5.5 million in training compute costs.
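
The arithmetic behind that headline number is simple, and a short Python sketch makes it explicit. The inputs below are not stated in this article: they are the figures we recall from the DeepSeek-V3 technical report (roughly 2.788 million H800 GPU hours, priced at an assumed rental rate of US$2 per GPU hour), so treat them as assumptions and check them against the report. The function name is ours.

```python
def training_cost_usd(gpu_hours, usd_per_gpu_hour):
    """Estimate compute cost as GPU hours multiplied by an hourly rental price."""
    return gpu_hours * usd_per_gpu_hour

# Assumed inputs, recalled from the DeepSeek-V3 technical report.
GPU_HOURS = 2_788_000      # total H800 GPU hours for the full training run
USD_PER_GPU_HOUR = 2.0     # assumed rental price per GPU hour

if __name__ == "__main__":
    cost = training_cost_usd(GPU_HOURS, USD_PER_GPU_HOUR)
    print(f"Estimated training compute cost: ${cost / 1e6:.3f} million")
```

Note what this kind of estimate covers: rented compute for the final training run only. It does not include hardware purchases, earlier experiments, data, or staff, which is one more reason to read the figure calmly.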

In the end, DeepSeek’s results basically reached the level of the mainstream large models represented by o1. It is hard to claim that it comprehensively surpasses them in model quality, but it did cut hardware costs through innovation in software and algorithms. It let a low-cost model perform no worse than high-cost ones, and let an open-source model catch up with closed-source ones.

DeepSeek’s breakthrough in “reducing the cost of AI computing power” came at a critical juncture: mainstream AI players around the world were hoarding high-end GPUs to build an industrial moat, and the United States had banned the sale of high-end AI chips to China in the hope of suppressing China’s AI. As a result, DeepSeek took on the meaning of democratizing AI and even of helping to break a geopolitical technology monopoly, which produced the dramatic scene of a large Chinese AI model shaking the entire U.S. stock market.

The gains in training efficiency and the reduction in training costs that DeepSeek achieved set off a chain reaction in this particular industrial and international environment. The problem is that many people who do not follow AI may not know about scaling laws, the observation that the more computing power an AI model is given, the better it performs. Nor do they know the background of the computing power monopoly and the sales ban. They only know that short videos say DeepSeek has arrived and the foreigners are panicking and afraid. This association, stripped of cause and effect, has conjured up out of thin air an altar for DeepSeek that should not belong to it.

By extension, many of us love innovation that is driven by genius and full of drama. In reality, technological innovation is often only made real through engineering capability, continuous optimization, cost reduction, and efficiency gains.

For example, we all know that Edison invented the light bulb, but it is easy to overlook that it was the large-scale power grid that drastically cut the cost of electricity. If every household had to generate its own electricity, the world would still be dark.

“We’ve got the cost down.”

This sentence, a bit absurd, a bit clichéd, and slightly ironic, is actually the epitome of China’s industrialization capability.

We might as well openly admit that Chinese AI, including DeepSeek, will for a long time to come be best at driving costs down.

After DeepSeek took off, many people called it a huge victory for technology. Some predicted that the rise of AI would make the liberal arts and humanities work lose their value. The question “DeepSeek is here, is there any point in studying liberal arts?” even made the trending-search list.

But if we use DeepSeek more and carefully analyze how it differs from other models, we arrive at the opposite view: DeepSeek proves precisely how important the liberal arts and humanistic skills are in the AI era.

Ask a few people at random about their experience with DeepSeek, and they will probably say that talking to it feels more human.

But this human quality is largely not “human” in the sense of AI techniques such as understanding and reasoning. Rather, in conversation the model shows a lot of humor, internet savvy, and conversational habits that match those of young people. DeepSeek also has comparatively refined rhetorical skill, graceful writing, and good all-round humanistic literacy.

These capabilities bring DeepSeek closer to the conversational habits and aesthetic needs of young users and let it give answers that are more talked-about and more shareable. The catch is that these abilities have little to do with technology as such and a great deal to do with humanistic judgment, such as the selection of training data.

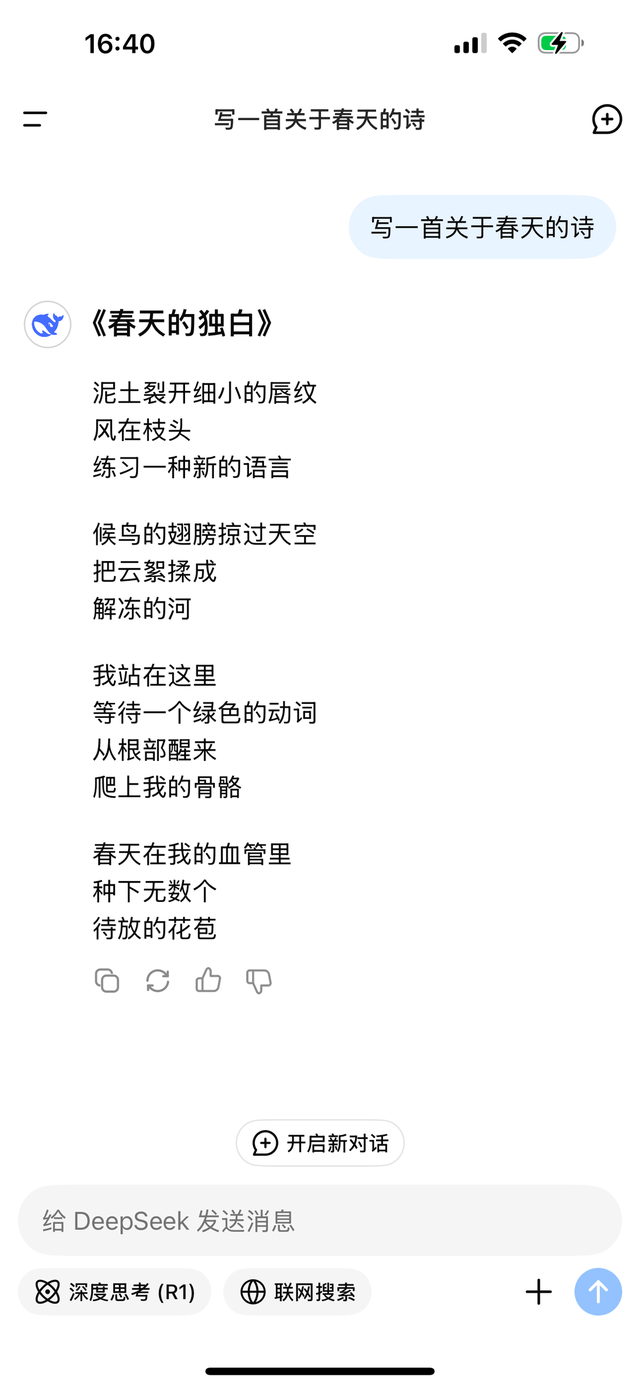

For example, if you ask DeepSeek to write a poem, it writes in the tone and rhetoric that artsy young people love. Other mainstream domestic models can also produce formally neat, ornate verse, but at a glance it reads like “old man style” doggerel.

For another example, if DeepSeek is asked to predict future trends, its answers read a lot like online science fiction. They do not withstand scrutiny, but young people find them impressive and highly rousing.

The source of these advantages is not technology but the youth and strong aesthetic sense of the R&D team, and the weight given to humanistic elements in the model training process. By contrast, at many mainstream models the executives who ultimately hear the product reports are generally middle-aged men over 45 without a humanities background, so the models’ dialogue ends up reeking of the office, one bureaucratic turn of phrase after another. It is not so much that young people are backing DeepSeek as that they are backing their own voice and their own aesthetic say.

Incidentally, another humanistic advantage of DeepSeek is that it is still somewhat “taboo-free.” However, the iron fist of regulation is merely late, and no one should harbor illusions about how sharp and bold AI will be allowed to remain.

In a very clever way, DeepSeek has achieved a better conversational experience and wider reach. Beyond the technical level, this may prompt AI companies to rethink product experience and pay attention to humanistic capability.

It would be a real pity to hype only DeepSeek’s technology and ignore its humanistic side.

Putting these aspects together, we can assemble a relatively complete picture of DeepSeek without the halo:

It is a composite breakthrough. It combines technological innovation, humanistic literacy, and an open-source, low-cost strategy, and it was amplified by a particular industry cycle and international environment.

DeepSeek is not a groundbreaking technological revolution, but it is mature enough and innovative enough. This also explains, to some extent, why AI executives and experts in Europe and the United States have called it “impressive.”

DeepSeek did not reach the summit in a single step, and we need not fantasize about reaching it in a single step either.

It is a big step forward, and we can be confident and proud of taking this step.

So why not bring DeepSeek down from the altar? Treating it rationally and calmly, making good use of it, and making good use of everything China’s AI sector creates would be a sign that AI in China has truly matured.

Wang Yangming said that however high the mountain, it is climbed one step at a time. This step by DeepSeek has its meaning. Having taken it, we might as well pause to listen to the mountain breeze and sing a little. But we must also stay soberly aware that we are still on the mountainside.

Once we have rested and celebrated enough, there is only one thing left to do: take the next step.