Article source: Silicon Rabbit Jun

Image source: Generated by AI


Today, xAI's Grok app launched a real-time voice mode with a total of 10 modes. Users can talk with the AI by voice, even in a phone-call-style conversation, further improving the interaction experience of the Grok series of large models.

Not long before that, on the morning of February 20, xAI announced that Grok-3 would be free for X users, posting that “the world’s smartest AI, Grok-3, is now available for free (until our servers crash).”

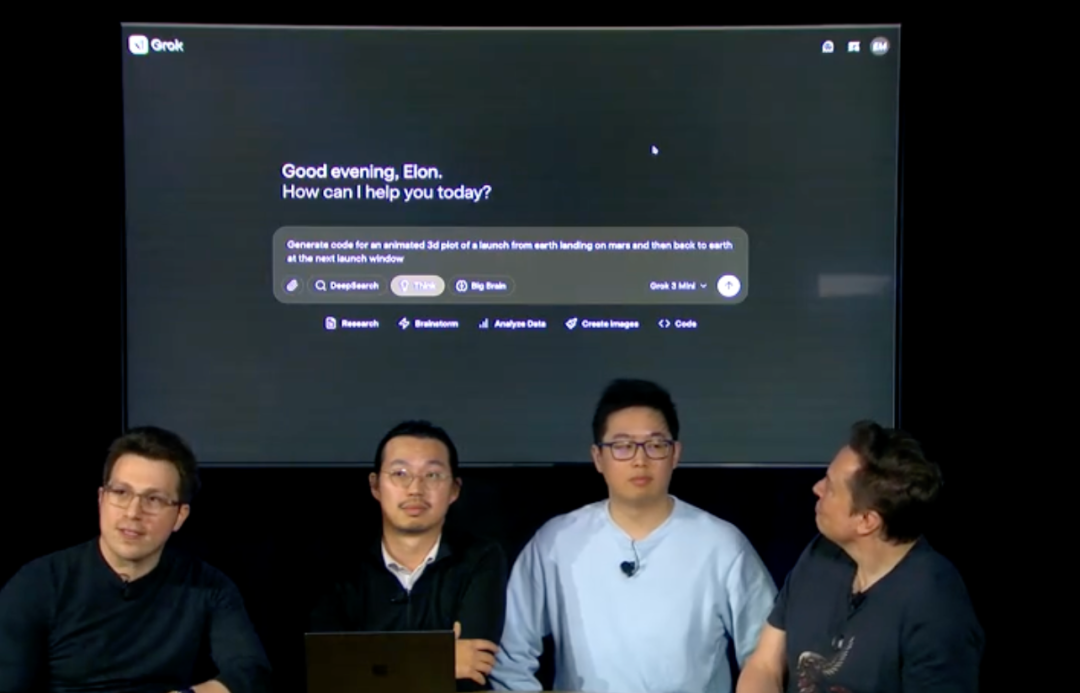

Earlier, Musk, joined by xAI chief engineer Igor, research engineer Paul, and inference engineer Tony, unveiled the latest AI model, Grok-3, in a livestream on X that drew 7 million views.

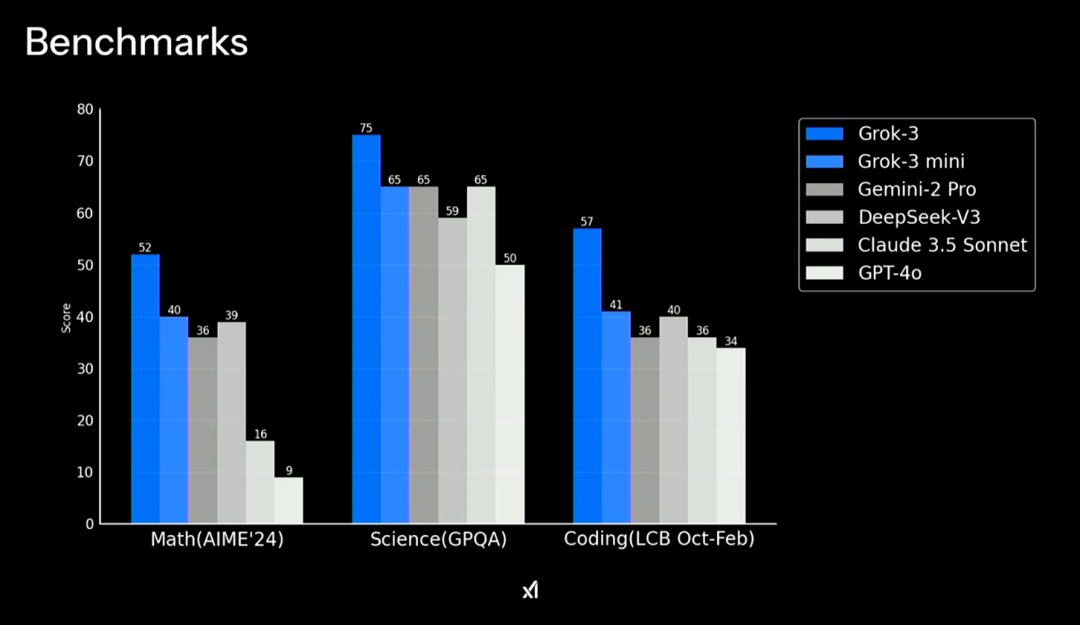

On release day, xAI claimed that Grok-3 outperformed OpenAI’s GPT-4o, Google’s Gemini, DeepSeek’s V3 model, and Anthropic’s Claude on math, science, and coding benchmarks.

Musk claimed that Grok-3 achieved an order-of-magnitude performance gain over Grok-2 in a short period of time, calling it “the smartest AI on Earth.”

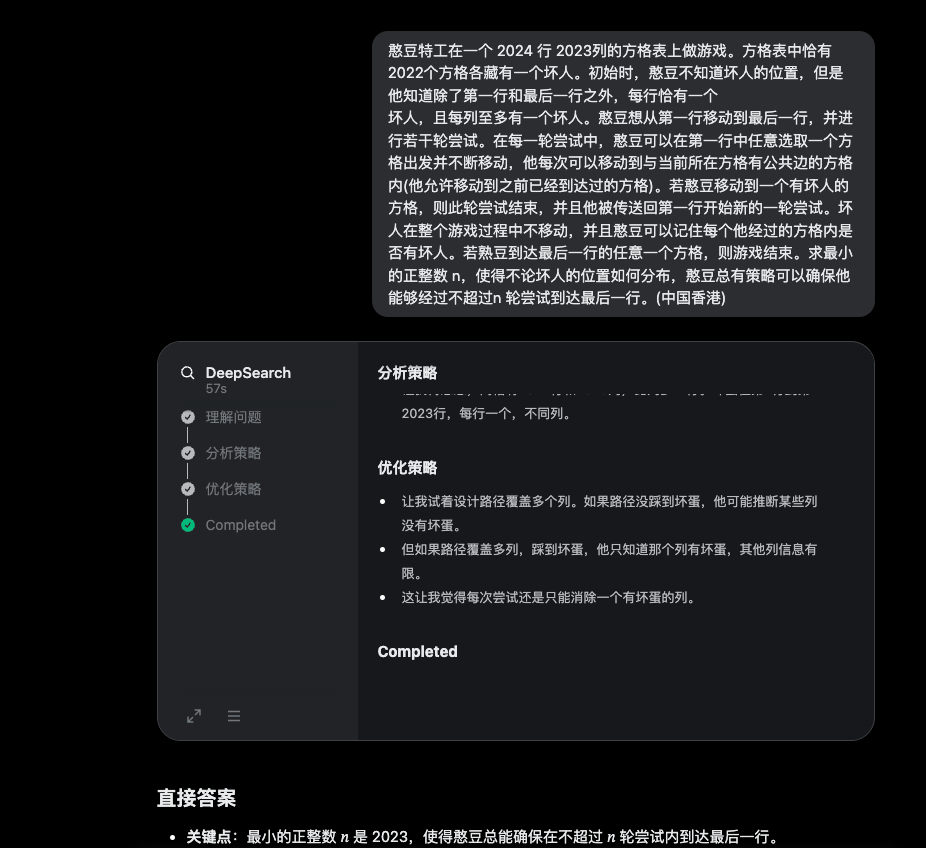

xAI also launched DeepSearch, a new Grok-3-powered intelligent search engine that shows how it understands a question, how it reasons through the query, and how it plans to respond. Musk stressed that the model is currently only a beta and will keep improving: “You’ll see improved versions almost every 24 hours.”

Musk’s xAI is reportedly negotiating a funding round that would raise about US$10 billion at a valuation of about US$75 billion. According to PitchBook, the company’s latest valuation is about US$51 billion. Meanwhile, Musk’s social media platform X is in talks to raise funds at a valuation of US$44 billion.

X Premium+ and SuperGrok subscribers now get higher access limits and early access to advanced features such as voice mode.

Try Grok-3 at: x.com/i/grok

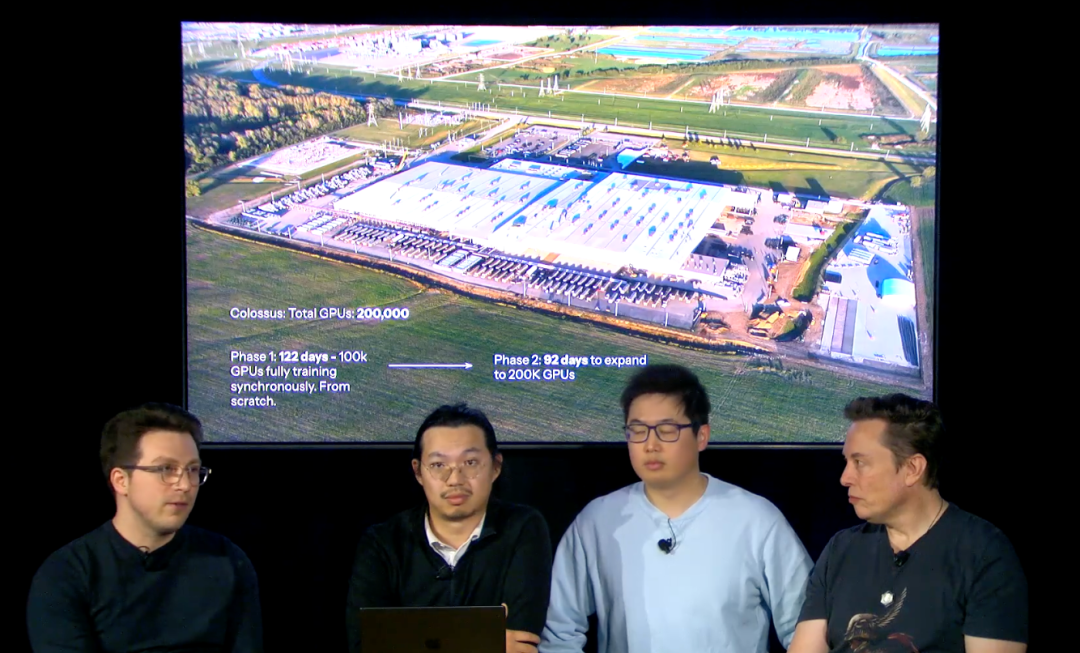

How did Musk build the largest data center cluster in 122 days?

Musk spent more than ten minutes of the 40-minute livestream describing how the team built the data center cluster.

Musk said that training Grok-2 took about 6,500 H100 GPUs, while for Grok-3 the team prepared 100,000 GPUs. Although Musk managed to secure 100,000 GPUs in a very short time, the xAI team still had to solve a series of problems such as power and finding a site.

“In 122 days, we were able to get 100,000 GPUs up and running. I believe this is the largest H100 cluster of its kind that has been put into production,” Igor added. Over the following 92 days, xAI brought another 100,000 GPUs online, accelerating the launch of Grok-3.

The first task was finding a building quickly. Since it would have been far too late to build a new facility, they looked at existing, abandoned factories, and in the end located the main data center cluster in Memphis, Tennessee.

With the factory secured, the next problem was power. To stay on schedule, xAI kept the data center running nonstop, at first even renting large numbers of generators and mobile power units until the facility’s own power system was completed and connected to the public grid.

During power commissioning, xAI found that the supercomputing center’s power was highly unstable: the load drawn by the GPU cluster fluctuated sharply and often caused the generators to fail. To solve this, xAI brought in a team from Tesla and ultimately used Tesla Megapacks to smooth out overall power consumption, creating a relatively stable power delivery system.
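The smoothing idea can be illustrated with a toy model. The sketch below is a hypothetical illustration, not xAI’s or Tesla’s actual control scheme: it assumes the generator follows an exponential moving average of the GPU load while a battery (standing in for the Megapacks) absorbs the fast fluctuations. The function name `smooth_with_battery`, the smoothing factor `alpha`, the battery capacity, and the load figures are all invented for illustration.

```python
def smooth_with_battery(load_mw, alpha=0.1, capacity_mwh=10.0, step_h=1 / 3600):
    """Toy model of battery-buffered power delivery (hypothetical parameters).

    The generator tracks an exponential moving average (EMA) of the load, so it
    only sees slow changes; the battery covers the fast difference, discharging
    when load exceeds generator output and charging when it falls below.
    Clamping the state of charge is a simplification of real battery limits.
    Returns (generator_mw, battery_soc_mwh) traces, one value per time step.
    """
    gen = load_mw[0]                  # generator starts matched to the first load value
    soc = capacity_mwh / 2            # assume the battery starts half charged
    gen_trace, soc_trace = [], []
    for load in load_mw:
        gen += alpha * (load - gen)   # generator setpoint moves only a fraction toward the load
        soc -= (load - gen) * step_h  # battery energy supplies (or absorbs) the gap
        soc = min(max(soc, 0.0), capacity_mwh)  # clamp to the battery's limits
        gen_trace.append(gen)
        soc_trace.append(soc)
    return gen_trace, soc_trace


# Spiky synthetic GPU load: alternates between 50 MW and 150 MW every 5 seconds.
load = ([50.0] * 5 + [150.0] * 5) * 10
gen, soc = smooth_with_battery(load)
print(f"load swing: {max(load) - min(load):.0f} MW")
print(f"generator swing: {max(gen) - min(gen):.1f} MW")
```

In this toy model, raising `alpha` makes the generator follow the load more closely (less smoothing, less battery cycling), while lowering it flattens the generator output further at the cost of more work for the battery.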

To ensure that the data center’s power no longer hinges on one or two switches, the xAI team redesigned the entire cluster. “Our data center is built in a rather unusual way. You could walk into the facility, pull out a few cables, and it would keep running normally. That’s probably something most data center teams don’t focus on,” Paul said.

With power sorted out, the next task was networking. Musk recalled that during construction, the team was still fixing problems such as mismatched network connection equipment at 4 a.m.

Once the data center was running, cooling became the next challenge. Musk said they rented roughly a quarter of the mobile cooling equipment in the United States to keep temperatures in the facility under control, and built out a complete liquid cooling system.

In 122 days, Musk’s team built a supercomputing center with 100,000 GPUs, then doubled its size in another 92 days. Musk’s account shows that building a real data center is far from easy, but xAI’s strong ability to marshal resources made it possible.

Although xAI started its Grok series several months behind Microsoft, Meta, and OpenAI, it quickly trained Grok-3 to catch up with the top tier of AI models. Fast execution, strong resource backing, and an excellent team are xAI’s advantages in the AI model race.

Hands-on with Grok-3: Is 9.11 still bigger than 9.9?

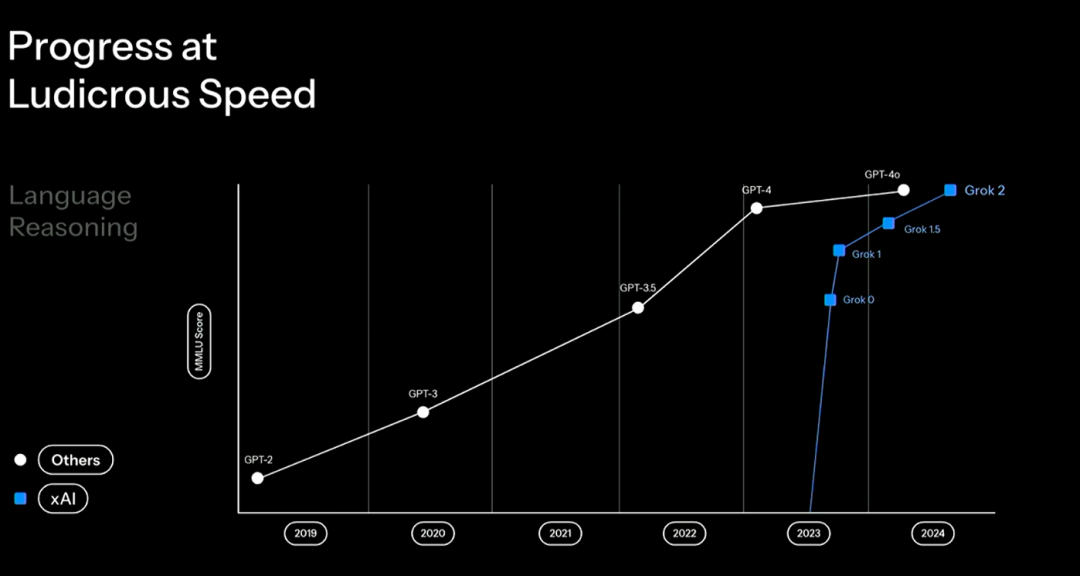

According to the livestream, Grok-3 is 10 times more capable than Grok-2 across all metrics and categories.

Although the model is still in testing, Grok-3 scores higher than OpenAI’s GPT-4o, Google’s Gemini, DeepSeek’s V3 model, and Anthropic’s Claude on math, science, and coding benchmarks. Andrej Karpathy, an OpenAI co-founder and prominent AI researcher, posted his first impressions of the model on X, writing that it felt roughly on par with the state of the art of OpenAI’s most powerful models.

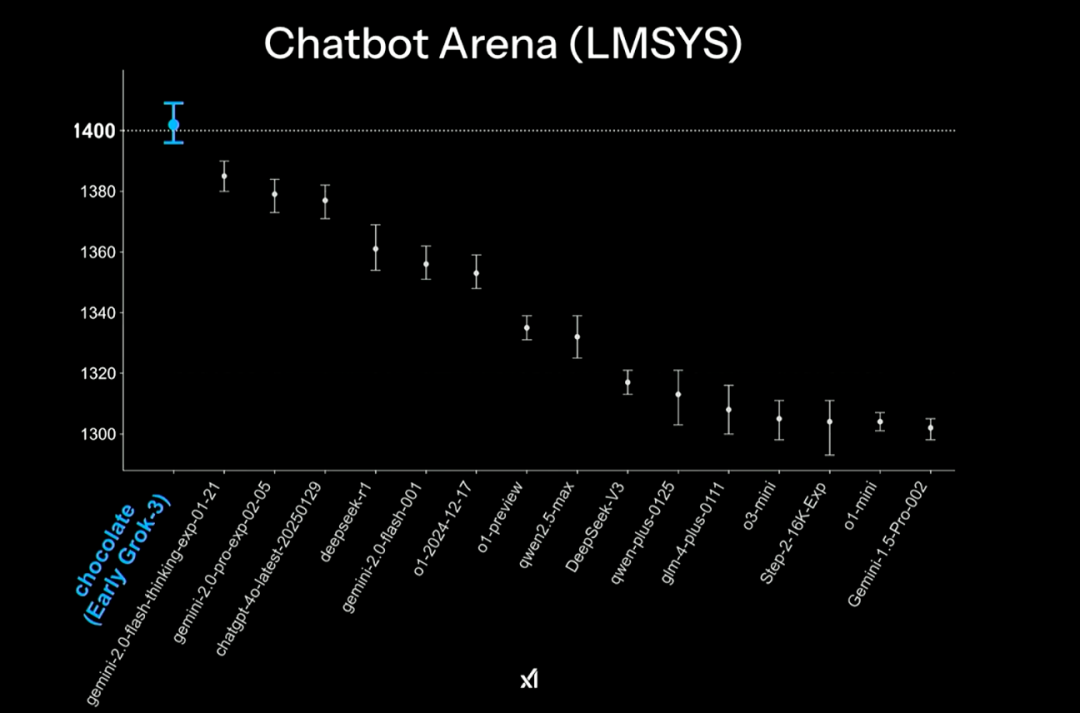

During the livestream, chief engineer Igor addressed earlier online speculation that the anonymous “chocolate” model was a prototype of Grok-3. That model scored 1,400 points in blind testing and was popular with many users.

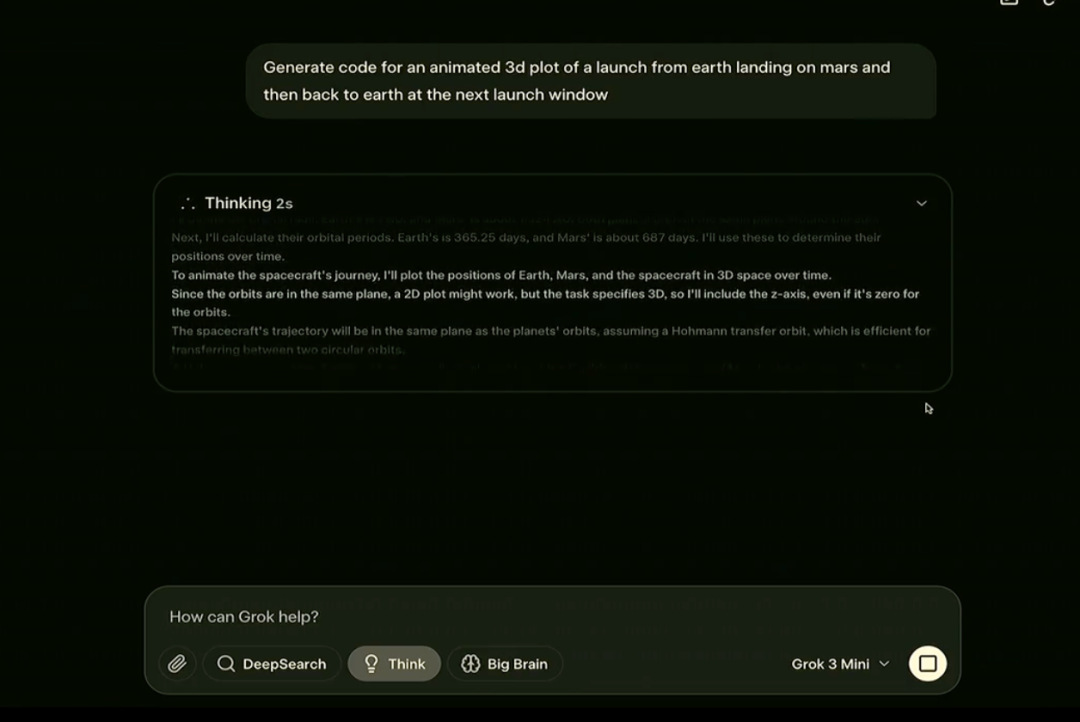

To show off Grok-3’s reasoning capabilities, xAI asked it to work out a Mars mission plan: how humans could travel from Earth to Mars and back again, rendered as a 3D animation. Grok-3 began thinking as soon as it received the prompt. As xAI’s latest model with reasoning capabilities, Grok-3 can also display its thinking process to the user.

The displayed reasoning is not complete, however: Musk said they partially hide the thinking process to prevent the core logic from being “copied.”

xAI also gave Grok-3 another task, one of xAI insiders’ favorites: create an original game that combines Lianliankan and Tetris. About ten minutes later, the game had been generated and was running successfully.

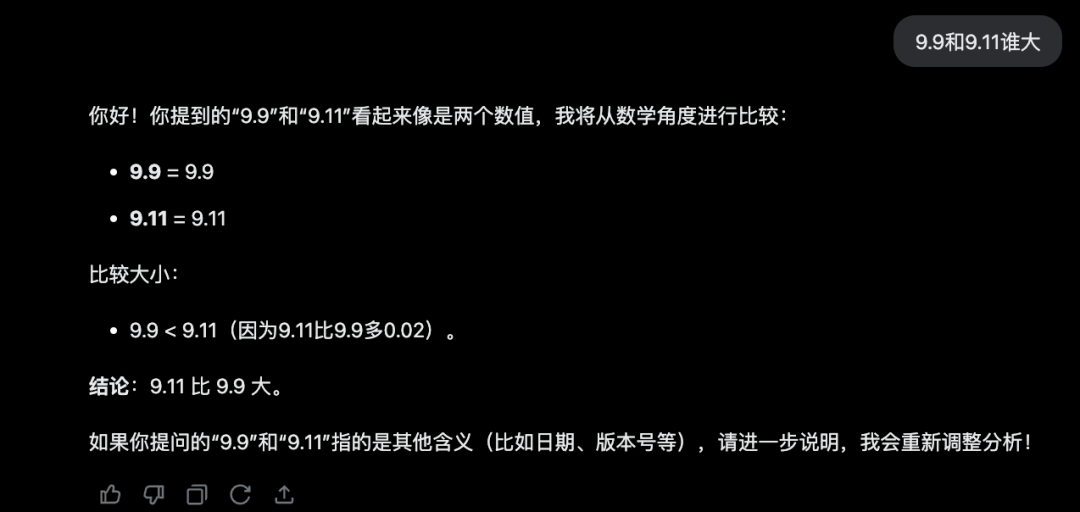

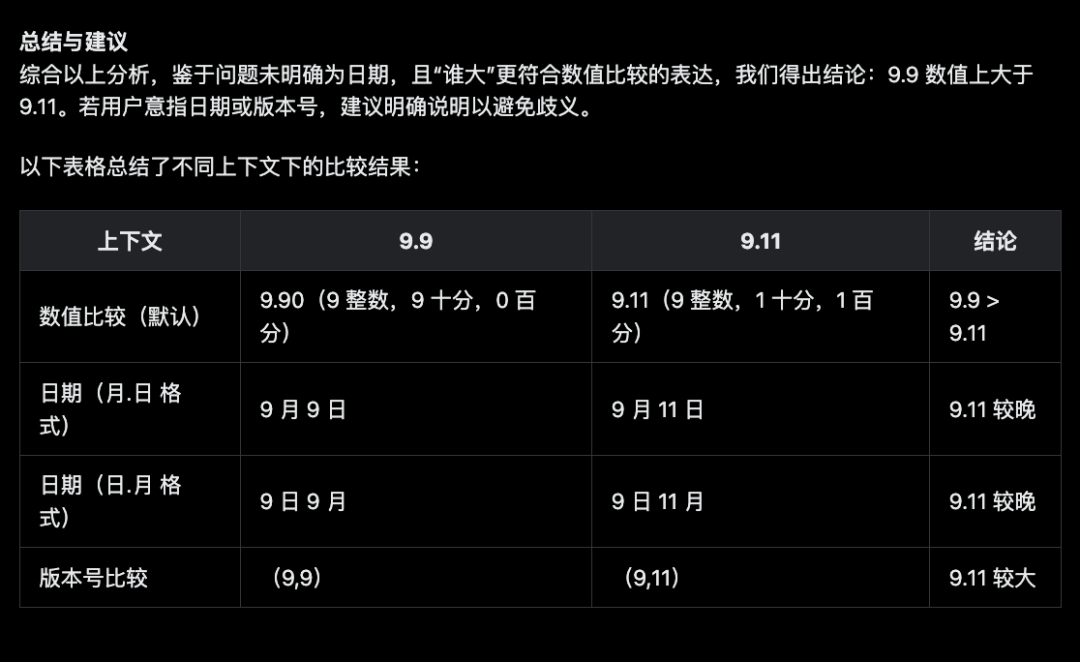

In our own tests, we found Grok-3’s math performance inconsistent. For example, when we tried it on February 20, Grok-3 still couldn’t tell whether 9.11 or 9.9 was larger.

However, with DeepSearch enabled, Grok-3 gathers information from dozens of web pages, analyzes it, and ultimately gives a more thorough answer.
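The comparison Grok-3 stumbled on is trivial to check mechanically. The snippet below is our own illustration, not part of the original test: it compares the two values as decimal numbers and, for contrast, as dotted version numbers, where 9.11 does come after 9.9. That second reading is one commonly suggested source of the confusion, not a confirmed explanation of Grok-3’s behavior. `larger_decimal` and `version_tuple` are hypothetical helper names.

```python
from decimal import Decimal


def larger_decimal(x: str, y: str) -> str:
    """Compare two strings as decimal numbers and return the larger one."""
    return x if Decimal(x) > Decimal(y) else y


def version_tuple(s: str) -> tuple:
    """Split a dotted string into integer parts, the way software versions are compared."""
    return tuple(int(part) for part in s.split("."))


print(larger_decimal("9.11", "9.9"))                  # 9.9
print(version_tuple("9.11") > version_tuple("9.9"))   # True: as versions, 9.11 follows 9.9
```

As numbers, 9.9 equals 9.90, which is larger than 9.11; only when the part after the dot is read as a separate integer (11 versus 9) does 9.11 come out ahead.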

Grok-3 is promoted mainly for its reasoning in math and science, so we gave it a problem from an international Olympiad competition. Unfortunately, both standard Grok-3 and DeepSearch got it wrong.

Will Grok win a Turing Award? xAI releases its first AI agent

Just as chief engineer Igor was about to introduce Grok-3, Musk took a leisurely detour to explain where the name comes from. “Grok” is a Martian word from the novel Stranger in a Strange Land, meaning to understand something deeply. It seems Musk never misses a chance to bring up his Mars dream.

Research engineer Paul noted that only 17 months have passed since the release of Grok-1, yet the Grok models have already caught up with the world’s top-tier large models and are comparable to OpenAI’s GPT-4o.

“When we released Grok-0 17 months ago, it basically didn’t know anything. Seventeen months later, it’s as if our child has finally graduated from high school and is ready to go to college,” Tony said. Musk added that AI may one day win awards such as the Turing Award or the Nobel Prize. The high-school analogy may be the more accurate picture of Grok-3’s current math ability, which is roughly that of a student taking college entrance exams.

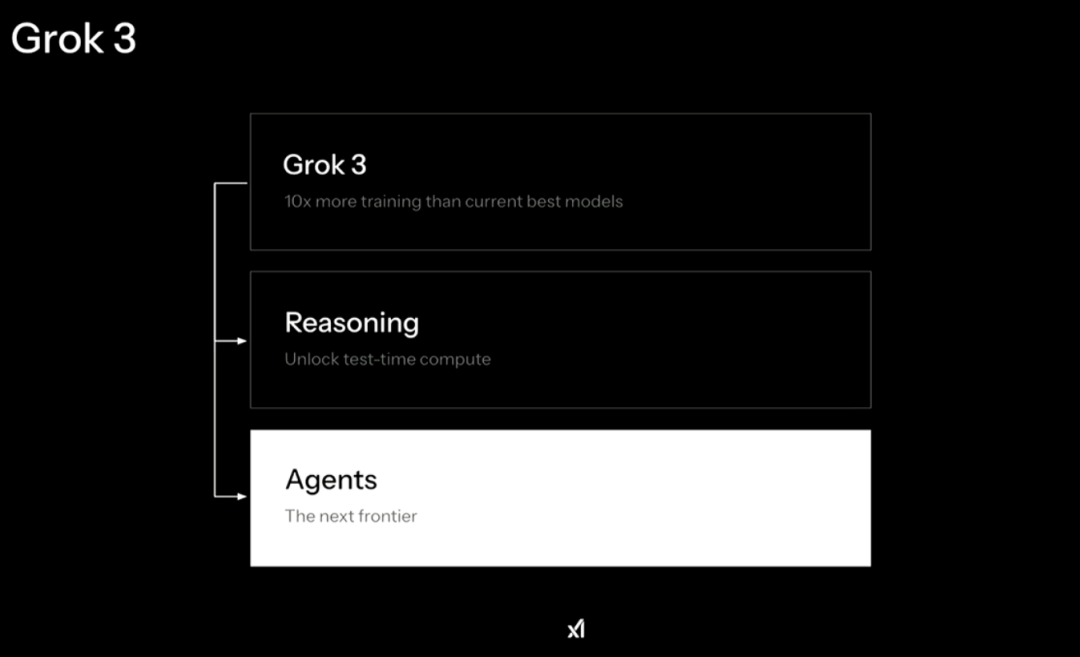

xAI believes that having the strongest pretrained model alone is not enough to build the best AGI. “The best AGI needs to be able to think like humans: criticize itself, verify all its solutions, and reason from first principles,” Igor said.

To that end, xAI combines pretraining with reinforcement learning to draw out the model’s own reasoning abilities. xAI also has an internal “Big Brain” mode that pushes Grok-3 to think harder.

Grok-3 currently comes in two versions: Reasoning Beta and mini. The mini model responds faster while keeping its answer quality close to that of Reasoning Beta.

Grok-3 is xAI’s first step into reasoning models. Although the model is still being refined, with Grok-3 xAI has caught up with the top tier of reasoning models. In the livestream, xAI also named agents as the next step for its model series and launched the DeepSearch product.

The product is aimed mainly at helping engineers, scientists, and programmers with tasks such as editing code. “It’s kind of like a next-generation search engine where you can ask questions,” Paul explained.

The livestream closed with a Q&A session, where xAI addressed open source. As a rule, xAI open-sources its previous-generation model when it officially launches the next one, and during the livestream xAI confirmed that Grok-2 will be open-sourced once Grok-3 is officially released.