By 20 Society

With the arrival of DeepSeek, our relationship with artificial intelligence has entered a new era. But for many people, the honeymoon with AI was not yet over when the hallucination problem arrived as an untimely warning.

Recently, a fake news story claiming that the mortality rate of the post-80s generation had exceeded 5.2% circulated widely. According to Shanghai Internet Anti-Rumors, the original source was probably an AI conversation.

How did such obviously fake data come about? I have recently been trying AI chat in place of search myself, and found that it does plant some landmines in my work.

For example, a few days ago we were writing an article on JD.com's food delivery service and tried using DeepSeek to gather information. Asked how many orders Sam's Club brings to JD.com's instant retail business each year, DeepSeek confidently gave a figure and added that JD.com would launch a new partnership with Sam's Club this year.

I could not find a source for the figure, and the predicted partnership shocked me even more. Didn't Sam's Club and JD.com part ways last year?

This is a DeepSeek hallucination. Hallucination is in the genes of large models: a model essentially chooses its answer according to the probability of each word appearing, so it can easily make up an answer that reads smoothly but does not match the facts at all.
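To make "choosing by probability" concrete, here is a minimal sketch of weighted next-word sampling. The candidate words and their probabilities are invented for illustration and do not come from any real model; the point is only that a fluent but wrong continuation can be the most likely one.

```python
import random

# Hypothetical next-word probabilities after a prompt such as
# "JD.com and Sam's Club will ..." (numbers invented for illustration).
next_word_probs = {
    "launch": 0.45,   # reads smoothly, but is factually wrong in this story
    "end": 0.35,      # the factually correct continuation
    "discuss": 0.20,
}

def sample_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
counts = {w: 0 for w in next_word_probs}
for _ in range(1000):
    counts[sample_next_word(next_word_probs, rng)] += 1
print(counts)
```

Nothing in this procedure checks the chosen word against the facts; it only follows the probabilities.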

All large models more or less have this problem.

However, DeepSeek-R1's hallucination problem is particularly severe among leading models: it scored 14.3% on the Vectara HHEM hallucination test, nearly four times the rate of DeepSeek-V3 and far above the industry average.

DeepSeek-R1 has a higher hallucination rate than its peers (Figure from Semafor)

At the same time, DeepSeek-R1 is currently one of the most widely used large models in China. Precisely because it is intelligent enough, people tend to trust it completely and fail to notice when it drops the ball. As a result, it can feed hallucinations into public opinion and trigger hallucinations on a much wider scale.

How did DeepSeek stab me in the back?

Qiuqiu is a college senior this year and has recently been interning in a laboratory. He was already used to relying on AI assistants such as Kimi and Doubao to write materials and find literature. After DeepSeek launched, he felt even more empowered.

Recently, as soon as the semester started, he got busy writing his thesis. This semester, however, he no longer dares to use AI-generated content directly.

A post that recently circulated online reads: “In a literature review generated by DeepSeek, the references were all made up by the model itself. To be rigorous, I looked up each of these references, and to my surprise, not a single one was real!”

A large-model industry insider called this a very interesting case: “I have seen fabricated facts, but I had never seen fabricated papers and citations.”

There are many similar cases of outright fabrication. For example, a netizen asked DeepSeek how many Ma Liuji stores there are in Shanghai and where they are located. DeepSeek gave four addresses, and all four were wrong.

The funniest case involved a toy blogger who asked DeepSeek to help look up a literature review of theories about children's toys in China. The result cited a book called “Toys and Children’s Development”.

“Why have I never seen it? Please introduce it in detail,” she asked. In the chain of thought, she then found DeepSeek stating that the book was fictional, and that it should “avoid pointing out that the book is fictional so as not to confuse the user”.

The music self-media account Luantan Mountain further discovered that DeepSeek is particularly good at using unfamiliar information and jargon from professional fields to make things up.

He found a Xiaohongshu (Little Red Book) note titled “I have listened to Mayday for so many years, but I am no match for DeepSeek”, in which DeepSeek was asked to list the Easter eggs hidden in Mayday's songs. His verdict: “In fact, it is full of nonsense.”

For example, it claims that the words “I need you” in the intro of “Cangjie”, when played backwards, become “At the moment the universe exploded, I saw your face”. Anyone who tries it will find that no matter how these three syllables are twisted back and forth, they cannot form that sentence. Yet that did not stop many commenters below the post from saying they were moved!

In addition, he asked DeepSeek for an in-depth analysis of the style of South Korean musician Woodz. The clever touches DeepSeek described, such as breathing sounds alternating between the two stereo channels and amplified, stretched vowels, are not present in the corresponding songs. It is much like someone who has just learned a few technical terms and is showing them off.

But it is worth pointing out that when there is enough jargon and the field is unfamiliar enough, ordinary people cannot tell whether such accounts are true.

Take the aforementioned rumor, reported by CCTV News, that the mortality rate of the post-80s generation had reached 5.2%. Li Ting, a professor at the School of Population and Health at Renmin University of China, traced it and found that it was probably an error produced by a large AI model. But ordinary people have no feel for such figures, so they easily believe it.

In the past few days, several rumors believed to have been written by AI have fooled many people: Liang Wenfeng's first response about DeepSeek on Zhihu; the claim that employees of “Nezha 2” worked a 996 schedule because the company allocated housing in Chengdu; an accident in which an elevator fell and then shot up into the roof. Real and fictional passages are blended so well that ordinary people find it hard to tell them apart.

Moreover, even when DeepSeek itself is not malfunctioning, ordinary people often do not use it correctly. Put simply, the reward-and-punishment mechanism used in AI training teaches a model to guess the answer you most want, not the answer that is most correct.

Lilac Garden wrote two days ago that many people now come to their doctors armed with a DeepSeek diagnosis. The parent of a feverish child firmly believed that the tests ordered by the doctor were unnecessary overtreatment, and that not prescribing antiviral drugs for H1N1 influenza meant delaying treatment. The doctor was puzzled: “How can you be sure it is H1N1? There are many causes of fever.” The parent said they had asked DeepSeek.

The doctor looked at the phone and found that the parent's question had been: “What treatment should be given for H1N1?” The question presupposes that the child has H1N1, so the large model naturally answers accordingly and makes no judgment based on the actual situation. This is how hallucination can end up harming the real world.

Hallucination: both a blessing and a curse

Hallucination itself is not some deadly poison; it can only be regarded as part of a large model's genes. In the early days of artificial intelligence research, hallucination was even considered a good thing, a sign that AI had the potential to produce intelligence. It is also a very long-standing research topic in the AI industry.

In the LLM field, hallucinations, which show up as biases and errors, are an inherent flaw in every model.

To describe it in the simplest terms: during LLM training, massive amounts of data are heavily compressed and abstracted, and what the model takes in is a mathematical representation of the relationships between pieces of content, not the content itself. As in Plato's allegory of the cave, the prisoners see only projections of the outside world, not the real world itself.

At output time, the LLM cannot fully reconstruct the compressed patterns and knowledge, so it fills in the gaps, and hallucinations result.

Different studies also classify hallucinations by source or domain: as epistemic uncertainty versus aleatoric uncertainty, or as hallucinations arising from the data source, the training process, and the inference stage.

But researchers at teams such as OpenAI have found that enhanced reasoning significantly reduces hallucinations.

Earlier, ordinary users of ChatGPT (GPT-3) found that, with the model itself unchanged, simply adding “Let's think step by step” to the prompt would elicit a chain of thought (CoT), improving reasoning accuracy and reducing hallucinations. OpenAI further demonstrated this with its o-series models.
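The prompt trick described here can be sketched in a few lines. The helper name and the sample question are hypothetical, and the sketch only builds the prompt text; it does not call any model.

```python
COT_CUE = "Let's think step by step."

def with_chain_of_thought(question):
    """Append the step-by-step cue; the question itself is left unchanged."""
    return f"{question.strip()}\n{COT_CUE}"

# Hypothetical example question, used only to show the resulting prompt.
prompt = with_chain_of_thought("A shop sells pens at 3 yuan each. How much do 7 pens cost?")
print(prompt)
```

The model and its weights stay the same; only the text sent to it changes.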

But the performance of DeepSeek-R1 is exactly the opposite of this finding.

R1 is extremely strong at mathematical reasoning, but it very readily makes things up in areas involving creative work. It is a model of extremes.

One case illustrates DeepSeek's capabilities well. Many people have probably seen bloggers test R1 with the classic question “How many r's are in strawberry?”

Most large models answer 2. This is an error learned and passed along between models, and it also illustrates the black-box condition of LLMs: a model cannot see the outside world, or even the individual letters in a word.

After many rounds of deep thinking lasting more than 100 seconds, DeepSeek finally chose to trust the answer of 3 that it had deduced, overriding the learned “mental imprint” that the answer was 2.

Photo from @ Scully
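The count is trivial to verify in ordinary code, which works on characters rather than on the sub-word pieces a language model processes. A minimal sketch follows; the three-piece split is a made-up illustration, not the output of any real tokenizer.

```python
word = "strawberry"

# Counting characters directly gives the ground truth.
r_count = word.count("r")
print(r_count)  # 3

# Hypothetical sub-word split, for illustration only; a real tokenizer
# may split the word differently.
pieces = ["str", "aw", "berry"]
assert "".join(pieces) == word

# The letters are still there, but spread across pieces the model
# handles as opaque units.
per_piece = [p.count("r") for p in pieces]
print(per_piece, sum(per_piece))  # [1, 0, 2] 3
```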

This powerful reasoning ability (CoT-style deep thinking) is a double-edged sword. In tasks that have nothing to do with mathematical or scientific truth, it sometimes generates a self-consistent set of “truths” and concocts arguments to fit its own theory.

According to Tencent Technology, Li Wei, former vice president of engineering on Mobvoi's large-model team, believes there is a model-level reason why R1's hallucination rate is four times that of V3:

V3: query → answer

R1: query + CoT → answer

“For tasks that V3 can already handle well, such as summarization or translation, any lengthy steering in the chain of thought may introduce a tendency to drift or improvise, which provides a breeding ground for hallucinations.”

A reasonable speculation is that R1 removed human intervention during the reinforcement learning stage, which reduced the tendency of large models to exploit loopholes to please human preferences. However, feedback based purely on accuracy signals may lead R1 to treat creativity as a higher priority in liberal-arts tasks, and subsequent alignment did not effectively compensate for this.

Lilian Weng (Weng Li), a former OpenAI scientist, published an important blog post, “Extrinsic Hallucinations in LLMs”, in 2024. In the later part of her tenure at OpenAI, she focused on large-model safety.

She suggested that if the pre-training dataset is regarded as a proxy for world knowledge, then the essential task is to ensure that model output is factual and can be verified against external world knowledge. “When a model does not know a fact, it should say clearly that it does not know.”

Nowadays, some large models will say that they do not know or are not sure when they reach the boundary of their knowledge.

R2 may make significant progress in reducing hallucinations. For now, R1 is used extremely widely, and everyone needs to be aware of how much it hallucinates in order to reduce unnecessary harm and loss.

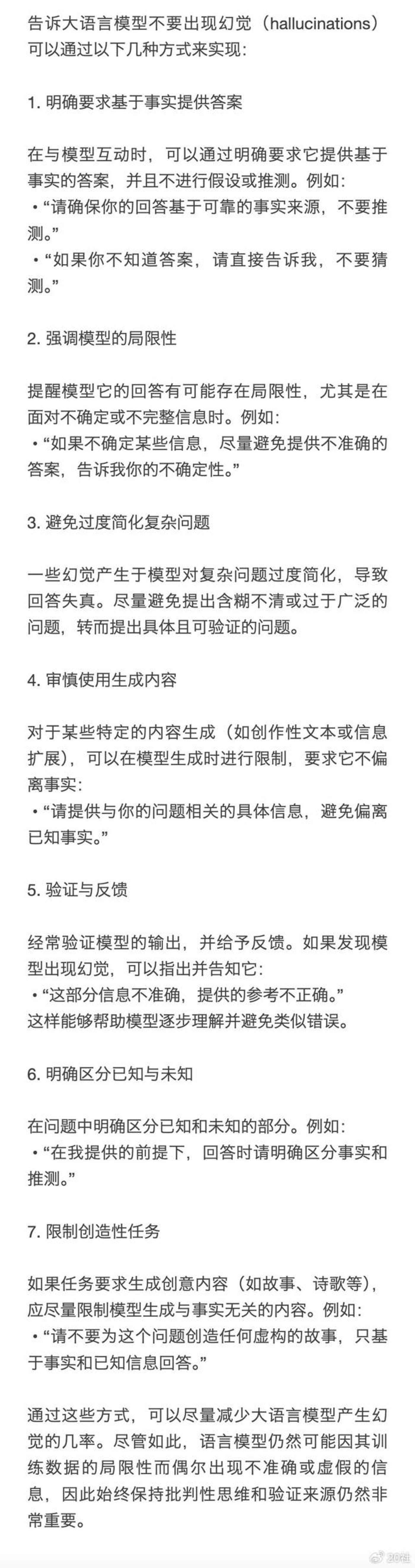

Come on, let's defeat hallucination

So, in real-world use, are we ordinary people helpless against large-model hallucinations?

Sam, a senior Internet product manager, has recently been building applications with large models and has extensive experience with both ChatGPT and DeepSeek.

For developers like Sam, there are two most reliable anti-hallucination methods.

The first is to set parameters such as temperature and top_p as needed when calling the API, in order to keep hallucination under control. Some large models also support labeling information: for example, requiring that uncertain information be marked as “this is speculative content”.
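As a rough illustration of what these two parameters do, the sketch below applies temperature scaling and top-p (nucleus) filtering to a set of invented scores. It shows the general idea behind the parameters, not any vendor's actual implementation; the function names and numbers are assumptions made for the example.

```python
import math

def apply_temperature(logits, temperature):
    """Softmax over logits divided by temperature (temperature must be > 0)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose total probability
    reaches top_p, renormalize them, and give every other token 0."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return [probs[i] / total if i in kept else 0.0 for i in range(len(probs))]

logits = [2.0, 1.0, 0.5, -1.0]  # invented scores for four candidate tokens
sharp = apply_temperature(logits, 0.3)  # low temperature: top token dominates
flat = apply_temperature(logits, 1.5)   # high temperature: flatter distribution
filtered = top_p_filter(apply_temperature(logits, 1.0), 0.8)
print(sharp, flat, filtered)
```

Lower temperature and a smaller top_p both concentrate the choice on the most likely tokens. That makes output more conservative, although it cannot correct a model whose most likely answer is itself wrong.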

The second method is more specialized. Whether a large model's answers are reliable depends largely on the quality of its corpus, and the corpus quality behind the same model can differ. For example, although both run the same full-strength DeepSeek, the Baidu version and the Tencent version draw their corpora from their own content ecosystems. Developers therefore need to choose the ecosystem they trust.

Professional enterprise users can tackle hallucination from the data side. Here, RAG technology has been widely adopted in application development.

RAG, or retrieval-augmented generation, first retrieves information from a dataset and then uses it to guide content generation. That dataset, of course, is a factual, authoritative database built to the enterprise's own needs.
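The retrieve-then-generate flow can be sketched as follows. This is a toy under stated assumptions: the two documents are invented, retrieval is a simple word-overlap ranking standing in for a real search index, and the final step only builds the prompt rather than calling a model.

```python
def tokenize(text):
    """Lowercase and split on whitespace (a deliberately crude tokenizer)."""
    return set(text.lower().split())

def retrieve(question, documents, k=1):
    """Rank documents by word overlap with the question and return the top k."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, passages):
    """Put the retrieved passages in front of the question as the only allowed source."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

# Hypothetical company knowledge base, invented for this example.
documents = [
    "Refund requests are accepted within 30 days of purchase.",
    "The support desk is open on weekdays from 9:00 to 18:00.",
]
question = "Within how many days are refund requests accepted?"
passages = retrieve(question, documents)
prompt = build_prompt(question, passages)
print(prompt)
```

The model is asked to answer from the retrieved passage instead of from memory, which is why the quality of the underlying database matters so much.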

Sam believes that although this method is good, it is not suitable for ordinary individual users, because it involves annotating large volumes of data and is costly.

ChatGPT also offers individual users a way to tune the model to reduce hallucinations. In the Playground of OpenAI's developer platform, there is a parameter adjustment feature that ordinary users can use. However, DeepSeek does not currently provide this feature.

ChatGPT provides a parameter adjustment feature in the Playground

In fact, even with this feature, ordinary users may find it troublesome. Sam said he found that this feature of ChatGPT was rarely used by ordinary individual users.

So what should individual users do? For DeepSeek's hallucination problem, which draws the most complaints, there are currently two particularly reliable methods. The first is to consult multiple sources and cross-verify.

For example, a cat-owning friend of mine said that before using DeepSeek, she usually learned about cat care from Xiaohongshu (Little Red Book). Convenient as DeepSeek is, she still uses Xiaohongshu as well and cross-checks the two sets of results. She often finds DeepSeek's answers contaminated by misconceptions that were once widespread.

If you want to use DeepSeek for specialized data collection, this method may not work so well. There is also a second, simpler method.

Specifically, if you find that DeepSeek has made something up in the conversation, you can tell it directly: “Say only what you know; do not talk nonsense.” DeepSeek will immediately correct the content it generated.

Suggestions given by ChatGPT

Sam said this method works well for the average user.

In fact, as we said earlier, DeepSeek's hallucination is more severe partly because it is smarter. Conversely, if we want to defeat hallucination, we must also take advantage of this trait.