Article source: Xin Zhiyuan

Image source: Generated by AI

These days, every move by DeepSeek team members draws close attention from the AI community.

Recently, CODEI/O, a new work from researchers at DeepSeek, Shanghai Jiao Tong University, and the Hong Kong University of Science and Technology, was strongly recommended by Nathan Lambert of Ai2.

Paper address: arxiv.org/abs/2502.07316

Project homepage: codei-o.github.io/

Lambert said he was very happy to see more papers from DeepSeek team members, not just interesting technical reports. (He also joked that he had really missed them.)

The paper's theme is condensing LLM reasoning patterns through CodeI/O, a method based on predicting code inputs and outputs.

Notably, Junlong Li completed this research during his internship at DeepSeek.

As soon as it was released, netizens began studying it closely. After all, researchers now regard DeepSeek as a GOAT team.

One commenter noted that, beyond the flagship papers, DeepSeek authors have published many others, such as Prover 1.0, ESFT, Fire-Flyer AI-HPC, and DreamCraft 3D. Although these are all intern projects, they are very insightful.

Overcoming LLM reasoning flaws with code

Reasoning is a core ability of LLMs. Previous research has focused on improving narrow areas such as mathematics or code, but LLMs still face challenges in many reasoning tasks.

The reason is that training data for these tasks is sparse and fragmented.

To address this, the research team proposed a new method: CODEI/O.

CODEI/O systematically extracts the varied reasoning patterns embedded in code by transforming the code into an input/output prediction format.

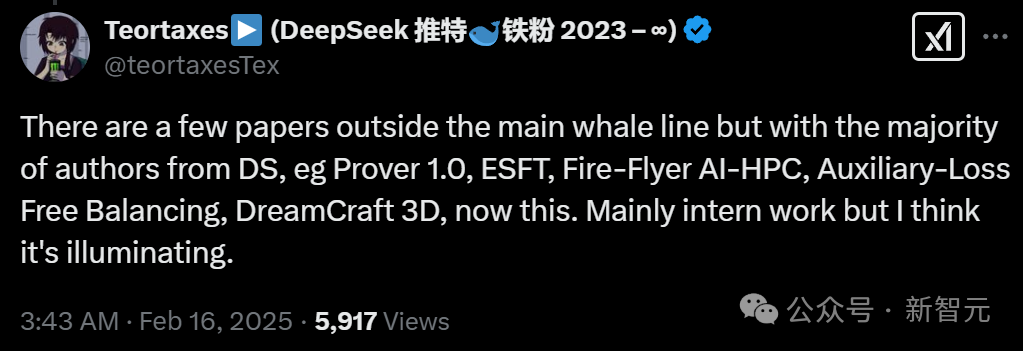

The research team proposed converting raw code files into executable functions and designing a more straightforward task: given a function and its corresponding text query, the model must predict either the execution output for a given input or a feasible input for a given output, reasoning entirely in natural-language chain of thought (CoT).
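To make the two task directions concrete, here is a minimal sketch. The toy function `count_vowels` and both checker names are hypothetical illustrations, not taken from the paper's data.

```python
def count_vowels(text: str) -> int:
    """Toy reference function standing in for a converted code file."""
    return sum(1 for ch in text.lower() if ch in "aeiou")


def check_output_prediction(func, given_input: dict, predicted_output) -> bool:
    """Output prediction: the model sees the input and must state the output."""
    return func(**given_input) == predicted_output


def check_input_prediction(func, predicted_input: dict, target_output) -> bool:
    """Input prediction: any input that reproduces the target output is feasible."""
    return func(**predicted_input) == target_output


# Output prediction: for the input "DeepSeek" the correct answer is 4.
print(check_output_prediction(count_vowels, {"text": "DeepSeek"}, 4))
# Input prediction: many inputs give 2, so "code" is one acceptable answer.
print(check_input_prediction(count_vowels, {"text": "code"}, 2))
```

Note the asymmetry: output prediction has a single correct answer, while input prediction accepts any input that the function maps to the target output.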

This method frees the core reasoning process from code-specific syntax while retaining logical rigor. Because the functions are collected and transformed from diverse sources, the generated data covers a variety of basic reasoning skills, such as logic flow planning, state space exploration, recursive decomposition, and decision-making.

Experimental results show that CODEI/O achieves consistent performance improvements in tasks such as symbolic reasoning, scientific reasoning, logical reasoning, mathematical and numerical reasoning, and common sense reasoning.

Figure 1 below outlines the training data construction process for CODEI/O. The process begins with collecting raw code files and ends with assembling a complete training data set.

Breaking down the CODEI/O architecture

Collect raw code files

The effectiveness of CODEI/O lies in the selection of diverse original code sources to cover a wide range of reasoning patterns.

The main code sources include:

- CodeMix: A large collection of raw Python code files retrieved from an internal code pre-training corpus, filtered to remove files that are too simple or too complex.

- PyEdu-R (reasoning): A subset of Python-Edu that focuses on complex reasoning tasks such as STEM, system modeling, or logic puzzles, and excludes purely algorithm-centric files.

- Other high-quality code files: Drawn from a variety of small, reputable sources, including comprehensive algorithm repositories, challenging math problems, and well-known online coding platforms.

Combined, these sources yield approximately 810.5K code files.

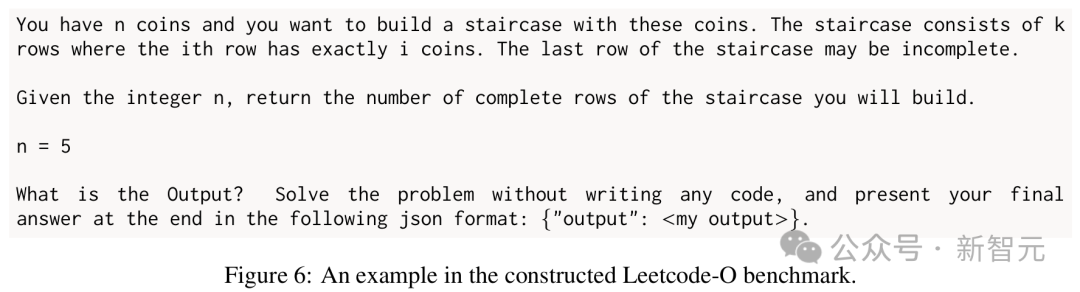

An example from the constructed LeetCode-O benchmark

Convert to unified format

The collected raw code files are often messy, contain redundant content, and are difficult to execute independently.

The team used DeepSeek-V2.5 to preprocess the raw code files, refining them into a unified format that emphasizes the main logical functionality and makes the code executable, so that input-output pairs can be collected.

By cleaning and refactoring the code, the research team extracted the core logic into functions, eliminated unnecessary elements, and then added a main entry point function that summarizes the overall logic of the code.

This function can call other functions or import external libraries, and it must take non-empty arguments (inputs) and return a meaningful output. All inputs and outputs need to be JSON serializable for further processing.

The inputs and outputs of the main entry point function must be clearly defined, including information such as data types, constraints (e.g., output range), or more complex requirements (e.g., keys in a dictionary).

Next, instead of generating test cases directly, a separate rule-based Python input generator function is created. This generator returns non-trivial inputs that follow the requirements of the main entry point function. Randomness is applied under constraints to make data generation scalable.

Finally, a concise problem statement is generated based on the main entry point function as a query describing the expected functionality of the code.
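As an illustration of what such a unified format might look like, here is a minimal sketch with its four pieces: cleaned helper logic, a main entry point, an input generator, and a query. The prime-counting task and the names `main_solution`, `input_generator`, and `QUERY` are assumptions made for this example, not the paper's actual template.

```python
import json
import random

# Query: a concise problem statement describing the expected functionality.
QUERY = "Given an integer limit, count the primes up to it and report the largest."


def is_prime(n: int) -> bool:
    """Helper function holding part of the core logic."""
    if n < 2:
        return False
    return all(n % d for d in range(2, int(n ** 0.5) + 1))


def main_solution(limit: int) -> dict:
    """Main entry point with JSON-serializable input and output.

    Input:  limit (int), constrained to 2 <= limit <= 1000
    Output: dict with keys "count" (int) and "largest" (int)
    """
    primes = [n for n in range(2, limit + 1) if is_prime(n)]
    return {"count": len(primes), "largest": primes[-1]}


def input_generator(rng: random.Random) -> dict:
    """Rule-based generator: random, but always within the stated constraints."""
    return {"limit": rng.randint(2, 1000)}


rng = random.Random(0)
sample_input = input_generator(rng)
sample_output = main_solution(**sample_input)
# Both sides must survive JSON serialization for later processing.
serialized = json.dumps({"input": sample_input, "output": sample_output})
```

Because the generator is a function rather than a fixed list of test cases, calling it repeatedly yields as many valid inputs as needed.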

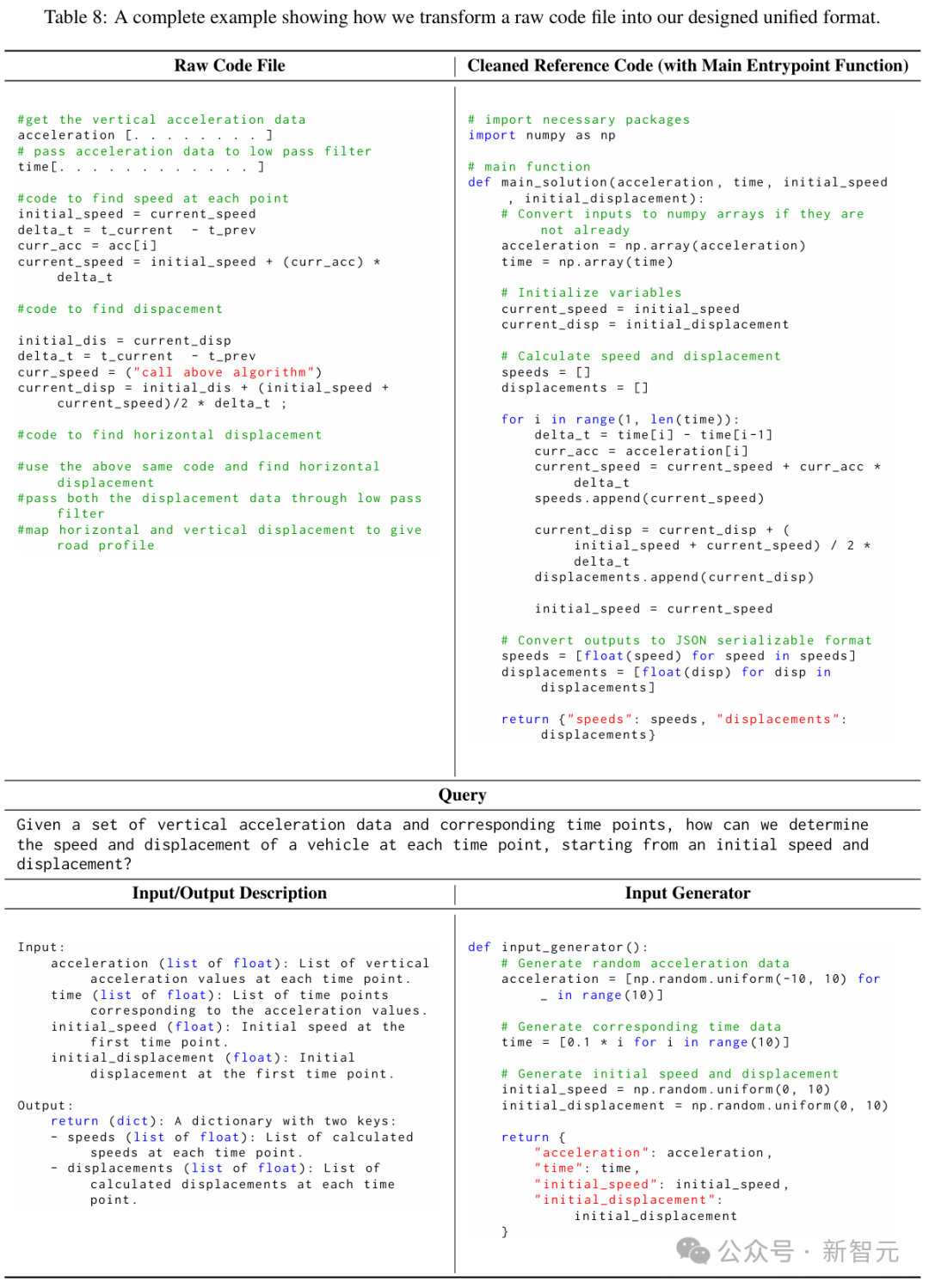

An example of converting a raw code file into the required unified format

Collect input and output pairs

After converting the collected raw code files into a unified format, an input generator is used to sample multiple inputs for each function, and the corresponding output is obtained by executing the code.

To ensure that outputs are deterministic, all functions that contain randomness are skipped. During execution, the research team also imposed limits on runtime and on the complexity of input/output objects.

After filtering out non-executable code, samples that exceeded the runtime limit, and input-output pairs that exceeded the allowed complexity, 3.5M instances derived from 454.9K raw code files remained. Input prediction and output prediction examples are roughly balanced, each accounting for about 50%.
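The collection step can be sketched roughly as follows. This is an illustration under stated assumptions: the sum-of-squares function is hypothetical, determinism is approximated by running the function twice (the article says functions containing randomness are skipped outright), object complexity is approximated by serialized length, and the runtime limit is omitted for brevity.

```python
import json
import random


def main_solution(values: list) -> int:
    """Hypothetical converted function: sum of squares."""
    return sum(v * v for v in values)


def input_generator(rng: random.Random) -> dict:
    """Hypothetical generator: 1 to 5 small integers."""
    return {"values": [rng.randint(-9, 9) for _ in range(rng.randint(1, 5))]}


def collect_io_pairs(func, gen, n_samples=6, max_chars=200, seed=0):
    """Sample inputs, execute the code, and keep only well-behaved pairs."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_samples):
        kwargs = gen(rng)
        try:
            first, second = func(**kwargs), func(**kwargs)
            payload = json.dumps({"input": kwargs, "output": first})
        except Exception:
            continue  # non-executable, or not JSON serializable
        if first != second:
            continue  # output is not deterministic
        if len(payload) > max_chars:
            continue  # input/output object is too complex
        pairs.append({"input": kwargs, "output": first})
    return pairs


pairs = collect_io_pairs(main_solution, input_generator)
```

Each surviving pair can then serve as an output prediction example (input given) or an input prediction example (output given).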

Build input and output prediction samples

Once the input-output pairs and converted functions are collected, they need to be combined into a trainable format.

The research team used supervised fine-tuning, in which each training sample requires a prompt and a response. Since the goal is the input-output prediction task, the team used designed templates to combine functions, queries, reference code, and specific inputs or outputs into prompts.

Ideally, the response should be a natural language CoT used to reason how to get the correct output or feasible input.
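A prompt builder along these lines might look like the sketch below. The template wording, field names, and `build_prompt` helper are assumptions for illustration; the article does not reproduce the actual templates.

```python
import json

# Hypothetical template; the real wording is not given in the article.
TEMPLATE = (
    "Problem: {query}\n\n"
    "Reference code:\n{code}\n\n"
    "{given_label}: {given}\n"
    "Predict {target} and explain your reasoning step by step."
)


def build_prompt(query: str, code: str, pair: dict, direction: str) -> str:
    """Build a prompt for 'output' prediction or 'input' prediction."""
    if direction == "output":
        given_label, given, target = "Input", pair["input"], "the output"
    elif direction == "input":
        given_label, given, target = "Output", pair["output"], "a feasible input"
    else:
        raise ValueError("direction must be 'output' or 'input'")
    return TEMPLATE.format(
        query=query,
        code=code,
        given_label=given_label,
        given=json.dumps(given),
        target=target,
    )


pair = {"input": {"x": 2}, "output": 4}
prompt = build_prompt("Double x.", "def f(x):\n    return 2 * x", pair, "output")
```

The same pair yields two different training prompts depending on which side is revealed.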

The research team mainly used the following two methods to build the required CoT response.

·Direct prompt (CODEI/O)

DeepSeek-V2.5 is used to synthesize all the required responses, since it offers top-tier performance at very low cost. The dataset generated this way is called CODEI/O.

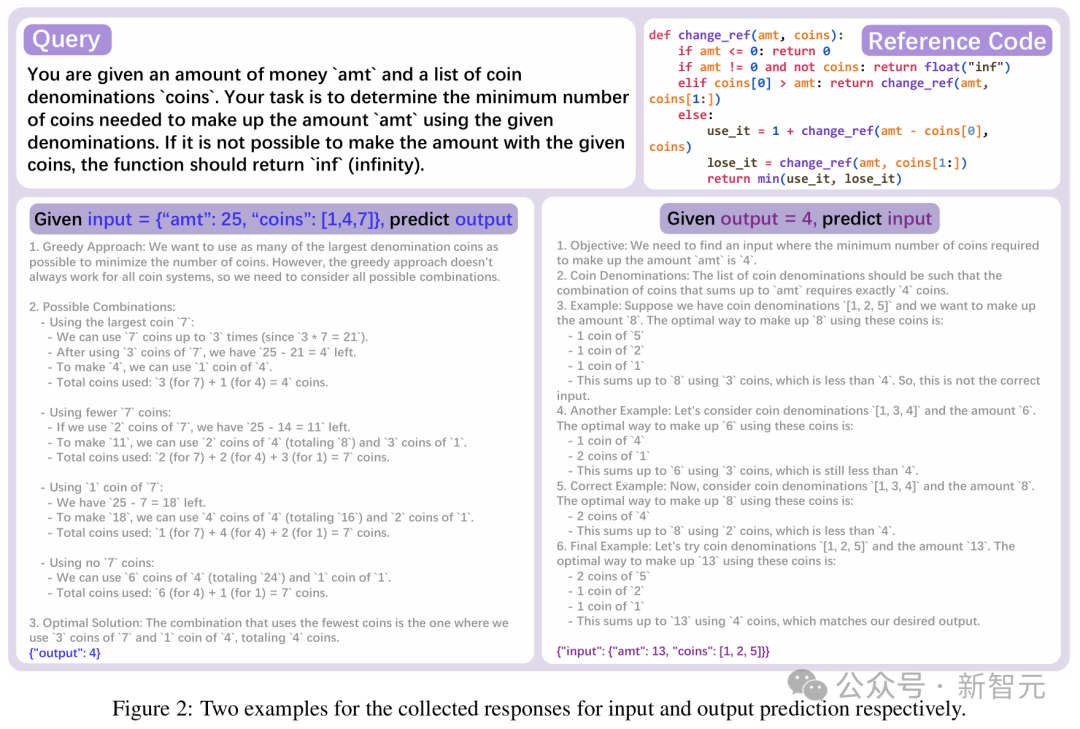

Figure 2 below shows two examples of input and output predictions in the CODEI/O dataset. In both cases, the model needs to give the reasoning process in the form of a natural language chain of thought (CoT).

·Make full use of code (CODEI/O++)

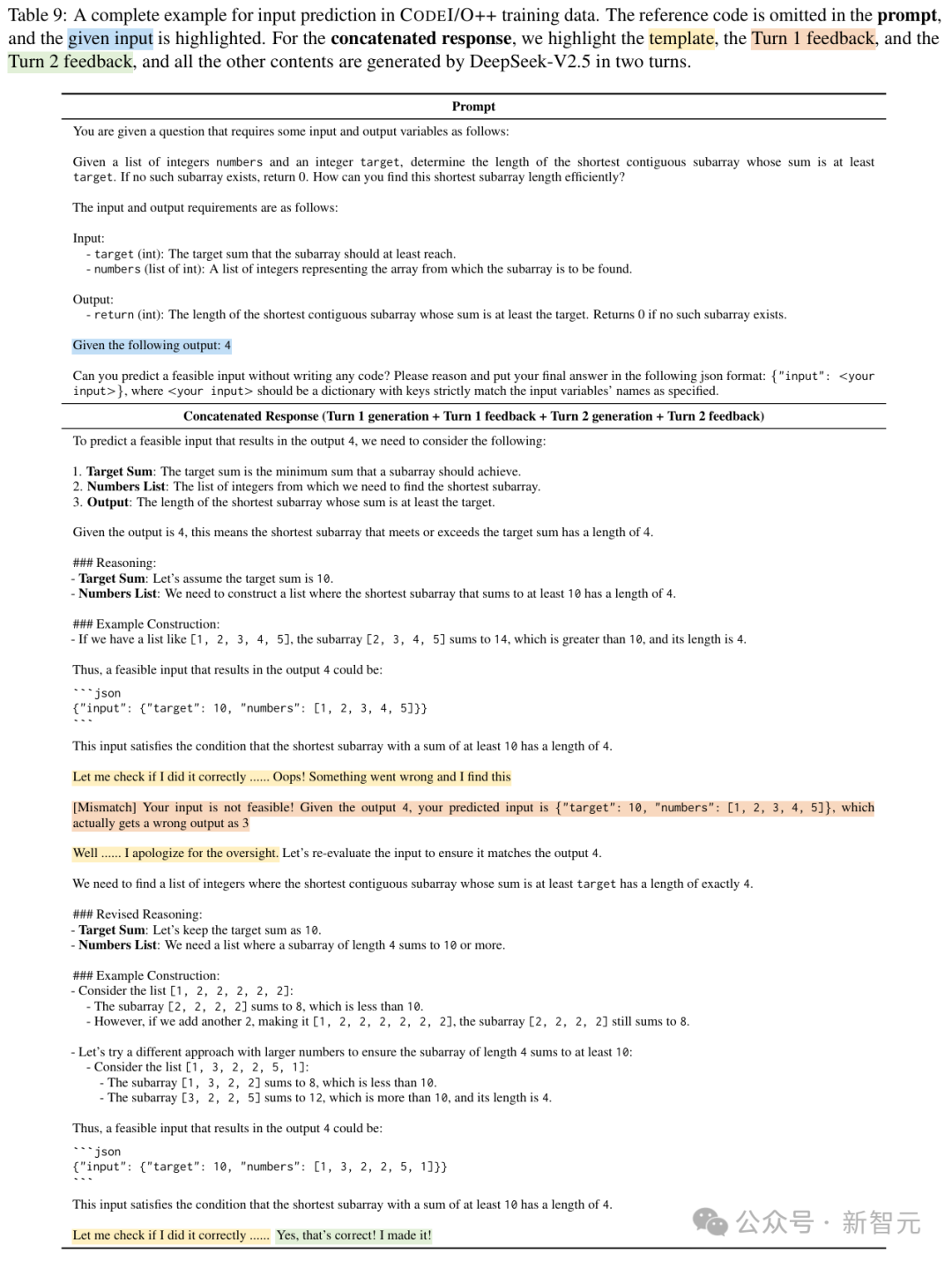

Because the reference code is executable, every predicted response can be checked by running the code. For responses with incorrect predictions, the feedback is appended as a second-turn input message and DeepSeek-V2.5 is asked to regenerate a response. The final response concatenates all four components: first-turn response + first-turn feedback + second-turn response + second-turn feedback. The researchers call the dataset collected in this way CODEI/O++.
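The revision step can be sketched as below. This is an illustration, not the authors' pipeline: `regenerate` is a hypothetical stand-in for the model call, the feedback strings are invented, and stopping after a correct first-turn response is an assumption of this sketch.

```python
from typing import Any, Callable, Tuple


def feedback(func: Callable, direction: str, pair: dict, prediction: Any) -> Tuple[bool, str]:
    """Check a prediction against the executed reference code."""
    try:
        if direction == "output":
            ok = prediction == pair["output"]
        else:  # input prediction: run the function on the proposed input
            ok = func(**prediction) == pair["output"]
    except Exception as exc:
        return False, f"Execution error: {exc}"
    return ok, ("Success" if ok else "Mismatch with the executed result")


def build_response(
    func: Callable,
    direction: str,
    pair: dict,
    first: Tuple[str, Any],
    regenerate: Callable[[str], Tuple[str, Any]],
) -> str:
    """Concatenate response and feedback, with one revision turn if needed."""
    text1, pred1 = first
    ok1, fb1 = feedback(func, direction, pair, pred1)
    if ok1:
        return "\n".join([text1, fb1])  # assumption: no second turn needed
    text2, pred2 = regenerate(fb1)  # hypothetical model call
    _, fb2 = feedback(func, direction, pair, pred2)
    return "\n".join([text1, fb1, text2, fb2])


def double(x: int) -> int:
    return 2 * x


sample = build_response(
    double,
    "output",
    {"input": {"x": 3}, "output": 6},
    ("I think the answer is 5.", 5),
    lambda fb: ("On reflection, 2 * 3 = 6.", 6),
)
```

Note that the second-turn response is kept even if it is still wrong, matching the four-component concatenation described above.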

A complete training sample in CODEI/O++

A framework that bridges the gap between code reasoning and natural language

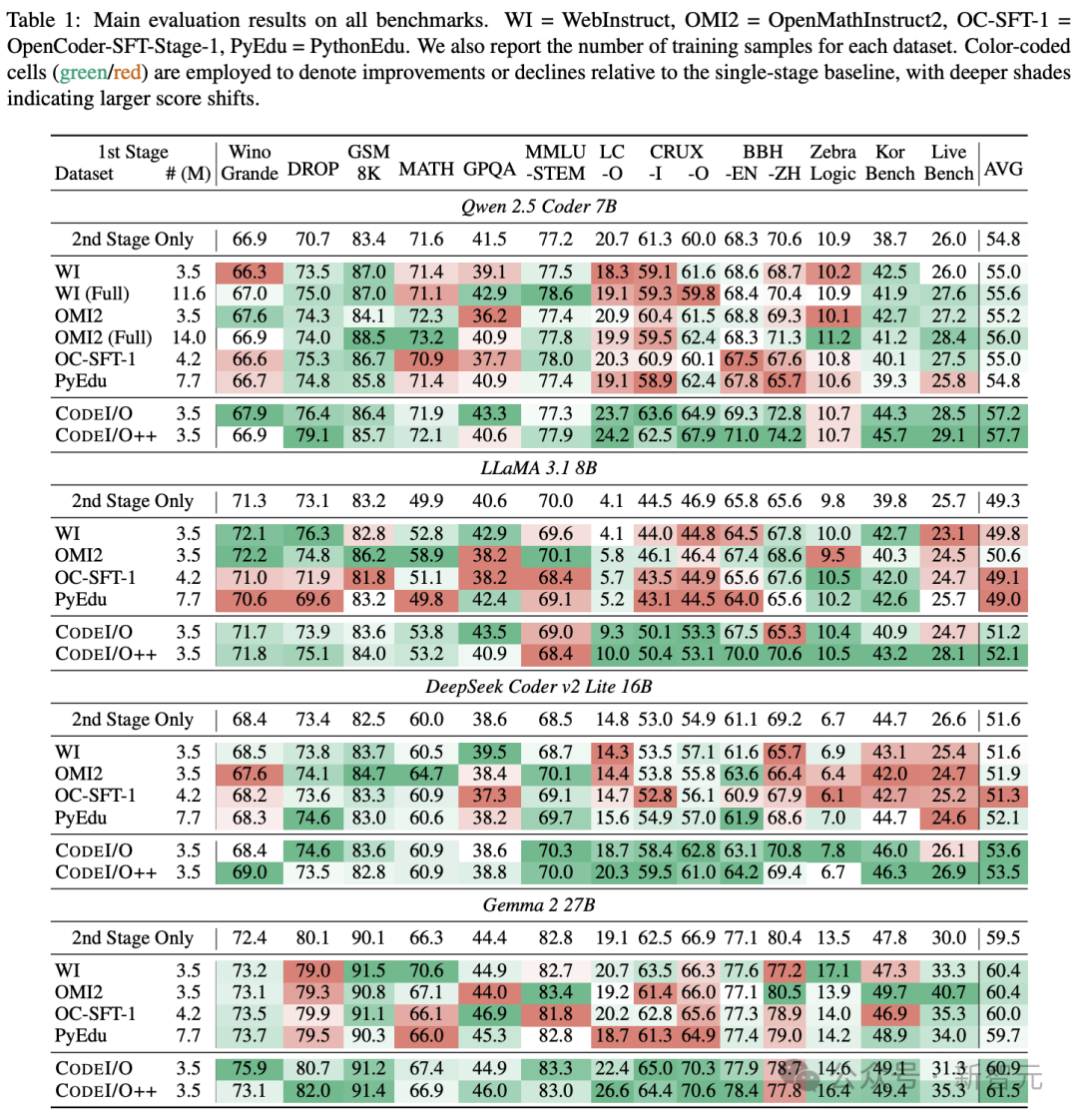

Table 1 below shows the main evaluation results for Qwen 2.5 7B Coder, DeepSeek v2 Lite Coder, LLaMA 3.1 8B, and Gemma 2 27B.

CODEI/O improved model performance across all benchmarks, outperforming both the single-stage baseline and the other datasets (even larger ones).

Competing datasets such as OpenMathInstruct2 perform well on certain math-specific tasks but regress on others (a mix of green and red cells).

CODEI/O, by contrast, shows consistent improvement (green cells).

Although it uses only code-centric data, it improves code reasoning while also enhancing performance on all other tasks.

The researchers also observed that training on raw code files (PythonEdu) brought only minor improvements, and sometimes even negative effects, compared to the single-stage baseline.

This falls well short of CODEI/O, which suggests that learning from such poorly structured data is sub-optimal.

It further shows that performance gains depend not only on data size but, more importantly, on carefully designed training tasks.

These tasks embed diverse, structured reasoning patterns in generalized chains of thought.

In addition, CODEI/O++ systematically surpasses CODEI/O, improving average scores without affecting individual task performance.

This highlights that multiple rounds of revisions based on execution feedback can improve data quality and enhance cross-domain reasoning capabilities.

Most importantly, both CODEI/O and CODEI/O++ demonstrate universal effectiveness across model scales and architectures.

This further validates the experimental training method (predicting code inputs and outputs), allowing the model to perform well in a variety of reasoning tasks without sacrificing professional benchmark performance.

In order to further study the impact of different key aspects of the new method, the researchers conducted multiple sets of analytical experiments.

All experiments were conducted with the Qwen 2.5 Coder 7B model, and the reported results were obtained after the second stage of general instruction tuning.

Ablation experiments

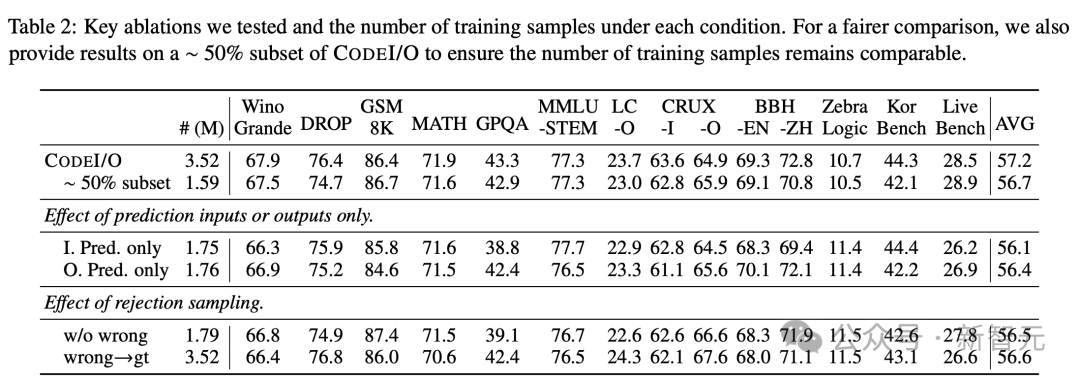

The research team first conducted two key ablation studies on the data construction process, and the results are shown in Table 2 below.

Input/output prediction

The authors studied input prediction and output prediction by training on each separately.

The overall scores were similar, but input prediction performed well on KorBench while slightly hurting GPQA, and output prediction showed greater advantages on symbolic reasoning tasks such as BBH. CRUXEval-I and CRUXEval-O favor input and output prediction, respectively.

Rejection sampling

They also explored using rejection sampling to filter out incorrect responses, which removed 50% of the training data. However, this caused a widespread performance drop, indicating a possible loss of data diversity.

The authors also tried replacing all incorrect responses with the correct answers obtained through code execution (without a chain of thought).

This approach does bring improvements on benchmarks such as LeetCode-O and CRUXEval-O, which are designed to measure output prediction accuracy, but it lowers scores elsewhere, reducing average performance.

Even when compared with training on a roughly 50% subset of CODEI/O with a comparable number of samples, these two methods still show no advantage.

Therefore, in order to maintain a balance of performance, the researchers retained all incorrect responses in the main experiment without making any modifications.
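The three data-handling options compared here can be sketched as simple filters. The sample fields (`response`, `correct`, `ground_truth`) are hypothetical names chosen for this illustration.

```python
def keep_all(samples: list) -> list:
    """Main-experiment choice: keep every response, correct or not."""
    return list(samples)


def reject_incorrect(samples: list) -> list:
    """Rejection sampling: drop responses whose prediction was wrong."""
    return [s for s in samples if s["correct"]]


def replace_with_answer(samples: list) -> list:
    """Swap each incorrect response for the executed answer, with no CoT."""
    return [
        s if s["correct"] else {**s, "response": s["ground_truth"], "correct": True}
        for s in samples
    ]


samples = [
    {"response": "Step by step ... so the output is 6", "correct": True, "ground_truth": "6"},
    {"response": "Step by step ... so the output is 5", "correct": False, "ground_truth": "6"},
]
kept = keep_all(samples)
filtered = reject_incorrect(samples)
replaced = replace_with_answer(samples)
```

The second option shrinks the dataset, and the third keeps its size but strips the reasoning from the repaired samples, which is consistent with the trade-offs reported above.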

Effects of different synthetic models

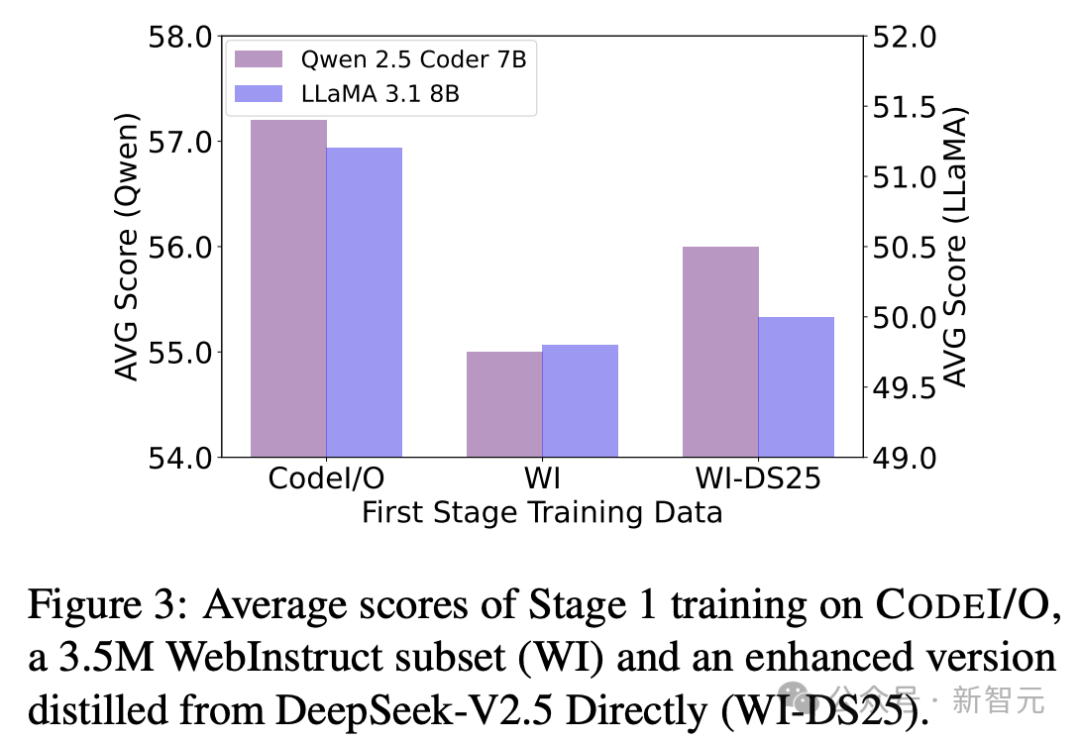

To study the effects of different comprehensive models, the author used DeepSeek-V2.5 to regenerate responses to 3.5 million WebInstruct datasets, creating an updated dataset called WebInstruct-DS25.

As shown in Figure 3, although WebInstruct-DS25 performed better than the original dataset on Qwen 2.5 Coder 7B and LLaMA 3.18B, it still failed to match CODEI/O.

This highlights the value of diverse reasoning patterns in code and the importance of task selection in training.

Overall, this comparison shows that predicting the inputs and outputs of code improves reasoning capabilities, rather than just distilling knowledge from high-level models.

Scaling effect of CODEI/O

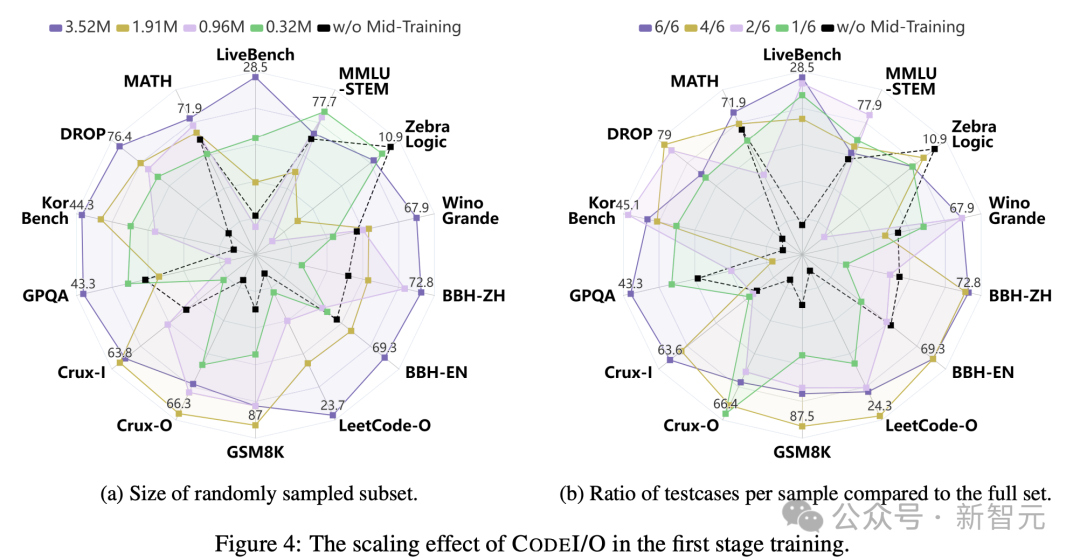

The researchers also evaluated the performance of CODEI/O under different amounts of training data.

With training examples sampled at random, Figure 4a reveals a clear trend: more training samples generally lead to better performance across benchmarks.

Specifically, using the smallest amount of data performs relatively poorly on most benchmarks, because the model lacks enough training to generalize effectively.

In contrast, CODEI/O achieves the most comprehensive and robust performance when training on a complete data set.

A moderate amount of data produces results somewhere between these two extremes, showing gradual improvement as the training samples increase. This highlights the scalability and effectiveness of CODEI/O in improving reasoning capabilities.

In addition, they scaled the data along the dimension of input-output pairs: all unique raw code samples were kept, while the number of input-output prediction instances per sample was varied.

Figure 4b shows the proportion of I/O pairs used relative to the full set.

Although the scaling effect is not as pronounced as when scaling training samples, clear benefits can still be observed, especially when increasing from 1/6 to 6/6.

This suggests that some reasoning patterns require multiple test cases for the model to fully capture and learn their complex logical flow.
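This second scaling axis can be sketched as per-function subsampling. The helper name, the dictionary layout, and the rounding-up rule are assumptions of this illustration; the article only states that every raw code sample is kept while the share of I/O pairs varies.

```python
import random


def subsample_pairs(pairs_by_function: dict, numerator: int, denominator: int = 6, seed: int = 0) -> dict:
    """Keep every function, but only numerator/denominator of its I/O pairs."""
    rng = random.Random(seed)
    out = {}
    for name, pairs in pairs_by_function.items():
        # Ceiling division, so a function with pairs never drops to zero.
        keep = -(-len(pairs) * numerator // denominator)
        out[name] = rng.sample(pairs, keep)
    return out


data = {"f": list(range(6)), "g": list(range(3))}
one_sixth = subsample_pairs(data, 1)
full = subsample_pairs(data, 6)
```

Contrast this with the first axis (Figure 4a), where whole training examples are sampled at random without regard to which function they came from.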

different data formats

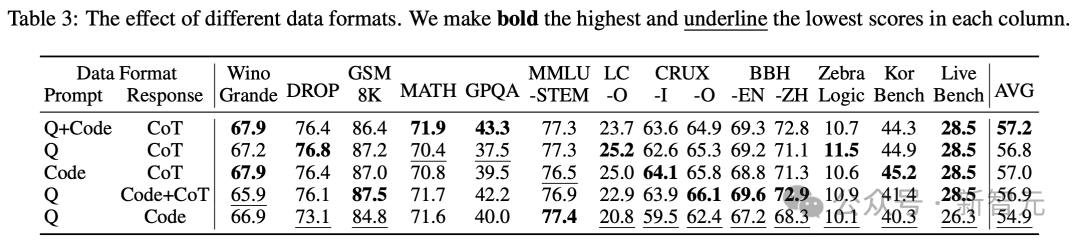

In this part, we mainly study how to best arrange queries, reference codes, and thought chains (CoT) in training samples.

As shown in Table 3, placing queries and reference codes in prompts, and thinking chains in responses can achieve the highest average scores and the most balanced performance across benchmark tests.

Results in other formats show slightly lower but comparable performance, with the worst results occurring when the query is placed in the prompt and the reference code is placed in the response.

This is similar to a standard code generation task, but with far fewer training samples.

This highlights the importance of thought chains and test case size for learning transferable reasoning capabilities.
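The arrangements compared here differ only in which pieces go into the prompt and which into the response. A minimal sketch, with a hypothetical `arrange` helper and placeholder strings:

```python
def arrange(query: str, code: str, cot: str, prompt_fields: list, response_fields: list) -> dict:
    """Place the named pieces into the prompt or the response."""
    parts = {"query": query, "code": code, "cot": cot}
    return {
        "prompt": "\n\n".join(parts[f] for f in prompt_fields),
        "response": "\n\n".join(parts[f] for f in response_fields),
    }


query, code, cot = "QUERY", "CODE", "COT"
# Best-scoring format: query and reference code in the prompt, CoT in the response.
best = arrange(query, code, cot, ["query", "code"], ["cot"])
# Worst-scoring format: query in the prompt, reference code in the response.
worst = arrange(query, code, cot, ["query"], ["code"])
```

In the worst case the response contains no CoT at all, which is why it resembles plain code generation.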

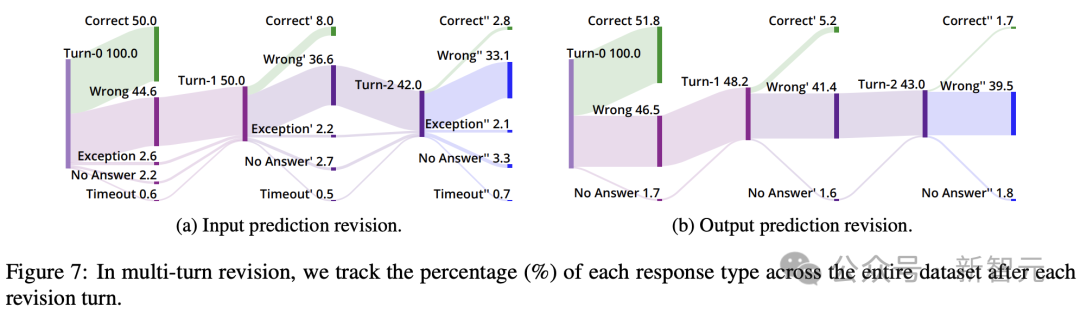

Multiple iterations

Building on CODEI/O (no revision) and CODEI/O++ (a single round of revision), the researchers extended revision to a second round, regenerating predictions for instances that remained incorrect after the first round, and evaluated whether this brought further improvement.

Figure 7 below visualizes the distribution of response types in each round.

The results showed that most correct responses were predicted in the initial round, and about 10% of erroneous responses were corrected in the first round of revision.

However, the second round produced significantly fewer corrections. On examining cases, the authors found that the model often repeated the same erroneous CoT without adding new useful information.

After integrating multiple rounds of revision, they observed in Figure 5 a consistent improvement from round 0 to round 1, but only a small gain from round 1 to round 2: a slight improvement for LLaMA 3.1 8B, but a performance decline for Qwen 2.5 Coder 7B.

Therefore, in the main experiment, the researchers stopped at a single round of revision, namely CODEI/O++.

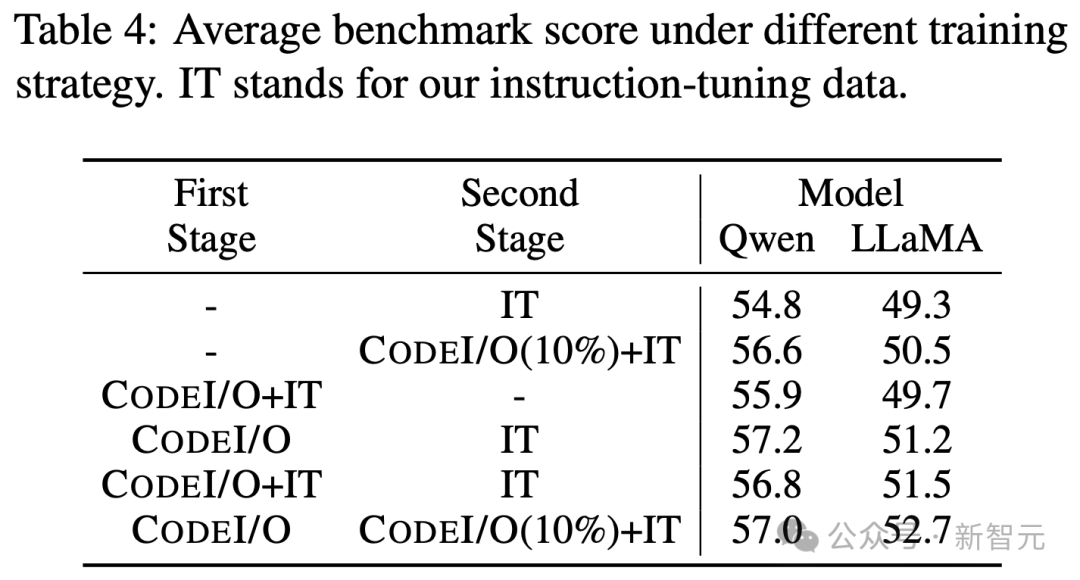

The need for two-stage training

Finally, the researchers demonstrated the need for a separate training stage on CODEI/O data by comparing single-stage mixed training with two-stage training using different data mixtures.

As shown in Table 4, all two-stage variants performed better than single-stage training.

At the same time, the effect of mixing data across the two stages varied between models.

For Qwen 2.5 Coder 7B, the best result comes from fully separating CODEI/O and instruction-tuning data, while LLaMA 3.1 8B performs better with mixed data, whether in the first or the second stage.

The authors

Junlong Li

Junlong Li is a third-year master's student in computer science at Shanghai Jiao Tong University, advised by Professor Hai Zhao.

Previously, he received a bachelor's degree in computer science from the IEEE pilot class in 2022.

He served as a research intern in the NLC group of Microsoft Research Asia (MSRA). Under the guidance of Dr. Lei Cui, he worked on a number of research topics related to Document AI, including web page understanding and foundation models for document images.

From May 2023 to February 2024, he worked closely with Professor Pengfei Liu of GAIR to mainly study issues such as the evaluation and alignment of LLMs.

Currently, he is engaged in relevant research under the guidance of Professor Junxian He.

Daya Guo

Daya Guo pursued his doctorate in a joint program between Sun Yat-sen University and Microsoft Research Asia, co-supervised by Professor Jian Yin and Dr. Ming Zhou. He currently serves as a researcher at DeepSeek.

From 2014 to 2018, he studied for his bachelor's degree in computer science at Sun Yat-sen University. From 2017 to 2023, he served as a research intern at Microsoft Research Asia.

His research focuses on natural language processing and code intelligence, aiming to enable computers to intelligently process, understand and generate natural and programming languages. The long-term research goal is to promote the development of AGI, thereby revolutionizing the way computers interact with humans and improving their ability to handle complex tasks.

Currently, his research interests include: (1) Large Language Models; and (2) Code Intelligence.

Runxin Xu (Xu Runxin)

Runxin Xu is a research scientist at DeepSeek and has been deeply involved in the development of the DeepSeek series of models, including DeepSeek-R1, DeepSeek V1/V2/V3, DeepSeek Math, DeepSeek Coder, DeepSeek MoE, etc.

Previously, he received a master’s degree from the School of Information Science and Technology of Peking University, under the supervision of Dr. Baobao Chang and Dr. Zhifang Sui, and completed his undergraduate studies at Shanghai Jiao Tong University.

His research interests are mainly focused on AGI and are committed to continuously advancing the boundaries of AI intelligence through scalable and efficient methods.

Yu Wu (Wu Yu)

Yu Wu is currently a member of the technical staff at DeepSeek, where he leads the LLM alignment team.

He has been deeply involved in the development of the DeepSeek series of models, including DeepSeek V1, V2, V3, R1, DeepSeek Coder and DeepSeek Math.

Prior to this, he was a senior researcher in the Natural Language Computing Group of Microsoft Research Asia (MSRA).

He received his bachelor's and doctoral degrees from Beihang University, where he was advised by Professors Ming Zhou and Zhoujun Li.

He has an extensive research record, including more than 80 papers published at top conferences and journals such as ACL, EMNLP, NeurIPS, AAAI, IJCAI, and ICML.

He has also released several influential open source models, including WavLM, VALL-E, DeepSeek Coder, DeepSeek V3, and DeepSeek R1.

Junxian He (He Junxian)

Junxian He is currently a tenure-track assistant professor in the Department of Computer Science and Engineering at the Hong Kong University of Science and Technology.

He received his doctorate in 2022 from the Language Technologies Institute at Carnegie Mellon University, co-supervised by Graham Neubig and Taylor Berg-Kirkpatrick. He also received a bachelor's degree in electronic engineering from Shanghai Jiao Tong University in 2017.

Previously, Junxian He also spent time at Facebook AI Research (2019) and Salesforce Research (2020).

References:

https://codei-o.github.io/