By GeeTech

In the summer of 1956, Dartmouth College in New Hampshire hosted an unusual academic gathering. When mathematics professor John McCarthy first wrote the term “Artificial Intelligence” in his proposal for the meeting, he probably did not expect that a discussion originally planned to solve the problem of machines simulating intelligence within two months would instead start a century-long cognitive revolution.

Wu Yongming, CEO of Alibaba Group, spoke forcefully on the company’s earnings call, as if he had foreseen a historic turning point: “Once AGI is truly realized, the industry it spawns is likely to be the largest in the world, and it may profoundly affect, or even partially replace, nearly half of the current global economy.”

Caught between excitement and worry, people are learning to accept and embrace artificial intelligence while nervously speculating about when Artificial General Intelligence (AGI) will arrive. Yet the large language model, the protagonist of the current AI boom, may be only a pathfinder: it is still far from real AGI and may not even be the right path to it. So people cannot help but wonder: how far are we from realizing real AGI?

What is the starting point of AGI?

The term Artificial General Intelligence first appeared in an article on military technology published in 1997 by Mark Gubrud, a physicist at the University of North Carolina. He defined AGI as an AI system that rivals or surpasses the human brain in complexity and speed, that can acquire general knowledge and operate and reason with it, and that can take the place of human intelligence in any industrial or military activity.

AGI has long been regarded as the holy grail of artificial intelligence: machines that can learn, reason and adapt to complex environments independently across many tasks, just as humans do. AI technology has advanced rapidly in recent years, from GPT-4’s dialogue capabilities to Sora’s video generation, but several gaps still separate it from AGI.

The core of AI is to translate real-world phenomena into mathematical models and to use language so that machines can grasp the relationship between the real world and data. AGI goes one step further: AI is no longer limited to a single task but can learn and transfer knowledge across domains, which makes it far more general.

Measured against these characteristics, current AI systems fall short. Although they surpass humans in specific tasks such as text generation and image recognition, they are still essentially advanced imitators: they cannot perceive the physical world or make autonomous decisions, and so they do not meet the requirements of AGI.

First, large models are limited in the tasks they can handle. They work in the textual domain and cannot interact with the physical and social environment. Models like ChatGPT and DeepSeek therefore cannot truly understand the meaning of language, because they have no body with which to experience physical space.

Second, large models are not autonomous. Humans must define each task for them in detail, much as a parrot can only repeat the words it has been trained on. Truly autonomous intelligence should resemble that of a crow, which can complete tasks on its own in ways smarter than today’s AI. Current AI systems do not yet have this potential.

Third, although ChatGPT has been trained at large scale on diverse text corpora, including texts that carry human values, it cannot understand those values or stay consistent with them. In other words, it lacks a moral compass.

None of this stops technology giants from championing large models. Companies such as OpenAI and Google regard the large model as a key step towards AGI, and OpenAI CEO Sam Altman has said many times that the GPT models are an important breakthrough in that direction.

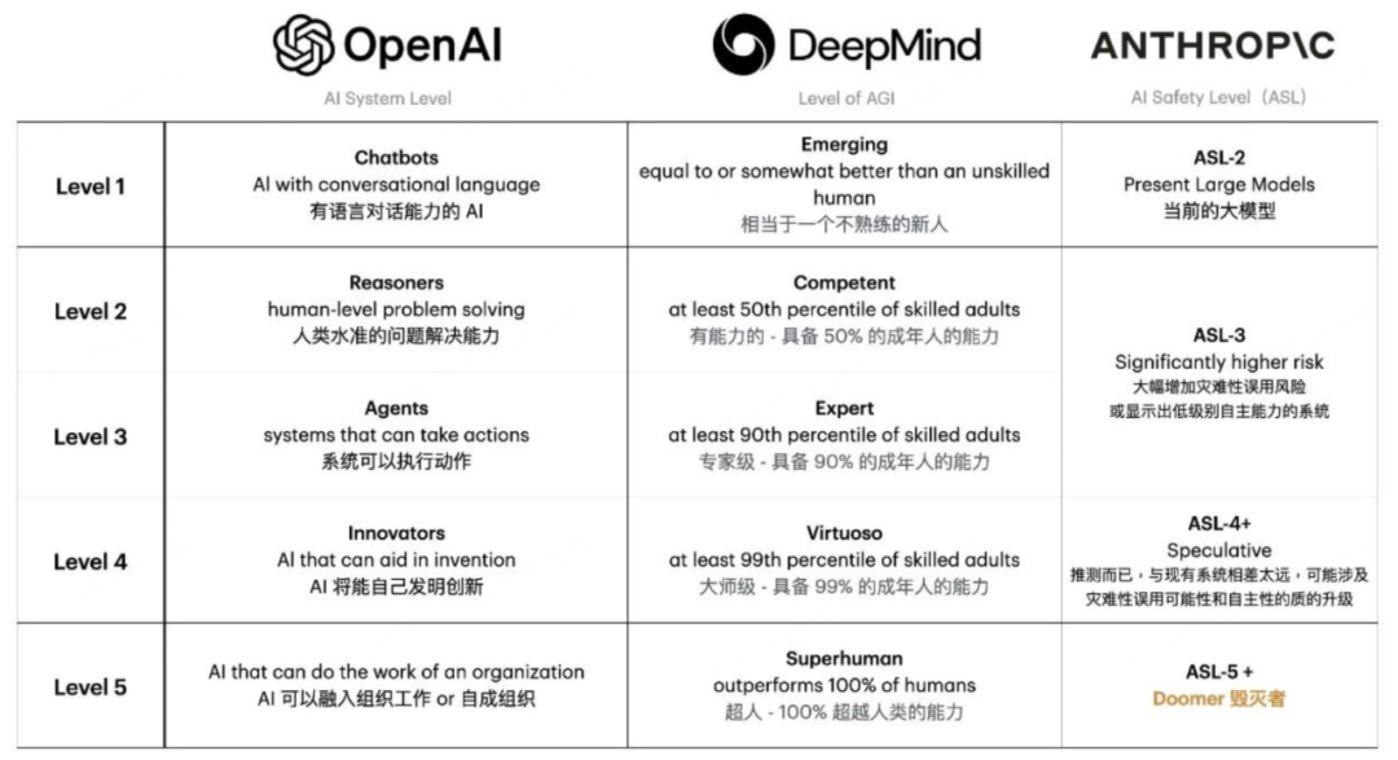

OpenAI has proposed a five-level standard for AGI. L1 is Chatbots, which have basic conversational language skills; L2 is Reasoners, which can solve human-level problems and handle more complex logical reasoning, problem-solving and decision-making tasks; L3 is Agents, which can take actions on behalf of users with greater autonomy and decision-making ability; L4 is Innovators, which can assist invention and innovation and so advance science, technology and society; L5 is Organizations, which can perform complex organizational tasks and comprehensively manage and coordinate multiple systems and resources.

Currently, AI technology is transitioning from the L2 Reasoner stage to the L3 Agent stage. The industry consensus is that 2025 will be the year Agent applications take off. Applications such as ChatGPT, DeepSeek, and Sora have already begun to reach mainstream adoption and to integrate into people’s work and lives.

However, the road to AGI is still full of cognitive traps. The occasional hallucinated output of large models exposes the limits of current systems in understanding causality, and the decision-making dilemmas that autonomous vehicles face in extreme scenarios reflect the complexity and ethical paradoxes of the real world.

The evolution of human intelligence has produced a multi-layered architecture, with rapid reactions at the instinctive level and deliberate thinking controlled by the cortex. For a machine to truly understand the gravity behind a falling apple, correlations in data are not enough; it also needs a mental model of the physical world. This fundamental cognitive gap may be harder to cross than we think.

The only way to AGI

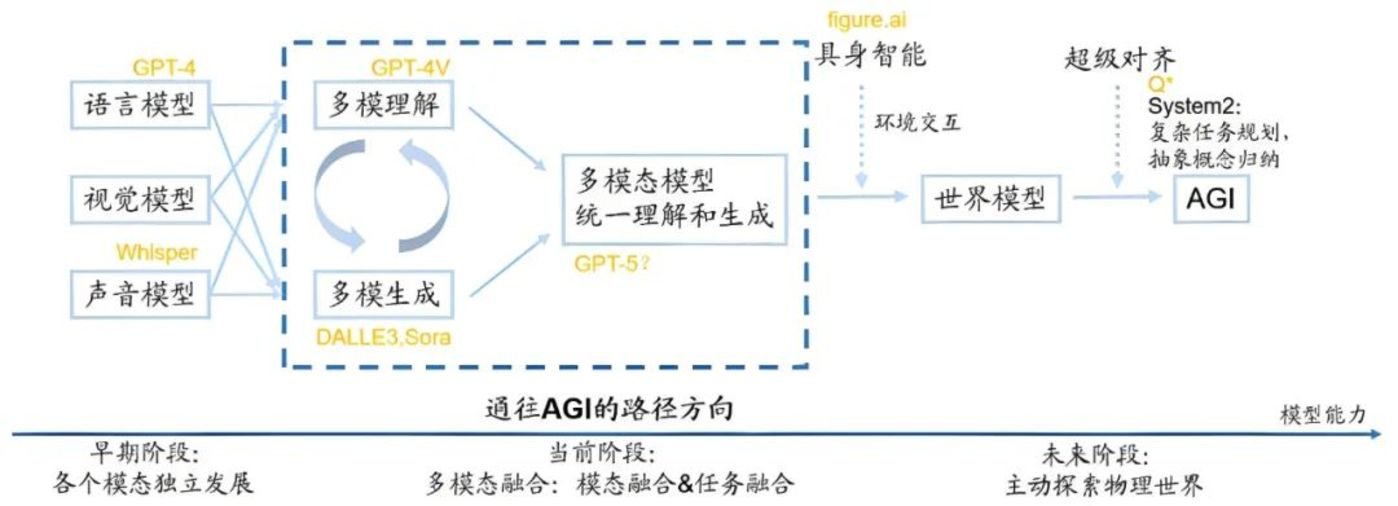

The evolution of large models will go through three stages: single-modal → multimodal → world models.

In the early stage, language, vision, and sound were developed independently. The current stage is one of multimodal fusion. For example, GPT-4V can understand text and image inputs, and Sora can generate video from text, image, and video inputs.

However, multimodal fusion at this stage is still incomplete, because understanding and generation are handled as separate tasks. As a result, GPT-4V is strong at understanding but weak at generation, while Sora is strong at generation but sometimes poor at understanding. Unifying multimodal understanding and generation is the only road to AGI, and recognizing this is critical.

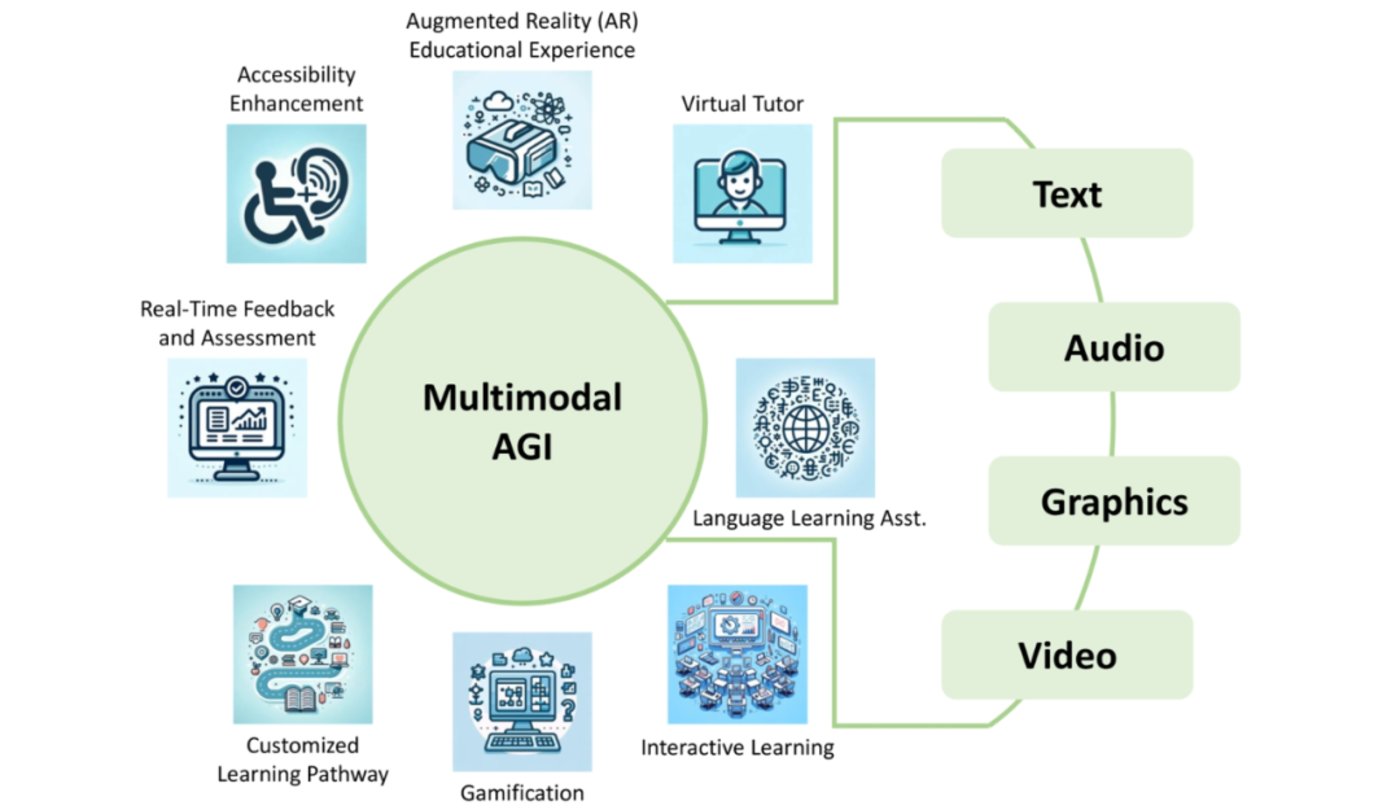

Whichever path leads to AGI, multimodal models are indispensable. Human interaction with the real world involves information in many modalities, so AI must process and understand many forms of data, which means it needs multimodal understanding capabilities.

Multimodal models are machine learning models that can process and understand data from different modalities, such as images, text, audio and video, and can provide a more comprehensive and richer expression of information than a single modality. In addition, simulating dynamic environmental changes and making predictions and decisions also require strong multimodal generation capabilities.

Data from different modalities often contain complementary information. Multimodal learning can fuse this complementary information effectively and improve the accuracy and robustness of the model. For example, in image annotation tasks, text can help the model better understand image content, while in speech recognition, video can help the model capture the speaker’s lip movements and so improve recognition accuracy.

By learning and fusing data from multiple modalities, the model can establish more generalized feature representations, thereby showing better adaptability and generalization when faced with unseen and complex data. This is of great significance for developing general intelligent systems and improving the reliability of models in real-world applications.

Research on multimodal models can be roughly divided into several technical approaches: alignment, fusion, self-supervision and noise addition. Alignment-based methods map data from different modalities into a common feature space for unified processing. Fusion methods integrate multimodal data at different layers of the model, making full use of the information in each modality. Self-supervised techniques pre-train models on unlabeled data, improving performance on a range of tasks. Noise addition improves the robustness and generalization of the model by introducing noise into the data.
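Three of these approaches can be illustrated with a minimal Python sketch. It is a toy under stated assumptions, not how any production model is built: the feature vectors, the projection matrices `W_image` and `W_text`, and all function names are hypothetical, and real systems learn the projections from data rather than fixing them by hand. Self-supervised pre-training is not shown.

```python
import math
import random


def project(features, weights):
    """Linearly project a feature vector; each row of `weights` yields one output dimension."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def l2_normalize(vec):
    """Scale a vector to unit length so embeddings from different modalities are comparable."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def cosine_similarity(a, b):
    """Cosine similarity between two vectors of equal length."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


def fuse(embeddings, weights=None):
    """Fusion: weighted average of embeddings that already share one feature space."""
    if weights is None:
        weights = [1.0 / len(embeddings)] * len(embeddings)
    dim = len(embeddings[0])
    return [sum(w * e[i] for w, e in zip(weights, embeddings)) for i in range(dim)]


def add_gaussian_noise(vec, sigma, rng):
    """Noise addition: perturb the input features with zero-mean Gaussian noise."""
    return [v + rng.gauss(0.0, sigma) for v in vec]


# Hypothetical raw features with different dimensionality per modality.
image_features = [0.9, 0.1, 0.4]
text_features = [0.2, 0.8]

# Hypothetical projection matrices (fixed by hand here, learned in practice).
W_image = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # 3 dimensions -> 2
W_text = [[0.0, 1.0], [1.0, 0.0]]             # 2 dimensions -> 2

# Alignment: both modalities end up in the same 2-dimensional space.
image_emb = l2_normalize(project(image_features, W_image))
text_emb = l2_normalize(project(text_features, W_text))
similarity = cosine_similarity(image_emb, text_emb)

fused = fuse([image_emb, text_emb])
noisy_image = add_gaussian_noise(image_features, sigma=0.05, rng=random.Random(0))
```

Once both modalities share one space, a single similarity measure or a single downstream layer can operate on either of them, which is what makes the later fusion step possible.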

Combined with these technologies, multimodal models have demonstrated powerful capabilities in processing complex real-world data. They can understand and generate multimodal data, simulate and predict environmental changes, and help agents make more accurate and effective decisions. Therefore, multimodal models play a vital role in developing world models and mark a critical step towards AGI.

For example, Microsoft recently open-sourced the multimodal model Magma, which works across both the digital and physical worlds. It can automatically process different types of data such as images, videos, and text, and it can infer the intentions and future behavior of people or objects in videos.

StepFun’s two Step-series multimodal large models, Step-Video-T2V and Step-Audio, have been deeply integrated with Geely Automobile’s Xingrui AI large model, promoting the wider application of AI technology in smart cockpits, high-end smart driving and other fields.

MogoMind, an AI model that deeply integrates real-time data from the physical world, has three capabilities: multimodal understanding, spatio-temporal reasoning and adaptive evolution. It can process digital-world data such as text and images, and it can also use urban infrastructure (such as cameras and sensors), vehicle-road-cloud systems and agents (such as autonomous vehicles) to perceive, understand and feed decisions back to the physical world in real time. This overcomes a limitation of traditional models, which are trained on static Internet data and cannot reflect the real-time state of the physical world. The model also reshapes the video analysis paradigm, giving ordinary cameras advanced cognitive capabilities such as behavior prediction and event tracing, and providing cities and traffic managers with services such as traffic analysis, accident warning, and signal optimization.

However, the development of multimodal models also faces challenges: acquiring and processing data, the complexity of model design and training, and inconsistency and imbalance between modalities.

First, multimodal learning requires collecting and processing data from different sources, which may differ in resolution, format, and quality and need complex preprocessing to ensure consistency and usability. In addition, obtaining high-quality, accurately annotated multimodal data is often costly.

Second, designing a deep learning model that can effectively process and fuse data from multiple modalities is harder than designing a single-modal model. Designers must decide on an appropriate fusion mechanism, balance the information contributed by each modality, and avoid conflicts between modalities. Training a multimodal model is also more complex and computationally intensive, requiring more computing resources and tuning work.

Third, there may be significant inconsistencies and imbalances between modalities. For example, data in some modalities may be richer or more reliable, while data in others may be sparse or noisy. Addressing these inconsistencies and imbalances, so that models can use information from each modality fairly and effectively, is another important challenge in multimodal learning.
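One simple way to keep a noisy modality from dominating is to weight each modality by how reliable it is. The sketch below uses inverse-variance weighting, a standard statistical heuristic chosen here purely for illustration; the article does not name a specific method, and the camera and audio numbers are hypothetical.

```python
def inverse_variance_weights(variances):
    """Return weights proportional to 1 / variance, normalized to sum to 1."""
    inverse = [1.0 / v for v in variances]
    total = sum(inverse)
    return [w / total for w in inverse]


def fuse_estimates(estimates, variances):
    """Combine per-modality estimates, trusting low-variance modalities more."""
    weights = inverse_variance_weights(variances)
    return sum(w * e for w, e in zip(weights, estimates))


# Hypothetical distance estimates (in meters) for the same object.
camera_estimate, camera_variance = 10.0, 1.0   # cleaner modality
audio_estimate, audio_variance = 14.0, 4.0     # noisier modality

weights = inverse_variance_weights([camera_variance, audio_variance])
fused_estimate = fuse_estimates(
    [camera_estimate, audio_estimate],
    [camera_variance, audio_variance],
)
```

With these inputs the camera receives four times the weight of the audio channel, so the fused estimate stays close to the more reliable reading while still using the other one.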

At present, large language models and multimodal large models still have inherent limitations in simulating human thought. The native multimodal approach, which brings multimodal data together from the very start of training and handles input and output end to end, offers new possibilities. Under this approach, data from visual, audio, 3D and other modalities are aligned during training to achieve multimodal unification, and building such native multimodal large models has become an important direction for the field.

Bringing AI back to the real world

Yann LeCun, chief AI scientist at Meta, believes that the current large-model route cannot lead to AGI. Although existing large models perform well in natural language processing, dialogue, text creation and similar fields, they remain a statistical modeling technology. They complete tasks by learning statistical regularities in data and have no real understanding or reasoning ability.

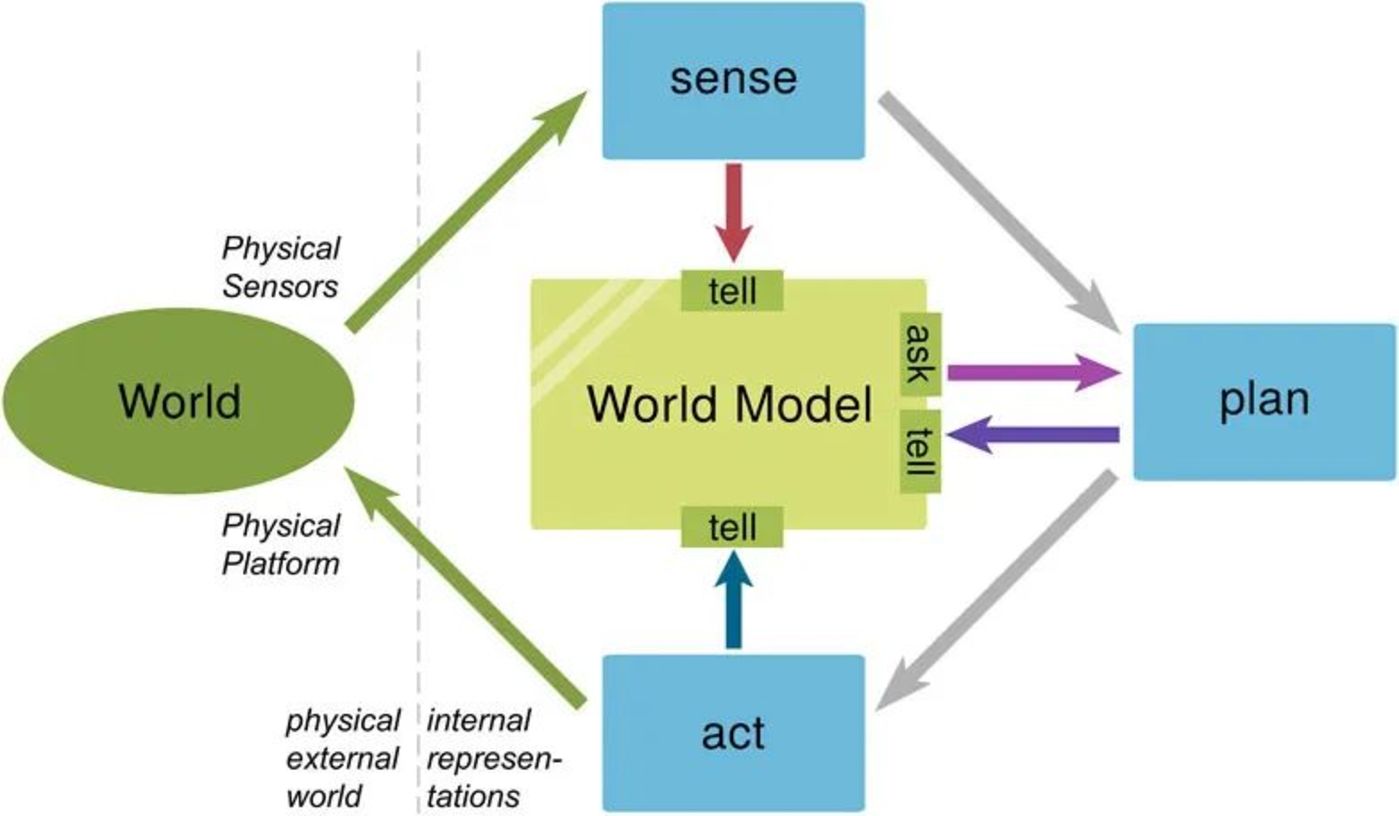

He believes that the world model is closer to true intelligence than just learning the statistical characteristics of data. Taking the human learning process as an example, when children grow up, they understand the world more through observation, interaction and practice, rather than simply being injected with knowledge.

For example, people driving for the first time naturally know to slow down before entering a curve; children need to be exposed to only a small part of their mother tongue to master almost all of it; animals cannot learn physics, yet they instinctively avoid rocks falling from above.

The world model has attracted widespread attention because it confronts a fundamental problem directly: how to make AI truly know and understand the world. It tries to take AI through this kind of autonomous learning process by simulating and completing video, audio and other media, so that the AI forms common sense and ultimately realizes AGI.

There are two main differences between the world model and the multimodal large model. The first concerns input. The world model mainly senses the external environment through sensors such as cameras, so its input is real-time perception of that environment, whereas the multimodal large model mainly works with pictures, text, video, audio and similar information.

The second concerns output. The world model mostly outputs time-series data (TSD), which can be used to control a robot directly. Physical intelligence also requires real-time, high-frequency interaction with the real world, which places high demands on timeliness. The multimodal large model interacts mostly with people and outputs static information accumulated over some past period, so its timeliness requirements are lower.

For this reason, industry insiders also regard the world model as a ray of hope for realizing AGI.

Although significant progress has been made in the development of the world model, it still faces many challenges. One of the challenges is the ability to simulate environmental dynamics and causality, as well as the ability to conduct counterfactual reasoning. Counterfactual reasoning requires models to be able to simulate how the results would differ if certain factors in the environment changed, which is crucial for decision support and complex system simulation.

For example, in autonomous driving, a model needs to predict how the vehicle’s path will be affected if the behavior of another traffic participant changes. Current world models have limited capabilities here, and future work needs to explore how to make world models not only reflect real conditions but also draw reasonable inferences when the assumptions change.
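The idea of a counterfactual query can be made concrete with a toy example: roll the same transition model forward twice, once with the observed behavior of the lead vehicle and once with a changed behavior, and compare the outcomes. The one-dimensional model, the function name `rollout_min_gap`, and all parameter values below are hypothetical simplifications, far removed from a learned world model.

```python
def rollout_min_gap(ego_speed, lead_speed, lead_decel, initial_gap, steps=50, dt=0.1):
    """Roll a toy 1-D car-following model forward.

    The ego vehicle keeps a constant speed; the lead vehicle brakes at
    `lead_decel` (m/s^2) until it stops. Returns the smallest gap in meters
    seen during the rollout; a negative value means the vehicles collided.
    """
    ego_pos, lead_pos = 0.0, initial_gap
    min_gap = initial_gap
    for _ in range(steps):
        lead_speed = max(0.0, lead_speed - lead_decel * dt)
        lead_pos += lead_speed * dt
        ego_pos += ego_speed * dt
        min_gap = min(min_gap, lead_pos - ego_pos)
    return min_gap


# Factual rollout: the lead vehicle keeps its speed.
factual_gap = rollout_min_gap(
    ego_speed=20.0, lead_speed=20.0, lead_decel=0.0, initial_gap=30.0
)

# Counterfactual rollout: what if the lead vehicle had braked hard instead?
counterfactual_gap = rollout_min_gap(
    ego_speed=20.0, lead_speed=20.0, lead_decel=6.0, initial_gap=30.0
)

collision_in_counterfactual = counterfactual_gap < 0.0
```

Only one input differs between the two rollouts, so any difference in the outcome can be attributed to the changed behavior of the lead vehicle. The difficulty for real world models is that the transition function is learned rather than written down, and it must stay accurate in situations like the second rollout that rarely appear in the data.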

Simulating physical rules accurately is another major challenge for world models. Existing video generation models such as Sora can reproduce some physical phenomena (such as object motion and light reflection), but their accuracy and consistency are still insufficient for complex phenomena such as fluid dynamics and aerodynamics.

To overcome this challenge, researchers need to consider more accurate physics engines and computational models when simulating physical laws to ensure that the generated scenes can better follow the laws of physics in the real world.

One of the key criteria for evaluating a world model is its ability to generalize, which covers not only interpolation within the data but, more importantly, extrapolation beyond it. Real traffic accidents and abnormal driving behavior, for example, are rare events. For a learned world model to imagine such events, it must go beyond memorizing its training data and develop a deep understanding of driving principles. A model that can extrapolate from known data and simulate a range of potential situations will work better in the real world.
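The gap between interpolation and extrapolation shows up even in a very small example. The sketch below fits a straight line to braking distances observed only at moderate speeds, then compares its error inside and outside that range. The braking formula is the standard constant-deceleration relation d = v² / (2a); the deceleration value, the speed range and the choice of a linear model are assumptions made for illustration.

```python
def braking_distance(speed, decel=7.0):
    """Ground truth: stopping distance (m) at constant deceleration (m/s^2)."""
    return speed ** 2 / (2.0 * decel)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Training data covers only moderate speeds (10 to 20 m/s).
train_speeds = [10.0, 12.0, 14.0, 16.0, 18.0, 20.0]
train_distances = [braking_distance(v) for v in train_speeds]
slope, intercept = fit_line(train_speeds, train_distances)


def predict(speed):
    """Prediction of the fitted linear model."""
    return slope * speed + intercept


# Interpolation: a speed inside the training range.
interpolation_error = abs(predict(15.0) - braking_distance(15.0))

# Extrapolation: a rare, much higher speed outside the training range.
extrapolation_error = abs(predict(40.0) - braking_distance(40.0))
```

The linear model tracks the data well where it has seen examples, but its error grows sharply at 40 m/s because it never captured the quadratic law behind the data. A model that had learned the underlying principle would extrapolate correctly, which is the property the paragraph above asks of world models.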

For AI, the data obtained by having a robot unscrew a bottle cap itself can build more physical intuition than watching millions of videos of the operation. By adding more real-time, dynamic data from real scenes during training, AI can better understand the spatial relationships, motion and physical laws of the three-dimensional world, and so gain insight into the physical world. In the end, the arrival of AGI may not be as earth-shattering as singularity theory predicts; it may instead emerge gradually from the torrent of data, like mountains appearing through the morning fog.

The end point of AI is not fixed; it is a future narrative written jointly by humans and technology. AI may turn out to be a tool, a partner, a threat, or a form beyond imagination. The key question may not be what the end point of AI is, but what values humans want to use to guide its development. As Stephen Hawking warned, the rise of AI may be either the best or the worst thing ever to happen to humanity. The answer depends on the decisions we make and the responsibilities we accept today, as AI comes to understand the world anew and reshapes our picture of the future of human-computer interaction.