Image source: Generated by AI

one

On the same day that Musk released Grok 3, which he trained with 200,000 GPUs, two papers taking the “opposite” route to Musk’s brute-force approach were also published in the technology community.

The author list of each paper contains a familiar name:

Liang Wenfeng, Yang Zhilin.

On February 18, DeepSeek and Dark Side of the Moon (Moonshot AI, the company behind Kimi) released their latest papers almost simultaneously, and the themes directly “collided”: both challenge the core attention mechanism of the Transformer architecture so that it can handle longer contexts more efficiently. What’s even more interesting is that the names of the two companies’ star founders appear in their respective papers and technical reports.

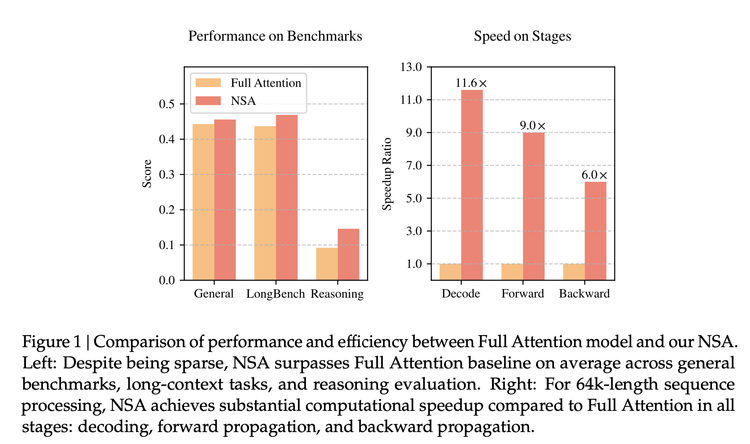

DeepSeek published a paper titled “Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention.”

According to the paper, the proposed architecture, NSA (Native Sparse Attention), matches or exceeds the accuracy of full attention in benchmark tests, and when processing 64k-token sequences it can be up to 11.6 times faster. Training is also more efficient and requires less computing power, and NSA performs well on long-context tasks such as book summarization, code generation, and reasoning.

Unlike the algorithmic innovations that attracted attention before, this time DeepSeek has gone straight for the core attention mechanism itself.

The Transformer is the foundation on which today’s large models have flourished, but its core algorithm, the attention mechanism, has an inherent problem. To use reading as a metaphor: in order to understand and generate text, the traditional “full attention mechanism” reads every word and compares it with every other word. As a result, the longer the text, the more expensive the computation becomes, until the system stalls or even crashes.
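To make the scaling problem concrete, the following minimal sketch counts how many word-to-word comparisons full attention performs when each word looks at itself and every earlier word. The function name is illustrative and does not come from either paper.

```python
def full_attention_pairs(seq_len: int) -> int:
    """Count query-key comparisons under causal full attention.

    The token at 0-based position i is compared with itself and all
    earlier tokens, i.e. i + 1 keys, so the total is 1 + 2 + ... + seq_len.
    """
    return seq_len * (seq_len + 1) // 2


# Compare a short text with a 64k-token text.
for n in (1_000, 64_000):
    print(n, full_attention_pairs(n))
```

The text becomes 64 times longer, but the number of comparisons grows roughly 4,000-fold. This quadratic growth is what sparse attention methods try to avoid.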

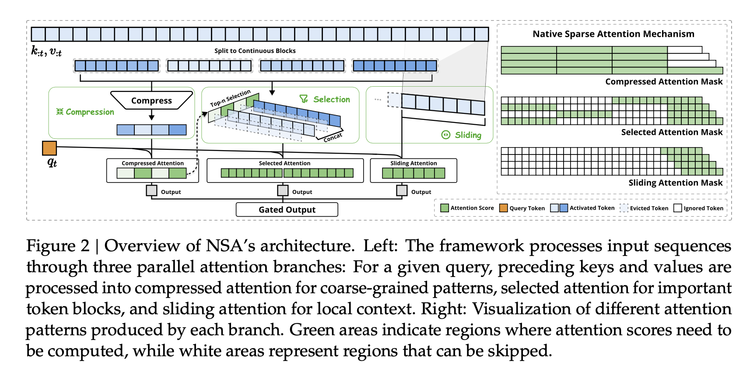

The academic community has long been proposing solutions. Through engineering optimization and experiments in real environments, NSA assembles an architecture of three components that can be used in the training phase:

1) Compression: the text is grouped into blocks and each block is condensed into a coarse summary, so the model can grasp the overall content without reading every word.

2) Dynamic selection: the model uses a scoring mechanism to pick out the words that deserve the most attention and performs fine-grained calculations on them. This importance-sampling strategy retains 98% of fine-grained information while reducing the amount of computation by 75%.

3) Sliding window: if the first two are summaries and highlights, the sliding window looks at the most recent context to maintain continuity, and hardware-level memory reuse can reduce the frequency of memory accesses by 40%.
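The three components can be sketched as three sets of positions that a single word gets to look at. This is only an illustration under stated assumptions: the function name, the parameters, and the block scores passed in are hypothetical, and the sketch does not cover how the real model computes those scores or merges the three views.

```python
def nsa_like_key_sets(t, block_size, block_scores, top_n, window):
    """For the query at 0-based position t, list what each branch looks at.

    block_scores is a hypothetical importance score for each complete
    block of block_size tokens that ends at or before position t.
    """
    num_blocks = (t + 1) // block_size  # complete blocks seen so far

    # 1) Compression: one coarse summary per complete block.
    compressed = list(range(num_blocks))

    # 2) Selection: every token inside the top_n highest-scoring blocks.
    ranked = sorted(range(num_blocks), key=lambda b: block_scores[b], reverse=True)
    chosen = sorted(ranked[:top_n])
    selected = [i for b in chosen
                for i in range(b * block_size, (b + 1) * block_size)]

    # 3) Sliding window: the most recent tokens, including t itself.
    recent = list(range(max(0, t - window + 1), t + 1))

    return compressed, selected, recent


scores = [0.1, 0.9, 0.3, 0.7, 0.2, 0.8, 0.05, 0.4]  # made-up values
summaries, picked, recent = nsa_like_key_sets(
    t=63, block_size=8, block_scores=scores, top_n=2, window=8)
print(len(summaries), len(picked), len(recent))
```

With these toy numbers the word at position 63 touches 8 summaries, 16 selected tokens, and 8 recent tokens, rather than all 64 positions. The gap widens as the text grows, because only the number of summaries grows with length.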

None of these ideas was invented by DeepSeek, but you can think of it as ASML-style work: the technical elements already existed, scattered in various places, but nobody had engineered them into a scalable solution, a new algorithmic architecture. Now someone has built a “lithography machine” through strong engineering capability, and others can use it to train models in a real industrial environment.

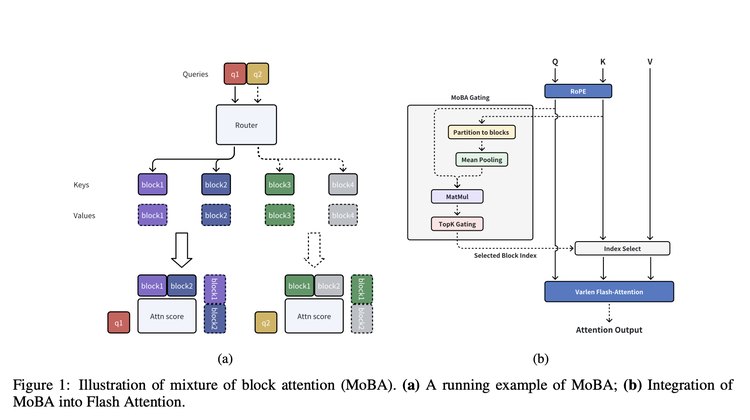

The paper published by Dark Side of the Moon on the same day proposes a framework with a very similar core idea: MoBA (“MoBA: Mixture of Block Attention for Long-Context LLMs”).

As its name suggests, it also groups “words” into blocks. After this “cutting”, MoBA uses a gating network that acts like an “intelligent filter”: it selects the Top-K blocks most relevant to the current query and computes attention only over those blocks. In the actual implementation, MoBA also incorporates optimizations from FlashAttention (which makes attention computation more efficient) and MoE (Mixture of Experts).
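The block-and-gate idea can be sketched in a few lines of plain Python. This is a simplified illustration, not the released MoBA code: the function names are hypothetical, the gate here scores each block by the dot product between the query and the block’s average key, and causal masking and the FlashAttention kernels are left out.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def mean_pool(vectors):
    """Average a list of equal-length vectors into one vector."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def block_sparse_attention(query, keys, values, block_size, top_k):
    """Let one query attend only to the top_k key blocks chosen by a gate."""
    # Cut the sequence into consecutive blocks of block_size tokens.
    blocks = [(s, min(s + block_size, len(keys)))
              for s in range(0, len(keys), block_size)]

    # Gate: relevance of each block = query . (mean of the block's keys).
    gate = [dot(query, mean_pool(keys[s:e])) for s, e in blocks]
    ranked = sorted(range(len(blocks)), key=lambda b: gate[b], reverse=True)
    picked = sorted(ranked[:top_k])
    idx = [i for b in picked for i in range(*blocks[b])]

    # Ordinary softmax attention, restricted to the selected positions.
    scale = 1.0 / math.sqrt(len(query))
    logits = [dot(query, keys[i]) * scale for i in idx]
    peak = max(logits)
    weights = [math.exp(x - peak) for x in logits]
    total = sum(weights)
    dim = len(values[0])
    out = [sum(w * values[i][d] for w, i in zip(weights, idx)) / total
           for d in range(dim)]
    return out, picked


keys = [[1.0, 0.0], [1.0, 0.0], [-1.0, 0.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
out, picked = block_sparse_attention([1.0, 0.0], keys, values, block_size=2, top_k=1)
print(picked, out)
```

Setting `top_k` to the total number of blocks makes the function attend to every position, which is one simple way to picture how a block-based design can fall back to full attention.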

Compared with NSA, MoBA emphasizes flexibility and does not completely depart from the current mainstream full attention mechanism. Instead, it is designed so that a model can switch freely between full attention and sparse attention, giving existing full attention models more room for adaptation.

According to the paper, MoBA’s advantage in computational complexity becomes more pronounced as the context length increases. In the 1M-token test, MoBA was 6.5 times faster than full attention; at 10M tokens, it was 16 times faster. Moreover, it is already deployed in Kimi’s products to serve everyday users’ long-context requests.

An important reason why Yang Zhilin attracted attention when he founded Dark Side of the Moon was the influence and citation count of his papers. However, before the K1.5 paper, his most recent paper dated back to January 2024. Liang Wenfeng does appear as an author on DeepSeek’s most important model technical reports, but the author lists of those reports are almost equivalent to DeepSeek’s employee directory, with nearly everyone included. The NSA paper, by contrast, has only a few authors. This shows how much these two works matter to the founders, and how significant they are for understanding the two companies’ technical routes.

Another detail that underlines this importance: some netizens noticed that the arXiv submission record for the NSA paper shows it was submitted on February 16, and the submitter was Liang Wenfeng himself.

two

This is not the first time that Dark Side of the Moon and DeepSeek have collided. At the same time as the release of R1, Kimi published a rare technical report for K1.5. Previously, the company had not focused on presenting its technical thinking to the outside world. At the time, both papers targeted reasoning models driven by RL. In fact, a careful reading of the two technical reports shows that in the K1.5 paper, Dark Side of the Moon shared in more detail how to train a reasoning model. In terms of information and detail, it even surpasses the R1 paper. But the DeepSeek craze that followed overshadowed much of the discussion of the paper itself.

One piece of corroboration is that OpenAI recently published a rare paper explaining the reasoning capabilities of its o-series models, which named both DeepSeek R1 and Kimi k1.5. “Independent research by DeepSeek-R1 and Kimi k1.5 has shown that using chain-of-thought (CoT) learning can significantly improve the comprehensive performance of models in mathematical problem solving and programming challenges.” In other words, these are the two reasoning models that OpenAI itself chose to compare against.

“The most amazing thing about large model architecture is that it seems to point out the way forward by itself, leading different people to arrive at similar directions from different perspectives.”

Zhang Mingxing, a professor at Tsinghua University who took part in the core research on MoBA, wrote this on Zhihu.

He also provided a very interesting comparison.

“DeepSeek R1 and Kimi K1.5 both point to ORM-based RL, but R1 starts from Zero and is more ‘concise’, or has ‘less structure’. It launched earlier and open-sourced the model at the same time.

Kimi MoBA and DeepSeek NSA once again both point to learned sparse attention that supports backpropagation. This time MoBA has less structure, launched earlier, and open-sourced its code at the same time.”

The repeated “collisions” between the two companies help people compare and better understand two lines of technical development: reinforcement learning, and attention mechanisms that handle longer texts more efficiently.

“Just as reading R1 and K1.5 together helps one learn how to train a reasoning model, reading MoBA and NSA together helps one understand, from different angles, our belief that sparsity in attention should exist and can be learned through end-to-end training,” Zhang Mingxing wrote.

three

After the release of MoBA, Xu Xinran of Dark Side of the Moon also said on social media that the work had been in progress for a year and a half and that developers could now use it out of the box.

Choosing to open-source at this moment means the move is bound to be discussed in DeepSeek’s “shadow”. What’s interesting is that today, as everyone rushes to integrate DeepSeek and open-source their own models, the outside world always seems to think of Dark Side of the Moon first. There is constant discussion about whether Kimi will integrate DeepSeek and whether its models will be open-sourced. Dark Side of the Moon and Doubao seem to have become the two remaining “outliers”.

It now seems that DeepSeek’s influence on Dark Side of the Moon is more lasting than its influence on other players. It brings challenges ranging from technical routes to competition for users. On the one hand, it proves that even once product competition begins, basic model capability is still what matters most. On the other hand, a chain reaction that is becoming increasingly clear is that Tencent’s combination of WeChat Search and Yuanbao is riding the momentum of DeepSeek R1 to make up for a marketing battle it previously missed, and it too is ultimately targeting Kimi and Doubao.

How Dark Side of the Moon responds has therefore become a focus of attention, and open source is a step it must take. Its choice appears to be to genuinely match DeepSeek’s approach to open source. Most of the many open-source releases that emerged after DeepSeek look like knee-jerk reactions and still follow the open-source thinking of the earlier Llama period. DeepSeek’s open source is in fact different: it is no longer a Llama-style move to disrupt closed-source opponents, but a competitive strategy that brings clear benefits.

Dark Side of the Moon was recently reported to be “targeting SOTA (state-of-the-art) results”, which seems to be the strategy closest to this new open-source model. The aim is to develop the strongest model and the strongest architecture method, and in doing so gain the influence on the application side that it has always longed for.

According to the two papers, MoBA is already used in Dark Side of the Moon’s models and products, and the same is true of NSA at DeepSeek. This even gives the outside world clearer expectations for DeepSeek’s next models. So the next question is whether the next-generation models that Dark Side of the Moon and DeepSeek train with MoBA and NSA will collide again, and again in open-source form. That may be the moment Dark Side of the Moon is waiting for.