Article source: AI Fan Er

Image source: Generated by AI

The innovative strength of Chinese companies in artificial intelligence (AI) is attracting growing global attention. DeepSeek was the first to substantially improve the reasoning performance of models through large-scale reinforcement learning (RL), a breakthrough that caused a worldwide sensation. Against this backdrop, Alibaba's new large language model, QwQ-32B, has delivered even more striking results.

DeepSeek’s pioneering contributions

The Chinese company DeepSeek led the way in applying large-scale reinforcement learning to the post-training of AI models, substantially improving their performance on reasoning tasks. Its flagship model, DeepSeek-R1, has 671 billion parameters (of which 37 billion are activated per token), and its outstanding performance in areas such as mathematical reasoning and programming has excited the global AI research community. This innovation opens up new possibilities for improving AI models and lays the foundation for subsequent research.
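DeepSeek-R1 is a mixture-of-experts model, so only part of its weights is used for each token. The short calculation below uses only the two figures quoted above to show how small that share is; the constant and function names are illustrative.

```python
# Figures quoted in the article for DeepSeek-R1, in billions of parameters.
TOTAL_PARAMS_B = 671
ACTIVATED_PARAMS_B = 37


def activated_fraction(total_b: float, activated_b: float) -> float:
    """Share of a mixture-of-experts model's weights that is used for each token."""
    if not 0 < activated_b <= total_b:
        raise ValueError("activated parameters must be positive and no larger than the total")
    return activated_b / total_b


if __name__ == "__main__":
    share = activated_fraction(TOTAL_PARAMS_B, ACTIVATED_PARAMS_B)
    print(f"About {share:.1%} of DeepSeek-R1's parameters are active per token")
```

Each token therefore touches about 5.5% of the model's weights, even though all 671 billion parameters still have to be stored.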

Alibaba's QwQ-32B: A more efficient performance breakthrough

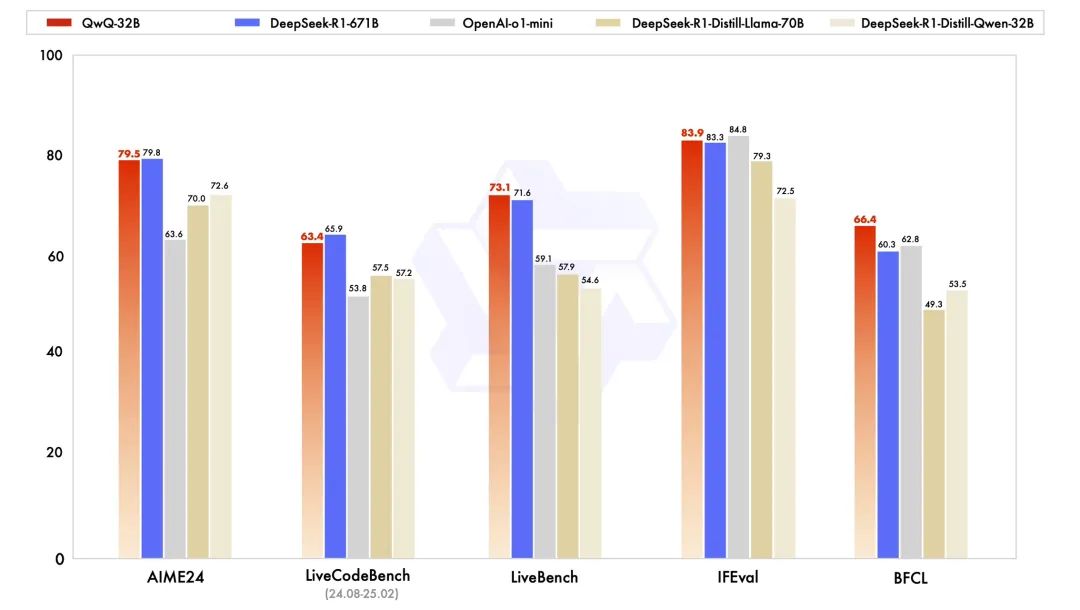

Building on DeepSeek's pioneering work, Alibaba further validated and refined large-scale reinforcement learning and launched the QwQ-32B model. The model has only 32 billion parameters, far fewer than DeepSeek-R1, yet it delivers comparable performance across multiple benchmarks, including:

- Mathematical reasoning: the ability to solve complex mathematical problems efficiently.

- Programming: generating high-quality code and verifying it against test cases.

- General capabilities: strong performance across a wide range of tasks.
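To put "far fewer parameters" in numbers, the sketch below compares the two total parameter counts and estimates how much memory the weights alone would occupy. It assumes 16 bits (2 bytes) per weight for both models, as with BF16, and ignores activations, the KV cache, and quantization; these are simplifying assumptions for comparison only, and the names are illustrative.

```python
# Total parameter counts from the article, in billions.
PARAMS_B = {"DeepSeek-R1": 671, "QwQ-32B": 32}

# Assumption: every weight is stored in a 16-bit format such as BF16.
BYTES_PER_PARAM = 2


def weight_memory_gb(params_b: float, bytes_per_param: float = BYTES_PER_PARAM) -> float:
    """Approximate storage for the weights alone, in decimal gigabytes.

    Billions of parameters times bytes per parameter gives gigabytes (1 GB = 1e9 bytes).
    Activations, KV cache, and runtime overhead are not included.
    """
    return params_b * bytes_per_param


def size_ratio(larger_b: float, smaller_b: float) -> float:
    """How many times more parameters the larger model has."""
    return larger_b / smaller_b


if __name__ == "__main__":
    for name, params_b in PARAMS_B.items():
        print(f"{name}: ~{weight_memory_gb(params_b):,.0f} GB of weights")
    ratio = size_ratio(PARAMS_B["DeepSeek-R1"], PARAMS_B["QwQ-32B"])
    print(f"DeepSeek-R1 has about {ratio:.0f}x as many parameters as QwQ-32B")
```

Under this assumption, QwQ-32B's weights need roughly 64 GB versus about 1,342 GB for DeepSeek-R1, a gap of about 21 times.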

Even more notably, QwQ-32B also integrates agent-related capabilities, which enable it to think critically while using tools and to adjust its reasoning process dynamically based on environmental feedback. This significantly enhances the model's flexibility and practicality in real-world applications.
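The article does not describe how QwQ-32B's agent capabilities are implemented. As a rough illustration of what "adjusting the reasoning process based on environmental feedback" means, the toy sketch below runs an act-observe loop: a policy proposes an action, a tool returns an observation, and the policy sees the full history before choosing its next action. The policy is a hypothetical hand-written stand-in for the model, and the number-guessing tool and all names are invented for illustration.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (action, observation) pairs


def agent_loop(
    propose: Callable[[History], str],
    tool: Callable[[str], str],
    is_done: Callable[[str], bool],
    max_steps: int = 10,
) -> History:
    """Generic act-observe loop: each new action is chosen after seeing all earlier feedback."""
    history: History = []
    for _ in range(max_steps):
        action = propose(history)
        observation = tool(action)
        history.append((action, observation))
        if is_done(observation):
            break
    return history


# Hypothetical toy environment: the tool knows a hidden number and reports
# whether a guess is too low, too high, or correct.
SECRET = 37


def guess_tool(action: str) -> str:
    guess = int(action)
    if guess < SECRET:
        return "too low"
    if guess > SECRET:
        return "too high"
    return "correct"


def bisect_policy(history: History) -> str:
    """Stand-in for the model: narrows its search range using every past observation."""
    lo, hi = 0, 100
    for action, observation in history:
        guess = int(action)
        if observation == "too low":
            lo = guess + 1
        elif observation == "too high":
            hi = guess - 1
    return str((lo + hi) // 2)


def is_correct(observation: str) -> bool:
    return observation == "correct"


if __name__ == "__main__":
    trace = agent_loop(bisect_policy, guess_tool, is_correct)
    for step, (action, observation) in enumerate(trace, start=1):
        print(f"step {step}: guess {action} -> {observation}")
```

Here the policy guesses 50, 24, and then 37, revising its plan after each piece of feedback instead of committing to a fixed sequence of actions.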

Innovative reinforcement learning strategies

The Alibaba team adopted a distinctive reinforcement learning strategy to train QwQ-32B: starting from a cold start, it carried out large-scale RL optimization targeted at mathematics and programming tasks. Specific methods include:

- Direct feedback mechanisms:

  - Math tasks: feedback is provided by verifying whether the final answer is correct.

  - Programming tasks: a code execution server checks whether the generated code passes the test cases.

- Two-stage reinforcement learning:

  - The first stage focuses on improving mathematics and programming skills.

  - The second stage adds training for general capabilities, using a general reward model together with rule-based verifiers. With only a small number of training steps, this significantly improves overall performance while maintaining strong performance on mathematics and programming tasks.
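The feedback signals described above can be sketched as simple reward functions. This is a minimal sketch under several assumptions the article does not state: math answers are taken from the last `\boxed{...}` in a response and compared as exact strings; a local Python subprocess stands in for the code execution server, with tests written as `assert` statements; and the stage-two combination, which zeroes the reward-model score whenever a rule-based check fails, is only one plausible reading of "a general reward model and rule-based verifiers", not Qwen's published recipe. All function names are hypothetical.

```python
import re
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable, Iterable, Optional

# Assumption: the final answer appears as \boxed{...}; the last occurrence wins.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_final_answer(response: str) -> Optional[str]:
    matches = BOXED.findall(response)
    return matches[-1].strip() if matches else None


def math_reward(response: str, reference: str) -> float:
    """Verifier-style reward: 1.0 for an exactly matching final answer, else 0.0."""
    answer = extract_final_answer(response)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0


def code_reward(program: str, tests: str, timeout_s: float = 5.0) -> float:
    """Run the program followed by assert-based tests in a fresh interpreter.

    A local subprocess stands in for a sandboxed code execution server; it is
    NOT a security boundary, so only run trusted code this way.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(program + "\n\n" + tests + "\n")
        try:
            result = subprocess.run(
                [sys.executable, str(script)], capture_output=True, timeout=timeout_s
            )
        except subprocess.TimeoutExpired:
            return 0.0  # treat a hang as a failed test run
    return 1.0 if result.returncode == 0 else 0.0


def stage_two_reward(
    response: str,
    reward_model: Callable[[str], float],
    rule_checks: Iterable[Callable[[str], bool]],
) -> float:
    """Hypothetical stage-two combination: a reward-model score gated by rule-based checks."""
    if not all(check(response) for check in rule_checks):
        return 0.0
    return reward_model(response)


if __name__ == "__main__":
    print(math_reward("So the answer is \\boxed{42}.", "42"))
    print(code_reward("def add(a, b):\n    return a + b", "assert add(2, 3) == 5"))
    print(stage_two_reward("A polite reply.", lambda r: 0.8, [lambda r: r.endswith(".")]))
```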

This strategy not only demonstrates the potential of reinforcement learning to improve model intelligence, but also achieves strong performance through an efficient training process.

Open-source sharing promotes global AI development

To accelerate the adoption and development of AI technology, Alibaba has released QwQ-32B on Hugging Face and ModelScope under the Apache 2.0 open-source license, free for researchers and developers worldwide to use. The public can also try the model directly through Qwen Chat, bringing cutting-edge technology closer to ordinary users.

The success of QwQ-32B once again shows that combining a strong foundation model with large-scale reinforcement learning can achieve excellent performance at a smaller parameter scale, offering a feasible path toward artificial general intelligence (AGI).

From DeepSeek's innovative exploration to Alibaba's impressive optimization, the successive breakthroughs of Chinese companies in AI are driving global technological progress.