Article source: Intelligent Emergence

Image source: Generated by AI


During the 2025 Spring Festival, the biggest sensation was not only the film Ne Zha 2 but also an app called DeepSeek. The inspiring story has been told many times: on January 20, Hangzhou-based AI startup DeepSeek released its new model R1, which benchmarks against OpenAI’s strongest reasoning model at the time, o1, and truly shook the world.

Just one week after launch, the DeepSeek app had been downloaded more than 20 million times and ranked first on the app charts of more than 140 countries. It has grown faster than ChatGPT, which launched in 2022, and has already reached about 20% of ChatGPT’s scale.

How popular is it? As of February 8, DeepSeek had more than 100 million users. Its reach extends far beyond AI enthusiasts, and from China to the rest of the world: everyone from the elderly and children to stand-up comedians and politicians is talking about DeepSeek.

The shock from DeepSeek continues even now. Over the past two weeks, DeepSeek has replayed TikTok’s script at lightning speed: explosive growth, victories over many American rivals, and a swift push to the edge of geopolitics. The United States and Europe began discussing its “national security implications,” and a number of governments quickly issued orders banning its download or installation.

a16z co-founder Marc Andreessen even remarked that DeepSeek’s emergence was another “Sputnik moment.”

(The phrase dates from the Cold War: in 1957 the Soviet Union launched Sputnik 1, the world’s first artificial satellite, which set off panic in American society as the United States realized its standing was being challenged and its technological lead might be overturned.)

But popularity breeds controversy. In tech circles, DeepSeek has also been caught up in disputes over “distillation” and “data theft.”

DeepSeek has not yet responded publicly, and the debate has split into two extremes: zealous fans have hailed DeepSeek-R1 as a “national-destiny-level” innovation, while some tech practitioners question DeepSeek’s ultra-low training costs, its distillation-based training methods, and so on, arguing that these innovations are overhyped.

Did DeepSeek “steal” from OpenAI? It’s more a case of the thief crying “stop thief”

Almost from the moment DeepSeek took off, Silicon Valley AI giants including OpenAI and Microsoft spoke out publicly, focusing their complaints on DeepSeek’s data. David Sacks, the U.S. government’s AI and crypto czar, also stated publicly that DeepSeek had “absorbed” knowledge from ChatGPT through a technique called distillation.

OpenAI told the Financial Times that it had found signs that DeepSeek had “distilled” ChatGPT, which it said violated OpenAI’s terms of use. However, OpenAI provided no specific evidence.

In fact, the accusation does not hold up.

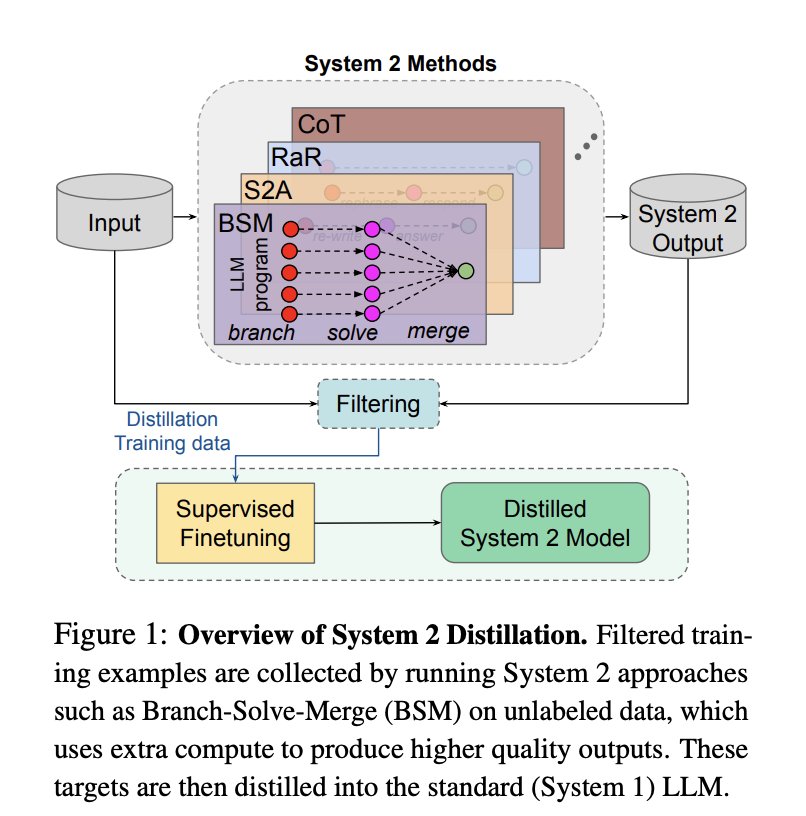

Distillation is a standard large-model training technique. It typically happens during training: the outputs of a larger, more powerful model (the teacher model) are used to help a smaller model (the student model) learn to perform better. On specific tasks, the smaller model can then achieve similar results at a lower cost.

Distillation is not plagiarism. In layman’s terms, it is like having a teacher work through every problem and compile perfect solution notes. The notes contain not just the answers but the best methods for reaching them; an ordinary student (the small model) only needs to study these notes, produce its own answers, and check them against the notes to see whether its steps match the teacher’s reasoning.
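To make the teacher/student idea concrete, here is a minimal sketch of the soft-target loss from the 2015 paper by Hinton, Vinyals and Dean: the student is trained to match the teacher’s temperature-softened output probabilities as well as the correct label. The logits, the temperature of 2.0, the 0.5 weighting, and all function names are illustrative assumptions; distillation of large language models usually operates on whole generated sequences rather than a single three-class prediction.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for two discrete distributions of the same length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, label, temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target term (match the teacher) and a hard-label term."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # Scaling by T^2 keeps the soft-target gradients comparable in size as T changes.
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    # Ordinary cross-entropy against the ground-truth label at T = 1.
    hard_loss = -math.log(softmax(student_logits)[label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


teacher = [3.0, 1.0, 0.2]        # hypothetical teacher logits over 3 classes
close_student = [2.8, 1.1, 0.3]  # a student that already resembles the teacher
far_student = [0.2, 1.0, 3.0]    # a student that disagrees with the teacher
print(distillation_loss(close_student, teacher, label=0))
print(distillation_loss(far_student, teacher, label=0))
```

Raising the temperature spreads probability onto the teacher’s “wrong” classes, which is how the student learns more than the bare answer, much like studying the full solution notes rather than an answer key.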

DeepSeek’s most prominent contribution is that it relies more on unsupervised learning in this process, that is, letting the machine provide its own feedback and reducing reliance on human feedback (as in RLHF). The most direct result is a dramatic drop in training cost, which is the source of many of the doubts.

The DeepSeek-V3 paper states the size of the cluster used to train V3: 2,048 H800 chips. Based on market prices, many have estimated the cost at about US$5.5 million, only a small fraction of what models from Meta, Google, and others cost to train.

Note, however, that DeepSeek stated in the paper that this figure covers only the cost of the final official training run and excludes earlier equipment, personnel, and trial-and-error costs.
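The US$5.5 million figure can be reproduced from the GPU-hour breakdown in the DeepSeek-V3 technical report, which prices H800 time at an assumed rental rate of US$2 per GPU hour. The sketch below redoes that arithmetic. The wall-clock estimate is an idealization that assumes all 2,048 GPUs run at full utilization, and none of these numbers include the excluded research and staffing costs.

```python
# Final-run GPU hours as itemized in the DeepSeek-V3 technical report,
# in thousands of H800 GPU hours.
GPU_HOURS_THOUSANDS = {
    "pre-training": 2664,
    "context extension": 119,
    "post-training": 5,
}
PRICE_PER_GPU_HOUR_USD = 2.0  # rental price assumed in the report, not an invoice
CLUSTER_SIZE = 2048           # H800 GPUs in the training cluster

total_gpu_hours = sum(GPU_HOURS_THOUSANDS.values()) * 1000
cost_usd = total_gpu_hours * PRICE_PER_GPU_HOUR_USD
# Idealized wall-clock time: assumes every GPU is busy for the whole run.
wall_clock_days = total_gpu_hours / CLUSTER_SIZE / 24

print(f"GPU hours: {total_gpu_hours:,}")              # GPU hours: 2,788,000
print(f"Estimated cost: ${cost_usd:,.0f}")            # Estimated cost: $5,576,000
print(f"Idealized duration: {wall_clock_days:.1f} days")  # Idealized duration: 56.7 days
```

Because the price is a rental assumption applied only to the last run, the result says nothing about the total spent on hardware, staff, or failed experiments.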

Distillation is nothing new in AI, and many model makers have disclosed their own distillation work. Meta, for example, has described how it distilled its models: with Llama 2, it used a larger, smarter model to generate data that included reasoning processes and methods, then used that data to fine-tune its own smaller reasoning model.

△ Source: Meta FAIR
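Distillation of this kind works on generated text rather than on output probabilities: the teacher writes out reasoning and answers, and the student is later fine-tuned on them. The sketch below covers only the data-collection half, under stated assumptions: `teacher` is a hypothetical callable standing in for a large model, and keeping a trace only when its final answer matches a reference is a common filtering practice assumed here, not a detail taken from Meta’s description.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

# A teacher maps a prompt to (reasoning_trace, final_answer). In practice this
# would wrap a call to a large model; here it is a hypothetical placeholder.
Teacher = Callable[[str], Tuple[str, str]]


@dataclass
class DistillExample:
    prompt: str
    reasoning: str
    answer: str


def build_distillation_set(
    tasks: Iterable[Tuple[str, str]],
    teacher: Teacher,
    samples_per_prompt: int = 4,
) -> List[DistillExample]:
    """Collect teacher reasoning traces, keeping only those whose answer matches the reference."""
    dataset: List[DistillExample] = []
    for prompt, reference in tasks:
        for _ in range(samples_per_prompt):
            reasoning, answer = teacher(prompt)
            if answer.strip() == reference.strip():
                dataset.append(DistillExample(prompt, reasoning, answer))
                break  # one verified trace per prompt is enough for this sketch
    return dataset


def toy_teacher(prompt: str) -> Tuple[str, str]:
    """Stand-in teacher that always adds the two numbers, so it gets '*' prompts wrong."""
    a, b = (int(x) for x in prompt.replace("*", "+").split("+"))
    return f"Add {a} and {b} to get {a + b}.", str(a + b)


tasks = [("2+3", "5"), ("10+7", "17"), ("4*5", "20")]
examples = build_distillation_set(tasks, toy_teacher)
for ex in examples:
    print(ex.prompt, "->", ex.answer)  # prints "2+3 -> 5", then "10+7 -> 17"
```

The surviving records would then serve as supervised fine-tuning data for the smaller student model. Note how uniform the generated traces are: every one follows the teacher’s single template, a small-scale picture of the lack of diversity practitioners complain about.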

But distillation also has its drawbacks.

A practitioner working on large-scale AI applications told Intelligent Emergence that distillation can quickly raise a model’s capabilities, but the downside is that the data generated by the teacher model is too clean and lacks diversity. A model trained on such data ends up like a standardized “pre-made dish,” and its ability will not exceed the teacher’s.

The more serious problem is that hallucinations may get worse. Because the small model to some extent imitates only the surface of the large model, it struggles to grasp the logic underneath, which can easily degrade its performance on new tasks.

So if a model is to have its own character, AI engineers need to intervene from the data stage onward: the choice of data, data mix, and training method will make the final model very different.

Typical examples are OpenAI and Anthropic, which were among the first Silicon Valley companies to build large models. Neither had ready-made models to distill from; both instead crawled and learned directly from the public internet and datasets.

Their different learning paths have also given the two companies’ models noticeably different styles today: ChatGPT is more like a diligent science-and-engineering student, good at solving all kinds of problems in life and work, while Claude leans toward the humanities. It has a strong reputation for writing, yet is no weaker at coding.

Another irony in OpenAI’s accusation is that it rests on a vague clause, even though OpenAI itself has done something similar.

At its founding, OpenAI was an open-source-oriented organization, but after GPT-4 it moved to closed source. OpenAI’s training crawled nearly the entire open internet, so after going closed-source it also became mired in copyright disputes with news outlets and publishers.

OpenAI’s “distillation” accusation against DeepSeek has been mocked as “the thief crying stop thief” because neither OpenAI (for o1) nor DeepSeek (in the R1 paper) disclosed the details of its data preparation. The question remains a Rashomon.

What’s more, DeepSeek-R1 was released under the MIT license, one of the most permissive open-source licenses. It allows commercial use and distillation, and DeepSeek also released six distilled small models that users can deploy directly on phones and PCs. It is a sincere act of giving back to the open-source community.

On February 5, Tanishq Mathew Abraham, former research director at Stability AI, wrote a dedicated article arguing that the accusation falls into a gray area. For one, OpenAI has produced no evidence that DeepSeek distilled GPT directly. One possible scenario he suggested is that DeepSeek found datasets generated with ChatGPT (many are available), which OpenAI does not explicitly prohibit.

Is distillation the yardstick for whether a company is pursuing AGI?

In public discourse, many people now treat “did it distill?” as the test of whether a company is plagiarizing or genuinely pursuing AGI, which is far too arbitrary.

DeepSeek’s work has reignited the concept of “distillation”, a technology that in fact emerged nearly a decade ago.

In 2015, the paper “Distilling the Knowledge in a Neural Network” by Geoffrey Hinton, Oriol Vinyals, and Jeff Dean formally proposed “knowledge distillation” for neural networks, and the technique later became standard practice for large models.

For model makers that focus on specific domains and tasks, distillation is in fact a more realistic path.

An AI practitioner told Intelligent Emergence that it is almost an open secret that nearly every large-model maker in China uses distillation. “Data on the public internet is almost exhausted, and it is hard to say that even a big company can easily bear the cost of pre-training and data annotation from scratch.”

One exception is ByteDance. With the recently released Doubao 1.5 Pro, ByteDance stated explicitly that “data generated by any other model has never been used in the training process, and it will not take the shortcut of distillation,” signaling its determination to pursue AGI.

Big companies choose not to distill for practical reasons, such as avoiding later compliance disputes. With closed-source models, this also builds certain barriers around model capabilities. According to Intelligent Emergence, ByteDance’s data-annotation costs have already reached Silicon Valley levels, up to $200 per item. Such high-quality data has to be labeled by domain experts, such as people with master’s degrees, doctorates, or higher.

For most participants in the AI field, distillation and other engineering methods alike are essentially explorations of the limits of the Scaling Law. They are a necessary condition for pursuing AGI, not a sufficient one.

In the first two years of the large-model boom, the Scaling Law was usually understood roughly as “brute force works miracles”: piling on compute and parameters would make intelligence emerge, with the emphasis mostly on the pre-training stage.
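For reference, one widely cited quantitative form of the pre-training Scaling Law comes from DeepMind’s Chinchilla study (Hoffmann et al., 2022). It models the loss $L$ of a model with $N$ parameters trained on $D$ tokens as shown below, where $E$ is the irreducible loss and $A$, $B$, $\alpha$, $\beta$ are constants fitted to experiments; training compute is commonly approximated as $C \approx 6ND$ FLOPs. This is one formulation among several, included only as an illustration of “more compute, parameters, and data buy lower loss, with diminishing returns.”

$$
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}, \qquad C \approx 6ND
$$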

Beneath today’s heated debate over “distillation” runs a deeper thread: the shift in the large-model development paradigm. The Scaling Law still holds, but its focus has moved from pre-training to post-training and inference.

△ Source: Column by Zhang Junlin, PhD, Institute of Software, Chinese Academy of Sciences

OpenAI’s o1, released in September 2024, is regarded as the marker of the Scaling Law’s shift toward post-training and inference, and it remains the world’s leading reasoning model. The problem is that OpenAI has never disclosed its training methods or details, and usage costs remain high: o1 pro costs as much as US$200 per month, and inference is still slow, which is widely seen as a major shackle on AI application development.

During this period, most of the AI field has been working to reproduce o1’s results while also cutting inference costs so that such models can be used in more scenarios. DeepSeek’s milestone lies not only in drastically shortening the time open-source models need to catch up with top closed-source ones (in only about three months it nearly matched o1 on multiple metrics); more importantly, it found the key tricks behind o1’s leap in capability and open-sourced them.

One major premise cannot be ignored: DeepSeek achieved this innovation by standing on the shoulders of giants. Treating engineering methods such as distillation simply as shortcuts is too narrow a view; this is, above all, a victory for open-source culture.

The ecosystem-wide benefits and open-source effects of DeepSeek appeared quickly. Shortly after its breakout, new work from a team including Fei-Fei Li, the “godmother of AI,” also went viral: using Google’s Gemini as the teacher model and a fine-tuned Alibaba Qwen2.5 as the student model, the team trained the reasoning model s1 through distillation and other methods for less than US$50, reportedly approaching the capabilities of DeepSeek-R1 and OpenAI o1.

Nvidia is another telling case. After DeepSeek-R1’s release, Nvidia’s market value plunged by about US$600 billion in a single day, the largest one-day loss of market value in history, but the stock rebounded strongly the next day, rising about 9%. The market still broadly expects R1 to drive strong demand for inference compute.

It is foreseeable that once players across the large-model field absorb R1’s capabilities, a wave of AI application innovation will follow.