By | Technological Whirlpool

At noon on February 18, Beijing time, Musk’s artificial intelligence startup xAI officially released its new-generation chatbot, Grok 3. Musk and his team claim that the advanced reasoning capabilities of the Grok 3 beta exceed those of existing artificial intelligence models.

Billed as the smartest AI on Earth, Grok 3 is a perfect illustration of what it means to be rich and willful: Musk used 200,000 GPUs to train it. So, can it really surpass DeepSeek, currently in the limelight, and the industry pioneer OpenAI?

How strong is the money-burning Grok 3?

During the Grok 3 livestream, Musk and his colleagues described how the model was trained. Last year, Musk revealed that Grok 3 was being trained on 100,000 H100s, making it the first model to reach a training cluster of that size. Today’s launch event revealed that by the 92nd day of training, the cluster had expanded to 200,000 cards.

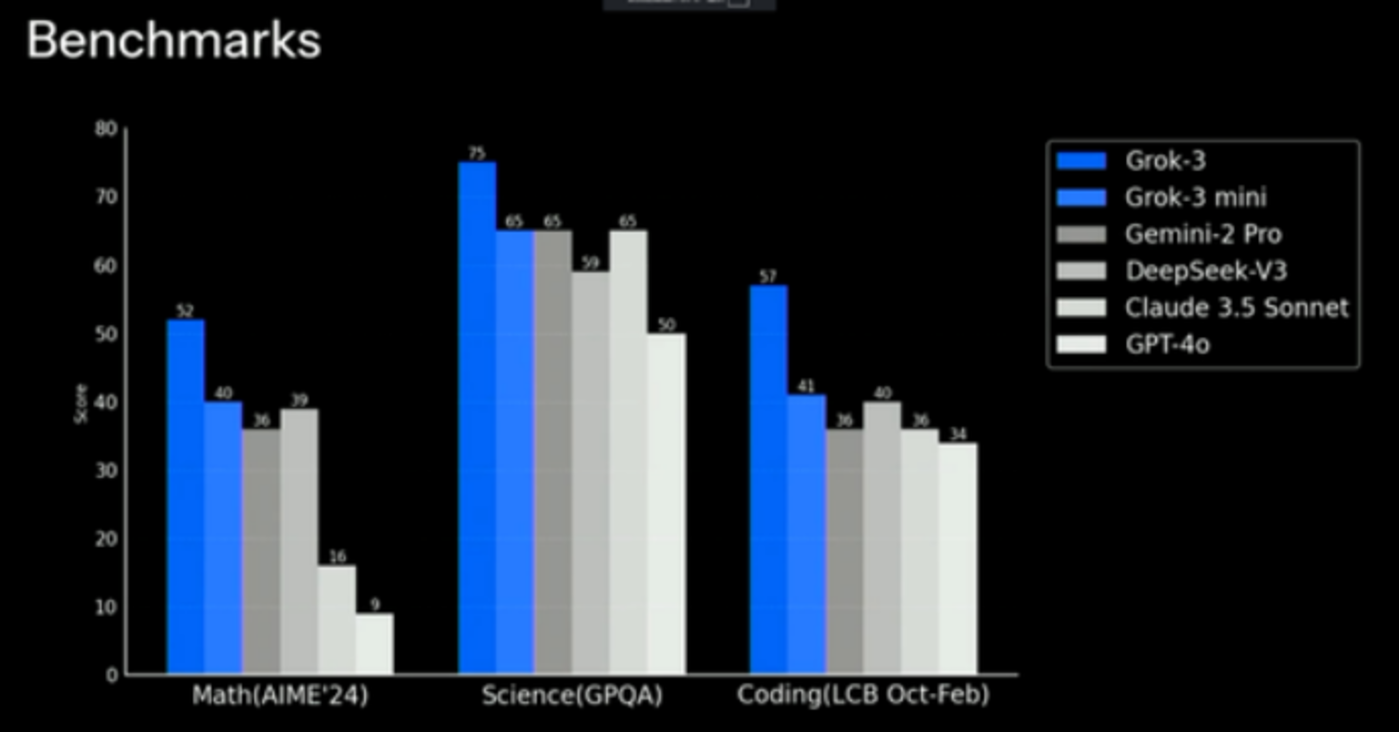

Grok 3’s strength does show that, at this stage, spending money is a huge advantage. This release of Grok 3 comes in two versions, full and mini, and on mathematics, science, and coding benchmarks it outperforms non-reasoning models such as GPT-4o and DeepSeek-V3.

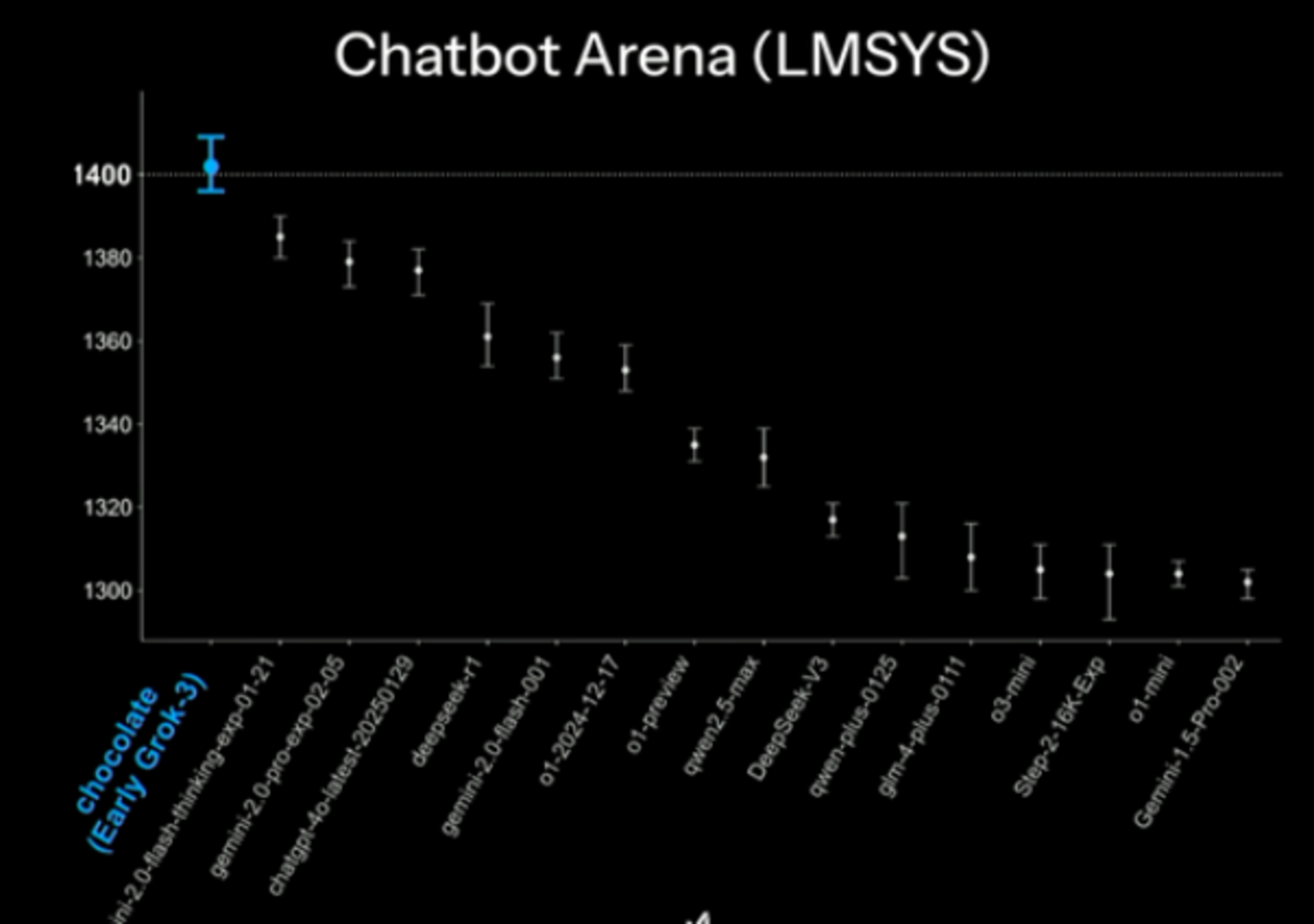

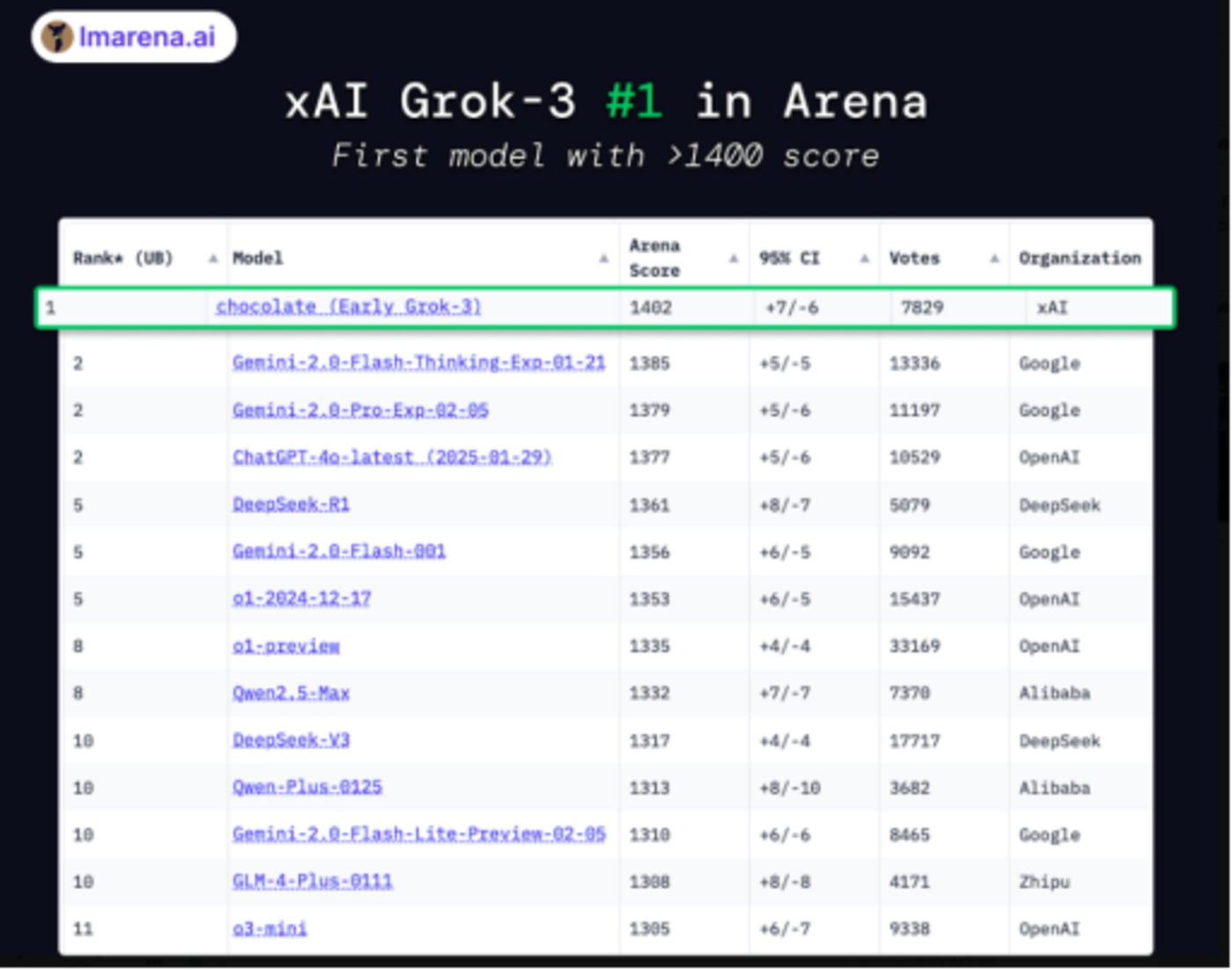

The performance of Grok-3 mini is generally ahead of or comparable to other closed-source and open-source models. On the well-known LMSYS Arena large-model leaderboard, Grok-3 ranks first with an Elo score of over 1400. No other model matches it, and its lead can fairly be called a clear gap.

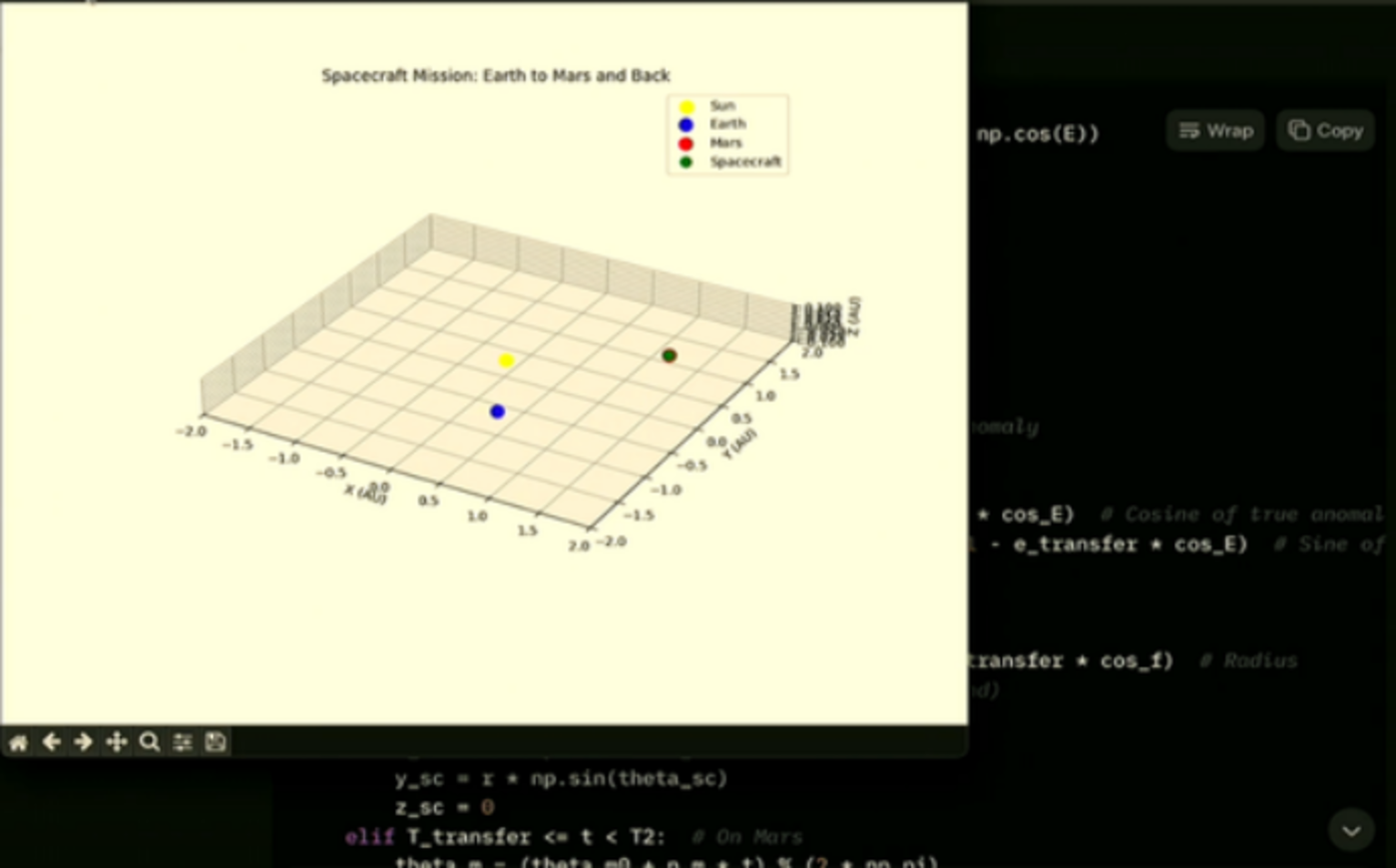

The xAI team also showed Grok 3 performing some interesting tasks, such as planning a spacecraft mission from Earth to Mars. Grok 3 generated an animated 3D trajectory for the launch (that is, a feasible path from Earth to Mars and back to Earth), a task that requires it to understand some complex physics.

Grok 3 also demonstrated its potential in automated game development. The xAI team asked Grok 3 to create, on the spot, a new game that combines “Tetris” and “Bejeweled”. The Python script that Grok 3 generated defines the game’s constants, colors, block shapes, and other elements, and presents a distinctive gameplay: when at least three blocks of the same color are connected, a gravity mechanism is triggered and the blocks are eliminated, much as in “Bejeweled”.
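The clear-and-fall rule described above can be sketched in a few lines of Python. This is not the script Grok 3 produced, which was not published in this article; it is a minimal illustration under stated assumptions: the board is a list of rows, `None` marks an empty cell, only straight horizontal or vertical runs count as "connected", and the names `find_matches`, `apply_gravity`, and `clear_and_settle` are hypothetical.

```python
from typing import List, Optional, Set, Tuple

Grid = List[List[Optional[str]]]  # None marks an empty cell (assumed layout)


def find_matches(grid: Grid, min_run: int = 3) -> Set[Tuple[int, int]]:
    """Return cells in a horizontal or vertical run of >= min_run same-colored blocks."""
    rows, cols = len(grid), len(grid[0])
    matched: Set[Tuple[int, int]] = set()
    for dr, dc in ((0, 1), (1, 0)):  # scan rightwards, then downwards
        for r in range(rows):
            for c in range(cols):
                color = grid[r][c]
                if color is None:
                    continue
                # Skip cells that are not the first cell of their run.
                pr, pc = r - dr, c - dc
                if pr >= 0 and pc >= 0 and grid[pr][pc] == color:
                    continue
                run = []
                rr, cc = r, c
                while rr < rows and cc < cols and grid[rr][cc] == color:
                    run.append((rr, cc))
                    rr += dr
                    cc += dc
                if len(run) >= min_run:
                    matched.update(run)
    return matched


def apply_gravity(grid: Grid) -> None:
    """Let blocks fall straight down into empty cells, column by column."""
    rows, cols = len(grid), len(grid[0])
    for c in range(cols):
        stack = [grid[r][c] for r in range(rows) if grid[r][c] is not None]
        padding = [None] * (rows - len(stack))
        for r, value in enumerate(padding + stack):
            grid[r][c] = value


def clear_and_settle(grid: Grid) -> int:
    """Clear matches and apply gravity until the board is stable; return blocks removed."""
    removed = 0
    while True:
        matched = find_matches(grid)
        if not matched:
            return removed
        for r, c in matched:
            grid[r][c] = None
        removed += len(matched)
        apply_gravity(grid)
```

Calling `clear_and_settle` on a board repeats the clear step after every fall, so a cleared row can drop blocks into a new run that is cleared in turn, which is the chain-reaction feel of “Bejeweled”.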

In addition, Grok 3 includes a feature called Big Brain, a reasoning mode that allows the model to think more deeply when processing queries. Musk pointed out that 17 months ago, the original Grok model could hardly solve high school problems, but that it has since improved greatly, and he joked that Grok is now ready for college.

Cost efficiency or heavy spending: which path should large models take?

As is widely known, DeepSeek has significantly reduced its reliance on Nvidia’s high-end GPUs through distinctive algorithm optimization, architectural design, and efficient resource utilization. This caused Nvidia’s share price to plummet by 16.97%, wiping approximately US$592.658 billion off its market value in a single day.
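As a quick sanity check on those two figures, the short Python sketch below derives the market value they jointly imply. It assumes the 16.97% drop and the US$592.658 billion loss refer to the same day and the same share count; the function name `implied_market_cap` is illustrative only.

```python
def implied_market_cap(value_lost: float, drop_fraction: float) -> tuple:
    """Return (value before the drop, value after the drop) implied by a loss and a percentage fall."""
    before = value_lost / drop_fraction  # loss = before * drop_fraction
    after = before - value_lost
    return before, after


# Figures quoted in the article, in billions of US dollars.
before, after = implied_market_cap(value_lost=592.658, drop_fraction=0.1697)
print(f"Implied value before the drop: about ${before:,.0f} billion")
print(f"Implied value after the drop:  about ${after:,.0f} billion")
```

Under those assumptions the two numbers imply a pre-drop market value of roughly US$3.5 trillion.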

DeepSeek claims that its model training cost is only 1/5 to 1/10 of that of comparable models. In other words, even while loosening the constraints of NVIDIA hardware, it still shows capabilities close to OpenAI’s o1 in reasoning performance and other respects, and surpasses it in some areas.

In contrast, Musk’s xAI not only committed 200,000 GPUs to Grok 3, but also built a data center in just four months in order to launch the model as soon as possible. Such a huge investment bought only a 41-point improvement on the lmArena leaderboard. Is this really worth it?
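To put a 41-point gap in perspective, the sketch below converts a rating difference into an expected head-to-head win rate. It assumes the leaderboard's scores behave like conventional Elo ratings, with a logistic curve on a 400-point scale; the article does not say which model the 41 points are measured against, and the function name `expected_win_rate` is hypothetical.

```python
def expected_win_rate(rating_gap: float, scale: float = 400.0) -> float:
    """Expected score of the higher-rated model under the standard Elo logistic formula."""
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / scale))


# A 41-point lead, as quoted in the article.
print(f"Expected win rate at +41 points: {expected_win_rate(41):.1%}")
```

Under that assumption, a 41-point lead means the higher-rated model would be preferred in roughly 56% of pairwise comparisons, against 50% for two evenly matched models.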

In fact, Musk’s seemingly reckless spending may be a deliberate plan. Today, no AI training can do without GPUs, but DeepSeek takes the careful-budgeting route, using algorithm optimization to achieve 90% of the performance at 1/10 of the industry’s cost. Musk’s 200,000 GPUs serve not only to launch Grok 3 quickly, but also to keep open the option of rapid iteration in the future.

Final thoughts

Admittedly, Musk’s Grok 3 does overtake DeepSeek, but it looks more like an enhanced version than a model with a commanding lead. We look forward to seeing DeepSeek shock the world again with its high cost efficiency.