Article source: QbitAI

Image source: Generated by AI

Just now, StepFun teamed up with Geely Automobile Group to open-source two multimodal large models!

The two new models are:

- Step-Video-T2V, the open-source video generation model with the largest parameter count in the world

- Step-Audio, the industry's first product-grade open-source voice interaction model

The multimodal powerhouse has started open-sourcing its multimodal models. Step-Video-T2V is released under the MIT license, one of the most open and permissive open-source licenses, so it can be freely modified and used commercially.

(As usual, the GitHub, Hugging Face, and other direct links are at the end of the article.)

During development, the two companies pooled their strengths in computing power, algorithms, and scenario-based training, "significantly enhancing the performance of the multimodal large models."

According to the official technical reports, both open-source models perform well on benchmarks, outperforming comparable open-source models from China and abroad.

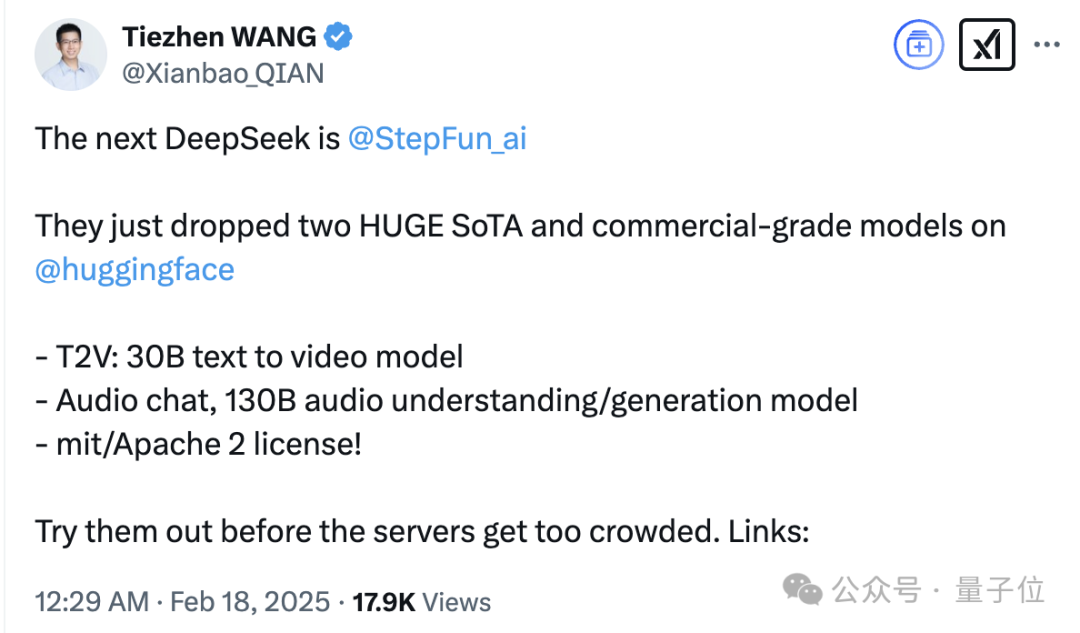

Hugging Face's official account also reposted high praise from the head of Hugging Face China.

The key phrases: "the next DeepSeek" and "HUGE SoTA".

Oh, yeah?

In this article, QbitAI digs into the technical reports and runs hands-on tests to see whether the models live up to the hype.

QbitAI has confirmed that both new open-source models are already available in the Yuewen app, so anyone can try them.

The multimodal powerhouse open-sources its multimodal models for the first time

Step-Video-T2V and Step-Audio are StepFun's first open-source multimodal models.

Step-Video-T2V

Let’s first take a look at the video generation model Step-Video-T2V.

With 30B parameters, it is the largest open-source video generation model known to date, and it natively supports bilingual input in Chinese and English.
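To put "30B parameters" in perspective, here is a back-of-envelope estimate of how much memory the weights alone would occupy at common numeric precisions. This is our own arithmetic, not a StepFun figure; it assumes 1 GB = 10^9 bytes and ignores activations and any other components needed at inference time, so real memory requirements are higher.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed to store the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


PARAMS = 30e9  # 30B parameters, as stated in the article

# Assumption: common storage precisions; actual deployments may differ.
for dtype, nbytes in [("fp32", 4), ("bf16/fp16", 2), ("int8", 1)]:
    print(f"{dtype:>10}: {weight_memory_gb(PARAMS, nbytes):.0f} GB")
```

At half precision that is roughly 60 GB for the weights alone, which is why a model of this size typically needs high-memory GPUs or multi-GPU setups.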

According to StepFun, Step-Video-T2V has four major technical features:

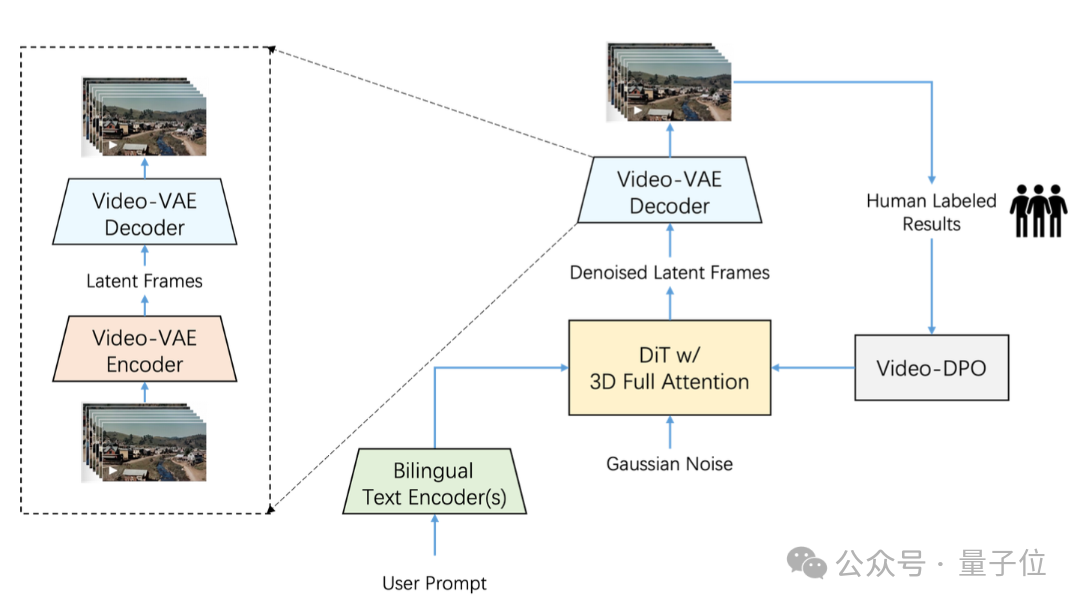

First, it can directly generate videos of up to 204 frames at 540P resolution while keeping the content highly consistent and information-dense.

Second, StepFun designed and trained a high-compression Video-VAE specifically for video generation. While preserving reconstruction quality, it compresses video 16×16 in the spatial dimensions and 8× in the temporal dimension.

Most VAEs in use today compress at 8×8×4. For the same number of frames, Video-VAE therefore compresses an additional 8×, which, according to StepFun, improves both training and generation efficiency by 64×.
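The "additional 8×" follows directly from multiplying the three downsampling factors. The article does not explain how 8× fewer latent positions becomes a 64× efficiency gain; one plausible reading, which is our assumption, is that compute is dominated by full self-attention over latent tokens, whose cost grows with the square of the token count. The sketch below counts latent positions only and ignores latent channel counts, which also affect cost.

```python
def compression_factor(spatial_h: int, spatial_w: int, temporal: int) -> int:
    """Total reduction in latent positions for an H x W x T VAE downsampling."""
    return spatial_h * spatial_w * temporal


step_vae = compression_factor(16, 16, 8)   # Video-VAE, per the article
typical_vae = compression_factor(8, 8, 4)  # "8x8x4" typical VAE, per the article

extra = step_vae // typical_vae            # how many times fewer latent positions
print(step_vae, typical_vae, extra)        # 2048 256 8

# Assumption: if cost is dominated by full self-attention, it scales with
# (number of tokens)^2, so 8x fewer tokens means about 8^2 = 64x less attention work.
print(extra ** 2)                          # 64
```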

Third, StepFun carried out in-depth system-level optimization of the DiT model's hyperparameters, architecture, and training efficiency to keep Step-Video-T2V's training efficient and stable.

Fourth, the technical report describes the complete training strategy in detail, covering pre-training and post-training, including the training tasks, learning objectives, and data construction and filtering methods at each stage.

In addition, Step-Video-T2V applies Video-DPO (video preference optimization) in the final stage of training. This is a preference-based optimization method for video generation, in the same family as reinforcement learning from human feedback, that further improves video quality and makes the generated videos more plausible and stable.
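The article does not give Video-DPO's formula. For reference, here is the standard DPO loss for a single preference pair, as introduced for language models by Rafailov et al. (2023). Diffusion-model variants (such as Diffusion-DPO) replace the log-likelihood terms with denoising-error terms, and we do not know which variant StepFun uses, so treat this only as an illustration of the general idea, not as StepFun's implementation. The parameter names and the default `beta` are our own choices.

```python
import math


def dpo_loss(logp_policy_chosen: float, logp_policy_rejected: float,
             logp_ref_chosen: float, logp_ref_rejected: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one (chosen, rejected) pair; illustrative only."""
    # Implicit reward margin: how much more the trained policy (relative to a
    # frozen reference model) prefers the chosen sample over the rejected one.
    margin = ((logp_policy_chosen - logp_ref_chosen)
              - (logp_policy_rejected - logp_ref_rejected))
    z = beta * margin
    # -log(sigmoid(z)) == log(1 + exp(-z)), computed in a numerically stable way.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))
```

When the policy matches the reference model the loss is log 2; it falls as the policy shifts probability toward the human-preferred sample and rises when it shifts toward the rejected one.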

The net effect is smoother motion, richer detail, and more accurate instruction following in the generated videos.

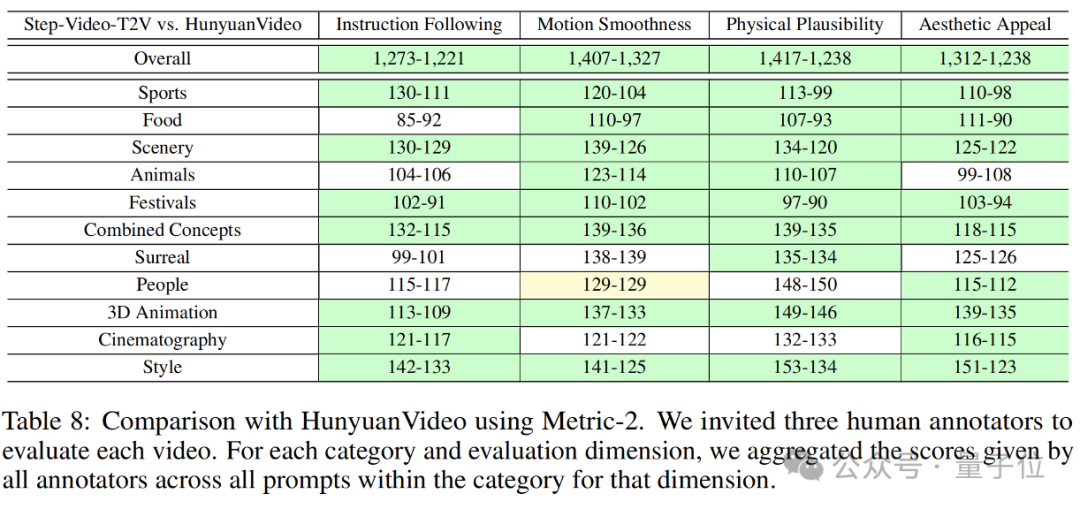

To comprehensively evaluate open-source video generation models, StepFun also released Step-Video-T2V-Eval, a new benchmark dataset for text-to-video quality evaluation.

This dataset is also open source.

It contains 128 Chinese prompts from real users and evaluates generated-video quality across 11 content categories, including sports, scenery, animals, compositional concepts, and surrealism.
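The article does not describe how results on the 128 prompts are scored. As a purely illustrative sketch, assuming each prompt yields a human judgment of "win", "tie", or "loss" against a baseline model, per-category results could be tallied as below. The record format, the outcome labels, and the function name are hypothetical, not part of Step-Video-T2V-Eval.

```python
from collections import Counter, defaultdict

OUTCOMES = ("win", "tie", "loss")  # hypothetical judgment labels


def per_category_rates(judgments):
    """Tally (category, outcome) pairs into per-category win/tie/loss fractions."""
    counts = defaultdict(Counter)
    for category, outcome in judgments:
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome!r}")
        counts[category][outcome] += 1
    rates = {}
    for category, c in counts.items():
        total = sum(c.values())
        rates[category] = {k: c[k] / total for k in OUTCOMES}
    return rates


# Hypothetical judgments, for illustration only (not real benchmark data):
example = [("sports", "win"), ("sports", "tie"), ("animals", "loss")]
print(per_category_rates(example))
```

Reporting per category rather than one overall score makes it easier to see where a model is strong (say, scenery) and where it still struggles (say, complex motion).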

The figure below shows Step-Video-T2V's results on Step-Video-T2V-Eval:

As the results show, Step-Video-T2V surpasses the previous best open-source video models in instruction following, motion smoothness, physical plausibility, and aesthetics.

This means researchers across the video generation field can now build their work on this new, strongest base model.

As for actual results, here is StepFun's official showcase:

Enough talk; on to our hands-on tests.

Following the order of the official showcase, the first test is whether Step-Video-T2V can handle complex movements.

Earlier video generation models would often produce bizarre frames when generating complex movements such as ballet, ballroom dance, Chinese dance, rhythmic gymnastics, karate, and martial arts.

Think of a third leg suddenly appearing or arms passing through each other; it was genuinely unsettling.

To target this problem, we gave Step-Video-T2V the following prompt:

In an indoor badminton court, a fixed camera at eye level records a man playing badminton. The man, wearing a red short-sleeved shirt and black shorts and holding a badminton racket, stands in the middle of the green court. A net spans the court, dividing it into two halves. The man swings the racket and hits the shuttlecock to the other side. The lighting is bright and even, and the picture is clear.

The scene, character, camera, lighting, and movement all match the prompt.

Generating good-looking, realistic people was the second challenge QbitAI posed to Step-Video-T2V.

To be fair, current text-to-image models can already produce images of real-looking people that pass for genuine, at least as still frames and in local details.

In video, however, once a character moves, recognizable physical or logical flaws still appear.

So how does Step-Video-T2V fare?

Prompt: A man wearing a black suit, dark tie and white shirt, with scars on his face and a solemn expression. Close-up.

“There is no sense of AI.”

That was the unanimous verdict of the QbitAI editorial team after passing around the video of this handsome guy.

The "no AI feel" comes from his well-proportioned features, realistic skin texture, and clearly visible facial scars.

Beyond being realistic, he also avoids the vacant eyes and stiff expression that usually give AI-generated people away.

The two tests above both kept Step-Video-T2V on a fixed camera.

So how does it handle push-ins, pull-outs, and pans?

The third test checks Step-Video-T2V's command of camera movement, such as push-in/pull-out, panning, rotation, and tracking.

Ask it to rotate, and it rotates:

Not bad! It could shoulder a Steadicam and head to set as a master camera operator (just kidding).

After several rounds of testing, the generated results gave us our answer:

Consistent with the benchmark results, Step-Video-T2V excels at semantic understanding and instruction following.

It even handles basic on-screen text with ease:

Step-Audio

Meanwhile, the other open-source model, Step-Audio, is the industry's first product-grade open-source voice interaction model.

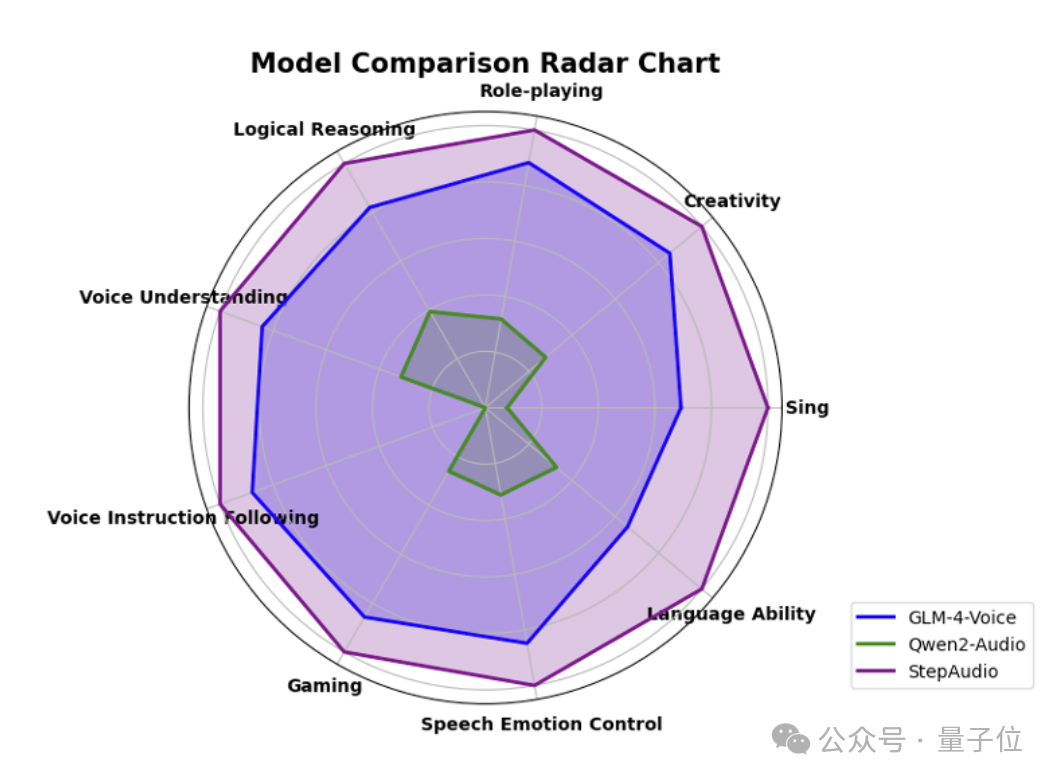

On StepEval-Audio-360, a multi-dimensional benchmark built and open-sourced by StepFun, Step-Audio achieved the best results in dimensions including logical reasoning, creativity, instruction control, language ability, role-playing, word games, and emotional value.

On five major public test sets, including LLaMA Questions and Web Questions, Step-Audio outperformed comparable open-source models in the industry, ranking first.

Its performance is particularly strong on HSK-6 (Chinese Proficiency Test, Level 6).

Here are our hands-on results:

According to StepFun, Step-Audio can generate speech with different emotions, dialects, languages, singing, and personalized styles as a scenario requires, and can hold natural, high-quality conversations with users.

The speech it generates is lifelike and emotionally intelligent, and it also supports high-quality voice cloning and role-playing.

In short, Step-Audio can meet application needs in industries such as film and entertainment, social networking, and gaming.

StepFun's open-source ecosystem is snowballing

How to put it? In a word: relentless.

StepFun is truly impressive, especially in its specialty, multimodal models:

Since their debut, StepFun's Step-series multimodal models have been regulars at the top of major authoritative benchmarks and arenas in China and abroad.

In the past three months alone, they have taken the top spot several times:

- On November 22 last year, on the latest large-model arena leaderboard, the multimodal understanding model Step-1V tied Gemini-1.5-Flash-8B-Exp-0827 in total score, ranking first among Chinese large models in the vision category.

- In January this year, the newly released Step-1o series took first place on the real-time multimodal leaderboard of OpenCompass, a Chinese large-model evaluation platform.

- On the same day, on the latest large-model arena leaderboard, the multimodal model Step-1o-vision took first place among Chinese large models in the vision category.

Second, StepFun's multimodal models not only perform well but also iterate quickly:

To date, StepFun has released 11 multimodal large models.

Last month it released six models in six days, covering language, speech, vision, and reasoning, further living up to its reputation as a multimodal powerhouse.

This month, it has open-sourced two more multimodal models.

If it keeps up this pace, it will keep proving its status as a "full-suite" multimodal player.

Backed by these strong multimodal capabilities, the Step API has been widely recognized and adopted by businesses and developers since 2024, building a large user base.

Among mass consumer brands, for example, Chabaidao has connected thousands of its stores nationwide to the multimodal understanding model Step-1V, exploring large-model applications in the tea beverage industry such as intelligent inspection and AIGC marketing.

Public data shows that, on average, millions of cups across hundreds of tea drinks reach consumers every day under the protection of large-model-powered intelligent inspection.

On average, Step-1V saves tea-shop supervisors 75% of their daily self-inspection and verification time, giving tea consumers more peace of mind and better service.

Among independent developers, for example, the viral AI app "Book of Stomach" and the AI mental-wellness app "Chat in the Forest" chose StepFun's multimodal model API after A/B testing most domestic models.

(Psst: because it delivered the highest paid conversion rate.)

Data shows that calls to the Step multimodal model API grew more than 45-fold in the second half of 2024.

Moreover, what StepFun is open-sourcing this time is exactly what it does best: multimodal models.

We note that, having built up its reputation and user base among businesses and developers, StepFun designed this open-source release with deeper downstream adoption in mind.

On the one hand, Step-Video-T2V uses the MIT license, one of the most open and permissive licenses, and can be freely modified and used commercially.

You could say it is "holding nothing back."

On the other hand, StepFun says it is "doing everything possible to lower the barrier to industry adoption."

Take Step-Audio. Unlike open-source solutions on the market that require redeployment and further development, Step-Audio is a complete real-time dialogue solution: a simple deployment is enough to start real-time conversations.

You get a full end-to-end experience right out of the box.

Through this series of moves, StepFun has built the beginnings of its own open-source technology ecosystem around its multimodal models, its trump card.

In this ecosystem, technology, creativity, and business value intertwine, jointly advancing multimodal technology.

And as Step models keep iterating, developers keep adopting them, and ecosystem partners keep contributing, the "snowball effect" of the Step ecosystem has begun and is growing.

China's open-source forces now stand shoulder to shoulder with the best, on strength alone

Not long ago, when people thought of the best in open-source large models, they thought of Meta's LLaMA and Albert Gu's Mamba.

Today, there is no doubt that China's open-source large models shine worldwide, rewriting stereotypes through sheer strength.

January 20, on the eve of the Spring Festival in the Year of the Snake, was a clash of titans among large models at home and abroad.

The biggest headline was DeepSeek-R1, released that day. Its reasoning performance rivals OpenAI o1 at only 1/3 of the cost.

Its impact was enormous: Nvidia lost US$589 billion (about RMB 4.24 trillion) in market value overnight, a record single-day loss for a U.S. stock.

More importantly, what propelled R1 to a height that excited hundreds of millions of people was, beyond its excellent reasoning and affordable price, its open-source nature.

It sent ripples far and wide. Even OpenAI, long mocked for no longer being "open," saw its CEO Sam Altman speak out publicly several times.

Altman said: "When it comes to open-weight AI models, we have been on the wrong side of history."

He added: “The world does need open source models that can provide a lot of value to people. I’m very happy that there are some excellent open source models in the world.”

Now, StepFun has become another trump card for open source.

And open source was the intent from the start.

StepFun says the purpose of open-sourcing Step-Video-T2V and Step-Audio is to promote the sharing and innovation of large-model technology and the inclusive development of artificial intelligence.

Right out of the gate, the models have proven their strength across multiple benchmarks.

At today's open-source model poker table, DeepSeek is strong in reasoning, StepFun is heavily invested in multimodality, and many other players keep advancing...

Their strength ranks among the best not just in open source but across the entire large-model field.

China's open-source power, having emerged, is now taking a further step.

Take StepFun's open-source release: it breaks new ground in multimodal technology and is changing how developers around the world choose models.

EleutherAI and many other players active in the open-source community proactively tested StepFun's models, saying: "Thanks to China for open source."

Wang Tiezhen, head of Hugging Face China, said outright that StepFun will be the next "DeepSeek."

From technological breakthroughs to an open ecosystem, China's large-model path is growing ever steadier.

That said, StepFun's dual open-source release may be just a footnote in the 2025 AI race.

On a deeper level, it demonstrates the technical confidence of China’s open source power and sends a signal:

In the future AI model world, China’s power will never be absent, nor will it lag behind others.

【Step-Video-T2V】

GitHub:

https://github.com/stepfun-ai/Step-Video-T2V

Hugging Face:

https://huggingface.co/stepfun-ai/stepvideo-t2v

ModelScope:

https://modelscope.cn/models/stepfun-ai/stepvideo-

Technical report:

https://arxiv.org/abs/2502.10248

Try it online:

https://yuewen.cn/videos

【Step-Audio】

GitHub:

https://github.com/stepfun-ai/Step-Audio

Hugging Face:

https://huggingface.co/collections/stepfun-ai/step-audio-67b33accf45735bb21131b0b

ModelScope:

https://modelscope.cn/collections/Step-Audio-a47b227413534a

Technical report:

https://github.com/stepfun-ai/Step-Audio/blob/main/assets/Step-Audio.pdf