Image source: Generated by AI

On February 18, Beijing time, Musk and the xAI team officially released the latest version of Grok, Grok3, in a live broadcast.

Even before the launch event, a steady stream of leaks and Musk’s round-the-clock promotion had pushed global expectations for Grok3 to unprecedented heights. A week earlier, while commenting on DeepSeek R1 during a livestream, Musk confidently declared that “xAI is about to launch a better AI model.”

According to the figures shown at the event, Grok3 outperformed all current mainstream models on math, science, and coding benchmarks. Musk even claimed that Grok 3 would be used for SpaceX Mars mission calculations in the future, and predicted that “within three years, a Nobel Prize-level breakthrough will be achieved.”

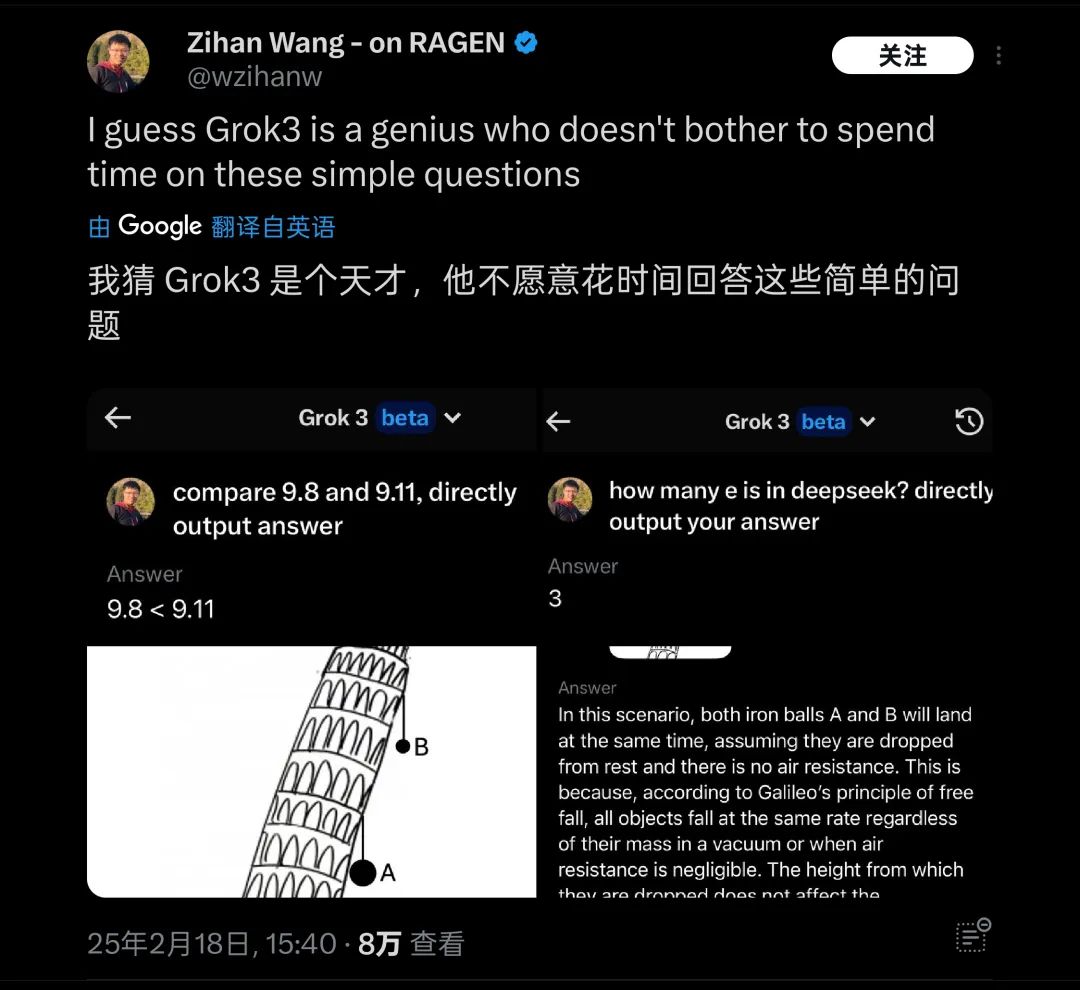

For now, though, these are only Musk’s claims. After the release, I tested the latest beta of Grok3 with a classic question used to trip up large models: “Which is bigger, 9.11 or 9.9?”

Unfortunately, when the question was asked without any added context or qualifiers, Grok3, billed as the smartest model available, still got it wrong.

Grok3 fails to correctly interpret the question | Photo source: Geek Park
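Part of what makes the question a trap is that the same pair of strings can be read two ways. As decimal numbers, 9.9 is larger; read as dotted version numbers (or chapter labels), 9.11 comes after 9.9. A minimal Python sketch of both readings follows; the helper names are hypothetical and only illustrate the ambiguity.

```python
from decimal import Decimal


def larger_as_decimal(a: str, b: str) -> str:
    """Compare as decimal numbers: 9.9 equals 9.90, which exceeds 9.11."""
    return a if Decimal(a) > Decimal(b) else b


def larger_as_version(a: str, b: str) -> str:
    """Compare as dotted version numbers: (9, 11) sorts after (9, 9)."""
    def parse(v: str) -> tuple[int, ...]:
        return tuple(int(part) for part in v.split("."))
    return a if parse(a) > parse(b) else b


print(larger_as_decimal("9.11", "9.9"))  # 9.9
print(larger_as_version("9.11", "9.9"))  # 9.11
```

With no extra context, the numeric reading is the natural default, and 9.9 is the answer the test expects.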

Once I posted this test, it quickly drew a lot of attention. Coincidentally, overseas users had been running similar tests, and Grok3 was found to stumble on other basic physics and math questions too, such as “Which of the two balls dropped from the Leaning Tower of Pisa lands first?” This earned it the mocking nickname “a genius unwilling to answer simple questions.”

Grok3 “flopped” on many common-sense questions in real-world testing | Photo source: X

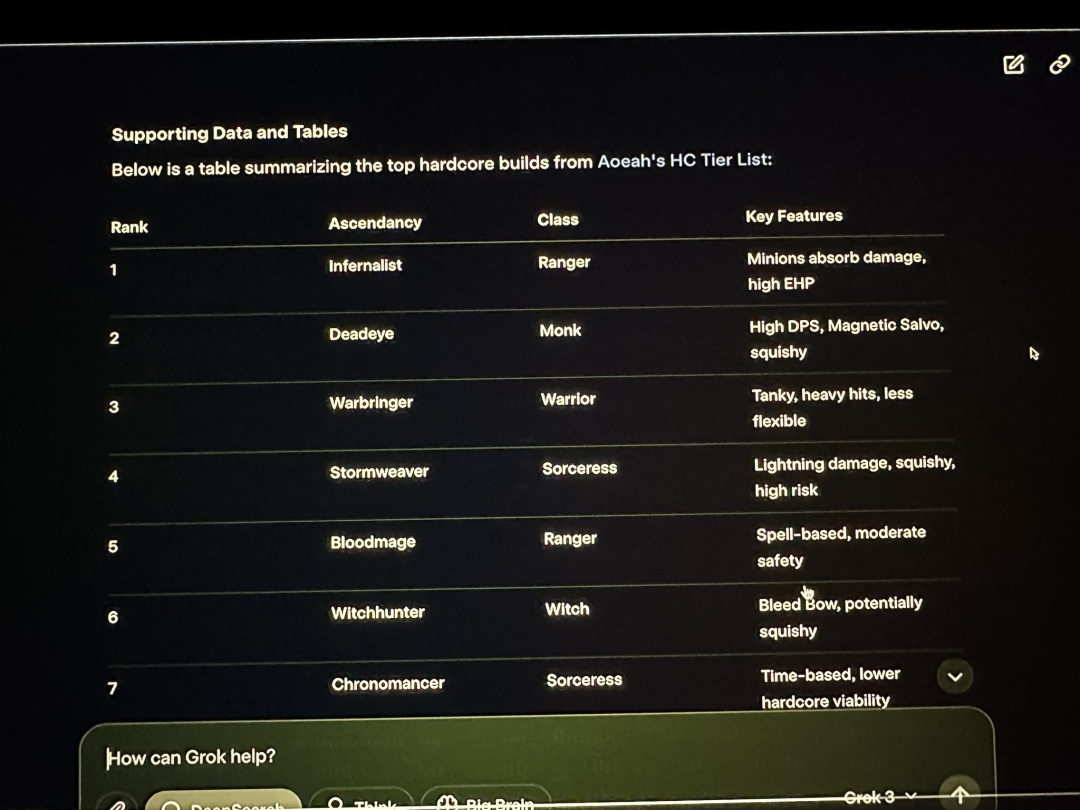

Beyond the basic questions users tripped it up on, during the xAI livestream Musk demonstrated Grok3 analyzing the character classes and Ascendancy effects in Path of Exile 2, a game he claims to play frequently, yet most of the answers Grok3 gave were wrong. Musk did not notice these obvious errors during the broadcast.

The data Grok3 gave during the livestream also contained numerous errors | Photo source: X

The blunder not only gave overseas users fresh evidence for their jokes that Musk hires a “boosting service” to play his games for him, but also raised new doubts about Grok3’s reliability in practical applications.

Whatever such a “genius” is actually capable of, its reliability will inevitably be questioned in extremely complex applications such as future Mars exploration missions.

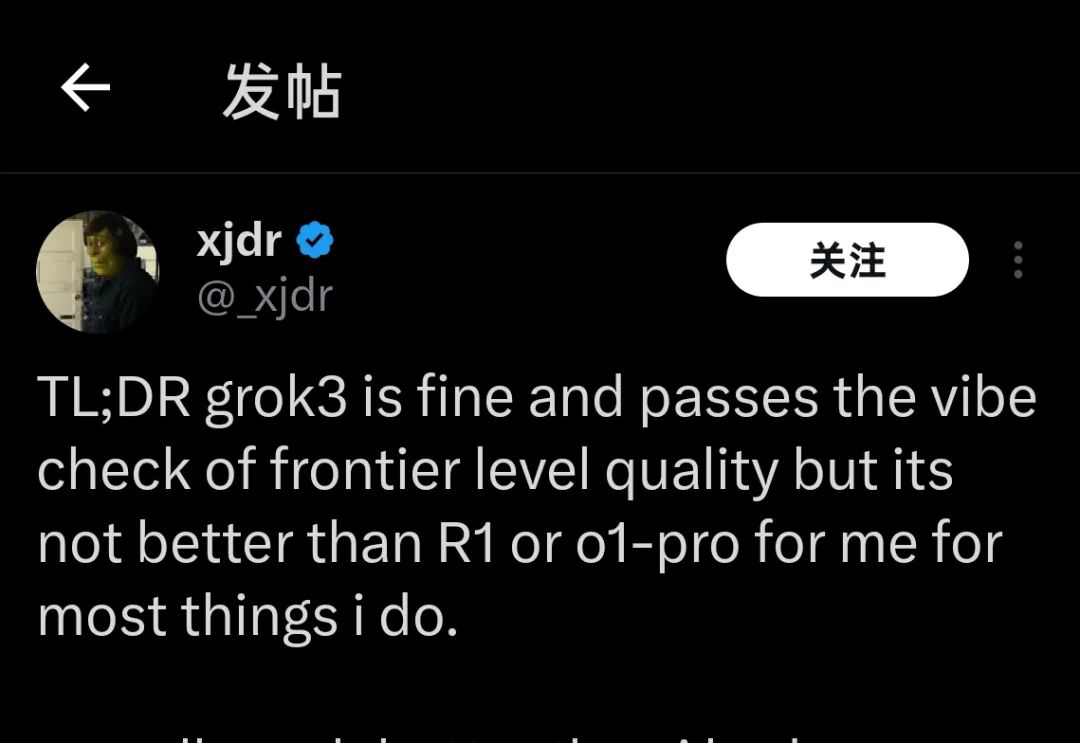

So far, many model evaluators, both those who got early access to Grok3 a few weeks ago and those who only spent a few hours with it yesterday, have reached the same conclusion about its current performance:

“Grok3 is good, but it’s no better than R1 or o1-Pro”

“Grok3 is good, but it’s no better than R1 or o1-Pro” | Photo source: X

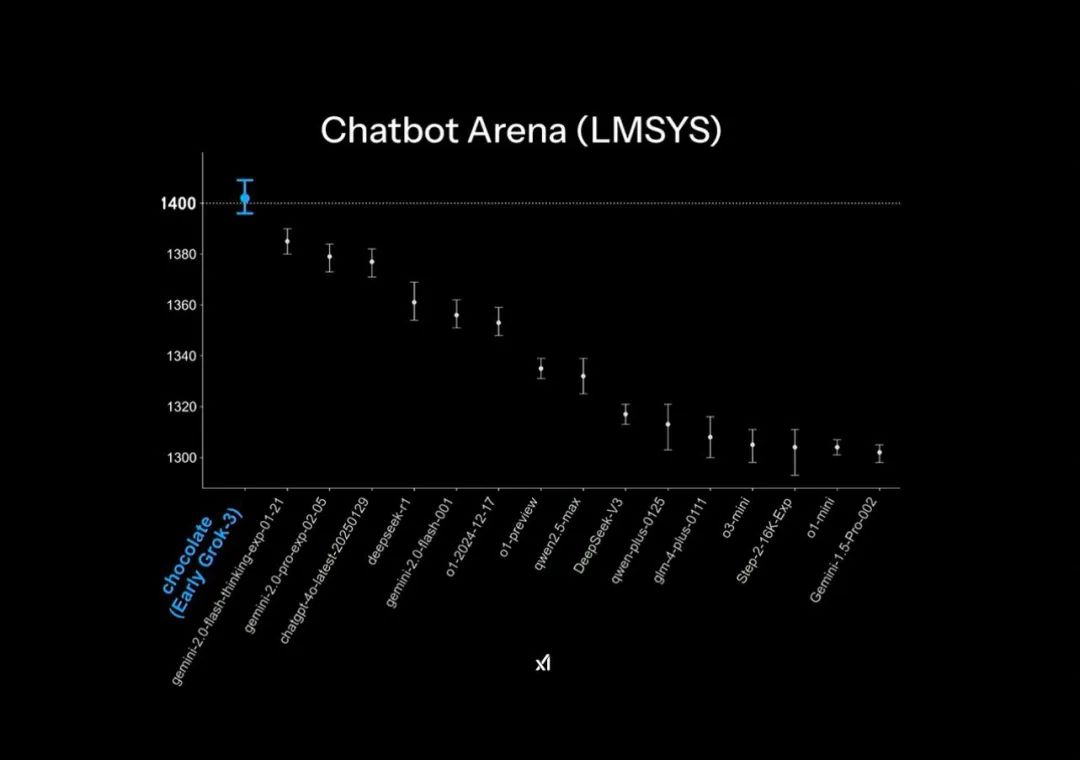

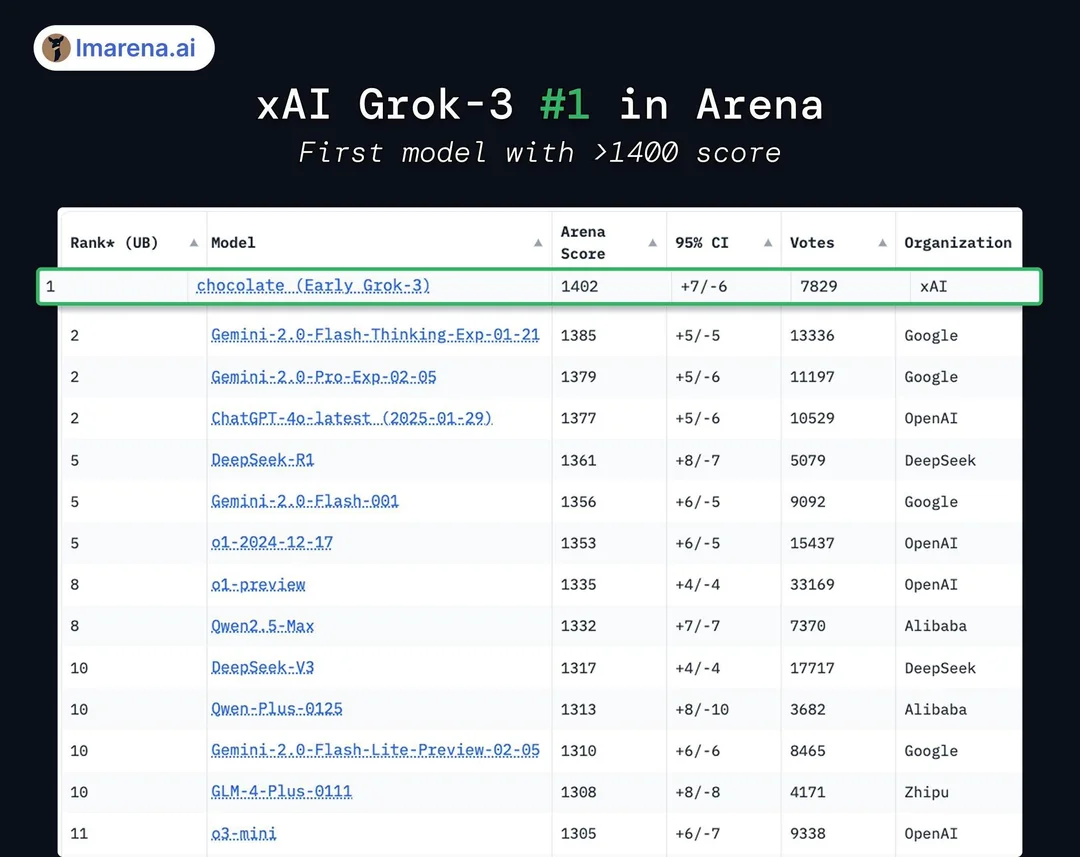

In the official slides shown at the launch, Grok3 appeared to be “far ahead” on Chatbot Arena, a leaderboard for large models, but the chart relied on a small visual trick: its vertical axis covered only the range from 1300 to 1400. Truncating the axis this way made what was originally a gap of about 1% in scores look dramatic on the slide.

The “far ahead” effect in the official slides | Photo source: X
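The distortion from a truncated axis is easy to quantify. The sketch below uses hypothetical inputs: 1402 for the leader (the Grok3 score cited later in this article) and 1385 for a runner-up, which is an assumed value chosen only for illustration. It compares how much taller the leader’s bar looks when the axis starts at zero versus at 1300.

```python
def apparent_ratio(leader: float, runner_up: float, axis_min: float = 0.0) -> float:
    """Ratio of the two bar heights when the y-axis starts at axis_min."""
    return (leader - axis_min) / (runner_up - axis_min)


leader, runner_up = 1402.0, 1385.0  # hypothetical leaderboard scores

# Relative gap as it would look on an honest, zero-based axis.
true_gap = apparent_ratio(leader, runner_up) - 1.0
# Relative gap as it looks when the axis is cut off at 1300.
visual_gap = apparent_ratio(leader, runner_up, axis_min=1300.0) - 1.0

print(f"Gap on a zero-based axis: {true_gap:.1%}")           # 1.2%
print(f"Gap on an axis starting at 1300: {visual_gap:.1%}")  # 20.0%
```

Under these assumed numbers, a lead of about 1% in score turns into a bar that looks 20% taller, which is the kind of effect the slide produced.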

In terms of the actual scores, Grok3 leads DeepSeek R1 and GPT-4o by less than 1 to 2%, which matches many users’ hands-on impression of “no significant difference.”

Moreover, although Grok3 outscores every publicly tested model, many people are not buying it. In the Grok2 era, xAI was accused of “gaming” this leaderboard, and once the leaderboard began down-weighting answer length and style, Grok2’s score dropped sharply, leading industry insiders to deride it as “high scores, low ability.”

Whether it is gaming the leaderboard or the “tricks” in chart design, both reveal xAI’s and Musk’s obsession with appearing “far ahead” in model capability.

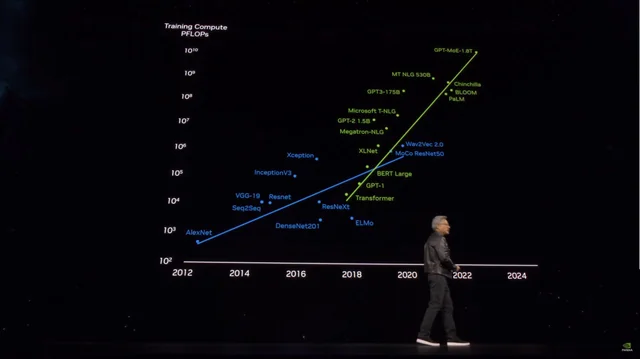

The price Musk paid for these margins was high: at the launch, he said in an almost boastful tone that Grok3 was trained on 200,000 H100s (during the livestream he also cited “more than 100,000” H100s), for a total of 200 million GPU hours. This led some to see the launch as another major boost for the GPU industry, and to dismiss the shock DeepSeek had sent through the industry as “stupid.”

Many people believe that scaling up compute is the future of model training | Photo source: X

In fact, some users compared Grok3 with DeepSeek V3, which was trained for two months on 2,000 H800s, and calculated that Grok3 consumed 263 times as much training compute as V3. Yet on the Chatbot Arena leaderboard, where Grok3 scored 1402, DeepSeek V3 trails it by less than 100 points.

Once these figures circulated, many quickly realized that behind Grok3’s claim to be the world’s strongest model, the logic of “the bigger the model, the stronger the performance” is already showing clear diminishing returns.

Even Grok2, for all its “high scores, low ability,” was backed by a large amount of high-quality first-party data from the X (Twitter) platform. In training Grok3, xAI naturally ran into the same “ceiling” OpenAI now faces, a shortage of high-quality training data, which quickly exposed the diminishing returns in model capability.

The first to recognize all this were surely Musk and the Grok3 development team. That is why Musk has repeatedly stressed on social media that the version users are trying is “just a beta version” and that “the full version will be launched in the next few months.” Musk has even cast himself as Grok3’s product manager, inviting users to report any problems they encounter directly in the comments.

He is probably the product manager with the most fans on the planet | Photo source: X

Yet less than a day after launch, Grok3’s performance has clearly sounded an alarm for latecomers hoping to train a more capable large model through brute force (“with enough force, even a brick can fly”). According to information made public by Microsoft, OpenAI’s GPT-4 has 1.8 trillion parameters, more than 10 times as many as GPT-3, and GPT-4.5 is rumored to be larger still.

As parameter counts soar, so do training costs | Photo source: X

With Grok3 as a precedent, GPT-4.5 and other players who want to keep “burning money” to buy better performance through sheer parameter count now have to work out how to break through a ceiling that is already close at hand.

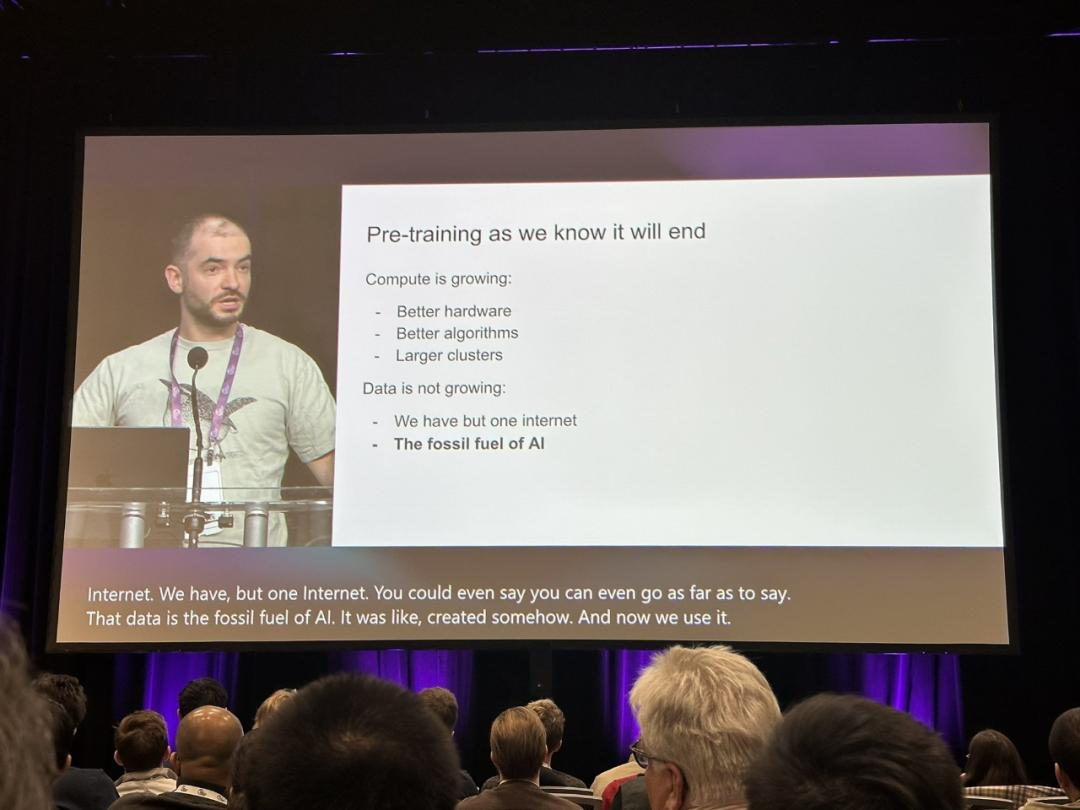

At this moment, many are recalling what Ilya Sutskever, OpenAI’s former chief scientist, said last December, that “pre-training as we know it will end,” and are looking to his remarks for a real way forward for large-model training.

Ilya’s views have sounded the alarm for the industry | Photo source: X

At the time, Ilya predicted that available new data would soon be nearly exhausted, making it difficult for models to keep improving simply by acquiring more of it. He likened the situation to consuming fossil fuels: “just as oil is a finite resource, the human-generated content on the Internet is also finite.”

Sutskever predicted that the generation of models that follows pre-training will have “true autonomy,” along with “brain-like” reasoning capabilities.

Unlike today’s pre-trained models, which rely mainly on matching against content they have already learned, future AI systems will be able to learn step by step and build their own problem-solving methods, in a way similar to how the human brain “thinks.”

A person needs only a few introductory textbooks to gain a basic grasp of a subject, whereas an AI model must learn from millions of examples just to reach an entry-level standard. Rephrase a basic question slightly and the model may fail to understand it, which shows it has not become genuinely more intelligent. The simple questions mentioned at the start of this article, which it still cannot answer correctly, are a clear illustration of this.

Still, beyond its brute-force showing, if Grok3 truly demonstrates to the industry that “the era of pre-training is coming to an end,” it will have offered an important lesson.

Perhaps, once the Grok3 craze fades, we will see more efforts like Fei-Fei Li’s “fine-tuning a high-performance model on a specific dataset for US$50,” and it may be through such explorations that we finally find the real path to AGI.