By | Academic Headlines

Proposing a novel, feasible research direction and knowing clearly how to pursue it are crucial to accelerating scientific discovery.

For human scientists, however, this is a challenge of both breadth and depth: they need not only to keep up with the latest developments in their own field but also to integrate knowledge from unfamiliar fields.

Today, Google launched AI co-scientist, a virtual scientific collaborator that may have a great deal to contribute to "helping humanity pursue scientific progress."

Paper link: storage.googleapis.com/coscientist_paper/ai_coscientist.pdf

According to Google, AI co-scientist is a multi-agent AI system built on Gemini 2.0. It is designed to mirror the reasoning process of the scientific method and to uncover new, original knowledge. Rather than automating the scientific process, it is meant to be a "virtual scientific collaborator": a collaborative tool that helps experts gather research findings and refine their work.

With it, scientists need only state a research goal in natural language (for example, better understanding how a disease-causing microorganism spreads), and AI co-scientist will propose testable hypotheses, along with summaries of relevant published literature and possible experimental approaches.

Google CEO Sundar Pichai said on X that, with the help of AI co-scientist, scientists have already seen "promising early results" in important research areas such as liver fibrosis treatment, antibiotic resistance and drug repurposing.

Empowering human scientists and accelerating scientific discovery

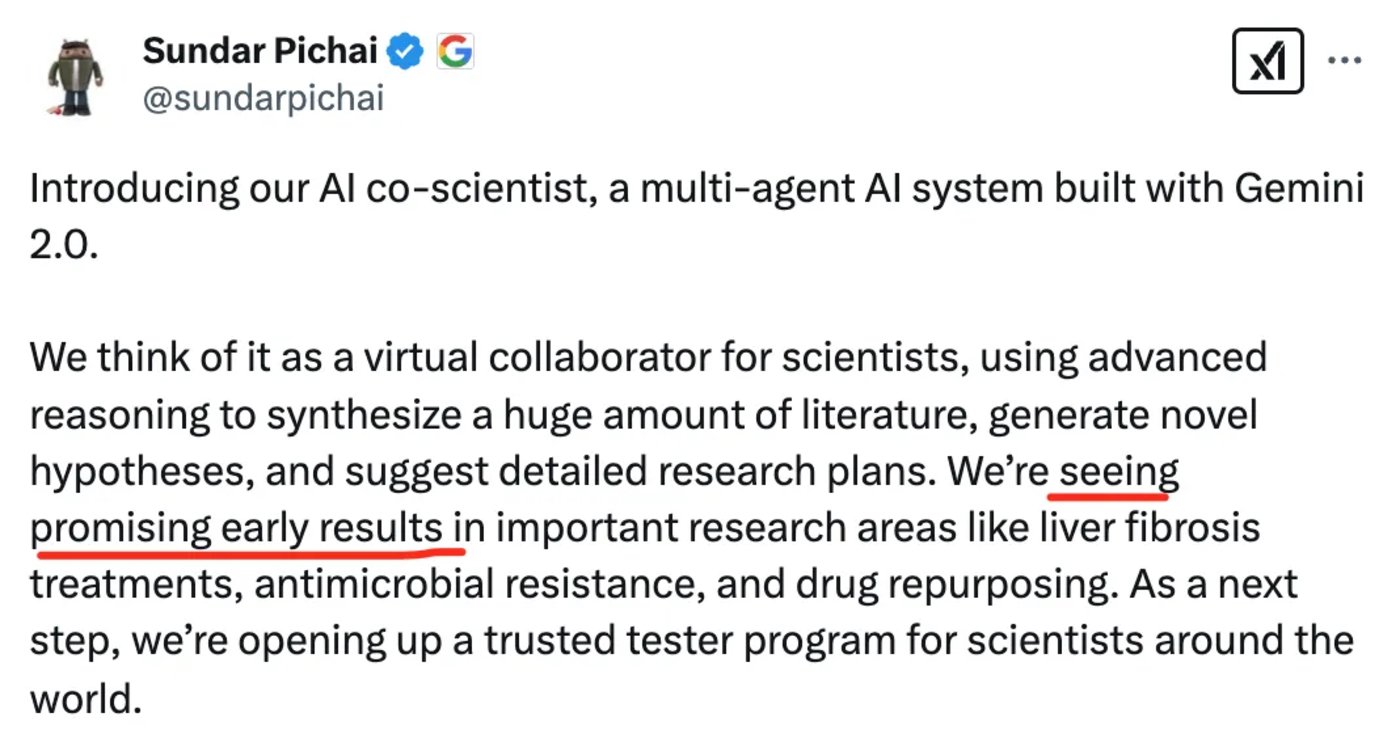

According to Google's official blog, AI co-scientist uses a set of specialized agents (Generation, Reflection, Ranking, Evolution, Proximity and Meta-review) inspired by the scientific method itself. These agents use automated feedback to iteratively generate, evaluate and refine hypotheses, creating a self-improving cycle that yields increasingly high-quality, novel outputs.
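To make the generate, review, rank and refine cycle concrete, here is a toy loop. It is a minimal sketch under stated assumptions, not Google's implementation: the agent functions (`generate`, `reflect`, `evolve`), the numeric `quality` score and the sort-based ranking are all hypothetical stand-ins for what in the real system are Gemini-driven agents and Elo tournaments.

```python
import random
from dataclasses import dataclass


@dataclass
class Hypothesis:
    text: str
    quality: float  # hypothetical toy score in [0, 1]; real hypotheses have no such number


def generate(rng: random.Random, n: int, tag: str) -> list[Hypothesis]:
    """Hypothetical Generation agent: propose n fresh candidates."""
    return [Hypothesis(f"{tag}-{i}", rng.random()) for i in range(n)]


def reflect(h: Hypothesis) -> bool:
    """Hypothetical Reflection agent: reject candidates that fail review."""
    return h.quality > 0.2


def evolve(h: Hypothesis, rng: random.Random) -> Hypothesis:
    """Hypothetical Evolution agent: refine a candidate (never makes it worse in this toy)."""
    return Hypothesis(h.text + "*", min(1.0, h.quality + rng.uniform(0.0, 0.1)))


def co_scientist_loop(rounds: int = 5, pool_size: int = 8, seed: int = 0) -> list[float]:
    """Run the toy cycle and return the best quality in the pool after each round."""
    rng = random.Random(seed)
    pool = [h for h in generate(rng, pool_size, "r0") if reflect(h)]
    history = []
    for r in range(1, rounds + 1):
        # New ideas enter every round, filtered by review.
        pool += [h for h in generate(rng, 2, f"r{r}") if reflect(h)]
        if not pool:
            break
        # Ranking stand-in: sort by quality (the real system ranks via Elo tournaments).
        pool.sort(key=lambda h: h.quality, reverse=True)
        parents = pool[: max(1, len(pool) // 2)]
        children = [evolve(h, rng) for h in parents]
        # Keep the strongest parents and children for the next round.
        pool = sorted(parents + children, key=lambda h: h.quality, reverse=True)[:pool_size]
        history.append(pool[0].quality)
    return history


print(co_scientist_loop())
```

Because the top-ranked parents survive into each new pool, the best score in this toy can only stay level or rise from round to round, a crude analogue of the self-improvement the blog describes.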

AI co-scientist is purpose-built for collaboration. Scientists can interact with the system in several ways, for example by supplying their own seed ideas for it to explore or by giving natural-language feedback on its outputs. AI co-scientist also uses tools such as web search and specialized AI models to improve the grounding and quality of the hypotheses it generates.

Fig.| The components of AI co-scientist and how scientists interact with the system.

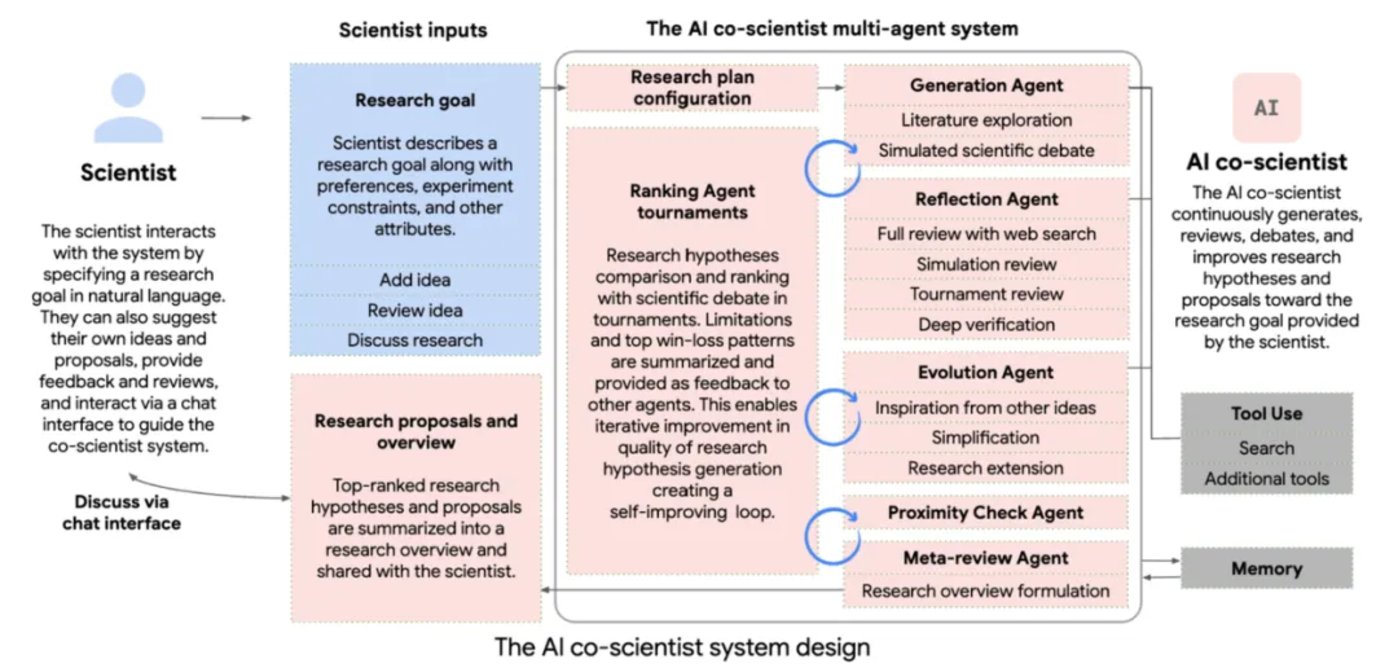

AI co-scientist parses a specified goal into a research plan configuration, which is managed by a Supervisor agent. The Supervisor agent assigns the specialized agents to a worker queue and allocates resources. This design lets AI co-scientist flexibly scale its compute and iteratively improve its scientific reasoning toward the specified research goal.

Fig.| Overview of the AI co-scientist system. Specialized agents (red boxes, each with its own role and logic); scientist input and feedback (blue boxes); system information flow (dark gray arrows); feedback between agents (red arrows within the agent section).
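The Supervisor's job, turning a goal into a plan and then feeding agent tasks into a worker queue within a compute budget, can be sketched as follows. This is an illustrative assumption, not the published design: the `ResearchPlan` class, the per-agent weights, `parse_goal`, `supervisor` and `run_workers` are all hypothetical names, and the workers run sequentially here, whereas a real system would presumably run them concurrently.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ResearchPlan:
    goal: str
    # Hypothetical relative compute shares per specialized agent.
    weights: dict[str, int] = field(default_factory=lambda: {
        "generation": 3, "reflection": 2, "ranking": 2,
        "evolution": 2, "proximity": 1, "meta_review": 1,
    })


def parse_goal(goal: str) -> ResearchPlan:
    """Hypothetical stand-in for turning a natural-language goal into a plan configuration."""
    return ResearchPlan(goal=" ".join(goal.split()))


def supervisor(plan: ResearchPlan, budget: int) -> deque:
    """Enqueue agent tasks in proportion to the plan's weights, within a task budget."""
    total = sum(plan.weights.values())
    quotas = {agent: budget * w // total for agent, w in plan.weights.items()}
    queue: deque = deque()
    # Interleave agents round-robin so no single agent monopolizes the workers.
    while any(quotas.values()):
        for agent in plan.weights:
            if quotas[agent] > 0:
                queue.append((agent, plan.goal))
                quotas[agent] -= 1
    return queue


def run_workers(queue: deque, handlers: dict[str, Callable[[str], str]]) -> list[str]:
    """Drain the queue, dispatching each task to its agent's handler."""
    results = []
    while queue:
        agent, goal = queue.popleft()
        results.append(handlers[agent](goal))
    return results


plan = parse_goal("  explain how a pathogen spreads ")
handlers = {a: (lambda g, a=a: f"{a}: {g}") for a in plan.weights}
print(run_workers(supervisor(plan, budget=22), handlers)[:3])
```

Scaling compute in this sketch simply means raising `budget`: doubling it doubles every agent's quota while keeping their proportions fixed.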

Scaling test-time compute for advanced scientific reasoning

AI co-scientist uses test-time compute scaling to iteratively reason, evolve and improve its outputs. Key reasoning steps include self-play-based scientific debate (to generate novel hypotheses), ranking tournaments (to compare hypotheses) and an evolution process (to improve quality). The system's agentic nature enables recursive self-critique, including using feedback from tools to refine hypotheses and proposals.
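A ranking tournament of this kind can be illustrated with the standard Elo update. The sketch below is an assumption-laden illustration: the round-robin pairing, the starting rating of 1200, the K-factor of 32 and the `judge` callback are choices made for this example, and in AI co-scientist the pairwise verdict would come from an LLM-driven debate rather than a simple function.

```python
import itertools
from typing import Callable


def expected_score(r_a: float, r_b: float) -> float:
    """Standard Elo expected score of player A against player B."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_update(r_a: float, r_b: float, a_won: bool, k: float = 32.0) -> tuple[float, float]:
    """Return updated (r_a, r_b) after one comparison; the update is zero-sum."""
    delta = k * ((1.0 if a_won else 0.0) - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta


def run_tournament(hypotheses: list[str], judge: Callable[[str, str], bool],
                   initial: float = 1200.0, k: float = 32.0) -> list[tuple[str, float]]:
    """Round-robin tournament: every pair is compared once; returns ratings, best first.

    `hypotheses` must be unique, since they are used as dictionary keys.
    """
    ratings = {h: initial for h in hypotheses}
    for a, b in itertools.combinations(hypotheses, 2):
        ratings[a], ratings[b] = elo_update(ratings[a], ratings[b], judge(a, b), k)
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical judge for illustration only: prefers the longer description.
print(run_tournament(["a", "bbb", "cc"], judge=lambda a, b: len(a) > len(b)))
```

Elo suits this setting because it turns many noisy pairwise judgments into a single ranking without needing an absolute quality score for any one hypothesis.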

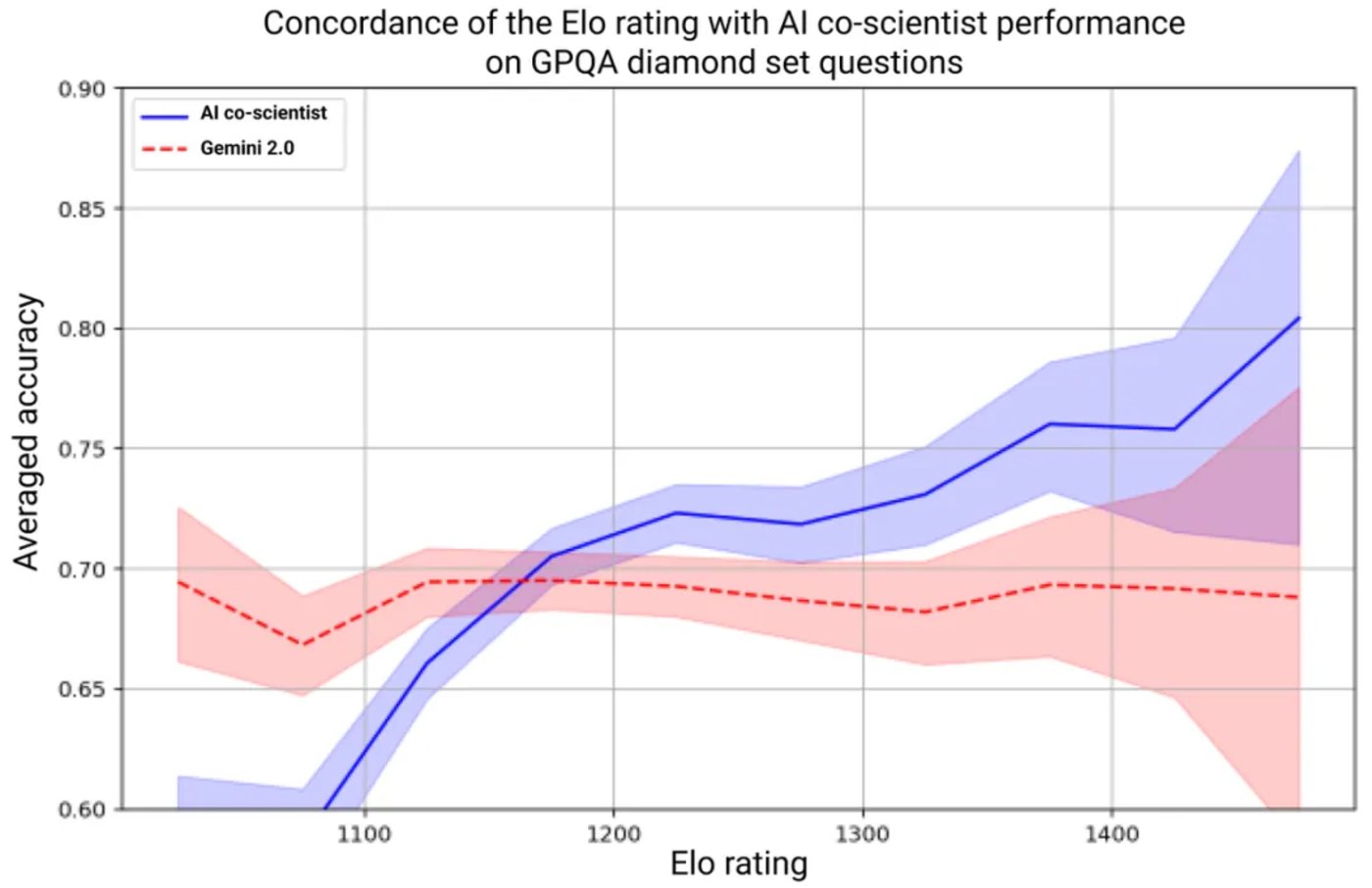

AI co-scientist's self-improvement relies on an automated Elo rating derived from its tournaments. Because Elo ratings play a central role in the system, the Google team checked whether higher Elo ratings correspond to higher output quality by analyzing how well the automated Elo ratings agree with accuracy on the challenging GPQA diamond question set. The results showed that higher Elo ratings were positively correlated with a higher probability of answering correctly.

Fig.| Average accuracy of AI co-scientist (blue line) and Gemini 2.0 (red line) on GPQA diamond questions, grouped by Elo rating. Elo is an automated assessment and is not based on independent ground truth.
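The "grouped by Elo rating" analysis amounts to bucketing answers by the Elo of the response and averaging correctness within each bucket. The sketch below assumes fixed-width buckets of 100 Elo points and uses made-up toy records; neither the bucket width nor the data comes from Google's report.

```python
from collections import defaultdict


def accuracy_by_elo_bucket(records: list[tuple[float, bool]],
                           bucket_width: int = 100) -> dict[int, float]:
    """Map each Elo bucket's lower bound to the fraction of correct answers in it.

    `records` holds (elo, is_correct) pairs; the bucket width is an assumption.
    """
    hits: dict[int, int] = defaultdict(int)
    counts: dict[int, int] = defaultdict(int)
    for elo, correct in records:
        bucket = int(elo // bucket_width) * bucket_width
        counts[bucket] += 1
        hits[bucket] += int(correct)
    return {b: hits[b] / counts[b] for b in sorted(counts)}


# Toy data for illustration only, not results from the paper.
toy = [(1150, False), (1180, False), (1220, True), (1250, False), (1310, True), (1330, True)]
print(accuracy_by_elo_bucket(toy))
```

If the automated rating tracks quality, accuracy should tend to rise from low-Elo buckets to high-Elo ones, which is the pattern the figure reports for the real system.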

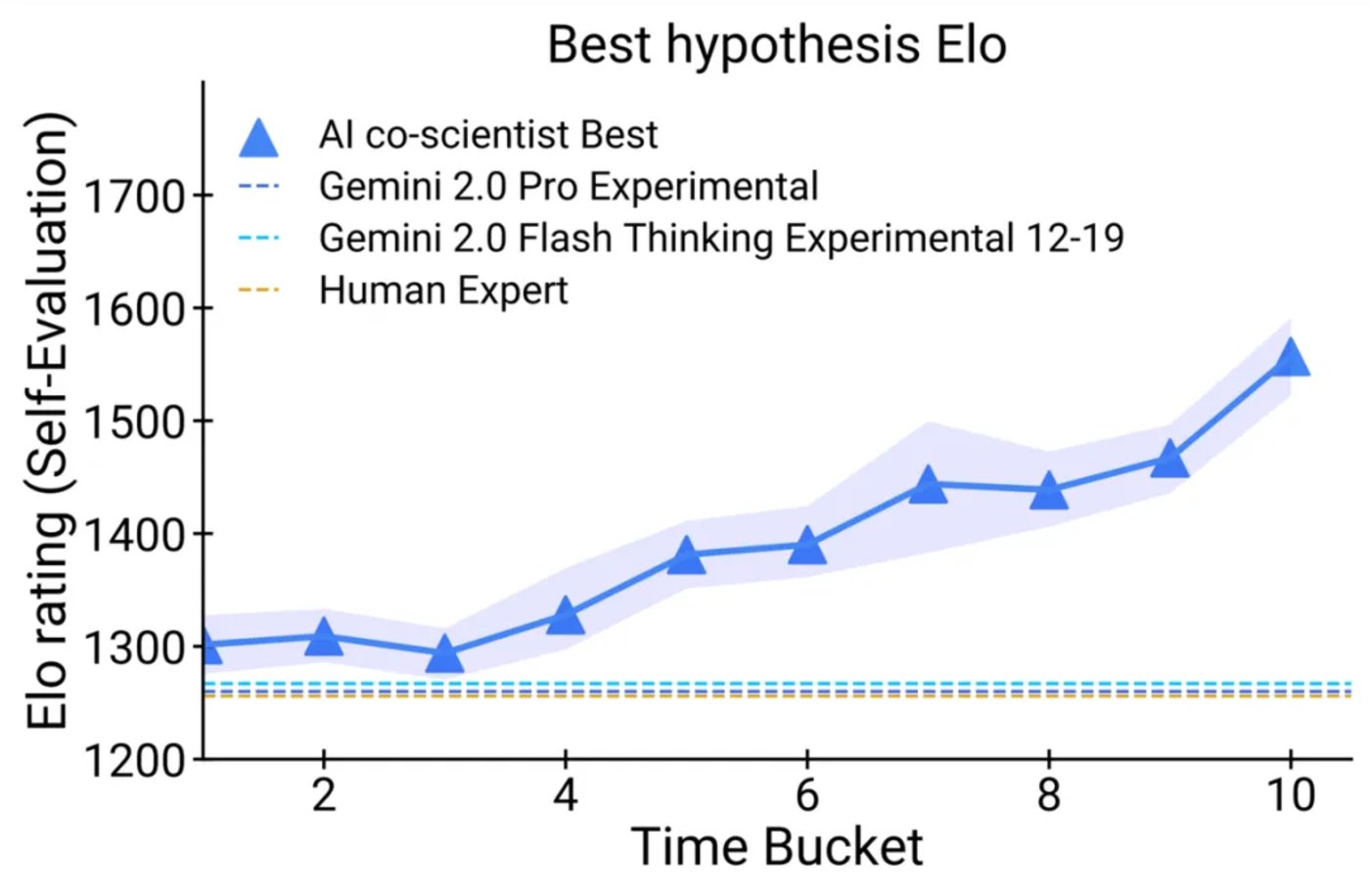

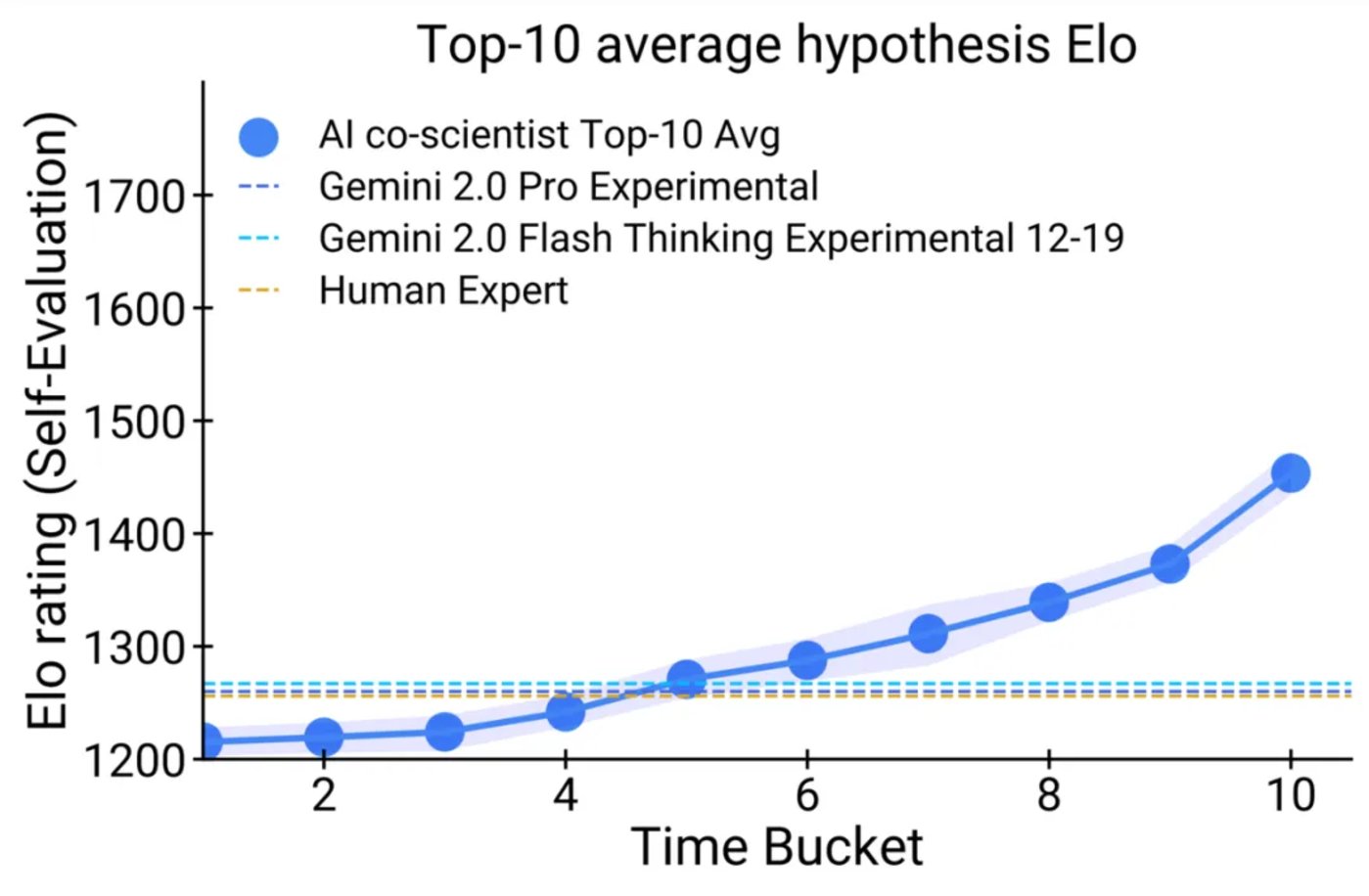

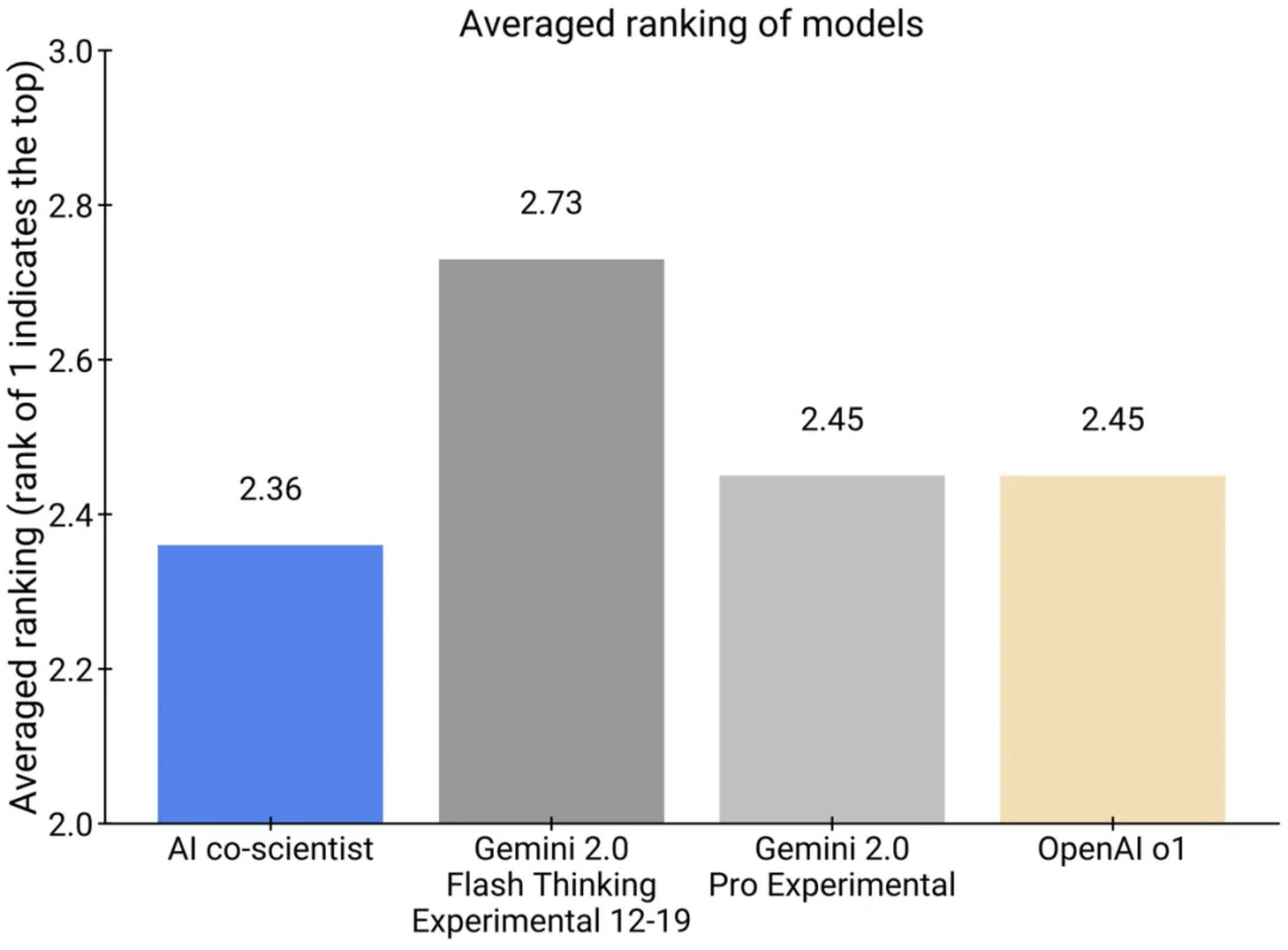

Seven domain experts curated 15 open research goals, along with their best-guess solutions, in their areas of expertise. Using the automated Elo metric, the Google team observed that AI co-scientist outperformed other state-of-the-art agentic and reasoning models on these complex problems, reflecting the benefit of scaling test-time compute with inductive biases drawn from the scientific method. As the system spends more time reasoning and improving, the self-rated quality of its results improves and surpasses that of other models and of unassisted human experts.

Fig.| AI co-scientist's performance improves as the system spends more compute time, as shown by the steady rise of its automated Elo metric relative to other baselines. The top graph shows the Elo of the best-rated hypothesis, and the bottom graph shows the average Elo of the top 10 hypotheses.
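The two curves in the figure correspond to two simple summaries of a ratings snapshot: the single best Elo and the mean Elo of the top 10. The helper names below (`summarize_ratings`, `trajectory`) and the toy snapshots are hypothetical, shown only to make the two metrics precise.

```python
import heapq
from statistics import mean


def summarize_ratings(ratings: dict[str, float], k: int = 10) -> tuple[float, float]:
    """Return (best Elo, mean Elo of the top-k hypotheses) for one non-empty snapshot."""
    top = heapq.nlargest(k, ratings.values())
    return top[0], mean(top)


def trajectory(snapshots: list[dict[str, float]], k: int = 10) -> list[tuple[float, float]]:
    """Summarize a sequence of snapshots taken as compute time increases."""
    return [summarize_ratings(s, k) for s in snapshots]


# Toy snapshots for illustration only.
early = {"h1": 1180.0, "h2": 1210.0, "h3": 1195.0}
late = {"h1": 1230.0, "h2": 1290.0, "h3": 1250.0}
print(trajectory([early, late], k=2))
```

Tracking the top-10 mean alongside the maximum guards against reading too much into a single lucky hypothesis.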

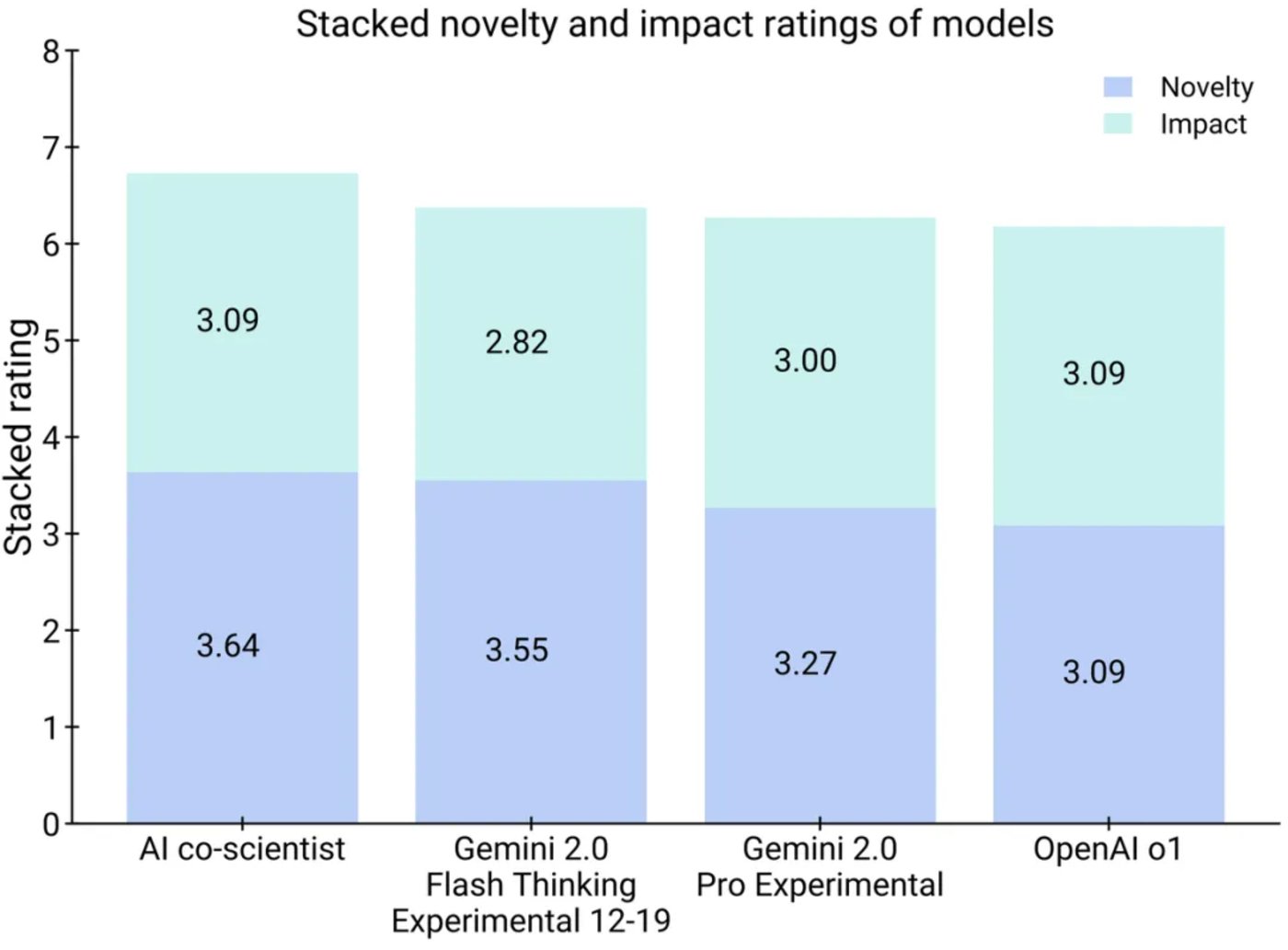

On a smaller subset of 11 research goals, experts assessed the novelty and impact of AI co-scientist's outputs against those of other relevant baselines and also stated their overall preferences. Although the sample size is small, the experts judged AI co-scientist's outputs to have greater potential for novelty and impact. Moreover, these human expert preferences appeared to be consistent with the automated Elo metric introduced earlier.

Fig.| Human experts rated AI co-scientist's outputs as more novel and impactful (top) and preferred them over those of other models (bottom).

How does it perform in the real world?

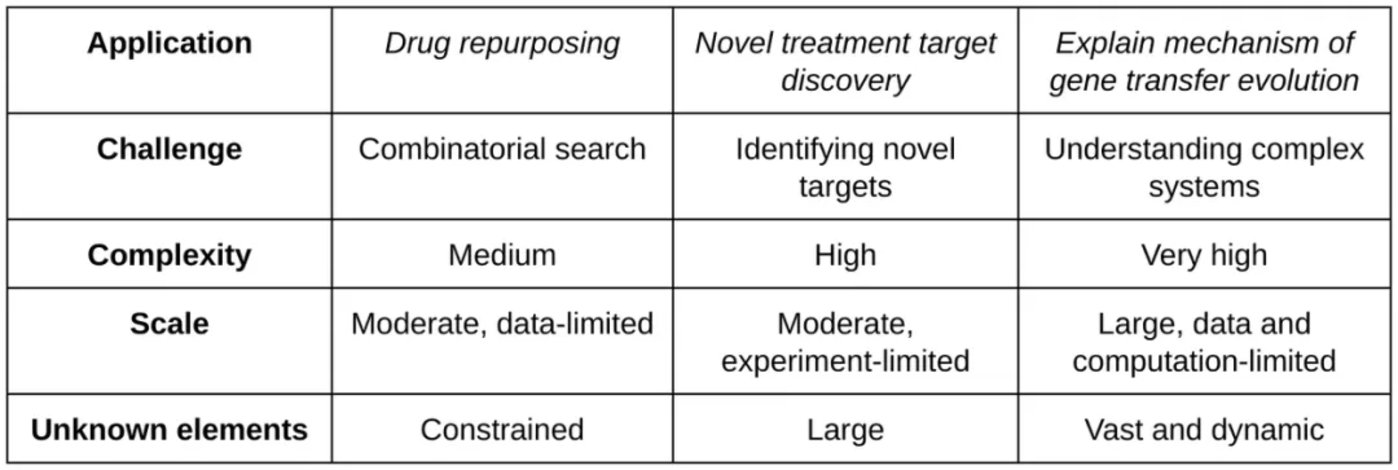

To assess the practical utility of the system's novel predictions, the Google team ran end-to-end laboratory experiments testing hypotheses and research proposals generated by AI co-scientist in three key biomedical applications: drug repurposing, proposing novel treatment targets and elucidating mechanisms of antimicrobial resistance. All of these experiments were carried out under expert guidance:

1. Drug repurposing for acute myeloid leukemia

Drug development is an increasingly time-consuming and expensive process, and each new therapy requires restarting many aspects of discovery and development for every indication or disease. Drug repurposing addresses this challenge by finding new therapeutic uses for existing drugs beyond their original purpose. Because the task is so complex, however, it demands extensive interdisciplinary expertise.

The Google team used AI co-scientist to help predict drug repurposing opportunities and, together with its partners, validated the predictions through computational biology, expert clinician feedback and in vitro experiments.

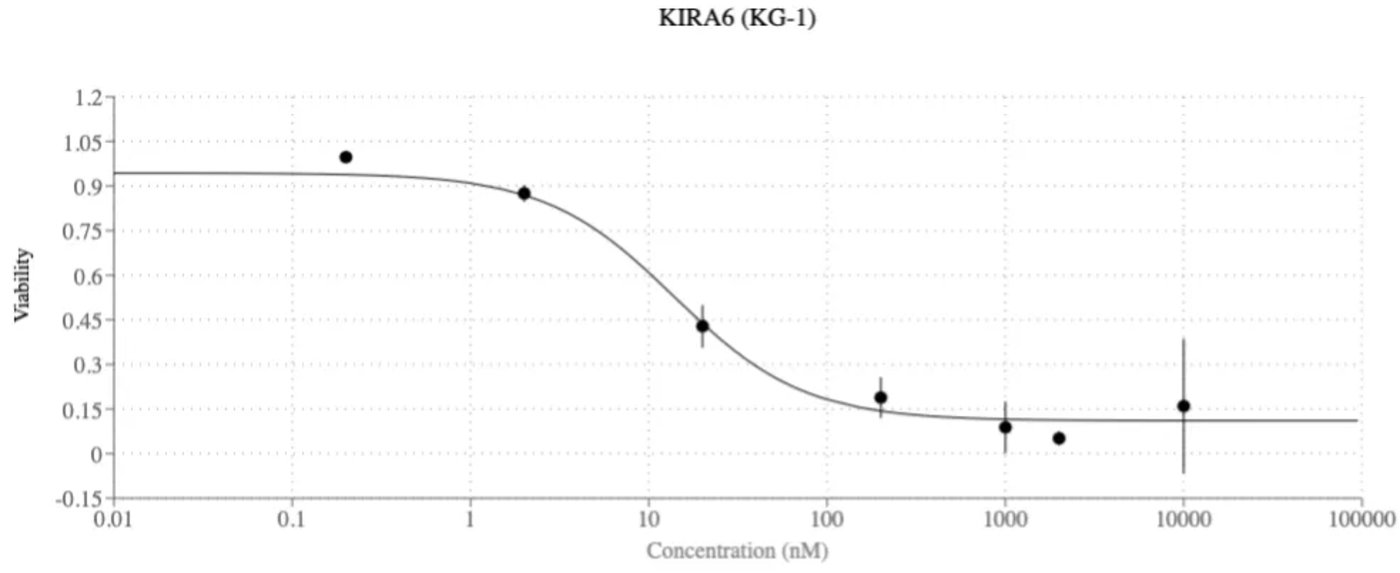

Notably, AI co-scientist proposed novel repurposing candidates for acute myeloid leukemia (AML). Subsequent experiments validated these proposals, confirming that the suggested drugs inhibit tumor cell viability at clinically relevant concentrations in multiple AML cell lines.

Fig.| Dose-response curve for one of the AML repurposing candidates predicted by AI co-scientist. At clinically relevant concentrations, KIRA6 inhibits the viability of KG-1 (an AML cell line). Reducing cancer cell viability at lower drug concentrations has several advantages; for example, it can lower the likelihood of off-target side effects.

2. Advancing target discovery for liver fibrosis

Identifying novel treatment targets is more complex than drug repurposing and often suffers from inefficient hypothesis selection and poor prioritization of in vitro and in vivo experiments. AI-assisted target discovery can help streamline experimental validation, potentially reducing development time and cost.

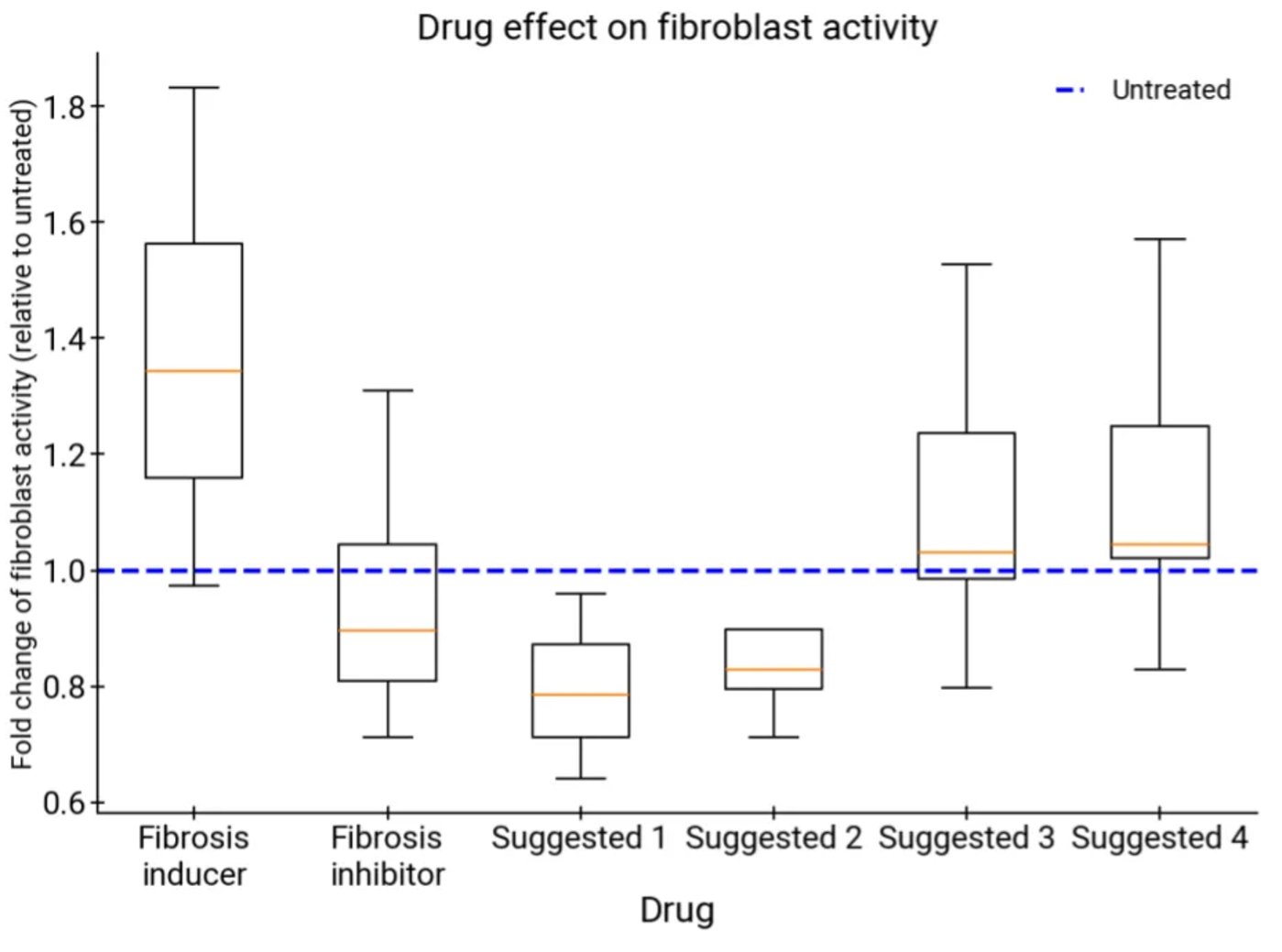

Focusing on liver fibrosis, the Google team tested AI co-scientist's ability to propose, rank and generate target-discovery hypotheses and experimental protocols. AI co-scientist demonstrated its potential by identifying epigenetic targets backed by preclinical evidence of significant anti-fibrotic activity in human hepatic organoids (three-dimensional, multicellular tissue cultures derived from human cells and designed to mimic the structure and function of the human liver).

Fig.| Comparison of liver fibrosis treatments recommended by AI co-scientist with fibrosis inducers (negative controls) and fibrosis inhibitors (positive controls). All treatments recommended by AI co-scientist showed promising activity (p < 0.01 for every recommended drug), including candidates that may reverse the disease phenotype.

3. Explaining mechanisms of antimicrobial resistance

As a third validation, the Google team focused on generating hypotheses to explain the evolutionary mechanisms of bacterial gene transfer linked to antimicrobial resistance (AMR), the mechanisms microbes have evolved to withstand the drugs used to treat infections. This is another complex challenge: it requires understanding the molecular mechanisms of gene transfer (conjugation, transduction and transformation) as well as the ecological and evolutionary pressures that drive the spread of AMR genes.

In this test, expert researchers instructed AI co-scientist to explore a topic on which their own group had already made a novel discovery that had not yet been published: explaining how capsid-forming phage-inducible chromosomal islands (cf-PICIs) are found across multiple bacterial species.

AI co-scientist independently proposed that cf-PICIs interact with diverse phage tails to expand their host range. This finding had already been validated in original laboratory experiments carried out before AI co-scientist was used. The result illustrates AI co-scientist's value as an assistive technology, since it can draw on decades of research, including all prior open-access literature on the topic.

Limitations and prospects

In the technical report, the Google team also discussed several limitations of AI co-scientist and opportunities to improve it, including better literature review, factuality checking, cross-checking with external tools, automated evaluation techniques, and larger-scale evaluations involving more subject-matter experts and more diverse research goals.

They also said that AI co-scientist represents an important step toward AI-assisted technologies that help scientists accelerate discovery. Its ability to generate novel, testable hypotheses across multiple scientific and biomedical fields, and to recursively self-improve as more compute is applied, demonstrates its potential to accelerate scientists' efforts to tackle major scientific and medical challenges.