Article source: Head Technology

Image source: Generated by AI

Large Language Models (LLMs) are changing how software is developed, and whether AI can now replace human programmers at scale has become a hot topic in the industry.

In just two years, AI models have progressed from solving basic computer science problems to competing with top human contestants in international programming competitions. For example, OpenAI reported that a specialized version of o1 reached a gold-medal score at the 2024 International Olympiad in Informatics (IOI) when allowed more submissions than human contestants, demonstrating strong programming potential.

At the same time, the pace of improvement is accelerating. On SWE-Bench Verified, a benchmark of real-world software engineering tasks, GPT-4o scored 33% in August 2024, while the new-generation o3 model more than doubled that to 72%.

To better measure the real-world software engineering capabilities of AI models, OpenAI today open-sourced a new evaluation benchmark, SWE-Lancer, which for the first time links model performance to monetary value.

SWE-Lancer contains more than 1,400 freelance software engineering tasks from the Upwork platform, with real-world payouts totaling about US$1 million. So how much money can AI make by programming?

Features of the new benchmark

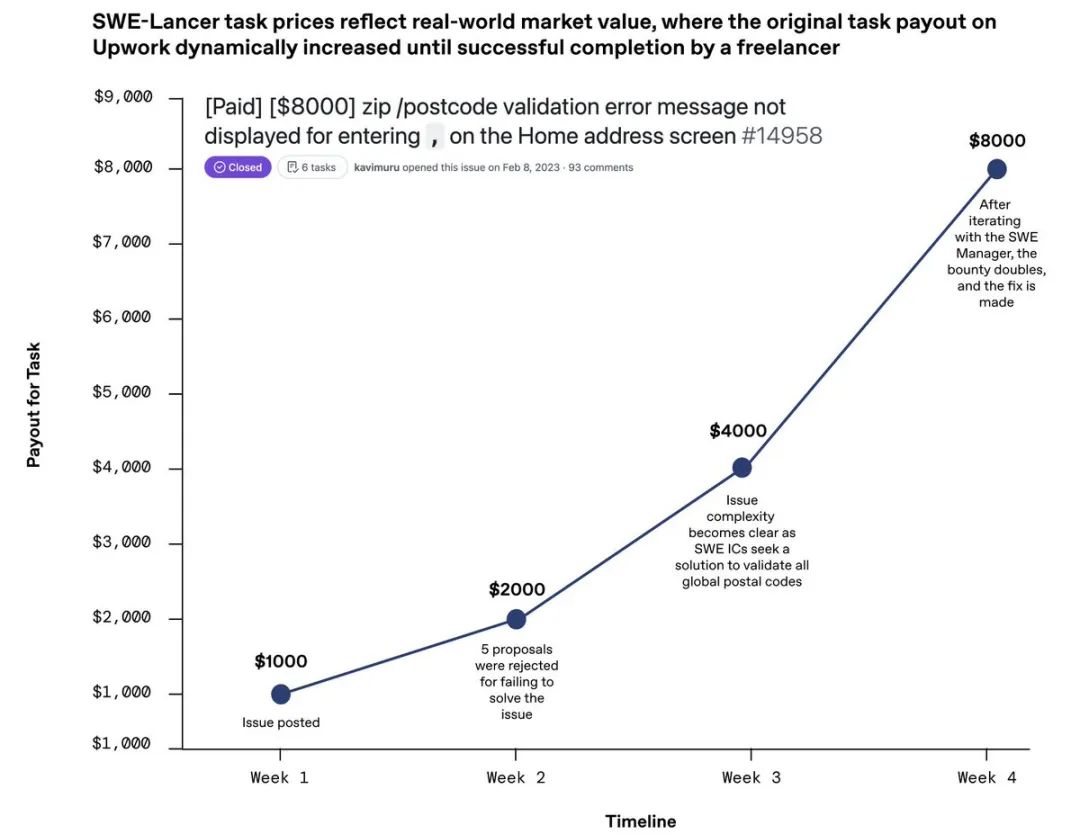

SWE-Lancer task prices reflect true market value: the harder the task, the higher the payout.

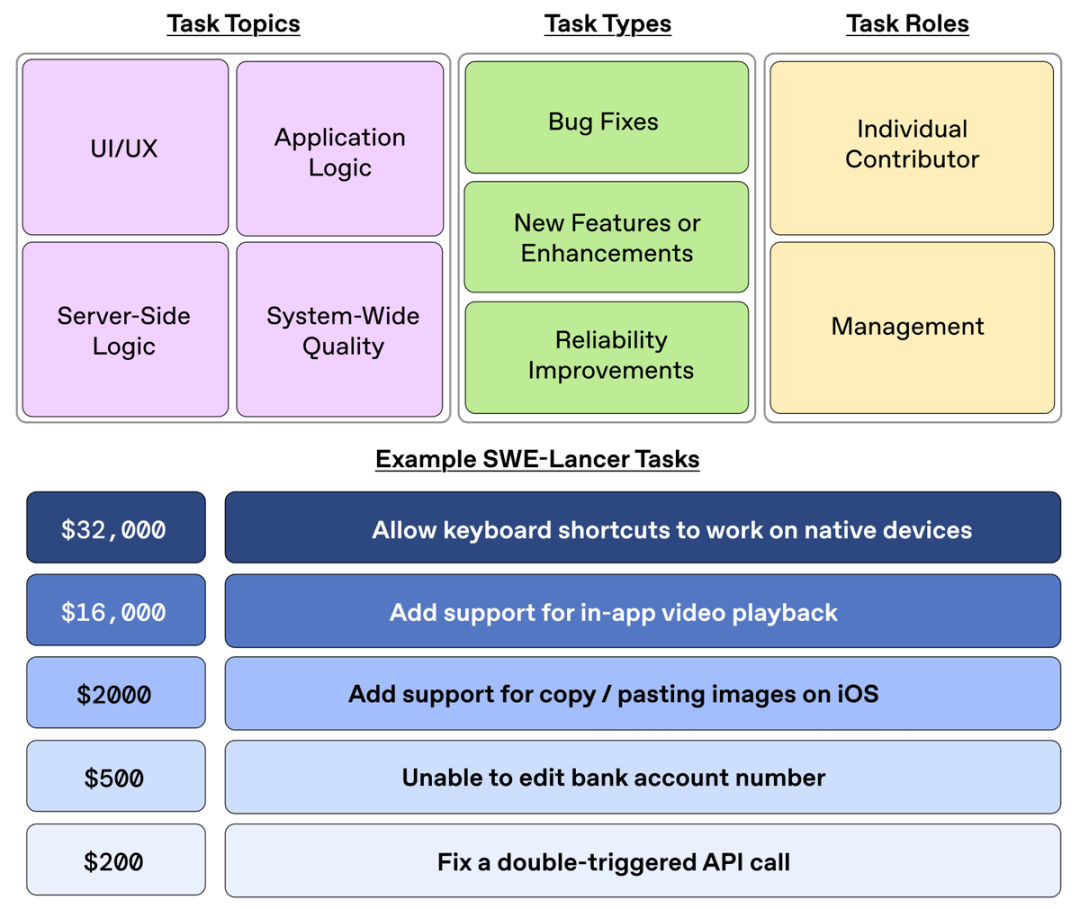

The benchmark includes both individual engineering tasks and management tasks, in which the model must choose between competing technical implementation proposals. It therefore evaluates not only programmers but the entire development team, including architects and managers.
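In OpenAI's description of SWE-Lancer, a management task presents several freelancer proposals for the same issue and asks the model to pick the best one; the pick is graded against the proposal the original engineering manager actually selected. The sketch below illustrates only that grading rule. The `Proposal` and `ManagementTask` types, their field names, and the sample data are hypothetical, not OpenAI's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    # Hypothetical structure: an ID plus a short description of the approach.
    proposal_id: str
    summary: str


@dataclass(frozen=True)
class ManagementTask:
    payout_usd: int
    proposals: tuple[Proposal, ...]
    manager_choice_id: str  # the proposal the original manager selected


def grade(task: ManagementTask, model_choice_id: str) -> int:
    """Return the payout earned: the full payout if the model picked the
    same proposal as the original manager, otherwise nothing."""
    valid_ids = {p.proposal_id for p in task.proposals}
    if model_choice_id not in valid_ids:
        raise ValueError(f"unknown proposal id: {model_choice_id}")
    return task.payout_usd if model_choice_id == task.manager_choice_id else 0


# Hypothetical task with two competing proposals.
task = ManagementTask(
    payout_usd=1_000,
    proposals=(
        Proposal("A", "Patch the symptom in the UI layer"),
        Proposal("B", "Fix the root cause in the permissions check"),
    ),
    manager_choice_id="B",
)
print(grade(task, "B"))  # 1000
print(grade(task, "A"))  # 0
```

The grading is all-or-nothing: a task's payout is either earned in full or not at all, which is what lets results be summed into a dollar figure.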

Compared with previous software engineering benchmarks, SWE-Lancer has several advantages:

1. All 1,488 tasks reflect the real payments employers made to freelance engineers, providing a natural, market-determined difficulty gradient. Payouts range from US$250 to US$32,000, which is quite considerable.

35% of the tasks are worth more than $1,000, and 34% are worth between $500 and $1,000. The Individual Contributor (IC) Software Engineering (SWE) Tasks group contains 764 tasks with a total value of $414,775; the SWE Management Tasks group contains 724 tasks with a total value of $585,225.

2. Large-scale software engineering in the real world requires not only writing specific code but also capable overall technical management. This benchmark uses real-world data to evaluate how well a model can act as a software engineering “technical lead”.

3. Advanced full-stack engineering evaluation. SWE-Lancer reflects real-world software engineering because its tasks come from a platform with millions of real users.

The tasks involve mobile and web development, interaction with APIs, browsers, and external applications, and the verification and reproduction of complex issues.

For example, tasks include a $250 reliability improvement (fixing double-triggered API calls), a $1,000 vulnerability fix (resolving a permissions discrepancy), and a $16,000 feature implementation (adding in-app video playback support on web, iOS, Android, and desktop).

4. Domain diversity. 74% of IC SWE tasks and 76% of SWE management tasks involve application logic, while 17% of IC SWE tasks and 18% of SWE management tasks involve UI/UX development.

In terms of difficulty, the tasks selected for SWE-Lancer are very challenging: tasks in the open-source dataset took an average of 26 days to be resolved on GitHub.

In addition, OpenAI says its data collection was unbiased: it selected a representative sample of tasks from Upwork and hired 100 professional software engineers to write and verify end-to-end tests for every task.
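The subset figures given in the first advantage above can be checked with a few lines of arithmetic. This sketch only re-adds the numbers reported in this article; the per-task averages it prints are derived here and were not reported by OpenAI.

```python
# Subset figures as quoted in this article: (task count, total payout in USD).
SUBSETS = {
    "IC SWE": (764, 414_775),
    "SWE Management": (724, 585_225),
}

total_tasks = sum(count for count, _ in SUBSETS.values())
total_payout = sum(payout for _, payout in SUBSETS.values())

print(f"Total tasks: {total_tasks}")        # Total tasks: 1488
print(f"Total payout: ${total_payout:,}")   # Total payout: $1,000,000

# Average payout per task in each subset (derived, not reported by OpenAI).
for name, (count, payout) in SUBSETS.items():
    print(f"{name}: average ${payout / count:,.2f} per task")
```

The two subsets add up exactly to the 1,488 tasks and US$1 million quoted for the full benchmark, and management tasks carry a higher average payout than IC SWE tasks.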

AI coding earnings face-off

Although many technology leaders keep claiming in public that AI models can replace “junior” engineers, it remains a big question whether companies can fully replace human software engineers with LLMs.

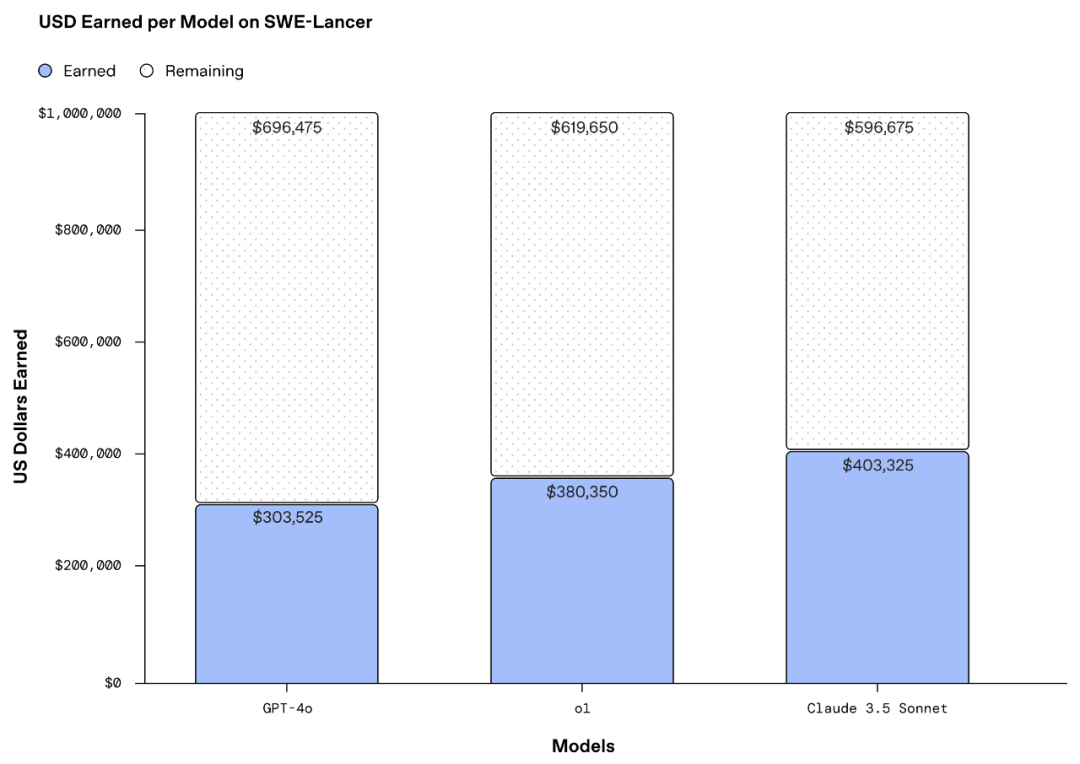

Initial results show that on the full SWE-Lancer dataset, the earnings of the frontier models tested, including gold-medal-level ones, fall far short of the US$1 million in potential total payouts.

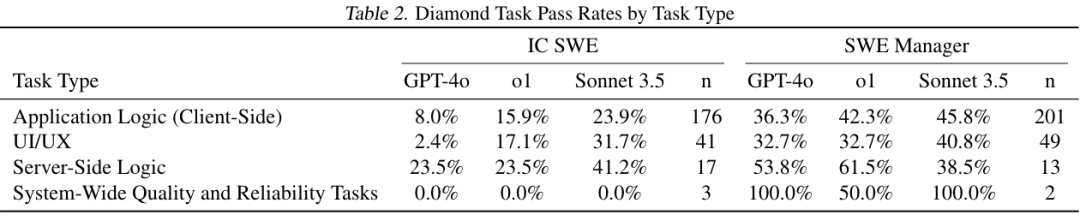

Overall, all models perform better on SWE management tasks than on IC SWE tasks, and IC SWE tasks remain largely unsolved by AI models. The best-performing model tested is Claude 3.5 Sonnet, developed by OpenAI's competitor Anthropic.

On IC SWE tasks, every model's single-attempt pass rate (pass@1) and earn rate are below 30%. On SWE management tasks, the best-performing model, Claude 3.5 Sonnet, scored 45%.

Claude 3.5 Sonnet performed strongly on both IC SWE and SWE management tasks, outperforming the second-best model, o1, by 9.7 percentage points on IC SWE tasks and 3.4 percentage points on SWE management tasks.

Converted into earnings, the top-performing Claude 3.5 Sonnet made more than $400,000 across the full dataset.

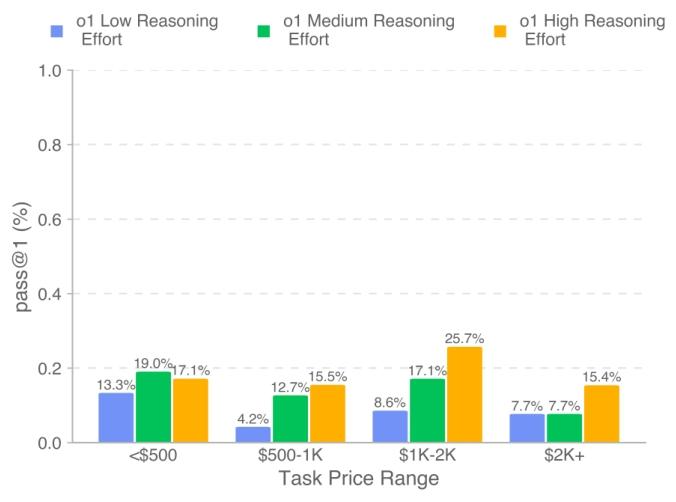

Notably, more reasoning compute substantially helps AI “make money”.

On IC SWE tasks, the researchers ran experiments varying the amount of reasoning compute used by the o1 model. Higher reasoning compute raised the single-attempt pass rate from 9.3% to 16.5%, earnings from US$16,000 to US$29,000, and the earn rate from 6.8% to 12.1%.
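To make the three metrics concrete: the pass rate (pass@1) is the share of tasks solved on a single attempt, earnings are the summed payouts of solved tasks, and the earn rate divides earnings by the total payout available. The sketch below assumes each task is simply graded pass or fail; the `TaskResult` type, the `score` function, and the demo data are hypothetical, not OpenAI's evaluation code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskResult:
    """One task: its real-world payout and whether the model's single
    attempt passed grading (hypothetical structure for illustration)."""
    payout_usd: int
    passed: bool


def score(results: list[TaskResult]) -> dict[str, float]:
    """Compute pass@1, total earnings, and earn rate from single attempts."""
    if not results:
        raise ValueError("need at least one task result")
    solved = [r for r in results if r.passed]
    total_available = sum(r.payout_usd for r in results)
    earned = sum(r.payout_usd for r in solved)
    return {
        "pass_at_1": len(solved) / len(results),  # share of tasks solved
        "earned_usd": earned,                     # payouts of solved tasks
        "earn_rate": earned / total_available,    # share of money earned
    }


# Hypothetical results: four tasks, only the two cheapest solved.
demo = [
    TaskResult(250, True),
    TaskResult(1_000, False),
    TaskResult(16_000, False),
    TaskResult(500, True),
]
print(score(demo))
```

Because payouts rise with difficulty, a model that mostly solves cheaper tasks shows an earn rate below its pass rate. In this toy data the pass rate is 50% while the earn rate is only about 4%, the same pattern as the o1 figures above (a 16.5% pass rate versus a 12.1% earn rate).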

The researchers concluded that although the best model, Claude 3.5 Sonnet, solved 26.2% of IC SWE problems, most of its remaining solutions still contained errors, and considerable improvement is needed before reliable deployment. Claude 3.5 Sonnet is followed by o1 and then GPT-4o, and the single-attempt pass rate on management tasks is typically more than twice that on IC SWE tasks.

This means that even though the idea of AI agents replacing human software engineers is heavily hyped, companies should still think twice. AI models can solve some “junior-level” coding problems, but they cannot replace junior software engineers, because they often fail to understand why a bug exists and go on to introduce further errors as they extend the code.

The current evaluation framework does not yet support multimodal input. The researchers have also not yet evaluated “return on investment”, for example by comparing the payment made to a freelancer with the API cost of having a model complete the same task. This will be a focus of the next round of improvements to the benchmark.

Be an “AI-enhanced” programmer

For now, AI still has a long way to go before it can truly replace human programmers. After all, developing a software project involves far more than generating code on demand.

For example, programmers often face extremely complex, abstract, and vague customer requirements, which demand a deep understanding of technical principles, business logic, and system architecture. When optimizing a complex software architecture, human programmers can weigh factors such as the system's future scalability, maintainability, and performance together, whereas AI may struggle to make such holistic analyses and judgments.

In addition, programming is not just about implementing existing logic; it also requires a great deal of creativity and innovative thinking. Programmers need to conceive new algorithms and design distinctive software interfaces and interaction patterns. Producing truly novel ideas and solutions remains a weakness of AI.

Programmers also need to communicate and collaborate with team members, customers, and other stakeholders. They must understand each party's needs and what is feasible, express their views clearly, and work with others to complete the project. Human programmers can also keep learning and adapting to change, quickly mastering new knowledge and skills and applying them to real projects, whereas an AI model must go through extensive training and testing before it can do the same.

The software development industry is also subject to various legal and regulatory constraints, such as intellectual property rights, data protection, and software licensing. AI may have difficulty fully understanding and complying with these requirements, creating legal risks or liability disputes.

In the long run, AI advances may still replace some programmer positions, but in the short term “AI-enhanced programmers” will be the mainstream, and mastering the latest AI tools is one of the core skills of an excellent programmer.