Jiang Daxin, founder and CEO of Step Star

Amid the DeepSeek craze, China's "six tigers" of large AI models have gradually begun to diverge, turning increasingly toward industry applications and commercialization.

GuShiio.com AGI reported on February 21 that at its first Step UP Ecosystem Open Day, held that day, Step Star, one of the six AI model tigers, announced progress on its open-source models and Agents, along with new open-source reasoning and video reasoning models.

In his speech, Jiang Daxin, founder and CEO of Step Star, said the company is moving steadily toward its goal of AGI and has now entered the Agent stage of development. He pointed out that Agents depend on two key capabilities. The first is multimodality, which lets an Agent fully perceive and understand the world. The second is reasoning, which lets an Agent think slowly through long chains of thought: planning proactively, trying, reflecting, and arriving at accurate answers through continual error correction.

Jiang Daxin also revealed that Step Star will open-source a new image-to-video model in March this year. In addition, Step Star released its upgraded Star Project 2.0, which includes a Step Star Ecological Fund raised jointly with state-owned enterprises such as Shanghai Yidian, Shanghai State-owned Capital Investment Co., Ltd., and Xuhui Capital; the amount was not disclosed. The plan aims to give Agent application developers all-round support, including models, computing power, capital, data, and business incubation. It will also work with ModeSpace to provide office space for high-quality startups and build a cluster of large-model companies in Shanghai.

Step Star was reportedly established in April 2023 by Jiang Daxin, a former global vice president of Microsoft, with AGI as its core goal. Zhang Xiangyu, an AI scientist and one of the authors of ResNet, and Zhu Yibo, an AI systems expert with extensive experience building large-scale clusters and systems, have both joined the company.

In December 2024, Step Star announced the completion of a Series B round totaling hundreds of millions of dollars. Core investors include Shanghai State-owned Capital Investment Co., Ltd. and its funds; strategic and financial investors include Tencent Investment, Wuyuan Capital, and Qiming Venture Capital, among others.

Over the past year, Step Star has released 11 multimodal large models, covering speech recognition, speech generation, multimodal understanding, and image and video generation. In January this year, it released Step R-mini, the first reasoning model in the Step series, further expanding the capability boundaries of its large models.

Step Star has recently stepped up its open-source efforts, successively open-sourcing two models: Step-Video-T2V, a 30-billion-parameter text-to-video model, and a 130-billion-parameter voice model. In addition, on February 18, Step Star and Tsinghua University jointly open-sourced Open-Reasoner-Zero, a large-scale reinforcement learning (RL) reasoning model available in 7B and 32B versions. The 32B version outperforms DeepSeek-R1-Zero-Qwen-32B while needing only 1/30 of the training steps, a 25-fold gain in efficiency.

Explaining the decision to open-source, Jiang Daxin said that, inspired by DeepSeek, developers are eager to see whether equally powerful models can emerge in the multimodal field. Because Step Star has deep accumulated expertise in multimodality and has built strong models there, it chose to open-source its multimodal model technology in the near term.

“Multimodal understanding continues to lead the way, and multimodal reasoning is taking the lead in exploration.” Clearly, as the focus shifts from training to inference, multimodality will become a mainstream requirement.

Jiang Daxin also outlined two key future directions for Step Star: multimodal reasoning and Agent technology. Both serve the company's technical route to AGI, which runs from unimodal to multimodal models, then to unified multimodal understanding and generation, then to a world model, and finally to AGI.

In multimodal reasoning, Step Star is developing a visual reasoning model which, he said, can perform slow thinking in visual space.

“In the stage of simulating the world, the main training paradigm is imitation learning, and the main goal of learning is to represent the various modalities, from sound, text, images, and video up to the 4D space-time of the physical world. To solve complex problems, the human brain activates a second system, the ability to think slowly. At each step, if we find that our initial idea is wrong, we may rethink our approach and keep exploring until we succeed,” Jiang Daxin said.

At the Agent level, Step Star regards intelligent-device Agents as the core breakthrough for bringing large model technology into real use. In Jiang Daxin's view, the two key factors behind Agents, multimodality and slow thinking, both made significant progress in 2024. Step Star is therefore focusing on vertical Agents (for enterprises and developers) and intelligent-device Agents (for cars, mobile phones, embodied intelligence, and IoT), working with Geely Automobile Group, Qianli Technology, OPPO, Intelligent Robot, TCL, and other companies to build innovative consumer-facing experiences in vertical scenarios.

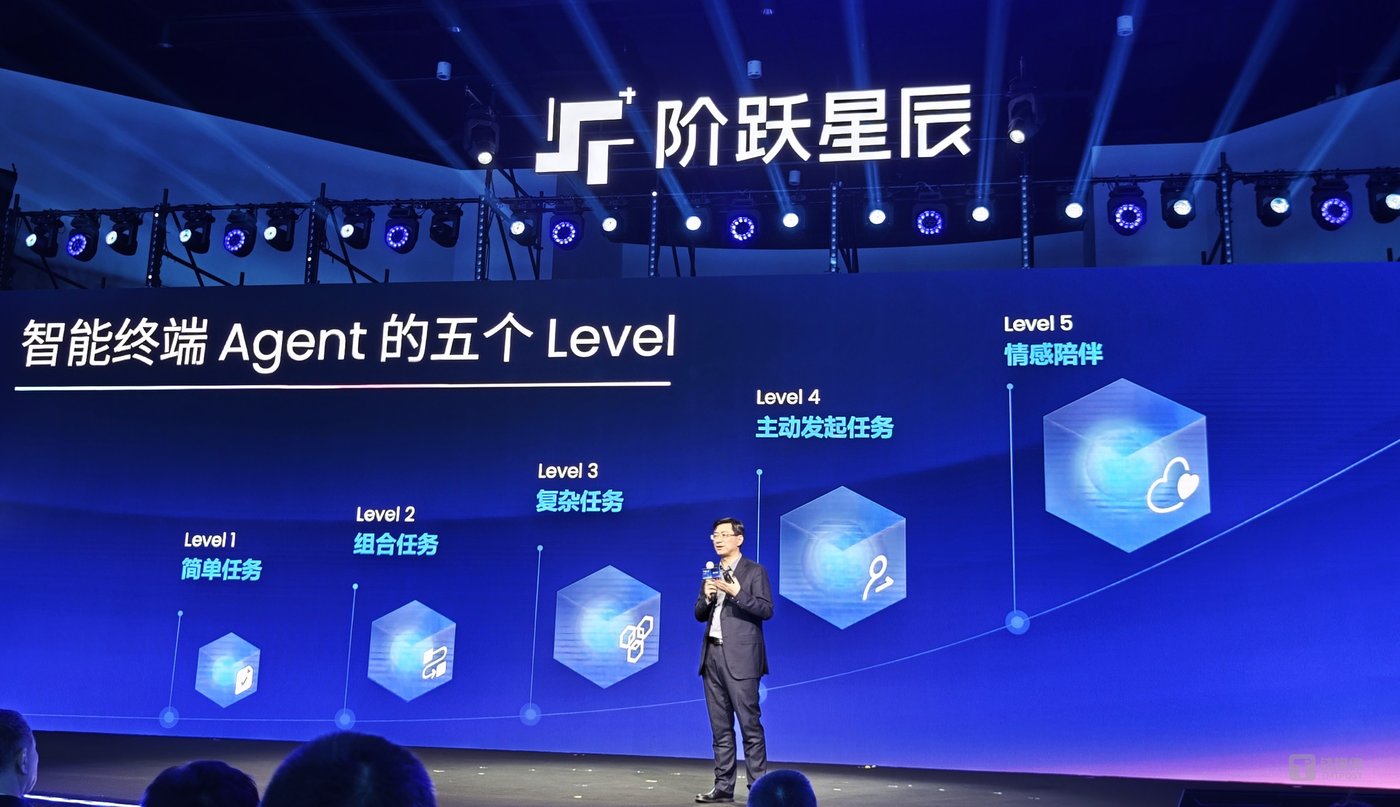

“So-called proactivity means that an Agent can actively observe the environment around the user, or the user's context, and proactively initiate or complete tasks rather than just passively accepting them. Completing complex tasks independently also requires some supporting capabilities,” Jiang Daxin said. He added that as base models keep improving, Agent capabilities will evolve through five stages, with the final stage, Level 5, moving from IQ to EQ.

Also worth noting: Yin Qi, co-founder of Megvii (Kuangshi Technology), one of China's "four AI dragons" (SenseTime, Megvii, Yitu, and CloudWalk), appeared at the Ecosystem Open Day roundtable in his new role as chairman of Qianli Technology, alongside Li Chuanhai, vice president of Geely Automobile Group and president of the Geely Automobile Research Institute.

This was Yin Qi's first public appearance in this capacity.

On February 18, Lifan Technology officially changed its name to Qianli Technology (SH: 601777, formerly Lifan Co., Ltd.). The rebranded company will center its strategy on AI+ vehicles, treat technological innovation as its core competitive strength, pursue new models of industrial development, and comprehensively strengthen its R&D in autonomous driving, smart cockpits, and related fields. Yin Qi is a shareholder and the chairman of Qianli Technology, and Geely Industrial Investment is also a shareholder.

“A day in AI is a year in the human world,” and now progress runs a thousand li a day (a play on the name Qianli, literally “a thousand li”). Yin Qi said the most successful AI products today are still Tesla and Douyin, but large models will open up much larger market space for applications.

GuShiio.com AGI compiled the key points Yin Qi made during his debut:

- Yin Qi believes his identity has not changed: he has always been an AI entrepreneur. He sees AGI and robotics as two very important directions throughout his entrepreneurial career.

- Yin Qi said that for an industry's development, pace may matter even more than direction.

- On intelligent driving and smart cockpits: In my own judgment, the next three years should be a period of convergence, and L3 and L4 should be gradually rolled out. This is the beginning of the intelligent, ecosystem-wide development of the car as a whole, and it may be only the first act. Only once cars can drive themselves will there be a foundation for making them widespread and human-centered. This is one of the main sources of potential for large-scale in-vehicle Agent applications.

- Qianli Technology's core positioning is AI+ vehicles, focusing on AI-native products that combine software and hardware. Building on Geely Automobile's capabilities, it hopes to serve the Geely ecosystem well and make good use of Step Star's models. The industry needs an overall-solution provider that truly integrates technology, products, software, and hardware.

- On DeepSeek: This Spring Festival was very lively. I think all industrial development is continuous. DeepSeek is an excellent open-source model from China, and AI development in China is a continuous process of accumulation and growth.

- In current development, the most important issue is the relationship between Agents and devices. With the arrival of Agents, I have heard the view that devices fall into three categories: “me,” “you,” and “him.” “Me” hardware is represented by mobile phones, with glasses and earphones acting as extensions of the body's organs; this is a large category. “You” hardware includes cars and, in the future, embodied intelligence. “Him” hardware covers other devices, such as Xiaomi's IoT ecosystem.

- Therefore, the two most important pieces of hardware for Agents are mobile phones and cars. Going forward, the entry point and breakout point for combining devices with Agents is that Agents let devices go beyond individual apps and serve users directly. Imagine that as the ecosystem for efficiency- and tool-oriented applications improves, users will become less and less aware of which app they are using. Each device can reach users and help them carry out tasks in the physical world, and then users won't really care who the service provider is. What they need is reliable, high-performance, low-cost service. Hardware will become more important than ever. We once thought mobile phones were very important, but devices will matter even more in the future. Across the industry chain, device makers have not actually captured their full commercial value; in the mobile Internet era, much of that value was shared out. I believe that building closer, strategic relationships with strong device makers, redistributing the new value created around devices, and adapting to the large-model era may be an important topic over the next three years.

- Differentiation now matters. Neither AI nor hardware has yet reached its ceiling on its own, but combining them, like a combination punch, will truly open up the value chain and close the AI loop.

- As Agents gain emotional capabilities, the relationship between people and cars will be reshaped. If, five years from now, the average time people spend in their cars exceeds three hours, the nature of the car may change: it will be not only a means of transportation but a third space. The car will therefore become another “brain” after the mobile phone, combining three roles: driver, space, and robot companion.

At the open day, Geely Automobile Group, Qianli Technology, and Step Star jointly announced that they will further strengthen their existing three-way technology partnership and promote the deep integration of AI+ vehicles.

“I think the connection between chatbots and people is still very shallow, because a chatbot can only learn about a user's situation through the chat itself, whereas a human can be there with the user. Agent products need genuine empathy to provide emotional value,” Jiang Daxin said at the end of his speech.