By Wang Xinxi

Musk’s artificial intelligence startup xAI has released an updated version of the Grok 3 model, which Musk calls the smartest artificial intelligence on earth.

Grok 3 was developed using 200,000 Nvidia chips, with 10 times the computing power of the previous generation.

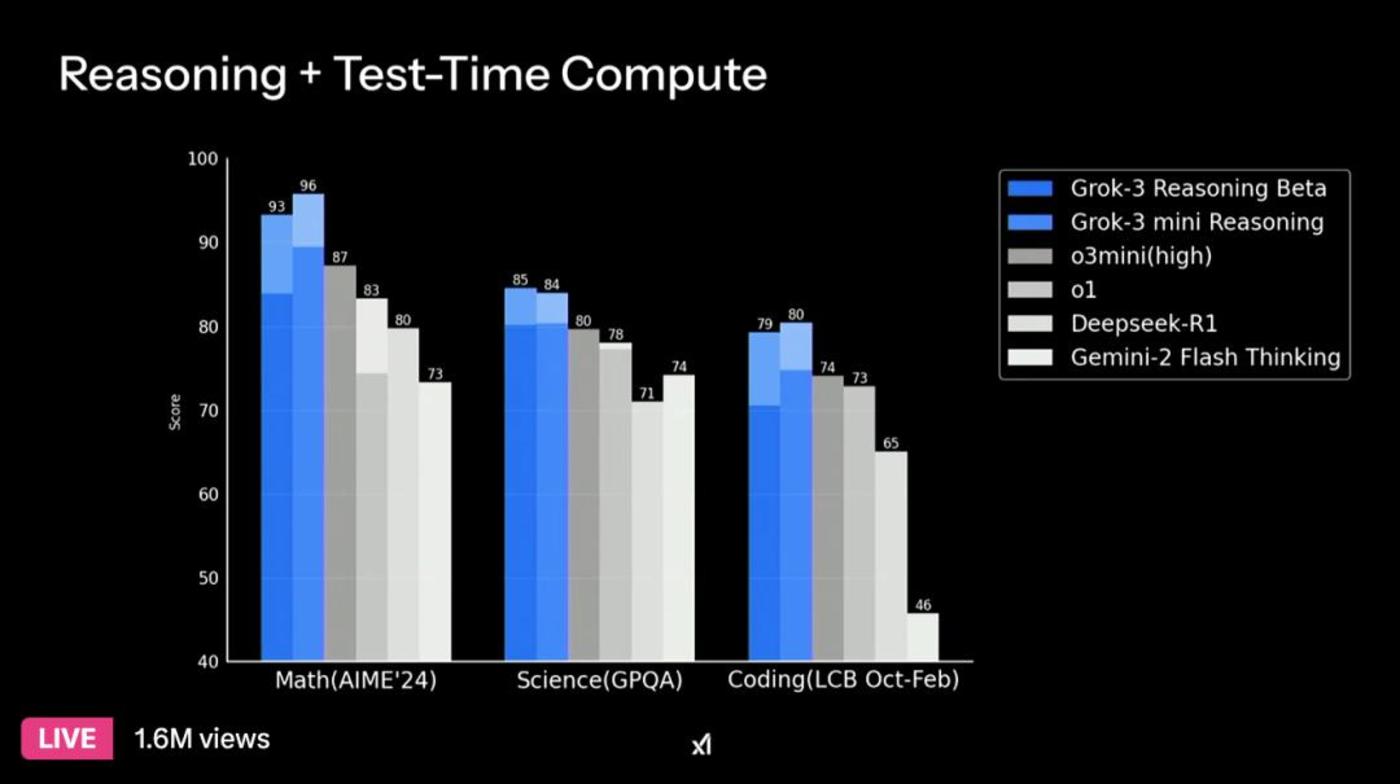

During xAI’s live broadcast of the launch, Musk gave a demonstration with three of the company’s engineers. Grok 3 outperformed Google Gemini, DeepSeek’s V3 model, Anthropic’s Claude and OpenAI’s GPT-4o on mathematics, science and programming benchmarks.

Musk has previously said that Grok 3 was trained on a large amount of synthetic data. It checks the data repeatedly to achieve logical consistency, and when it finds wrong data, it reflects on it and removes it.

Judging from current tests, Grok 3 scores higher than DeepSeek on several benchmarks, including AIME’24 (mathematics), GPQA (scientific knowledge) and LCB Oct-Feb (programming). It has a chain-of-thought reasoning mechanism that breaks complex tasks down step by step, as a human would. Its parameter count reaches 1 trillion.

Grok 3 does perform well on a number of tasks, and it shows real capability on complex logic and reasoning problems. However, Grok 3 used a huge number of GPUs to push up its leaderboard scores, and although it scored high in mathematics and programming, it was in fact not much better and did not open up a gap. DeepSeek’s advantages lie in Chinese-language understanding and multimodal interaction. Its performance has been consistently stable and has been extensively verified in practical applications.

The most critical issue is that Grok 3 is extremely expensive. Its computing capacity is 10 times that of the previous generation, Grok 2, and its training cost alone is said to be US$3 billion. According to the engineers involved, the computing power of xAI’s supercomputing center has doubled.

In April last year, Musk believed that the only way for xAI to successfully build the best AI was to build its own data center. Due to the urgency of launching Grok 3 as soon as possible, it was decided to build the data center within four months. In the end, it took the team 122 days to get the first 100,000 GPUs up and running, but building the ideal AI required doubling the cluster size. The press conference revealed that the team had doubled the computing power of the supercomputing cluster in just 92 days, that is, the number of GPUs had reached 200,000.

How much do 200,000 GPUs cost? Taking the NVIDIA H100 as an example, a single card costs about US$25,000 to US$30,000. At the upper price, 200,000 cards × US$30,000 = US$6 billion. That is only the cost of the GPU hardware. The actual deployment cost is higher, because supporting servers, network equipment, power and cooling facilities also have to be counted. The total may reach the order of US$10 billion, and even if you cut that in half, it is still US$5 billion.
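The arithmetic above can be checked with a short script. This is a back-of-the-envelope sketch only: the GPU count, the H100 price range and the US$10 billion all-in figure are the article’s own estimates, and the variable and function names are illustrative.

```python
# Back-of-the-envelope check of the article's GPU cost estimate.
# All inputs are the article's rough figures, not audited numbers.

GPU_COUNT = 200_000                 # GPUs in the cluster, per the article
PRICE_LOW_USD = 25_000              # low end of the quoted H100 price
PRICE_HIGH_USD = 30_000             # high end of the quoted H100 price
TOTAL_ESTIMATE_USD = 10_000_000_000 # article's order-of-magnitude all-in cost


def hardware_cost(gpu_count: int, unit_price_usd: int) -> int:
    """Return the purchase cost of the GPUs alone, in US dollars."""
    return gpu_count * unit_price_usd


low = hardware_cost(GPU_COUNT, PRICE_LOW_USD)
high = hardware_cost(GPU_COUNT, PRICE_HIGH_USD)
halved_total = TOTAL_ESTIMATE_USD // 2

print(f"GPU hardware only: ${low / 1e9:.1f}B to ${high / 1e9:.1f}B")
print(f"All-in estimate halved: ${halved_total / 1e9:.1f}B")
```

Even at the low end of the price range, the hardware alone comes to US$5 billion, the same figure the article reaches by halving its all-in estimate.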

DeepSeek’s training cost was only about US$6 million, far lower than the US$78 million for GPT-4. DeepSeek’s funding, talent and resources have since increased, and its overall capabilities are certainly no worse than Grok 3’s.

Grok 3 confirms that DeepSeek is really strong

What does this mean? It means two things. First, Musk spent an enormous amount of money, piled up more computing power than OpenAI, and in the end came up with a product similar to OpenAI’s. Second, it proves that DeepSeek is really strong. Musk is burning dollars like firewood in an alchemist’s furnace, and the result depends entirely on hardware. Moreover, judging from the data, there is not much of a gap between Grok 3 and DeepSeek. That DeepSeek’s low-cost route came out almost even only shows how strong DeepSeek is.

These are two completely different routes. One relies on brute force to work miracles, resolutely spending money and piling up computing power. The other relies on innovative engineering design and efficient training methods to save resources and pursue the best possible cost performance.

The game of piling up computing power also turns AI into a game in which the United States dominates the allocation of computing resources. By controlling the export of GPU chips, the United States can precisely regulate global AI productivity. It has divided global access to AI computing power into three tiers and controls the distribution itself, which has plunged other countries into despair.

Moreover, Trump has also announced the US$500 billion Stargate project, with the aim of firmly locking AI leadership in the United States, attracting funds from Japan, South Korea, the Middle East, Europe and elsewhere, and tying every powerful country except China to the United States’ AI chariot.

DeepSeek has broken this dominance and given all other countries hope of developing AI independently. When it matches the performance of OpenAI’s models, it needs only 5% of the other side’s computing power, which directly upends many U.S. technology giants with a low-cost model. Now every country feels it can do the same, because it no longer needs to rely on U.S. high-performance GPU chips, and costs can be greatly reduced.

This is an ability that Trump, who is now looking for money everywhere, particularly values. After DeepSeek appeared, why did Nvidia, AMD and other companies quickly integrate it, and why did Trump, uncharacteristically, praise it? The reason is the same: it saves costs, saves society’s total costs, and saves the entire country money.

Moreover, at this stage of AI model development, it is actually difficult to name an absolute winner or loser on model capability. With the major benchmarks continuing to converge, open source is the decisive move. The well-known investor Zhu Xiaohu once argued that the baseline set by open-source models is the elimination line for closed-source models. In the future, a closed-source model must be two to three times better than the open-source baseline to have a chance of surviving. Otherwise, if you spend ten times the cost and improve performance by only 20%, even investors in Silicon Valley will not accept the result.

As for how well it actually works, only an open-source model can be fully tested. If a model is not open source, charges users, and offers nothing particularly advanced, it will be difficult to build a business model around it.

On top of that, given the great success of ChatGPT and DeepSeek, Grok 1 and Grok 2 were almost ignored. There is little chance that Grok 3 can turn the story around simply by spending money and piling up computing power.

If he does not open-source it, Musk’s huge investment will be hard to monetize, and he may instead use the model in his own robots and FSD. Then again, although Tesla’s FSD subscription rate is currently not high, Grok 3 could still make FSD more competitive.

Because Grok 3 is trained on 100,000 NVIDIA H100 chips, it can process more than 1.5 trillion parameters per second and can analyze data from in-vehicle cameras, radar and other sensors in real time. It is 37% more accurate than competing products at identifying the depth of standing water on roads in heavy rain, which can help autonomous driving systems sense their surroundings more precisely.

In addition, Grok 3 introduces chain-of-thought technology, which simulates the step-by-step reasoning of humans. During navigation, it can combine real-time traffic data, charging station availability and the user’s schedule to recommend a route. In autonomous driving scenarios, it can make more reasonable and safer decisions when faced with complex road conditions and traffic signals.

If Tesla uses it in FSD, competition in smart driving among new energy vehicle companies will become more intense. Chinese car companies must be fully aware of this and prepared for it.

Compared with Grok 3, DeepSeek’s first advantage is the ultra-low cost that comes from innovative engineering design and algorithm optimization. Its second is the ecosystem and technical optimization that the open-source model makes possible, which will allow many researchers, including those in the United States, to build on China’s AI models in the future. China is expected to become the world’s AI development center. With the support of our whole country, hundreds of industries in China are rapidly integrating these models, driving their continuous evolution and turning them into productivity through practice across thousands of sectors. Their future development potential may be beyond what Grok 3 can match, so we will wait and see.