Text | Alphabet List, Author | Bi Andi, Editor | Zhao Jinjie

DeepSeek’s number-one detractor has really pulled off a win.

On February 25, Beijing time, Anthropic received two pieces of good news.

The first is that Anthropic released its first hybrid model, Claude 3.7 Sonnet. While Tencent, Alibaba, xAI and others were following DeepSeek’s lead by launching reasoning models, Anthropic effectively said it wanted both: it combined instant responses with in-depth thinking in a single model and abandoned the practice of stacking multiple models.

The second is that, almost at the same moment Claude 3.7 Sonnet was released, The Wall Street Journal reported that Anthropic was close to completing a new US$3.5 billion funding round at a valuation of up to US$61.5 billion, more than three times its previous valuation of US$18 billion.

Anthropic wears many labels. It is the standard-bearer of the “OpenAI rebels” and one of Silicon Valley’s star AI startups. Over the past month, it has also become DeepSeek’s number-one detractor: not only did it question the reported US$6 million training cost of DeepSeek V3, but one of its co-founders personally wrote a polemic calling for tighter U.S. chip export controls.

With the new model out and its funding round reportedly set to be oversubscribed, Anthropic has, for now, withstood the pressure from DeepSeek.

This is not good news for Anthropic alone. At a time when Wall Street and the public are questioning Silicon Valley’s high-spending AI strategy because of DeepSeek, Anthropic’s performance shows that the myth has not yet been shattered. For companies such as OpenAI that are also raising funds, it is undoubtedly a positive signal.

01

Spurred by DeepSeek’s R1 reasoning model, OpenAI quickly launched o3-mini, and Musk’s xAI also brought a reasoning mode (Grok Reasoning) when it released Grok 3 last week.

Anthropic chose this moment to make a big move of its own: a hybrid model.

There had been rumors that Claude 4 was coming soon, but what Anthropic actually released this time was Claude 3.7 Sonnet.

Anthropic said Claude 3.7 Sonnet is the first hybrid model on the market and is available immediately.

“Hybrid” here means it is the industry’s first model to integrate fast thinking and slow thinking in a single architecture. By comparison, DeepSeek’s R1 and OpenAI’s o3-mini are both pure reasoning models.

With R1 and o3-mini, the thinking process is mandatory and users can only wait, which delays the answer. Yet some questions do not need long deliberation; in those cases users have to judge this for themselves and switch to a fast-response model.

With a hybrid model like Claude 3.7 Sonnet, however, users do not have to switch models to get either an instant response or in-depth thinking.

“This model integrates all of these capabilities. Our goal is a unified AI that can be used in all kinds of scenarios, which makes things easier for our customers,” said Jared Kaplan, co-founder and chief scientist of Anthropic.

Kaplan likens this to the way the human brain works: some questions call for in-depth thinking, while others call for quick answers. Claude 3.7 Sonnet integrates both capabilities into the same model rather than splitting them into separate ones.

In addition, users can use the draft function to guide the model toward more accurate thinking on complex problems. API users can also finely control how long Claude 3.7 Sonnet thinks by setting a thinking budget, that is, by telling Claude the maximum number of tokens it may spend thinking before it answers.
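To make the thinking-budget idea concrete, here is a minimal sketch of a Messages API request body with extended thinking switched on. It does not make a network call. The model ID `claude-3-7-sonnet-20250219`, the `thinking` field with `type`/`budget_tokens`, and the rules that the budget be at least 1,024 tokens and smaller than `max_tokens` reflect our understanding of Anthropic’s API at launch and should be checked against the current documentation. The helper name `build_request` is hypothetical.

```python
from typing import Optional

# Assumed values based on Anthropic's launch documentation; verify before use.
MODEL_ID = "claude-3-7-sonnet-20250219"
MIN_THINKING_BUDGET = 1024  # assumed minimum for budget_tokens


def build_request(prompt: str, max_tokens: int, thinking_budget: Optional[int]) -> dict:
    """Build a hypothetical request body.

    thinking_budget=None gives a standard fast reply; an integer enables
    extended thinking capped at that many tokens.
    """
    body = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking_budget is not None:
        # Thinking tokens count toward max_tokens, so the budget must be smaller.
        if not MIN_THINKING_BUDGET <= thinking_budget < max_tokens:
            raise ValueError("thinking budget must be >= 1024 and < max_tokens")
        body["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return body


if __name__ == "__main__":
    fast = build_request("What is 2 + 2?", max_tokens=1024, thinking_budget=None)
    deep = build_request("Prove that sqrt(2) is irrational.", max_tokens=16000, thinking_budget=8000)
    print(fast)
    print(deep["thinking"])
```

The same model serves both requests. Only the optional `thinking` field changes, which is the practical meaning of "hybrid" here. With the official `anthropic` Python SDK, a dict like `deep` would typically be passed as keyword arguments to `client.messages.create(...)`.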

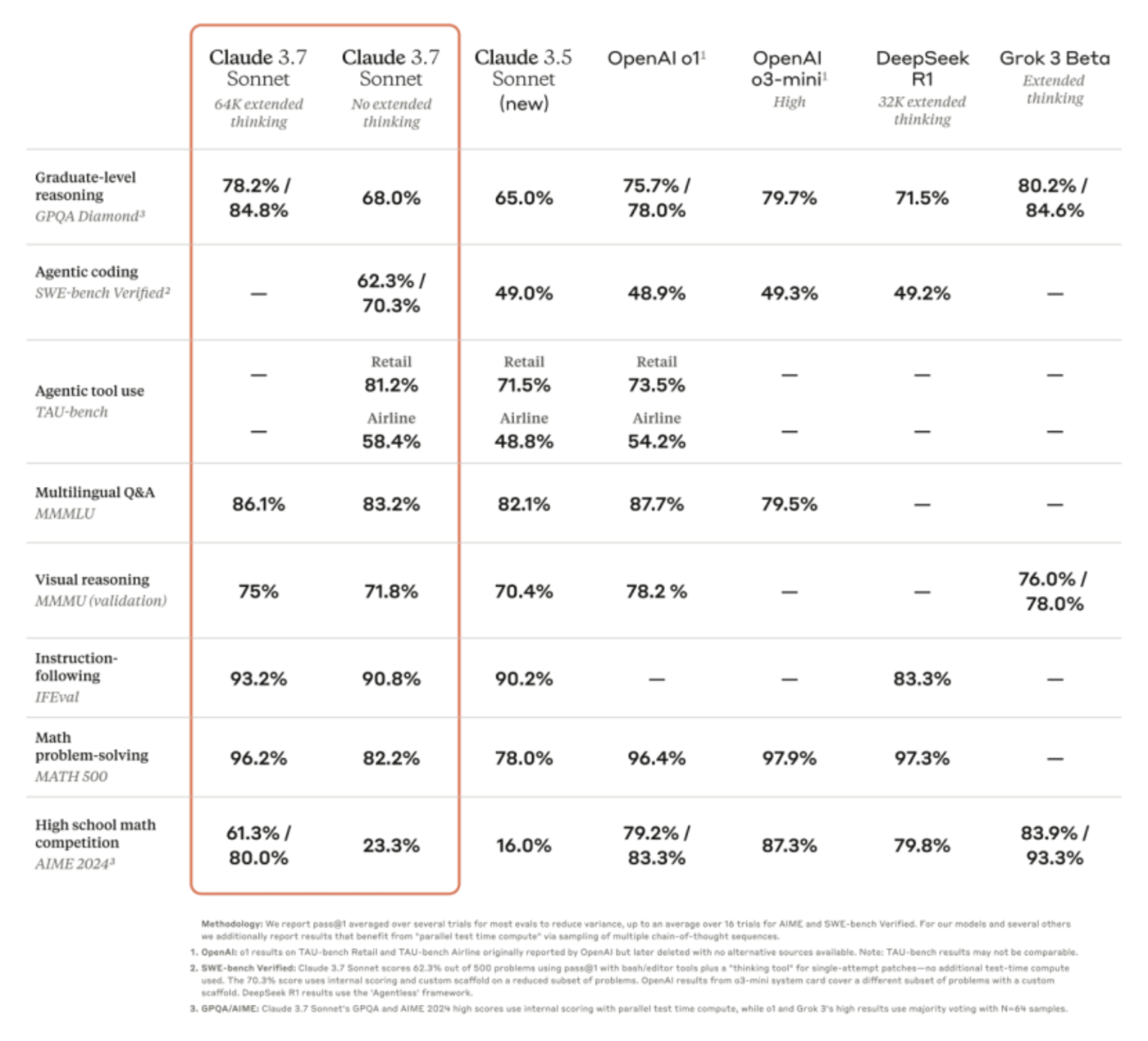

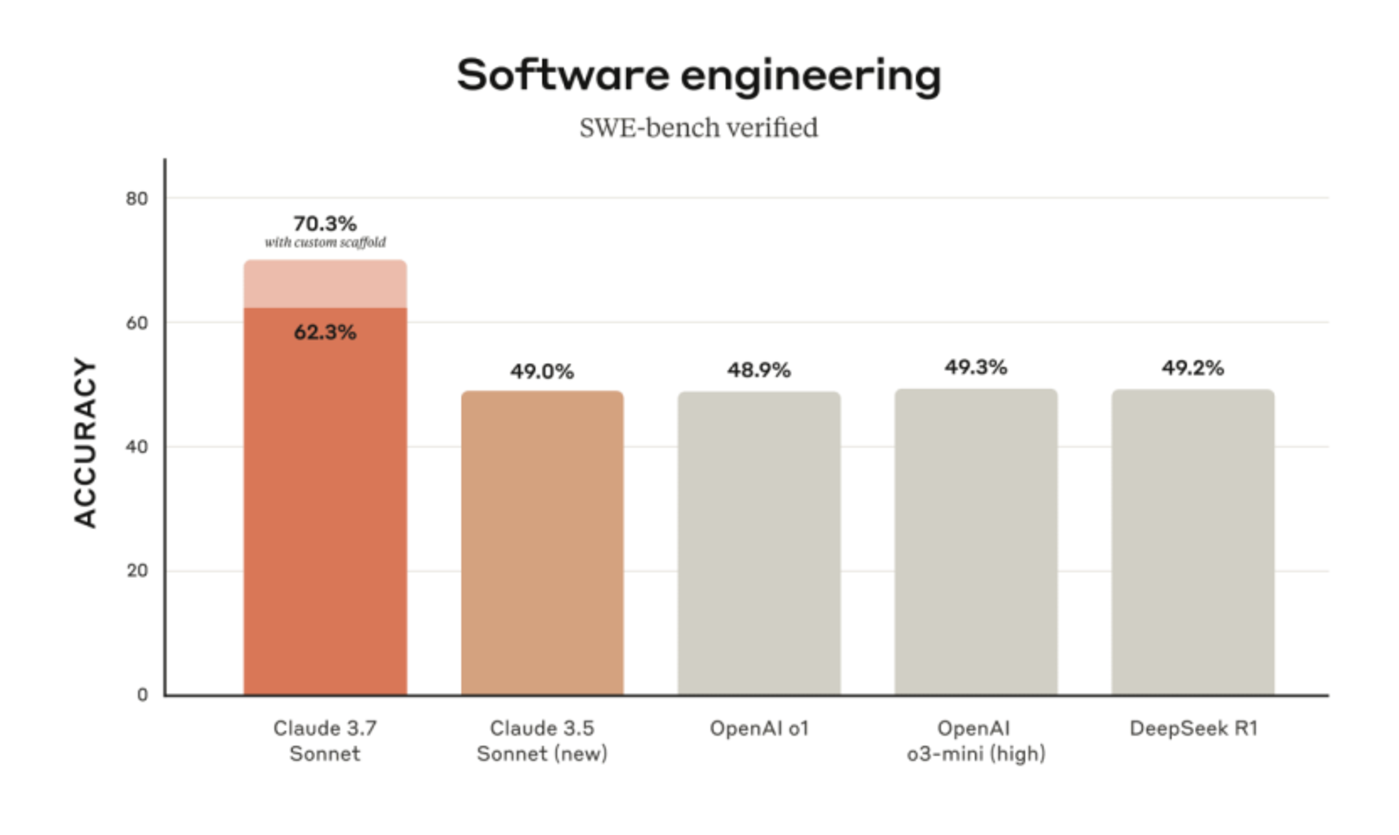

In terms of performance, Anthropic says that compared with the previous-generation Claude 3.5 Sonnet, “Claude 3.7 Sonnet performs well in instruction following, general reasoning, multimodal capabilities and agentic coding, and extended thinking provides significant improvements in mathematics and science.” Notably, its math and coding capabilities have improved by 10%, and its coding ability in particular stands out, as multiple tests have shown.

According to SWE-bench results, Claude 3.7 Sonnet’s coding capabilities significantly exceed those of DeepSeek R1 and OpenAI’s o1 and o3-mini. Cursor, the AI programming tool, has announced an integration with Claude 3.7 Sonnet.

Claude 3.7 Sonnet is now fully available on the Free, Pro, Team and Enterprise plans. It can also be used through the Anthropic API, Amazon Bedrock and Google Cloud’s Vertex AI. However, free users currently cannot use extended thinking mode.

In terms of pricing, Claude 3.7 Sonnet costs US$3 per million input tokens and US$15 per million output tokens. This is the same as its predecessors and significantly higher than competing pure reasoning models such as OpenAI o3-mini (US$1.10 input / US$4.40 output per million tokens) and DeepSeek R1 (US$0.55 input / US$2.19 output per million tokens).
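The price gap is easier to see per request. The sketch below applies the per-million-token prices quoted above to a hypothetical request with 10,000 input tokens and 2,000 output tokens. The token counts are an assumed example, not measured usage, and the function name `request_cost` is hypothetical.

```python
# Per-million-token prices in USD, as quoted in the article: (input, output).
PRICES = {
    "Claude 3.7 Sonnet": (3.00, 15.00),
    "OpenAI o3-mini": (1.10, 4.40),
    "DeepSeek R1": (0.55, 2.19),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the listed per-million-token prices."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 input tokens, 2,000 output tokens.
    for model in PRICES:
        print(f"{model}: ${request_cost(model, 10_000, 2_000):.4f}")
```

For this mix, the costs come to about US$0.060, US$0.020 and US$0.010 respectively. That makes Claude 3.7 Sonnet roughly three times as expensive as o3-mini and six times as expensive as R1 per request.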

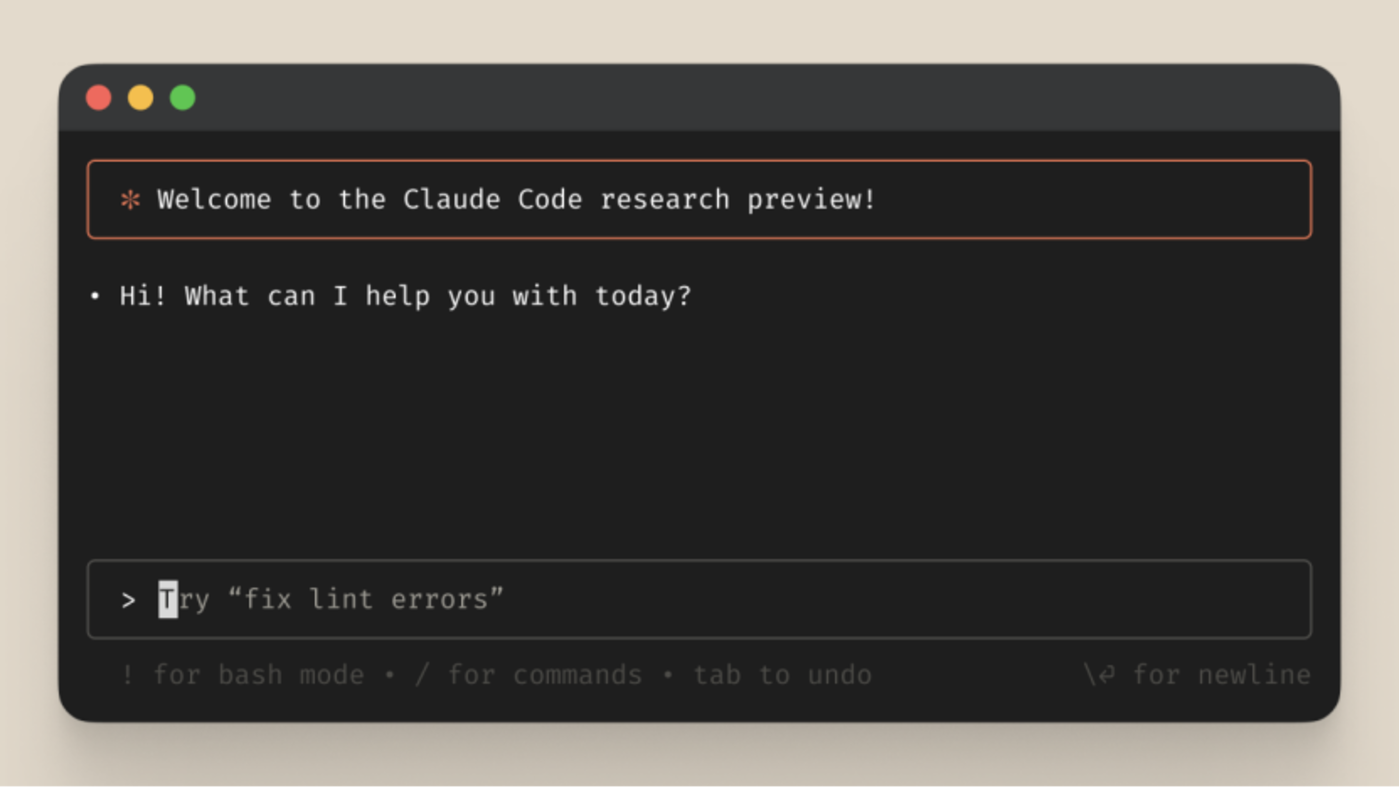

Alongside the model, Anthropic also released Claude Code, a coding-focused agent that runs directly in the terminal to help developers complete programming tasks.

It can handle everything from searching and reading code, editing files, writing code and running tests all the way to submitting code to GitHub. However, Claude Code is currently available only as a limited research preview.

02

Alongside the model release came good news from Anthropic’s ongoing fundraising:

The Wall Street Journal reported: “The company behind Claude has overcome investor concerns caused by the success of China’s DeepSeek and reached a valuation of US$61.5 billion.”

Anthropic is said to be about to complete a $3.5 billion round of financing, with a valuation of $61.5 billion. Investors in the latest round include venture capital firms Lightspeed Venture Partners, General Catalyst and Bessemer Venture Partners. Abu Dhabi-based investment firm MGX is also in talks to participate.

Although this figure is still far from OpenAI’s $157 billion valuation, it exceeds xAI’s $40 billion valuation at the end of last year. xAI is also seeking financing and is expected to be valued at US$75 billion.

Keep in mind that Anthropic was valued at only US$18 billion before this round.

People familiar with the matter told the Wall Street Journal that Anthropic originally planned to raise $2 billion, but successfully increased the amount during negotiations with investors.

It is not surprising that Anthropic is catching up and even seems intent on outdoing everyone. Today’s two pieces of good news arguably reinforce the labels already attached to it.

Since its founding in 2021, Anthropic has been nicknamed the “OpenAI rebels” because it was founded by former OpenAI employees.

Anthropic has stayed one step ahead of OpenAI many times before. With last year’s agent feature, for example, OpenAI followed only after Anthropic.

By releasing a hybrid model, Anthropic is openly rejecting the approach of stacking multiple models. The move also brings to mind the GPT-5 plan recently announced by OpenAI CEO Sam Altman. At the time, Altman said he recognized that OpenAI’s models and product features had become too complicated and would be unified in the future. Unexpectedly, Anthropic beat OpenAI to it again.

Anthropic co-founder Kaplan and chief product officer Mike Krieger both said they expect competitors to move toward this kind of hybrid model soon.

On the other hand, after DeepSeek R1 arrived, Anthropic became DeepSeek’s number-one detractor.

Most Silicon Valley bosses praise DeepSeek through gritted teeth, but they at least stay polite.

Before the release of Grok 3, for example, Musk called DeepSeek R1 strong and praised Chinese engineers. He also said that DeepSeek did not represent a breakthrough for the AI field and talked up his own model.

While calling DeepSeek’s model undoubtedly impressive, Altman implied that DeepSeek may have violated OpenAI’s terms of service by using OpenAI’s proprietary models to train its own. Later, he magnanimously stated that he had no plans to sue DeepSeek.

Anthropic was far less polite. Not only did it doubt that DeepSeek V3 cost only US$6 million to train, but co-founder and CEO Dario Amodei also published a polemic, “On DeepSeek and Export Controls.”

The gist of the essay is this: DeepSeek V3 could not possibly have cost only US$6 million, and there were reports that DeepSeek smuggled chips, so chip export controls must be tightened. Focusing only on high-end chips such as the H100 and H800, as before, is clearly not enough, and the H20 must be restricted as well.

What Anthropic certainly does not want to admit is that, for all its “OpenAI rebel” image, it is just like OpenAI in relying on tech giants to fund its spending. OpenAI had Microsoft first and now SoftBank, while Anthropic has embraced Google and Amazon.

Amazon, in particular, invested US$4 billion in Anthropic in 2023 and committed to investing another US$4 billion in 2024.

DeepSeek’s rise coincided with Anthropic’s new funding round, so Anthropic’s anxiety is understandable, even if its methods were a bit underhanded. With one hand it attacked DeepSeek in a polemic. With the other it released a hybrid model combining instant responses and in-depth thinking, and that finally steadied the situation. Barring surprises, Anthropic will exceed its fundraising target this round.

03

Anthropic’s two pieces of good news also give Silicon Valley some temporary relief.

Anthropic is not the only one raising money.

According to the Wall Street Journal and other media, OpenAI is negotiating a massive round of up to US$40 billion that could push its valuation to US$300 billion. xAI is also raising a new round, seeking US$10 billion at a US$75 billion valuation. All of them have undoubtedly felt the investor concerns brought on by DeepSeek.

Anthropic has at least shown that Silicon Valley’s heavy-investment approach to AI has not yet collapsed, and that the belief that brute force produces miracles can still win people over to some extent. It may, however, take more persuading than before.

But the war is far from over.

On the one hand, Anthropic still faces commercialization problems, which look even more glaring in the post-DeepSeek era. Anthropic’s previous valuation-to-revenue ratio reached 68.6x, compared with about 42.4x for OpenAI.

According to The Information, Anthropic’s revenue is expected to surge from US$2.2 billion in 2025 to US$12 billion in 2027. The challenge lies in spending. Anthropic expects to burn US$3 billion this year, already down from US$5.6 billion the previous year. Company executives said they expect to stop burning cash and reach profitability by 2027.

On the other hand, while its own path to commercialization is difficult, outside competition is also intensifying.

Grok 3 has just launched, and Anthropic has now released its hybrid model. Google has also announced the API release of its Veo 2 video generation model, which it previewed earlier. OpenAI’s GPT-4.5 could arrive at any moment, and GPT-5 is expected at the end of May.

The open-source battlefield is also heating up. Musk’s xAI is continuing its practice of open-sourcing the previous-generation model whenever it releases a new one, and has announced that it will open-source Grok 2. OpenAI, long regarded alongside Anthropic as a standard-bearer of closed source, has also softened its stance. Faced with DeepSeek’s popularity, Altman openly admitted that OpenAI had been on the wrong side of history. He also launched a poll on social media, signaling that an open-source project is on the way.

DeepSeek, for its part, is not sitting still. It has announced an Open Source Week and has already open-sourced the code for FlashMLA, an efficient decoding kernel for Multi-head Latent Attention (MLA), and for its expert-parallel (EP) communication library.

Anthropic has withstood the pressure from DeepSeek, releasing a hybrid model and reportedly raising more than it targeted. But many challenges remain if the good news is to keep coming.