Yin Qi, co-founder of Megvii Technology and chairman of Qianli Technology

As Yin Qi takes on a new role, AI+ cars will become the new strategy of Qianli Technology, the company he now leads.

GuShiio.com AGI reported on March 3 that at the Geely AI Intelligent Technology Conference held that evening, Qianli Technology chairman Yin Qi delivered a speech titled “From Car + AI to AI + Car.”

Yin Qi said that as AI technology and the automobile industry reinforce each other, we are at a historic turning point from car + AI to AI + car. This year marks the first year of AI+ vehicles, and the deep integration of AI and vehicles will shape the industry’s development over the next ten years.

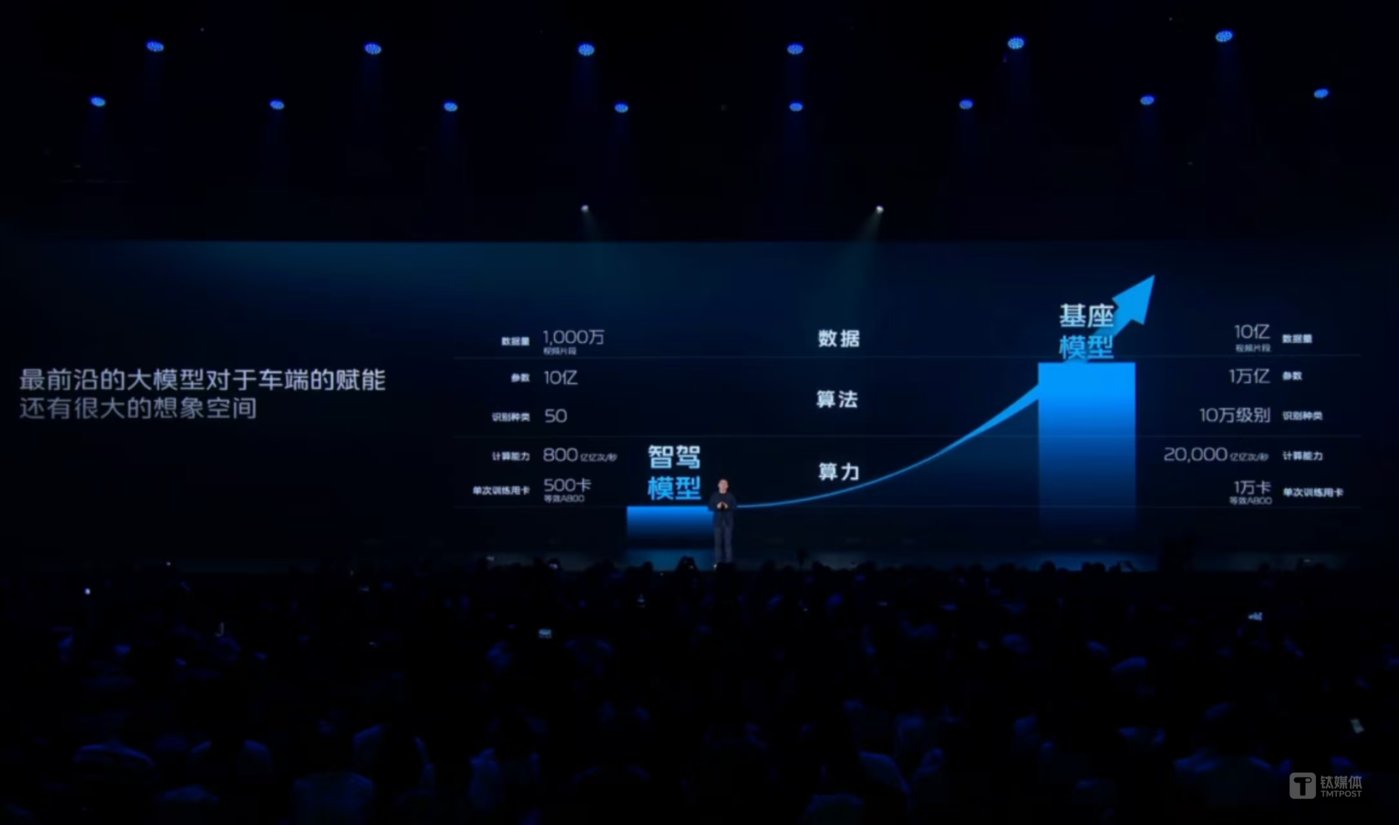

Yin Qi believes there is still a great deal of room for large models to empower vehicles. He noted that a simple comparison between today’s mainstream smart driving models and the most cutting-edge foundation models reveals a gap of two or even three orders of magnitude in computing power, algorithms, and data consumption. True AI models are one to two generations ahead of the intelligent models in today’s cars. This means the field of AI+ vehicles has only just begun and holds enormous potential. In the future, we will see the Scaling Law at work in cars and witness a ChatGPT moment.

In his view, cars will increasingly evolve into robots, and they are also the largest scenario for deploying robots. “In the future, cars will not only drive themselves, but will also be our first true robot carriers with the strongest brains,” Yin Qi said.

This was the first public speech by Yin Qi, co-founder of Megvii, one of China’s “AI Four Dragons,” in his new capacity as chairman of Qianli Technology.

Prior to this, on February 18, Lifan Technology officially changed its name to Qianli Technology (SH: 601777, formerly Lifan Co., Ltd.). The new brand will focus on the core strategy of AI+ vehicles and strengthen its research and development in areas such as autonomous driving and smart cockpits. Yin Qi is a shareholder and the chairman of Qianli Technology, and Geely Industrial Investment is also a shareholder. On February 21, Yin Qi made his first appearance at the StepFun Ecosystem Open Day, joining a roundtable discussion.

At this Geely event, Yin Qi announced that, building on in-depth technical cooperation between Qianli Technology and Geely Automobile Group, a new AI+ smart driving brand will be launched. This year, the industry’s first mass-production-ready L3 smart driving solution will cover Geely models at all price points. Future new Geely Galaxy models will be equipped with Qianli Vast, as will Geely China Star models, bringing high-end smart driving to the mass market.

As a member of Geely’s AI technology ecosystem, Qianli Technology is conducting in-depth cooperation with Geely to develop industry-leading smart driving and smart cabin solutions.

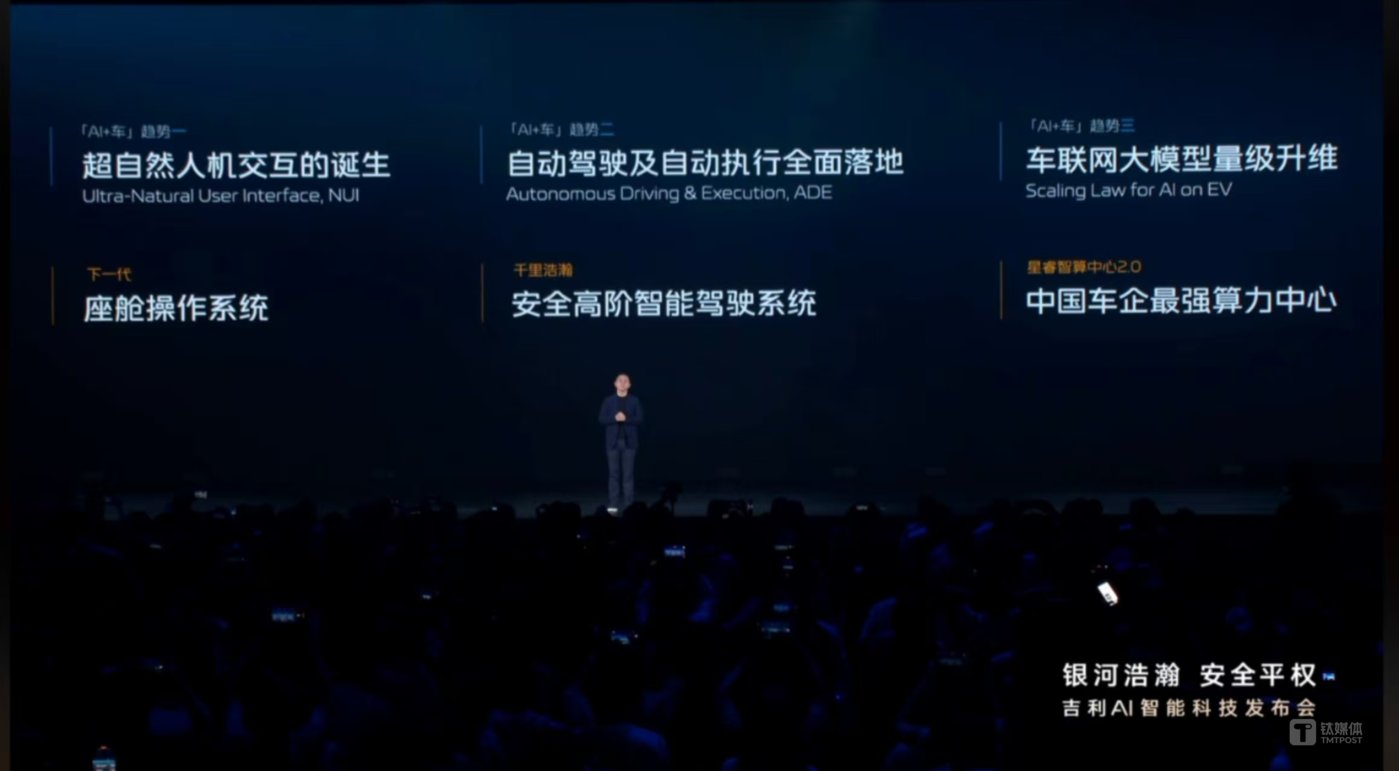

Yin Qi said that Qianli Technology is currently focused on the strategic direction of AI+ vehicles, moving quickly into the smart car field and continuing to expand its business in smart driving and smart cabins. In the future, three cutting-edge technological breakthroughs, namely the birth of supernatural human-computer interaction, the full implementation of autonomous driving and autonomous execution, and an order-of-magnitude upgrade of large models for the Internet of Vehicles, are expected to unlock the imagination of AI+ cars and shape new smart car products and experiences.

Yin Qi emphasized that the AI+ vehicle era has given rise to a new industry proposition: the future needs solution providers that can systematically integrate large model technology, product and scenario definition, and hardware-software co-design capabilities. “I believe that with the deep integration of Geely’s AI technology ecosystem, the era of AI+ cars will accelerate.”

The following is the full text of Yin Qi’s speech:

Hello, everyone, I am Yin Qi.

I am very happy, as a member of Geely’s AI technology ecosystem, to share my thoughts with you at the Geely AI Intelligent Technology Conference. The theme of my talk today is “From Car + AI to AI + Car.”

The two biggest technological revolutions of our era are the new energy revolution and the AI revolution. Looking back over the past twenty years, we find that these two lines have developed side by side, continuously intertwining and deeply integrating. I want to highlight three important historical milestones.

- The first milestone was around 2017, when a new AI architecture represented by the Transformer was introduced. Just a year later, Waymo launched its first commercial robotaxi service, and then Tesla’s FSD Beta went live. Cars and AI had their first remarkable intersection.

- The second milestone was 2021. That year, Geely released its Smart Geely 2025 strategy, building “one network and three systems.” Shortly afterward, OpenAI released InstructGPT, the predecessor of ChatGPT. In 2023, Tesla released FSD V12, achieving a pure-vision, end-to-end smart driving architecture for the first time. The combination of cars and AI underwent a qualitative change.

- The third milestone is 2025. I think everyone had a very AI-filled Spring Festival this year. DeepSeek flooded our feeds, and we were amazed at how much AI has changed our lives. Immediately afterward, Geely and our shared ecosystem partner StepFun jointly open-sourced two multimodal models, which became the world’s leading open-source multimodal models. This year is also the final year of the Smart Geely 2025 strategy, and you will see Geely join forces with its AI ecosystem to release more industry-leading smart driving and smart cabin products. With this series of breakthroughs, we can say that 2025 is no longer just car + AI: it is officially the first year of AI + car.

When we talk about AI, the word that comes to mind most is “model.” Geely’s AI ecosystem actually has a very comprehensive and leading model matrix.

The first type is the language model, which has language and logical reasoning abilities. The most representative one is DeepSeek. Geely’s Xingrui model has deeply integrated DeepSeek’s capabilities to further enhance human-computer interaction and intelligent driving.

The second category, which is closely tied to in-car scenarios, is the multimodal model, including image understanding and generation models, video understanding and generation models, end-to-end speech models, and music models. Geely’s AI ecosystem partner StepFun is a global leader in multimodal large models.

The third category is the pan-world model, which we believe will have an even greater influence on future industries. It includes VLA models proposed for robotics scenarios, as well as world models, which are even more fundamental to the future. We have carried out in-depth cooperation and empowerment on all of these, including the Geely AI Drive model that Forrest Gump just introduced.

From model capabilities to applications, 2025 is also a critical year. According to the five-level AGI framework proposed by OpenAI:

- L1: chatbots, with conversational abilities;
- L2: reasoners, which can solve problems like humans;
- L3: agents, which can take action;
- L4: innovators, which assist in invention and creation;
- L5: organizers, which can do the work of an organization.

AI technology is currently moving from the L2 reasoner stage to the L3 agent stage, and the industry consensus is that 2025 will be the year agent applications explode. We believe this trend will first ignite agent applications in vehicles, turning vehicles from travel tools into intelligent mobile life forms.

Next, I want to share a few small examples to give you a better feel for our multimodal models. First, here is an example of voice interaction that I really like. The young man here is the AI, and the young woman is a real person. It is an interesting interaction between a person and an AI. Let’s listen to it first.

I think this conversation is a good textbook for all clueless straight men: the AI is better at coaxing girls than any of us. Compared with the simple interactions in today’s AI vehicles, large models can give people more emotional value and help us complete richer experiences.

Beyond voice interaction, we will move into what is called multimodal interaction. What I want to show you is the dynamic video interaction on mobile phones that Qianli has achieved within Geely’s AI technology ecosystem.

The phone can recognize scenes in real time, open mini programs, search for Luckin Coffee, place orders and takeout at will, and deliver a new navigation experience. I think the voice and video examples we just showed demonstrate that AI opens up completely different possibilities for human-computer interaction.

And it is not only about input, but also excellent output. All the videos you see here were generated by Step-Video, the model jointly open-sourced by Geely and StepFun. AI allows every ordinary person to become an excellent creator and unleash their imagination.

So, empowered by such powerful multimodal models, I want to propose the first trend of AI+ cars: the birth of supernatural human-computer interaction.

From the GUI of the PC era, to the touch UI of the mobile Internet, to what we now see as a truly ultra-natural UI, this evolution will bring infinite imagination to our cars.

In this field, Geely’s AI ecosystem as a whole is very advanced. Next, Qianli and Geely’s ecosystem alliance will work together to redefine the next-generation cockpit operating system, Agent OS. It has three technical characteristics: supernatural dialogue, Agent OS, and cross-domain collaboration. This Agent OS will be released this year. It will break the rigid functions of the traditional cockpit and bring users a different intelligent experience.

Returning to the big picture of the model matrix, let’s focus on the pan-world model, the part of our large model matrix most relevant to the physical world. I would like to share two of the latest research results from Geely’s AI technology ecosystem.

The first research result is a VLA model for robotic arms. Our team is the first in China to achieve an average success rate of 90% in cross-task testing on RLBench, a benchmark platform for robot learning. Our robotic arms and dexterous hands can already complete thousands of tasks. Importantly, this model uses the same framework as our latest end-to-end smart driving model: both are Vision-Language-Action (VLA) models. Such models will be widely used in vehicles. In the future, cars will not only drive themselves, but will also be our first true robot carriers with the strongest brains.

The second research result is a world-leading world generation model. As smart driving technology matures and converges, we find that competition in smart driving is not only about algorithms and computing power, but also about data. The data that can be accumulated in real scenarios is very limited; for example, data on real vehicle accidents and extreme weather is very rare. If we rely only on data collected from real vehicles, we will likely be unable to meet the needs of rapid, iterative upgrades of smart driving technology. Therefore, we need a world generation model to simulate large amounts of realistic data for future model training and rapid iteration.

Around the world generation model, we have integrated deeply with Geely’s AI Drive model, forming strong scene generation capabilities. This will help us generate large amounts of rare-scenario data for smart driving training, helping our models better understand the rich and complex real world and greatly improving the performance and accuracy of smart driving solutions.
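The data-scarcity argument above can be made concrete with simple arithmetic. The Python sketch below is purely illustrative and is not Qianli’s or Geely’s method: it assumes a hypothetical real-world dataset of N clips in which a rare scenario (such as an accident or extreme weather) makes up a fraction p, and computes how many synthetic rare-scenario clips S a world generation model would need to produce to raise that share to a target q. Solving (pN + S) / (N + S) = q gives S = N(q - p) / (1 - q). The function names and all numbers are hypothetical.

```python
import math


def synthetic_clips_needed(real_clips: int, rare_fraction: float, target_fraction: float) -> int:
    """Hypothetical helper: number of synthetic rare-scenario clips to add so
    that rare clips make up at least `target_fraction` of the combined dataset.

    Solves (p*N + S) / (N + S) = q for S, giving S = N * (q - p) / (1 - q).
    """
    if real_clips <= 0:
        raise ValueError("real_clips must be positive")
    if not 0.0 <= rare_fraction < target_fraction < 1.0:
        raise ValueError("need 0 <= rare_fraction < target_fraction < 1")
    exact = real_clips * (target_fraction - rare_fraction) / (1.0 - target_fraction)
    # Round up so the target share is reached rather than narrowly missed.
    return math.ceil(exact)


def rare_share(real_clips: int, rare_fraction: float, synthetic_clips: int) -> float:
    """Share of rare-scenario clips after adding synthetic rare clips."""
    rare_real = real_clips * rare_fraction
    return (rare_real + synthetic_clips) / (real_clips + synthetic_clips)


if __name__ == "__main__":
    # Illustrative numbers only: 10 million real clips, 0.1% rare, 5% target.
    n, p, q = 10_000_000, 0.001, 0.05
    s = synthetic_clips_needed(n, p, q)
    print(s, round(rare_share(n, p, s), 4))
```

With these made-up numbers, about 515,790 synthetic clips would be needed. The formula also shows why the requirement grows sharply as the target share q approaches 1, which is one way to read the claim that real-world collection alone cannot keep up.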

Based on the capabilities of VLA and world models, the second trend I want to share is that the future will bring not only autonomous driving but also autonomous execution. Under such a system, we believe the car of the future is the scenario most likely to evolve toward robots and the largest scenario for deploying them.

Building on the leading model capabilities of Geely’s AI technology ecosystem, after deep integration we will launch a new AI+ smart driving brand, and this year the industry’s first mass-production-ready L3 smart driving solution will be available on Geely models. Chen Qi will give a more detailed explanation in the next session of the press conference.

Having talked about models, I want to mention another of the most important terms in AI: the Scaling Law.

As an old Chinese saying goes, quantitative change leads to qualitative change.

If we simply compare today’s mainstream smart driving models with the most cutting-edge foundation models, we find a gap of two or even three orders of magnitude in computing power, algorithms, and data consumption.

For example, in terms of data volume, current smart driving models use about 10 million video clips, while our foundation model uses roughly 1 billion. In terms of object types, smart driving recognizes obstacles on the order of tens to 100 types; most categories cannot be recognized and are simply classified as generic movable or immovable obstacles. Large models, by contrast, can visually recognize at least 100,000 categories. So I think a true AI model is one to two generations ahead of the intelligent models in today’s cars, which also means the field of AI+ cars has only just begun and holds enormous potential.
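The orders-of-magnitude comparison above is simple arithmetic on the figures quoted in the speech. As a rough check, treating “tens to 100” obstacle types as at most about 10^2:

$$
\frac{10^{9}\ \text{clips}}{10^{7}\ \text{clips}} = 10^{2}
\quad\text{(two orders of magnitude in training data)}
$$

$$
\frac{\geq 10^{5}\ \text{categories}}{\leq 10^{2}\ \text{categories}} \geq 10^{3}
\quad\text{(at least three orders of magnitude in recognized categories)}
$$

If a smart driving model recognizes only a few dozen obstacle types, the category gap widens toward four orders of magnitude.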

Therefore, I think the third major trend is the order-of-magnitude upgrade of large models for the Internet of Vehicles. We will see the Scaling Law at work not only in the car itself but across the complete car + cloud system. In this way, we will truly innovate on the Internet of Vehicles and incubate more AI-enabled scenarios.

Forrest Gump also mentioned just now that Geely, together with its technology ecosystem partners, has established the world’s only smart car computing power alliance, Xingzhi Computing Center 2.0, with total computing power raised to 23.5 EFLOPS. This is definitely the highest among all Chinese car companies. With such strong AI infrastructure, we can accelerate the Scaling Law of the Internet of Vehicles, bring the strongest AI models to our cars, and open up unlimited space for intelligent experiences in the future.

To summarize, my speech today focused on three technology trends in the shift from car + AI to AI + car: the birth of supernatural human-computer interaction, the full implementation of autonomous driving and autonomous execution, and the order-of-magnitude upgrade of large models for the Internet of Vehicles. In every direction, Geely’s AI ecosystem has deep technical accumulation and product plans, and it will continue to bring everyone surprises this year.

Finally, I want to use a video made by AI to help everyone imagine the bright future of AI+ cars. The video depicts a middle-aged man leaving his boss’s office and walking out of an office building on a rainy night in the city. He takes out his phone and summons his car with one tap.

Here, the car has become a super-intelligent agent. It is not only a reliable, experienced driver but also an emotional companion, making the car our smartest and most comfortable intelligent travel companion.

I believe that with the deep integration of Geely’s AI technology ecosystem, this day will come soon! AI+ cars: the future is promising!