Text | Big Model Home

How big is the gap between DeepSeek-R1 7B, 32B, and 671B?

Let's start with the conclusion: compared with the full-strength 671B DeepSeek-R1, the distilled versions are like beef-flavored meatloaf next to real beef meatloaf.…

DeepSeek has recently become the hottest topic in the AI world. On the one hand, it achieves efficient training and inference through innovations such as a sparsely activated mixture-of-experts (MoE) architecture, optimizations to the MLA (multi-head latent attention) mechanism, and its expert allocation strategy, while cutting API call costs dramatically, to an industry-leading level. On the other hand, DeepSeek passed 100 million users in just 7 days, faster than OpenAI's ChatGPT, which took 2 months.

Tutorials on deploying DeepSeek-R1 locally have sprung up across online platforms like bamboo shoots after rain. However, these tutorials usually tell you how powerful DeepSeek-R1 is, but not how far the locally deployed distilled versions fall short of the full-strength version.

It is worth noting that the smaller DeepSeek-R1 models released so far were created by distilling R1 into Qwen or Llama base models, shrinking the size so that devices with different levels of performance can run a DeepSeek-R1 model.

In other words, whether it is the 7B or the 32B DeepSeek-R1, it is essentially an R1-flavored Qwen model; the difference is like that between beef-flavored meatloaf and real beef meatloaf. The distilled model picks up some of R1's characteristics, but it is really the Qwen base model that carries out the R1-style reasoning.

There is no doubt that as a model shrinks, its performance gets worse and the gap with the full-strength R1 widens. Today, Big Model Home looks at just how large the difference is between DeepSeek-R1 at different sizes and the full-strength version.

Language proficiency test

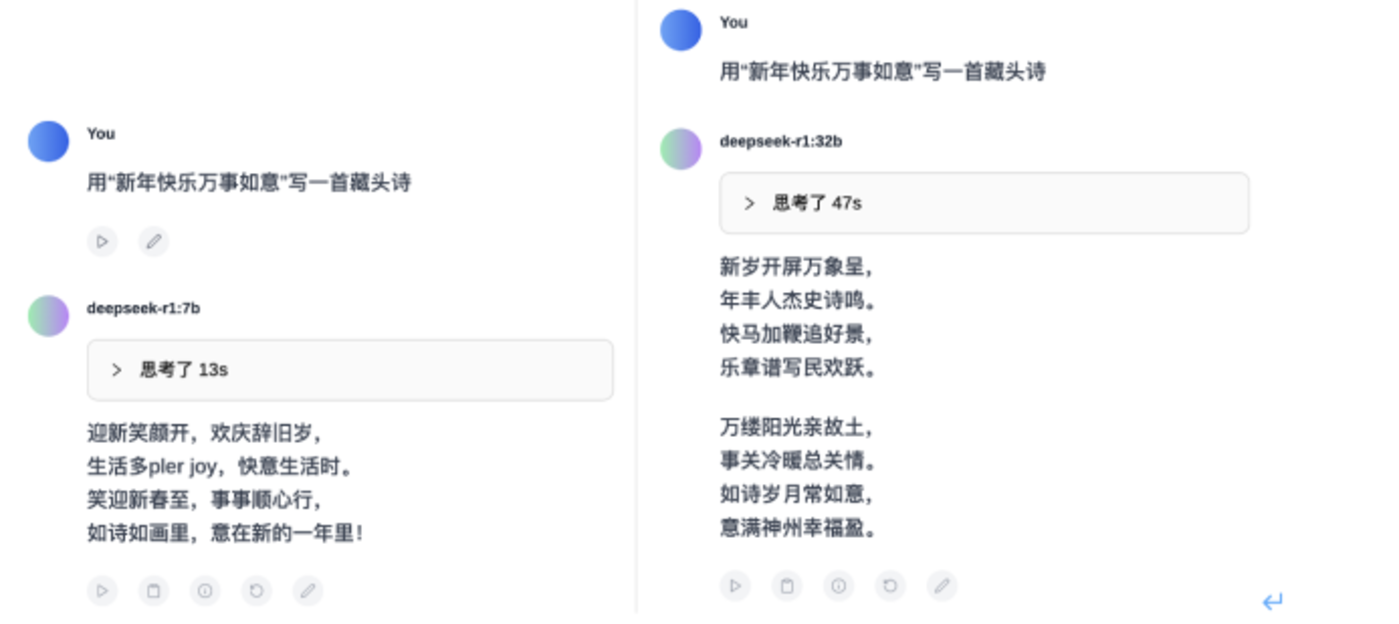

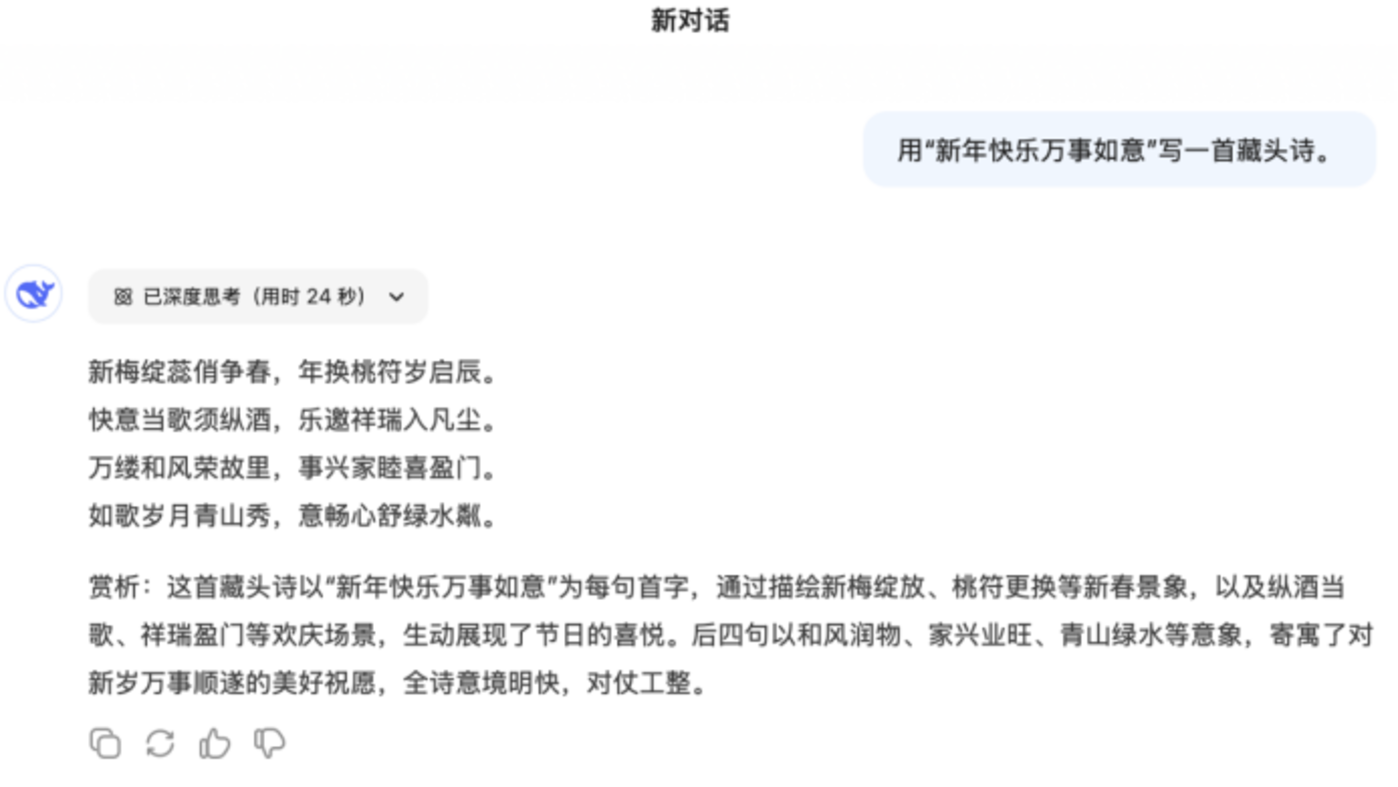

In the language proficiency test, Big Model Home asked the 7B, 32B, and 671B DeepSeek-R1 models to write an acrostic poem on "Happy New Year, all the best."

Large models have been tested on this kind of task countless times, and many people regard it as child's play for an LLM.

However, as the saying goes, "if nothing goes wrong, something will go wrong." In this round, the 7B R1 was the first to fail!

As shown, the 7B R1's output (left) is neither an acrostic nor anything like a poem, and it even switches to English partway through. Clearly, the 7B model does not reach a passing grade even on the most basic text generation.

In contrast, the 32B R1 produces normal text and successfully completes the acrostic poem. Although the rhyme has some flaws, the seven-character lines are neat and the content contains no logical errors.

Unsurprisingly, the full-strength R1 performs best. Its couplets are neat and its rhymes apt, and it also adds an appreciation of the poem, explaining that the acrostic spelling out "Happy New Year, all the best" carries a wish for everything to go well in the new year.

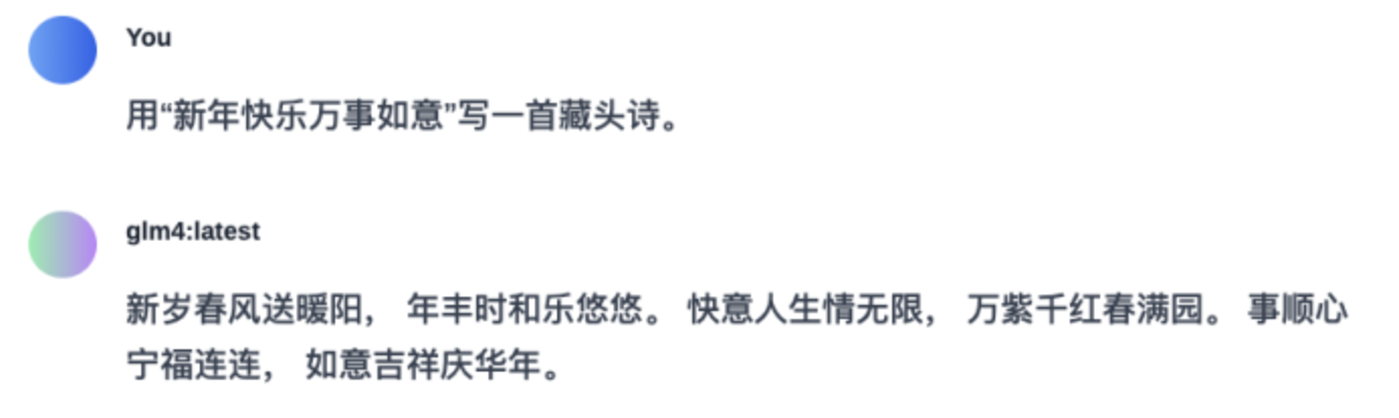

To check whether the decline in language ability comes down to small size, Big Model Home also ran the same prompt on GLM4-9B, a model of similar size. GLM4's language expression turned out to be no worse than DeepSeek-R1's. This suggests that once R1 is shrunk past a certain point, even its basic text abilities suffer badly.

Web search summarization test

After testing language ability, we combined R1 with web search to test how well it summarizes web content at different sizes.

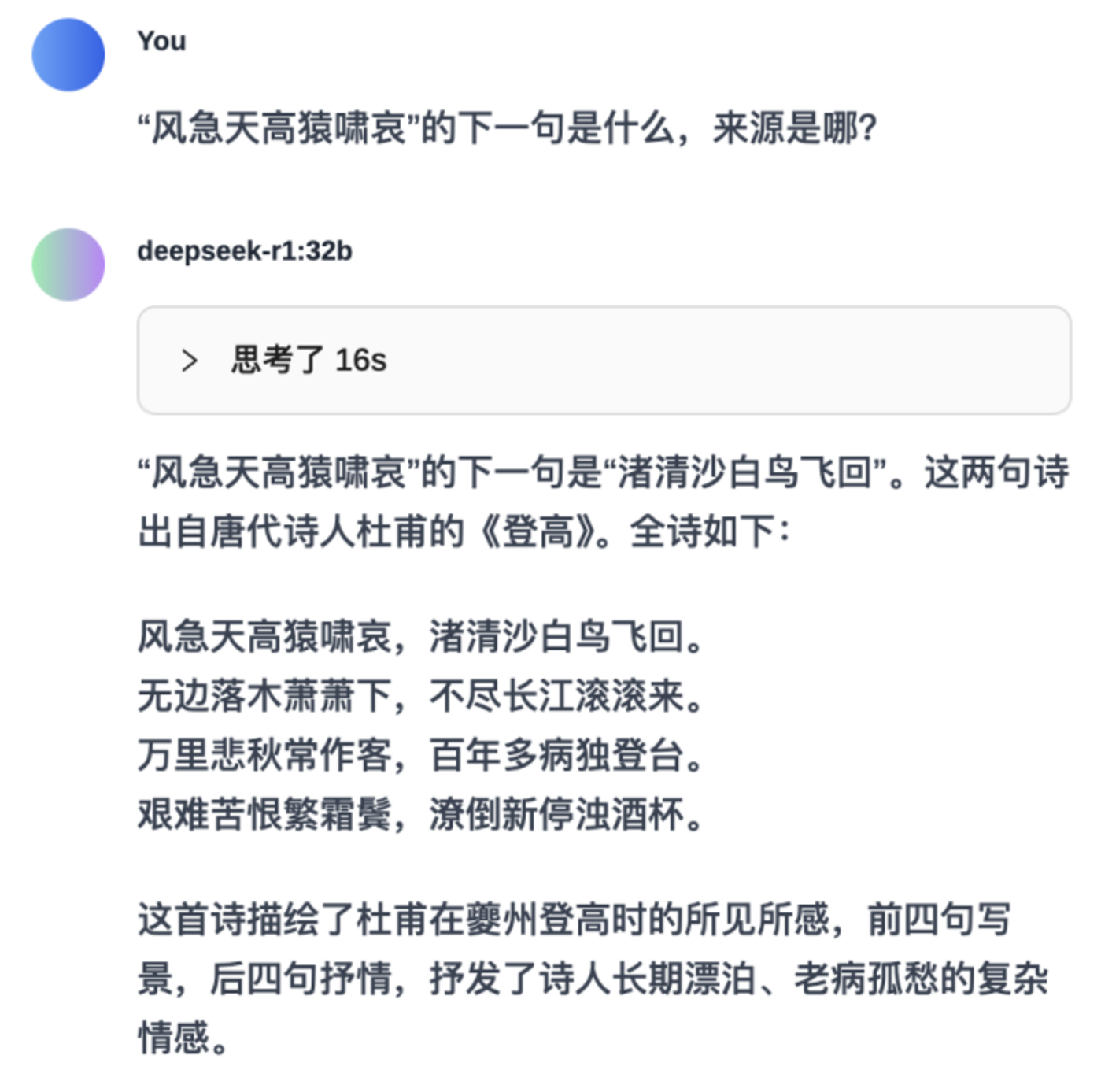

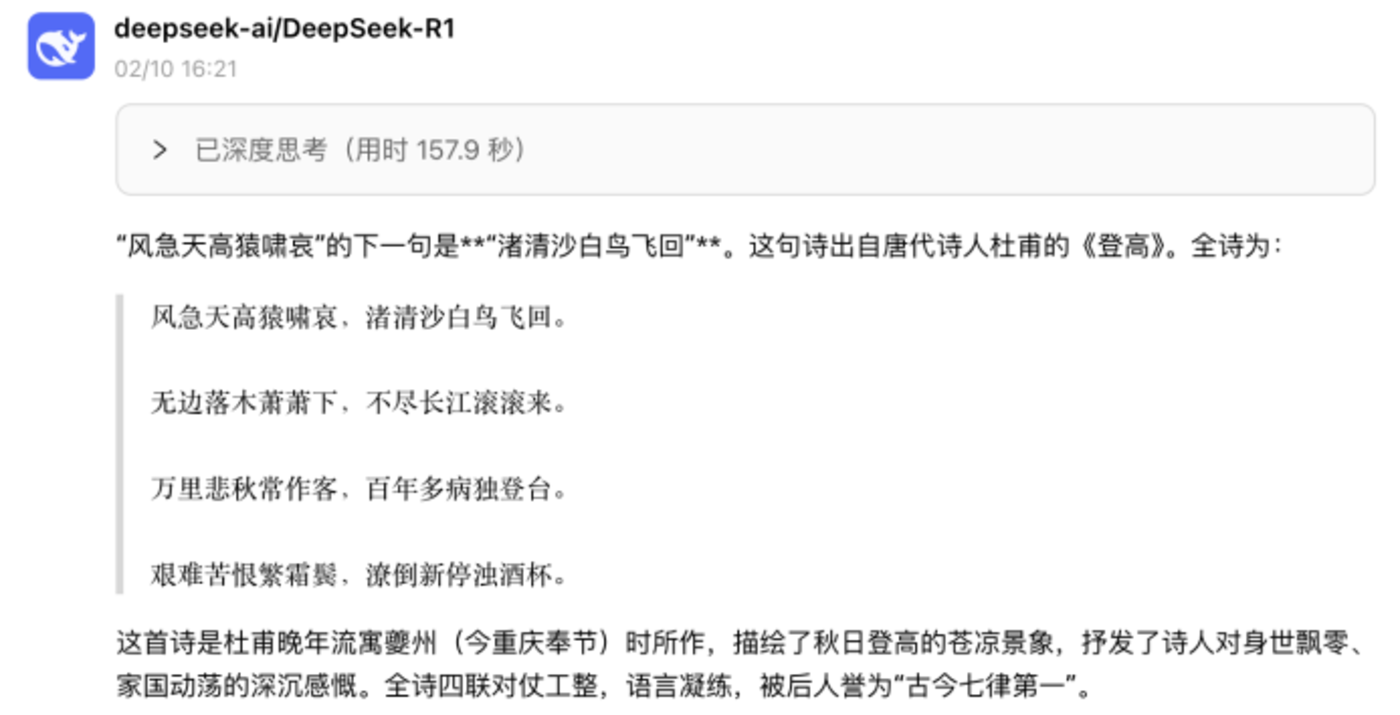

Big Model Home used Du Fu's "Climbing High" to test how R1 performs with web search enabled.

At first glance, the 7B model's output (left) looks good enough, but in actual testing Big Model Home found it to be unstable: occasionally it misreads the poem, as shown on the right. This shows that even with the same web search results, the shrunken R1 suffers heavily in language understanding and generation.

In contrast, the 32B R1's output is relatively stable. Although its answers vary between showing the entire poem (left) and only a single line (right), it understands the poem far more accurately.

The full-strength R1 is still the best performer. It not only presents the full poem but also adds commentary and background information, making its answers more informative and professional.

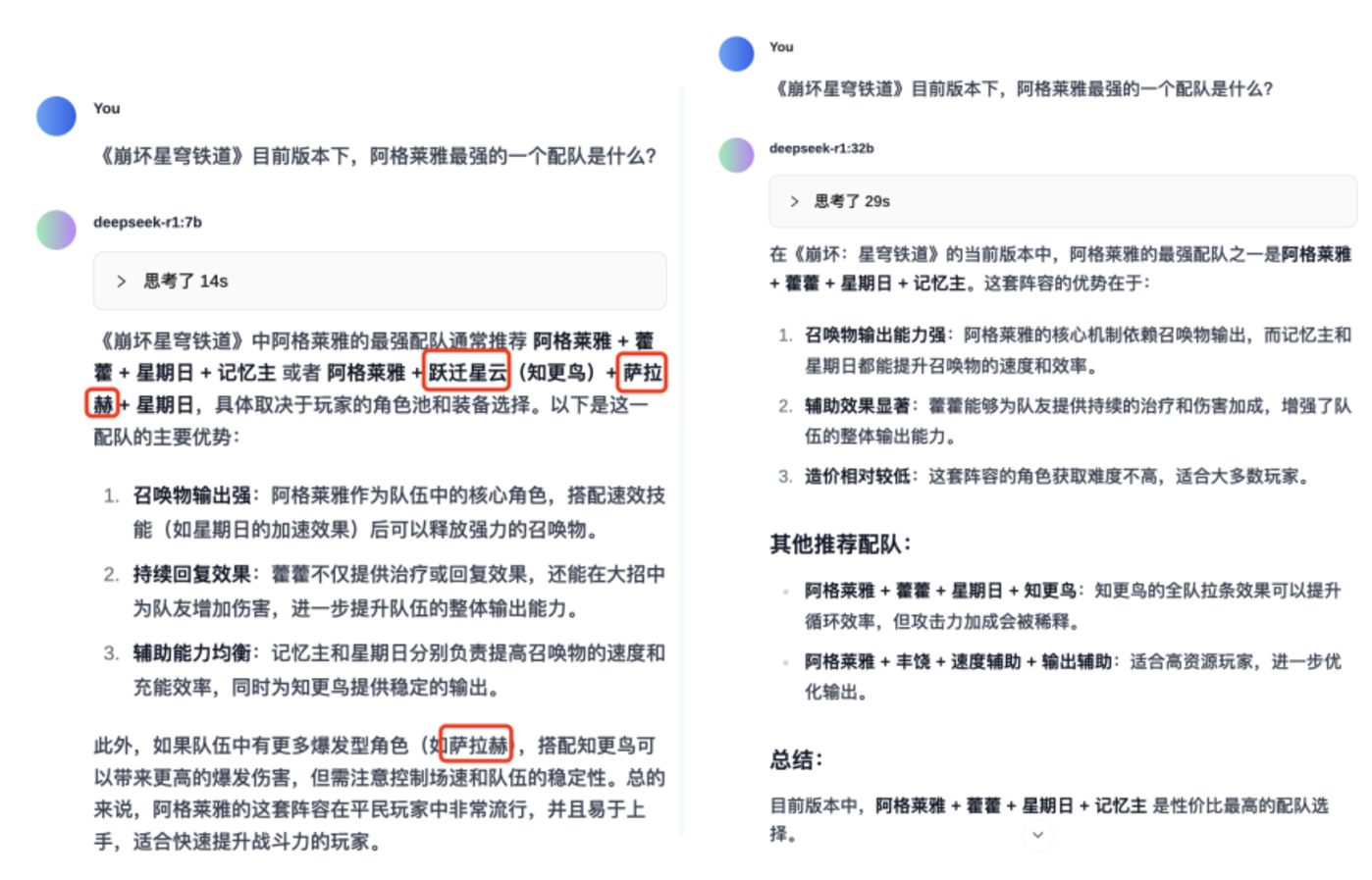

In another test, Big Model Home asked the 7B and 32B models for team compositions built around a character in a currently popular game, to test their language understanding.

In this test, the 7B model named characters that do not exist in the game, while the 32B model got the character names right. The 32B model's reasons for its team recommendations were also more sound and reasonable.

Logical reasoning test

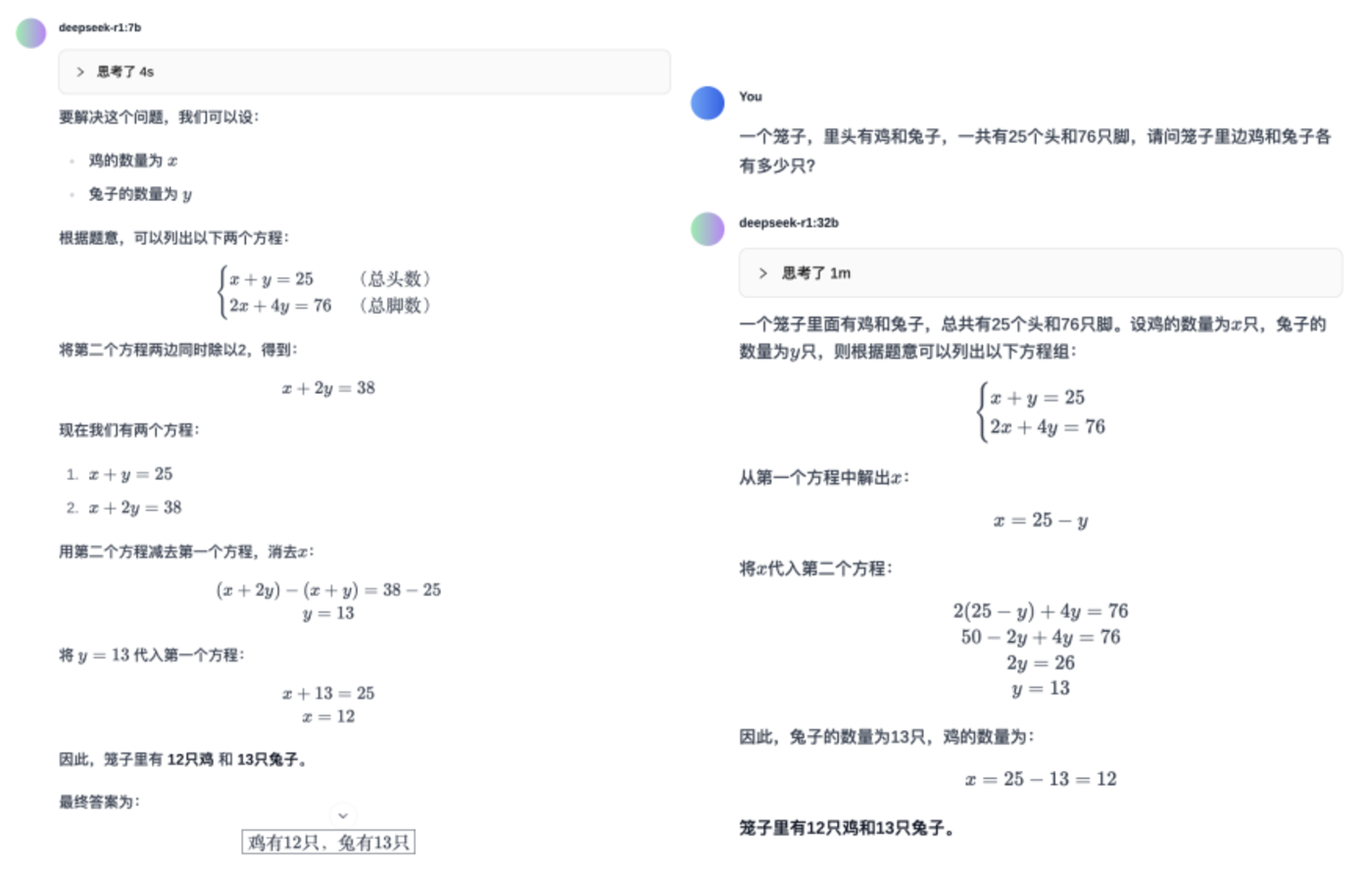

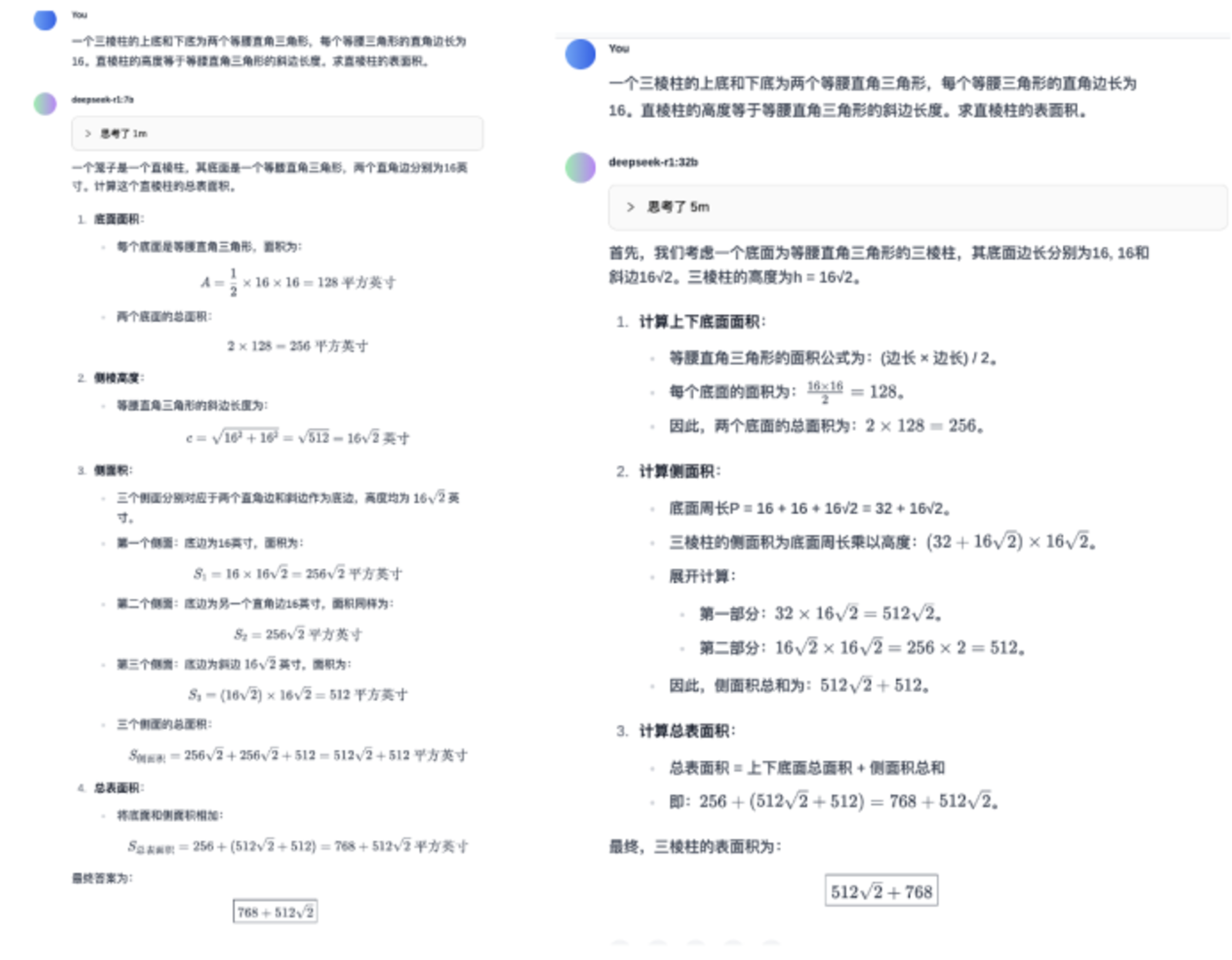

In the second part of the test, we used the classic chicken-and-rabbit cage problem to test R1 models of different sizes. The prompt was: "There is a cage with chickens and rabbits in it. There are a total of 25 heads and 76 feet. How many chickens and rabbits are there in the cage?"
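For reference, the expected answer can be checked with the textbook "assume every animal is a chicken" method: 25 chickens would have 50 feet, and each rabbit adds 2 more, so the 26 extra feet mean 13 rabbits and 12 chickens. A minimal Python sketch of that reasoning follows; the function name `solve_chicken_rabbit` is our own illustration, not something used in the test.

```python
def solve_chicken_rabbit(heads: int, feet: int) -> tuple[int, int]:
    """Return (chickens, rabbits) for a cage with the given heads and feet.

    Chickens have 2 feet and rabbits have 4. If every animal were a chicken
    there would be 2 * heads feet; each rabbit contributes 2 extra feet.
    """
    extra_feet = feet - 2 * heads
    if extra_feet < 0 or extra_feet % 2 != 0:
        raise ValueError("no non-negative integer solution")
    rabbits = extra_feet // 2
    chickens = heads - rabbits
    if chickens < 0:
        raise ValueError("no non-negative integer solution")
    return chickens, rabbits


chickens, rabbits = solve_chicken_rabbit(25, 76)
print(f"chickens={chickens}, rabbits={rabbits}")  # prints chickens=12, rabbits=13
```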

Since the chicken-and-rabbit problem may be too simple for R1, we also posed a harder one: "The upper and lower bases of a right triangular prism are two isosceles right triangles, each with legs of length 16. The height of the prism equals the length of the hypotenuse of the isosceles right triangle. Find the surface area of the prism."
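For reference, the prism problem has a closed-form answer. With legs of 16, the hypotenuse and therefore the height are both 16√2, so the surface area is 2 × (16²/2) + (16 + 16 + 16√2) × 16√2 = 256 + 512√2 + 512 = 768 + 512√2 ≈ 1492.08. The short Python check below computes the same quantity; the helper name `prism_surface_area` is hypothetical and simply encodes "two triangular bases plus the lateral faces."

```python
import math


def prism_surface_area(leg: float) -> float:
    """Surface area of a right prism whose bases are isosceles right
    triangles with legs `leg` and whose height equals the hypotenuse."""
    hypotenuse = leg * math.sqrt(2)
    height = hypotenuse                      # given in the problem statement
    base_area = leg * leg / 2                # area of one triangular base
    perimeter = 2 * leg + hypotenuse         # perimeter of one base
    lateral_area = perimeter * height        # the three rectangular faces
    return 2 * base_area + lateral_area


area = prism_surface_area(16)
closed_form = 768 + 512 * math.sqrt(2)
print(f"surface area: {area:.2f} (closed form {closed_form:.2f})")
```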

Somewhat surprisingly, both the 7B and 32B models output the correct answers, which suggests that distillation preserves as much of R1's mathematical ability as possible.

Coding ability test

Finally, let's compare the coding abilities of the 7B and 32B models. Here, Big Model Home asked R1 to write "a Snake game that can be opened in the browser."

The code is too long to show here, so let's go straight to the results:

The game generated by DeepSeek-R1 7B has a bug: it is just a static picture, and the snake cannot move.

The game generated by DeepSeek-R1 32B runs normally: the snake can be steered with the keyboard arrow keys, and the score panel updates correctly.

Local deployment has a high barrier, so ordinary users should think twice

This series of tests shows clear gaps between the 7B and 32B DeepSeek-R1 and the full-strength 671B version. Local deployment is therefore better suited to building private databases or to capable developers doing fine-tuning and deployment. For ordinary users, the barrier is relatively high, in terms of both technical skill and hardware.

Official benchmark results also show that the 32B DeepSeek-R1 achieves 90% of the 671B model's performance and slightly outperforms OpenAI's o1-mini on some benchmarks such as AIME 2024, GPQA Diamond, and MATH-500.

Our hands-on experience broadly matches the official results. Models of 32B and above are just about usable for local deployment, but anything smaller is too weak in basic capabilities, and its output is even worse than that of other models of the same size. In particular, forget about the 1.5B, 7B, and 8B models recommended by so many local-deployment tutorials online: apart from low hardware requirements and fast speed, they are not pleasant to use.

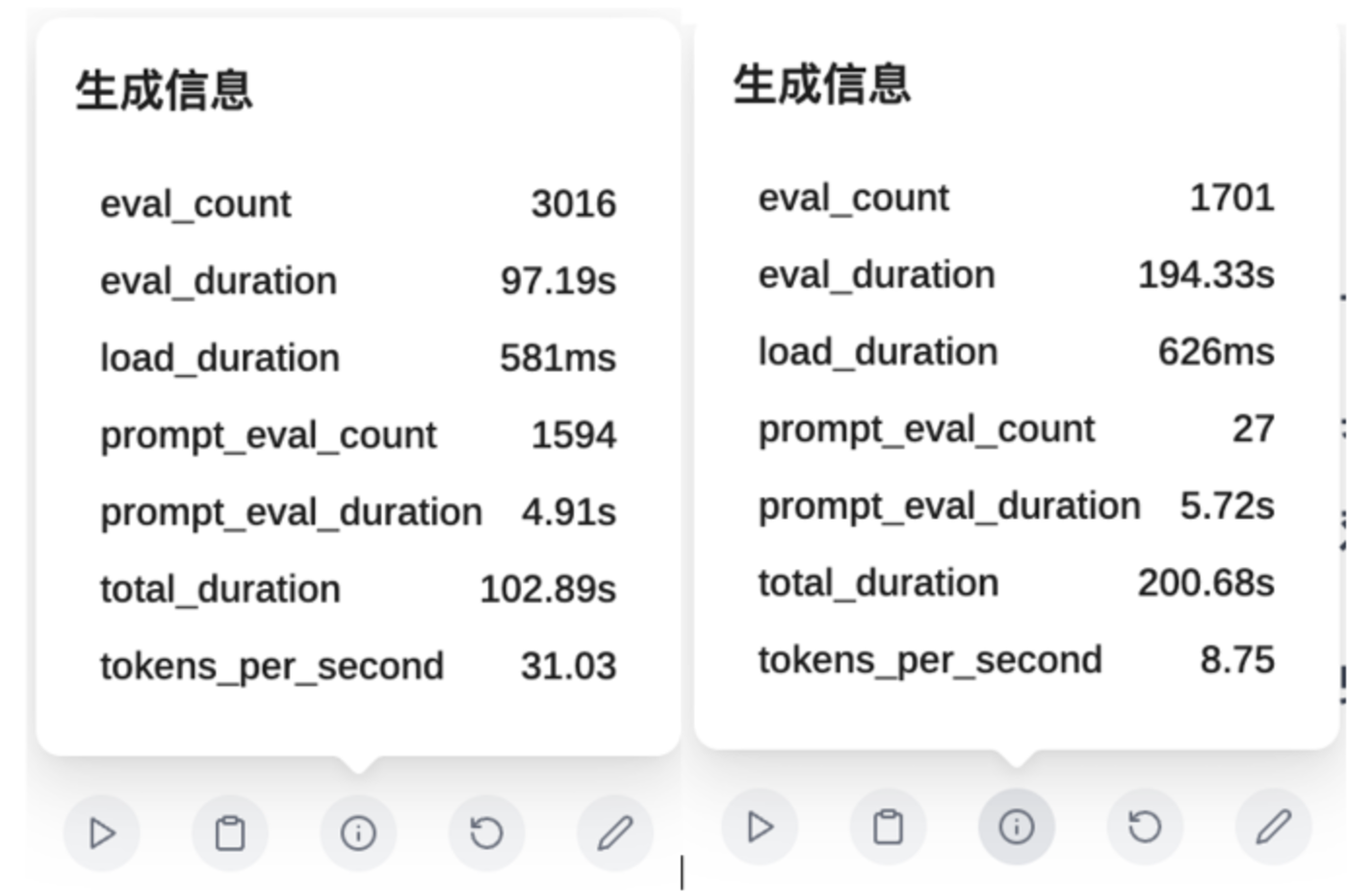

Left: generation statistics for the 7B model; right: generation statistics for the 32B model. The 7B model generates 3.5 times as fast as the 32B model.

So, in conclusion, if you really want to deploy DeepSeek-R1 locally, Big Model Home recommends starting at 32B for a reasonably complete large-model experience.

So, what is the cost of deploying a 32B model?

Photo source: 51CTO

To run the 32B R1 model, the officially recommended configuration is 64GB of RAM and 32-48GB of video memory (VRAM). With a matching CPU, such a desktop costs about 20,000 yuan. Even the minimum configuration (20GB RAM + 24GB VRAM) costs more than 10,000 yuan (unless you use the API instead).
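A rough way to see why VRAM is the bottleneck is to estimate the memory taken by the weights alone: parameter count × bits per parameter ÷ 8. The sketch below assumes 16-, 8-, and 4-bit precision as illustrative quantization levels; the article does not say which precision the recommended configurations assume, and the estimate deliberately ignores the KV cache, activations, and runtime overhead, so real requirements are higher. The helper `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes).

    ASSUMPTION: excludes KV cache, activations, and framework overhead,
    so this is a lower bound rather than a full requirement.
    """
    return params_billion * bits_per_param / 8


# Illustrative precisions; the actual deployment format may differ.
for bits in (16, 8, 4):
    print(f"32B weights at {bits}-bit: ~{weight_memory_gb(32, bits):.0f} GB")
```

Under these assumptions, 16-bit weights alone would need about 64 GB, while a 4-bit quantized 32B model needs about 16 GB, which plausibly explains why 24GB of VRAM is treated as a practical floor.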

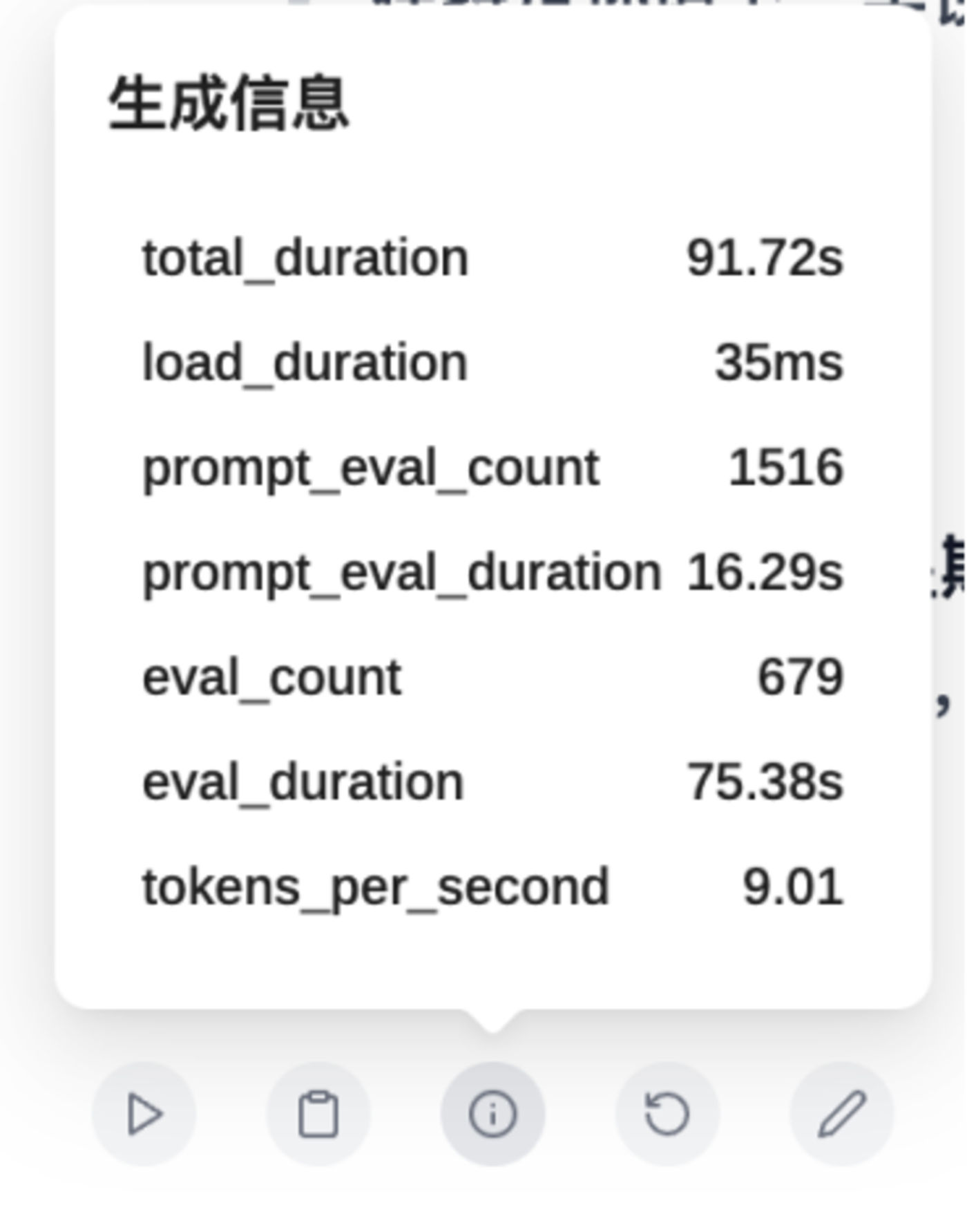

My device is an M2 Max MacBook Pro (12-core CPU, 30-core GPU, 32GB unified memory). Running the 32B model, it manages only 8-9 tokens per second, which is very slow. Meanwhile, whole-system power draw stays at 60-80W, which means that running the model continuously on battery gives only about 1 hour of use.
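The "about 1 hour" figure follows from dividing battery capacity by power draw, and the slowness can be made concrete by dividing an answer's length by the decoding rate. The sketch below uses the measured 60-80W and 8-9 tokens per second from the text; the battery capacities (70 Wh and 100 Wh) and the 1,000-token answer length are illustrative assumptions, not figures from the article.

```python
def battery_hours(capacity_wh: float, draw_w: float) -> float:
    """Hours of runtime at a constant power draw."""
    return capacity_wh / draw_w


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate num_tokens at a steady decoding rate."""
    return num_tokens / tokens_per_second


# ASSUMPTION: battery capacity is not stated in the article;
# 70 Wh and 100 Wh are illustrative values only.
for capacity in (70, 100):
    shortest = battery_hours(capacity, 80)  # at the 80 W upper bound
    longest = battery_hours(capacity, 60)   # at the 60 W lower bound
    print(f"{capacity} Wh: {shortest:.1f}-{longest:.1f} h at 60-80 W")

# ASSUMPTION: a 1,000-token answer, at the midpoint of 8-9 tokens/s.
print(f"1,000 tokens at 8.5 tok/s: ~{generation_seconds(1000, 8.5):.0f} s")
```

Either way, the result lands in the range of roughly one to one and a half hours, and a single long answer takes about two minutes.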

Not only that: after deploying R1 locally, you need additional tools to give the model web access or a local database. Otherwise, its knowledge is cut off from the ever-changing Internet. In most cases, the experience falls far short of today's free large-model products with full web access.

Therefore, for most ordinary users, the local large model you worked so hard to build may not be as simple, convenient, or effective as the mainstream free large-model products on the market. All it really gives you is a locally deployed large model.

The success of the DeepSeek models has not only changed the landscape of technological competition between China and the United States but has also had a profound impact on the global science and technology innovation ecosystem. According to statistics, more than 50 countries have reached cooperation agreements of varying scope with DeepSeek for in-depth cooperation on technology applications and scenario development.

The global attention DeepSeek has attracted shows that artificial intelligence has become an important force reshaping the international landscape. In the face of this unprecedented technological change, the key challenges ahead will be turning advantages in technological innovation into sustained competitiveness while building an open and inclusive cooperation network. For China, this is not only a test of technological strength but also a contest for a voice in scientific and technological innovation.