Image source: Generated by AI

DeepSeek’s frequent reply, “The server is busy, please try again later,” is driving users everywhere crazy.

DeepSeek, little known to the public until then, rose to fame on December 26, 2024, with the launch of V3, a language model that rivals GPT-4o. On January 20, DeepSeek released another language model, R1, which is benchmarked against OpenAI o1. The high quality of the answers produced by its “Deep Thinking” mode, together with early signs that its innovations could make model training dramatically cheaper, then carried DeepSeek and its app into the mainstream. Since then, DeepSeek R1 has been chronically congested: its online search function has been intermittently down, and deep thinking mode frequently shows “server busy”. This has frustrated a large number of users.

DeepSeek began suffering server outages more than a dozen days ago. At noon on January 27, DeepSeek’s official website displayed “DeepSeek web page/api is not available” several times. That day, DeepSeek was the most downloaded iPhone app over the weekend, surpassing ChatGPT on the US download chart.

By February 5, 26 days after launch, DeepSeek’s mobile app had more than 40 million daily active users, compared with 54.95 million for ChatGPT’s mobile app; DeepSeek’s figure was 74.3% of ChatGPT’s. Almost as soon as DeepSeek’s growth curve turned steep, complaints about its busy servers poured in. Users around the world ran into downtime after asking just a few questions. Workarounds sprang up, such as alternative DeepSeek websites; major cloud providers, chipmakers, and infrastructure companies all launched DeepSeek offerings, and tutorials for deploying it yourself were everywhere. But the frenzy has not eased: almost every major vendor in the world claims to support deploying DeepSeek, yet users everywhere still complain that the service is unstable.

What is behind all this?

1. People used to ChatGPT can’t stand a DeepSeek that won’t load

People’s frustration with DeepSeek’s “server busy” message stems from their experience with the previous top AI application, ChatGPT, which rarely got stuck.

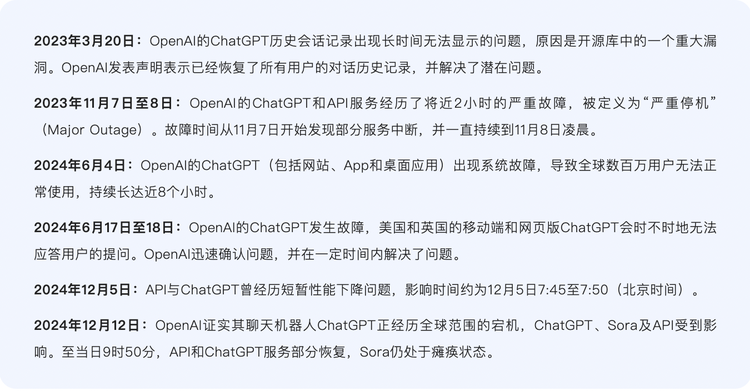

Since OpenAI launched the service, ChatGPT has suffered several P0-level (the most severe incident level) outages, but overall it has been relatively reliable, striking a balance between innovation and stability, and it has gradually become a key piece of infrastructure, much like traditional cloud services.

ChatGPT has had few large-scale outages

ChatGPT’s inference process is relatively stable and consists of two steps: encoding and decoding. In the encoding stage, the input text is converted into vectors that carry its semantic information. In the decoding stage, ChatGPT uses the text generated so far as context and produces the next word or phrase through the Transformer model, repeating until it has generated a complete response. The large model itself uses a decoder architecture, and the decoding stage is the process of outputting tokens (the smallest unit of text a large model works with). Every question put to ChatGPT starts a new inference process.

For example, if ChatGPT is asked “How are you feeling today?”, it encodes the sentence and builds an attention representation at each layer. From the attention representations of all previous tokens, it predicts the first output token, “I”, then decodes it and appends it to the prompt, giving “How are you feeling today? I”. From this new attention representation it predicts the next token, “am”, and it keeps repeating these two steps until it arrives at “How are you feeling today? I am in a good mood.”
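The predict-then-append loop described above can be sketched in a few lines of Python. This is a toy illustration, not how ChatGPT is implemented: the hypothetical `toy_next_token` function simply replays a scripted reply in place of a Transformer forward pass, so only the control flow (predict one token from everything so far, append it, repeat until an end marker) reflects the real process.

```python
PROMPT = "How are you feeling today?"
# Hypothetical scripted reply standing in for a real model; "<eos>" ends generation.
SCRIPTED_REPLY = [" I", " am", " in", " a", " good", " mood", ".", "<eos>"]


def toy_next_token(context: str) -> str:
    """Stand-in for one forward pass: return the next token given the whole context.

    A real model would score every vocabulary entry using attention over all prior
    tokens; this toy only knows PROMPT followed by its own scripted tokens.
    """
    generated = context[len(PROMPT):]
    built = ""
    for tok in SCRIPTED_REPLY:
        if built == generated:
            return tok
        built += tok
    return "<eos>"


def generate(prompt: str, max_new_tokens: int = 32) -> str:
    context = prompt  # the encoded prompt is the initial context
    for _ in range(max_new_tokens):
        token = toy_next_token(context)  # step 1: predict the next token
        if token == "<eos>":
            break
        context += token  # step 2: splice it onto the context, then repeat
    return context


print(generate(PROMPT))  # How are you feeling today? I am in a good mood.
```

Because each new token requires another pass over the growing context, a long answer means many sequential model calls, which is why output length matters so much for serving cost.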

Kubernetes, the container orchestration tool, is ChatGPT’s “behind-the-scenes commander”, responsible for scheduling and allocating server resources. When incoming user load far exceeds what the Kubernetes control plane can handle, the entire ChatGPT system can be paralyzed.

ChatGPT has not gone down many times in total, but that stability rests on the substantial resources behind it, above all abundant computing power, which people tend to overlook.

In general, because inference processes relatively small amounts of data, it demands less computing power than training. Some industry insiders estimate that in typical large-model inference, the model weights occupy the bulk of GPU memory, more than 80%. In practice, of the several models offered in ChatGPT, the default model is smaller than the 671B-parameter DeepSeek-R1. ChatGPT also has far more GPU computing power than DeepSeek, so it naturally performs more stably than DeepSeek-R1.
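To see why the weights dominate memory for a model of this size, here is a rough back-of-the-envelope calculation in Python. It assumes 80 GB per GPU, 1 byte per parameter (FP8) or 2 bytes (BF16), and that weights may take about 80% of memory, loosely following the estimate above; the helper names are hypothetical, and the result ignores parallelism overheads.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus(num_params: float, bytes_per_param: float,
             gpu_mem_gb: float = 80.0, weight_share: float = 0.8) -> int:
    """Smallest GPU count if weights are allowed `weight_share` of total memory.

    The remaining share is left for activations and the KV cache.
    """
    total_gb = weight_memory_gb(num_params, bytes_per_param) / weight_share
    return math.ceil(total_gb / gpu_mem_gb)


params = 671e9  # DeepSeek-V3 / R1 total parameter count
print(weight_memory_gb(params, 1), min_gpus(params, 1))  # FP8:  671.0 GB, 11 GPUs
print(weight_memory_gb(params, 2), min_gpus(params, 2))  # BF16: 1342.0 GB, 21 GPUs
```

Under these assumptions, a single copy of the 671B model needs at least 11 such GPUs at FP8 (21 at BF16) just to be loaded, before it serves a single user.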

DeepSeek-V3 and R1 are both 671B models. Every time the model runs for a user, it performs inference, so the compute reserved for inference must scale with the number of users: with 100 million users, there must be enough GPUs to serve 100 million users. That reserve is not only huge but also separate from, and unrelated to, the compute reserved for training. Judging from information from various sources, DeepSeek’s GPU and compute reserves are clearly insufficient, so it frequently stumbles.
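The scaling argument can be written as a one-line capacity formula. The sketch below is a simplification under stated assumptions: the function name is hypothetical, every user is assumed to stream tokens at a fixed rate, and the throughput figures in the usage lines are made-up round numbers, not measurements of DeepSeek’s or anyone’s hardware.

```python
import math


def gpus_for_inference(peak_concurrent_users: int,
                       tokens_per_sec_per_user: float,
                       tokens_per_sec_per_gpu: float) -> int:
    """GPUs needed so that peak token demand does not exceed serving throughput.

    Nothing about training compute appears as an input: inference capacity
    has to be sized against user demand on its own.
    """
    demand = peak_concurrent_users * tokens_per_sec_per_user  # tokens/s users request
    return math.ceil(demand / tokens_per_sec_per_gpu)


print(gpus_for_inference(1_000_000, 20, 2_000))  # 10000
print(gpus_for_inference(2_000_000, 20, 2_000))  # 20000: twice the users, twice the GPUs
```

The requirement grows linearly with concurrent users, so a sudden jump in popularity translates directly into a GPU shortfall.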

Users accustomed to ChatGPT’s silky-smooth experience find the contrast hard to accept, especially now that their interest in R1 keeps growing.

2. Stuck, stuck, and still stuck

Moreover, on closer comparison, the situations encountered by OpenAI and DeepSeek are very different.

The former is backed by Microsoft. As OpenAI’s exclusive cloud platform, Microsoft Azure hosts ChatGPT, the DALL-E 2 image generator, and the GitHub Copilot coding assistant. This combination has since become the classic cloud + AI paradigm and quickly spread to become an industry standard. DeepSeek, by contrast, is a start-up that, like Google, relies mostly on self-built data centers rather than third-party cloud providers. After reviewing public information, Silicon Star found that DeepSeek had not begun cooperating with cloud vendors or chipmakers at any level (although cloud vendors announced during the Spring Festival that DeepSeek models would run on their platforms, there was no real cooperation).

In addition, DeepSeek’s user growth has been unprecedented, which also means it has had less time than ChatGPT to prepare for heavy load.

DeepSeek’s strong performance comes from holistic optimization at the hardware and system levels. As early as 2019, DeepSeek’s parent company, the quant fund High-Flyer, spent 200 million yuan building the Firefly-1 supercomputing cluster, and by 2022 it had quietly stockpiled 10,000 A100 GPUs. To make parallel training more efficient, DeepSeek developed its own HAI-LLM training framework. The industry believes the Firefly cluster may use thousands to tens of thousands of high-performance GPUs (such as NVIDIA A100/H100 or domestic chips) to provide powerful parallel computing. The Firefly cluster currently supports training models such as DeepSeek-R1 and DeepSeek-MoE, which perform close to GPT-4 on complex tasks such as mathematics and code.

The Firefly cluster reflects DeepSeek’s exploration of new architectures and methods. It has also led outsiders to believe that, through such innovations, DeepSeek cut training costs and, with only a fraction of the compute used for the most advanced Western models, trained R1 to performance comparable with top AI models. According to SemiAnalysis’s estimates, however, DeepSeek actually has a huge compute reserve: a total of 60,000 NVIDIA GPUs, including 10,000 A100s, 10,000 H100s, 10,000 “special edition” H800s, and 30,000 “special edition” H20s.

This would seem to give R1 ample GPU capacity. In practice, however, R1 is a reasoning model benchmarked against OpenAI’s o1, and models of this kind need considerably more compute devoted to generating responses. It is unclear whether the compute DeepSeek saved on training outweighs the sudden surge in inference costs.

It is worth noting that DeepSeek-V3 and DeepSeek-R1 are both large language models, but they work differently. DeepSeek-V3 is an instruction model, similar to ChatGPT: it receives a prompt and generates text in reply. DeepSeek-R1 is a reasoning model: when a user asks R1 a question, it first carries out a large amount of reasoning and only then generates the final answer. The tokens R1 outputs begin with a long chain of thought, in which the model explains and breaks down the problem before answering, and all of this reasoning is rapidly generated as tokens.
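The cost of that chain of thought can be made concrete with simple token arithmetic. The sketch below assumes, purely for illustration, a 300-token answer, 2,000 extra reasoning tokens for an R1-style reply, and a fixed budget of 60,000 output tokens per minute; none of these figures come from DeepSeek, and the helper names are hypothetical.

```python
def tokens_per_reply(answer_tokens: int, reasoning_tokens: int = 0) -> int:
    """Total tokens decoded for one reply: chain of thought first, then the answer."""
    return reasoning_tokens + answer_tokens


def replies_per_minute(token_budget_per_min: int, tokens_each: int) -> int:
    """How many whole replies a fixed per-minute token budget can produce."""
    return token_budget_per_min // tokens_each


# Hypothetical sizes for a V3-style reply and an R1-style reply to the same question.
instruct = tokens_per_reply(answer_tokens=300)
reasoning = tokens_per_reply(answer_tokens=300, reasoning_tokens=2_000)

print(reasoning / instruct)                   # ~7.67x more tokens for the same answer
print(replies_per_minute(60_000, instruct))   # 200
print(replies_per_minute(60_000, reasoning))  # 26
```

With the same hardware, the reasoning model in this toy setup answers roughly one-eighth as many questions per minute, which is why reasoning models need more compute on the response side.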

Wen Tingcan, vice president of Yaotu Capital, believes DeepSeek’s huge compute reserve refers to the training stage. During training, the team can plan and anticipate its compute needs, so shortfalls are unlikely; inference compute, however, is highly uncertain, because it depends mainly on the number of users and how much they use the product, and so it fluctuates. “Inference compute normally grows in a predictable way. But as DeepSeek became a phenomenal product, its user base and usage exploded in a short period, driving an explosive increase in inference compute demand, hence the stalls.”

Guizang, a model product designer and independent developer active on Jike, agreed that the number of cards is the main reason DeepSeek stalls. He argued that DeepSeek, as the most downloaded mobile app in 140 markets worldwide, could not possibly cope with its current cards, and new cards would not help right away either, because “it takes time to bring new cards online in the cloud.”

Chen Yunfei, developer of the AI-native app Kitty Fill Light, holds a similar view: “The hourly cost of running NVIDIA A100, H100, and other chips has a fair market price. In terms of the inference cost of output tokens, DeepSeek is more than 90% cheaper than OpenAI’s comparable model o1, which is close to everyone’s calculations. So the MoE model architecture itself is not the main problem; rather, the number of GPUs DeepSeek owns determines the maximum number of tokens it can produce and serve per minute. Even if more GPUs are devoted to serving users’ inference rather than pre-training research, the ceiling is still there.”
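The “ceiling” Chen describes can be illustrated with a toy admission controller: a fixed number of GPUs yields a fixed token budget per minute, and once it is used up, further requests are turned away. This is a hypothetical sketch (the class, its parameters, and the choice to reject rather than queue are all assumptions), not a description of how DeepSeek actually schedules requests.

```python
from dataclasses import dataclass

BUSY = "The server is busy, please try again later."


@dataclass
class TokenBudget:
    """Admit requests until a fixed per-minute token ceiling is used up.

    The ceiling is num_gpus * tokens_per_min_per_gpu, so the only ways to raise
    it are adding GPUs or making each GPU produce more tokens.
    """
    num_gpus: int
    tokens_per_min_per_gpu: int
    used: int = 0

    @property
    def ceiling(self) -> int:
        return self.num_gpus * self.tokens_per_min_per_gpu

    def try_admit(self, expected_tokens: int) -> str:
        # Reject (rather than queue) once this minute's budget would be exceeded.
        if self.used + expected_tokens > self.ceiling:
            return BUSY
        self.used += expected_tokens
        return "ok"

    def new_minute(self) -> None:
        self.used = 0


budget = TokenBudget(num_gpus=2, tokens_per_min_per_gpu=5_000)  # ceiling: 10,000 tokens/min
print([budget.try_admit(2_300) for _ in range(5)])  # four "ok", then the busy message
```

Optimizing the model raises `tokens_per_min_per_gpu`, and buying or renting cards raises `num_gpus`; anything else only changes who gets turned away.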

Other industry insiders told Silicon Star that, at root, DeepSeek’s failures stem from a poorly built private cloud.

Cyberattacks are another factor behind R1’s stalls. On January 30, media learned from cybersecurity company Qianxin that attacks on DeepSeek’s online services had suddenly intensified, with attack commands surging hundreds of times over January 28 levels. Qianxin’s XLab observed at least two botnets taking part in the attacks.

However, there seems to be an obvious fix for the stalls in R1’s own service: have third parties provide it. This was the liveliest spectacle of the Spring Festival, as vendors of all kinds deployed services to meet the demand for DeepSeek.

On January 31, NVIDIA announced that DeepSeek-R1 is now available on NVIDIA NIM. Just before that, DeepSeek’s impact had wiped nearly US$600 billion off NVIDIA’s market value overnight. On the same day, AWS users became able to deploy DeepSeek’s latest R1 foundation model on Amazon Bedrock and Amazon SageMaker AI. AI upstarts including Perplexity and Cursor then integrated DeepSeek one after another. Microsoft had moved even earlier, deploying DeepSeek-R1 on Azure and GitHub ahead of Amazon and NVIDIA.

Starting February 1, the fourth day of the Lunar New Year, Huawei Cloud, Alibaba Cloud, ByteDance’s Volcano Engine, and Tencent Cloud joined in, generally offering deployment services for DeepSeek models across the full range of sizes. Next came AI chipmakers such as Biren Technology, Hanbo Semiconductor, Ascend, and MetaX, which claim to have adapted either the original DeepSeek models or smaller distilled versions. Among software companies, UFIDA, Kingdee, and others have integrated DeepSeek models into some of their products to make them more capable. Finally, device makers such as Lenovo, Huawei, and Honor have built DeepSeek models into some products as on-device personal assistants and smart car cockpits.

By now, DeepSeek’s own merits have drawn a huge and wide-ranging circle of partners, including domestic and overseas cloud vendors, telecom operators, securities firms, national platforms, and the national supercomputing internet platform. Because DeepSeek-R1 is a fully open-source model, the service providers that integrate it have become beneficiaries of the model. This has greatly amplified DeepSeek’s reach, but it has also made stalls more frequent. Service providers and DeepSeek itself are increasingly overwhelmed by swarms of users, and none has found a real fix for stable access.

Because the original DeepSeek V3 and R1 models each have 671 billion parameters and are best suited to running in the cloud, and because cloud vendors have more compute and inference capacity, the vendors launched DeepSeek deployment services to lower the barrier for enterprise use. After deploying the models, they expose APIs for them, which were expected to offer a better experience than DeepSeek’s official API.

In reality, none of these services has solved the experience problems of running DeepSeek-R1. Outsiders assume service providers have plenty of cards, but developers report that the R1 instances these providers deploy respond just as unstably, with complaints as frequent as those about the official R1. This is largely because the number of cards that can be allocated to R1 inference is limited.

“R1 remains extremely popular. Service providers also have to serve the other models they host, so the cards they can devote to R1 are very limited. Given how popular R1 is, anyone who offers it at a relatively low price gets overwhelmed,” Guizang, the model product designer and independent developer, explained to Silicon Star.

Model deployment optimization is a broad field, involving work at multiple levels, from the end of training through deployment on actual hardware. For DeepSeek’s stalls, however, the causes may be simpler, such as a model that is too large and insufficient optimization before launch.

Before a popular large model launches, it faces multiple challenges spanning technology, engineering, and business: consistency between training data and production data; the effect of data latency and freshness on inference quality; online inference efficiency and resource consumption; insufficient model generalization; and engineering concerns such as service stability and API and system integration.

Many popular models put great emphasis on inference optimization before launch, because of two problems: compute time and memory. The former means inference latency is too long, which degrades the user experience or even misses latency requirements outright, so the service feels stuck. The latter means the model has so many parameters that it consumes large amounts of GPU memory, to the point that it may not fit on a single GPU, which also causes stalls.
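The latency side of this can be sketched as a simple estimate: time to process the prompt plus time to decode each output token in turn. The throughput figures and the 30-second “feels stuck” budget below are hypothetical, chosen only to show how output length drives waiting time; real systems batch requests and vary widely.

```python
def response_latency_s(prompt_tokens: int, output_tokens: int,
                       prefill_tok_per_s: float, decode_tok_per_s: float) -> float:
    """Rough wall-clock time for one reply: read the prompt, then decode token by token."""
    time_to_first_token = prompt_tokens / prefill_tok_per_s
    return time_to_first_token + output_tokens / decode_tok_per_s


def feels_stuck(latency_s: float, budget_s: float = 30.0) -> bool:
    """True if the reply takes longer than the (hypothetical) latency budget."""
    return latency_s > budget_s


short_reply = response_latency_s(500, 300, prefill_tok_per_s=5_000, decode_tok_per_s=20)
long_reply = response_latency_s(500, 2_300, prefill_tok_per_s=5_000, decode_tok_per_s=20)
print(round(short_reply, 1), feels_stuck(short_reply))  # 15.1 False
print(round(long_reply, 1), feels_stuck(long_reply))    # 115.1 True
```

In this model, the decode term dominates, so anything that raises per-token speed or shortens outputs cuts the wait far more than speeding up prompt processing.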

Wen Tingcan explained the reason to Silicon Star. He said the challenges service providers face in offering R1 come down to the DeepSeek model’s special structure: a very large model combined with an MoE (Mixture of Experts, an efficient computing method) architecture. “Optimization (by service providers) takes time, but market buzz has a time window, so everyone launches first and optimizes later, rather than fully optimizing before launch.”
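Why MoE is both efficient and awkward to serve can be seen in a toy router: for each token, only the top-k experts run, but all experts must stay loaded in memory. The sketch below uses a generic top-k softmax router with four scalar “experts”; it is an assumption for illustration, not DeepSeek’s actual routing scheme, and the router scores are made up.

```python
import math


def top_k_route(router_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - m) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def moe_forward(x: float, experts, router_logits: list[float], k: int = 2) -> float:
    """Run only the routed experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


calls = []  # records which experts actually ran


def make_expert(idx: int, scale: float):
    def expert(x: float) -> float:
        calls.append(idx)
        return scale * x
    return expert


experts = [make_expert(i, float(i + 1)) for i in range(4)]  # toy experts: x, 2x, 3x, 4x
logits = [0.1, 2.0, -1.0, 1.0]  # hypothetical router scores for one token
y = moe_forward(1.0, experts, logits, k=2)
print(sorted(calls))  # [1, 3]: only 2 of the 4 experts did any work
```

Per token, compute scales with k rather than with the total number of experts, but every expert still has to sit in GPU memory, and the routing pattern shifts from token to token, which is part of what makes serving a large MoE model hard to optimize quickly.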

For R1 to run stably, the key now lies in inference-side capacity reserves and optimization. DeepSeek needs to find ways to cut inference costs, reducing both card usage and the number of tokens output per response.

At the same time, Wen Tingcan also said that DeepSeek’s own compute reserve is probably not as large as SemiAnalysis described. Its parent company, the quant fund High-Flyer, needs cards, and DeepSeek’s training team needs cards too, so not many are left to allocate to users. Given the current situation, DeepSeek may have little incentive in the short term to spend money renting capacity just to give users a better experience for free. It is more likely to wait until its first consumer-facing business model takes shape before considering leasing capacity. This also means the stalls will persist for a long time.

“They probably need two steps: 1) introduce a payment mechanism to limit free users’ model usage; 2) partner with cloud service vendors to use their GPU resources.” This stopgap, proposed by developer Chen Yunfei, reflects a broad consensus in the industry.
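The first of those two steps can be sketched as a per-tier daily quota. Everything here is hypothetical: the class name, the two tiers, and the limits are illustrative assumptions, not anything DeepSeek has announced. The second step, renting cloud GPUs, simply raises total capacity and is not modeled.

```python
from collections import defaultdict


class DailyQuota:
    """Per-user daily request caps that differ by tier (hypothetical policy)."""

    def __init__(self, free_limit: int, paid_limit: int):
        self.limits = {"free": free_limit, "paid": paid_limit}
        self.used = defaultdict(int)  # user_id -> requests so far today

    def allow(self, user_id: str, tier: str) -> bool:
        if self.used[user_id] >= self.limits[tier]:
            return False  # over the cap: caller should show a quota message instead of serving
        self.used[user_id] += 1
        return True

    def reset_day(self) -> None:
        self.used.clear()


quota = DailyQuota(free_limit=3, paid_limit=100)
print([quota.allow("alice", "free") for _ in range(4)])  # [True, True, True, False]
print([quota.allow("bob", "paid") for _ in range(4)])    # [True, True, True, True]
```

A cap like this does not add capacity; it decides in advance who gets the scarce capacity, replacing random “server busy” failures with predictable limits.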

For now, though, DeepSeek does not seem too anxious about its “server busy” problem. As a company chasing AGI, it seems reluctant to focus much on the flood of user traffic. Perhaps users will soon get used to the “server busy” screen.