Text | Silicon Valley 101

DeepSeek’s V3 model achieved performance close to that of OpenAI’s o1 reasoning model at a training cost of only US$5.576 million, setting off a chain reaction around the world. Because DeepSeek achieved this leap in AI capability without the most advanced Nvidia chips, Nvidia’s stock fell as much as 17% in a single day on January 27, wiping out about US$600 billion in market value. Some investors worry that this will reduce demand for advanced chips, but the technology community widely holds the opposite view: a high-performance, low-cost, open-source large model will bring prosperity to the entire application ecosystem and will ultimately benefit Nvidia’s long-term growth.

These two conflicting views are shaping the debate. But analyzed from a technical perspective, DeepSeek’s impact on Nvidia, on chips, and on the technology industry as a whole is not so simple. John Yue, founder and CEO of Inference.ai, for example, believes that DeepSeek has hit Nvidia’s two major barriers, NVLink and CUDA, knocking down Nvidia’s premium to some extent without breaking down the barriers themselves.

In this episode, host Hong Jun invited Chen Yubei, assistant professor in the Department of Electrical and Computer Engineering at the University of California, Davis, and co-founder of AIZip, and John Yue, founder and CEO of Inference.ai, to explain DeepSeek’s core technical innovations and their impact on the chip market in detail.

The following are excerpts from the interview.

01 DeepSeek’s core innovation: base model capabilities

Hong Jun: Can you first analyze, from a technical perspective, what is most remarkable about DeepSeek?

Chen Yubei: Judging from DeepSeek’s progress this time, although reinforcement learning plays an important role, I think the key is the capability of the base model, DeepSeek V3, itself. The data in DeepSeek’s paper confirms this: before R1-Zero underwent any reinforcement learning, the model already produced correct answers about 10% of the time, roughly 10 out of every 100 generated, which is already a very significant level.

This time DeepSeek used GRPO (Group Relative Policy Optimization), and some people suggest that other reinforcement learning methods, such as PPO (Proximal Policy Optimization), could achieve similar results.
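To make the GRPO idea concrete: as described in DeepSeek’s papers, GRPO drops PPO’s learned value network and instead scores each sampled answer against the other answers sampled for the same prompt. The sketch below shows only that group-relative advantage step, assuming binary correct/incorrect rewards and using the population standard deviation; the function name is ours, and a full trainer would also need the clipped policy-ratio objective and a KL penalty.

```python
from statistics import mean, pstdev
from typing import List

def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each answer's reward against the other answers for the same prompt.

    The group mean acts as the baseline, so no separate critic network is needed.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

# Hypothetical group of 4 sampled answers to one prompt: 1.0 = correct, 0.0 = wrong.
print([round(a, 3) for a in group_relative_advantages([1.0, 0.0, 0.0, 1.0])])

# If every answer in the group is wrong, every advantage is zero: no learning signal.
print(group_relative_advantages([0.0, 0.0, 0.0]))
```

The second case is why a base model that already succeeds some of the time matters: a group in which nothing is correct gives the optimizer nothing to push toward.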

This tells us something important: when a base model’s capabilities reach a certain level, and a suitable reward function can be found, the model can improve itself through search-like methods. So this progress sends a positive signal, but reinforcement learning plays a secondary role; the base model’s capabilities are the foundation.

Hong Jun: To summarize your view: DeepSeek is good essentially because V3’s performance is remarkable, since various methods such as MoE were used to make this base model perform better. R1 is just an upgrade on top of this base model. But do you think V3 is more important than R1-Zero?

Chen Yubei: I think each has its important points. V3 mainly focuses on improving the efficiency of the model architecture, with two important pieces of work. The first is the Mixture of Experts (MoE). In the past, load balancing across experts did not work very well: when experts were distributed across different nodes, load balancing ran into problems, so they optimized the load balancer.
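The load-balancing problem can be illustrated with a toy top-k router. DeepSeek’s V3 technical report describes an “auxiliary-loss-free” scheme in which a per-expert bias is added to the routing scores, then nudged down for overloaded experts and up for underloaded ones; the sketch below follows that idea in simplified form. The function names, the step size `gamma`, and the toy scores are our own assumptions, not DeepSeek’s code.

```python
from typing import List

def route_tokens(scores: List[List[float]], bias: List[float], k: int) -> List[List[int]]:
    """Return the top-k expert indices for each token, ranked by score + bias.

    The bias only influences which experts are selected; in the scheme sketched
    here, gating weights would still be computed from the raw scores.
    """
    assignments = []
    for token_scores in scores:
        ranked = sorted(range(len(token_scores)),
                        key=lambda e: token_scores[e] + bias[e],
                        reverse=True)
        assignments.append(ranked[:k])
    return assignments

def update_bias(assignments: List[List[int]], bias: List[float],
                gamma: float = 0.01) -> List[float]:
    """Nudge biases toward balanced load: overloaded experts down, underloaded up."""
    n_experts = len(bias)
    load = [0] * n_experts
    for chosen in assignments:
        for e in chosen:
            load[e] += 1
    target = sum(load) / n_experts
    new_bias = []
    for e, b in enumerate(bias):
        if load[e] > target:
            new_bias.append(b - gamma)
        elif load[e] < target:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias

# Toy example: 4 tokens, 3 experts, and every token's raw scores favor expert 0.
scores = [[0.9, 0.5, 0.1], [0.8, 0.6, 0.2], [0.7, 0.3, 0.4], [0.9, 0.2, 0.5]]
first = route_tokens(scores, [0.0, 0.0, 0.0], k=1)   # all tokens go to expert 0
print(first)
print(update_bias(first, [0.0, 0.0, 0.0]))           # expert 0 pushed down, others up
print(route_tokens(scores, [-0.5, 0.0, 0.0], k=1))   # a lower bias on expert 0 spreads the load
```

Repeating the update step by step lets the biases drift until load evens out, without adding an extra loss term that competes with the language-modeling objective.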

The second is in the attention layer, where they reduced the memory needed for the key-value cache (KV Cache), which again improves architectural efficiency. These two points are its core innovations, allowing it to perform quite well as a large model of more than 600B parameters. For DeepSeek R1-Zero, they first designed a simple, intuitive rule-based reward function whose basic requirement is that both the answer to a math question and the answer format are completely correct. Using DeepSeek V3, they generated 100 answers for each question and then filtered out the correct ones, increasing the proportion of correct answers.
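A rule-based reward of the kind described here needs no learned reward model: it simply checks the output. The sketch below assumes, for illustration, that completions put reasoning in `<think>` tags and the final answer in `<answer>` tags, and that answers are compared as exact strings; real math graders normalize expressions more carefully. It then keeps only the sampled answers that pass, mirroring the “generate many, keep the correct ones” step.

```python
import re
from typing import List

# Assumed completion format (illustrative): reasoning in <think>...</think>,
# followed by the final answer in <answer>...</answer>.
FORMAT = re.compile(r"^<think>.*?</think>\s*<answer>(.*?)</answer>$", re.DOTALL)

def rule_based_reward(completion: str, reference: str) -> float:
    """1.0 only if the format is right AND the final answer matches exactly."""
    match = FORMAT.match(completion.strip())
    if match is None:
        return 0.0  # malformed output earns nothing, even if the answer appears somewhere
    return 1.0 if match.group(1).strip() == reference.strip() else 0.0

def keep_correct(samples: List[str], reference: str) -> List[str]:
    """Filter sampled answers down to those the rule-based reward accepts."""
    return [s for s in samples if rule_based_reward(s, reference) == 1.0]

samples = [
    "<think>2 + 2 = 4</think><answer>4</answer>",  # right format, right answer
    "<think>maybe 5?</think><answer>5</answer>",   # right format, wrong answer
    "The answer is 4",                             # wrong format
]
print(keep_correct(samples, "4"))
```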

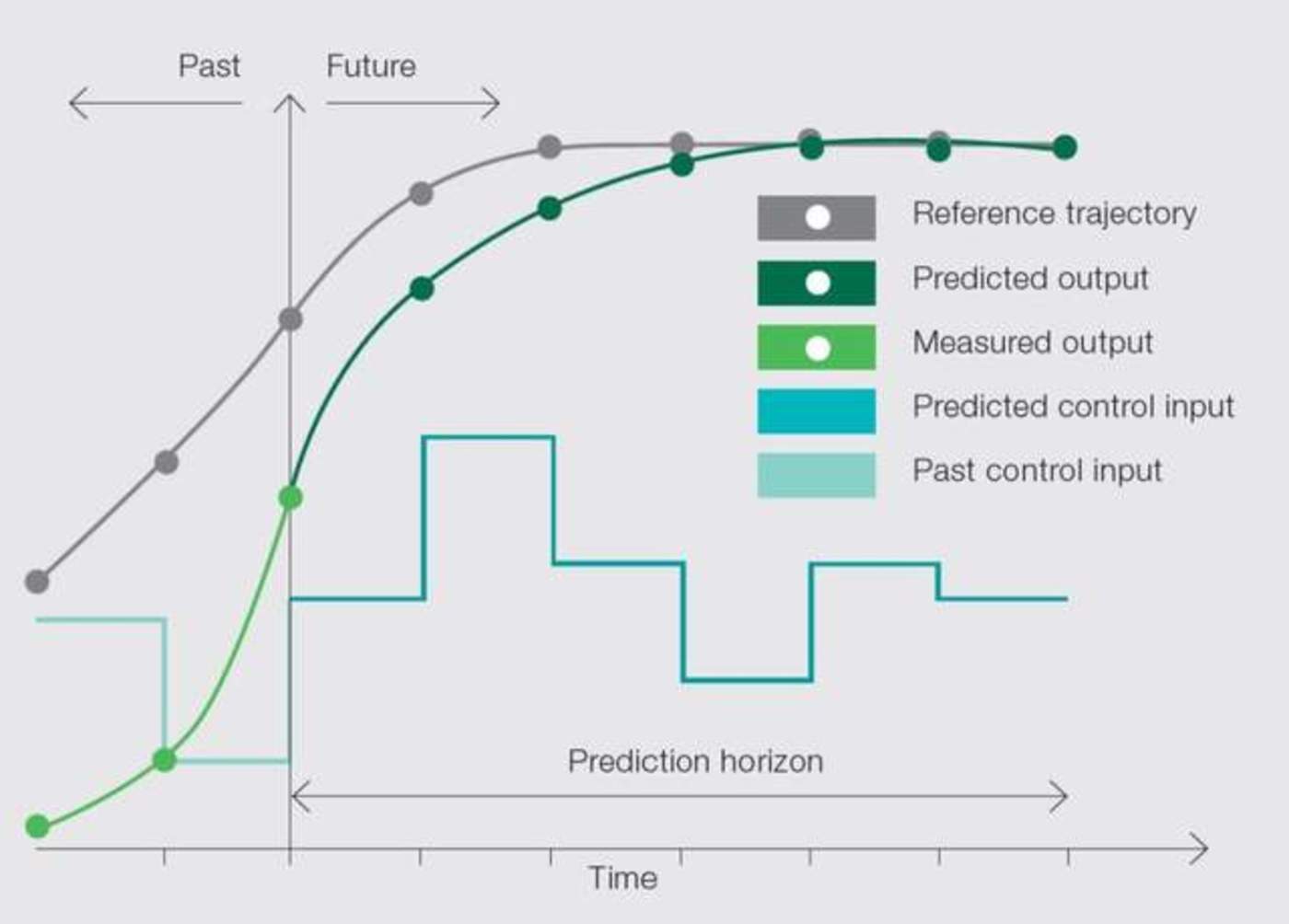

This approach actually sidesteps the hardest problem in reinforcement learning: sparse rewards. If I get none of 100, or even 10,000, questions right, I have no signal to improve from. But if the task already has a certain success rate, you can focus on reinforcing the successful parts, turning sparse rewards into relatively dense ones, with no need to model and build intermediate reward functions to bridge the gap. Building on V3’s basic capabilities, R1-Zero showed that if a model’s basic capabilities are already good, it can improve itself. In fact, this idea has much in common with Model Predictive Control and world models.
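The sparse-versus-dense point can be checked with a line of probability. Assuming each sampled answer is independently correct with probability p (a simplification), the chance that a batch of n samples contains at least one correct, rewardable answer is 1 - (1 - p)^n, and the expected number of correct answers is p·n:

```python
def prob_at_least_one_success(p: float, n: int) -> float:
    """Chance that n independent samples contain at least one correct answer."""
    return 1.0 - (1.0 - p) ** n

def expected_successes(p: float, n: int) -> float:
    """Expected number of correct answers among n samples."""
    return p * n

for p in (0.0, 0.001, 0.1):
    print(f"p={p}: P(at least one correct in 100) = {prob_at_least_one_success(p, 100):.5f}, "
          f"expected correct = {expected_successes(p, 100):.1f}")
```

With p = 0 there is never anything to reinforce, however many samples are drawn; at the roughly 10% success rate mentioned above, nearly every batch of 100 contains about 10 correct answers to learn from.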

The second point is using large models to train small models. It seems an obvious result, but this time it also had a major impact. They first trained a large model of more than 600B parameters, had it generate 100 answers per question on its own, and then gradually improved this ability through self-guided bootstrapping, raising the success rate from 10% to 70-80%. This large model can then be used to teach small models.

They ran an interesting experiment: distilling into Qwen models of various sizes, from 1.5B to over 30B, using the reasoning and planning capabilities learned by the large model to improve the small models’ performance on related problems. This is a relatively natural direction, because in any self-improvement, model predictive control, or model-based reinforcement learning, if the model itself is not good enough, search alone will not improve results much. But if you take a large model with strong search capabilities and good performance and pass its learned capabilities directly to a small model, the approach works.
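The text calls this “distillation learning”. DeepSeek’s R1 paper describes distilling by fine-tuning the smaller models on samples generated by the large model; a closely related and widely used alternative is classic soft-label knowledge distillation, where the student is trained to match the teacher’s output distribution. The sketch below shows that classic loss, not DeepSeek’s exact recipe; the logits are made up.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with temperature (higher T = softer distribution)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits: List[float], student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2 (Hinton-style KD)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [2.0, 1.0, 0.1]          # hypothetical next-token logits from the large model
close_student = [1.8, 1.1, 0.2]    # a student that already roughly agrees
far_student = [0.1, 1.0, 2.0]      # a student that prefers the opposite token
print(distillation_loss(teacher, close_student), distillation_loss(teacher, far_student))
```

Minimizing this loss pulls the student’s whole output distribution toward the teacher’s, which carries more information per example than the single correct token used in plain fine-tuning.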

Source: ABB

Hong Jun: So overall, DeepSeek used a combination-punch strategy, and every step of the evolution from V3 to R1-Zero to R1 made sound choices of direction. Have Silicon Valley players such as OpenAI, Gemini, Claude, and Llama adopted similar training methods?

Chen Yubei: I think many of these ideas have appeared in previous research.

Take the Multi-head Latent Attention mechanism used in DeepSeek V3: Meta previously published a study on a multi-token layer with a similar effect. There has also been a lot of earlier research on reasoning and planning, as well as on reward mechanisms and model-based methods.
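To see why shrinking the KV cache matters, compare the number of cached values per token. In standard multi-head attention, every layer stores a key vector and a value vector for every head; Multi-head Latent Attention instead caches one compressed latent vector per layer plus a small decoupled positional (RoPE) key. The layer count and dimensions below are assumptions chosen to resemble the publicly released DeepSeek-V3 configuration, so treat the result as an order-of-magnitude illustration.

```python
def kv_elements_per_token_mha(n_layers: int, n_heads: int, head_dim: int) -> int:
    """Standard multi-head attention: one key and one value vector per head, per layer."""
    return 2 * n_heads * head_dim * n_layers

def kv_elements_per_token_mla(n_layers: int, latent_dim: int, rope_dim: int) -> int:
    """MLA-style cache: one compressed latent plus a shared RoPE key, per layer."""
    return (latent_dim + rope_dim) * n_layers

# Assumed dimensions (resembling, not quoted from, the DeepSeek-V3 config).
mha = kv_elements_per_token_mha(n_layers=61, n_heads=128, head_dim=128)
mla = kv_elements_per_token_mla(n_layers=61, latent_dim=512, rope_dim=64)
print(mha, mla, round(mha / mla, 1))
```

Multiply either count by the bytes per element (2 for 16-bit values) and by the context length to get the cache size per sequence; a reduction of this order is what lets one GPU serve longer contexts or more concurrent requests.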

In fact, I think the name DeepSeek R1-Zero is, to some extent, reminiscent of AlphaZero.

02 Good and bad for Nvidia: a hit to the premium, but the barriers still stand

Hong Jun: John, since you work in the GPU industry, do you think DeepSeek R1 is good or bad for Nvidia? Why did Nvidia’s share price fall?

John Yue: This should be a double-edged sword, with both good and bad results.

The positive side is obvious. DeepSeek’s emergence has given people a lot of room for imagination. Many people had given up on building AI models, but now it gives everyone the confidence for more start-ups to emerge and explore application-level possibilities. More people building applications is exactly what Nvidia most hopes to see, because once the whole AI industry is revitalized, everyone needs to buy more cards. From this perspective, it is beneficial to Nvidia.

On the downside, Nvidia’s premium did take a hit. Many people initially believed its barriers had been knocked down, which sent the stock price plummeting. But I feel the actual situation is not that serious.

Hong Jun: What are those barriers?

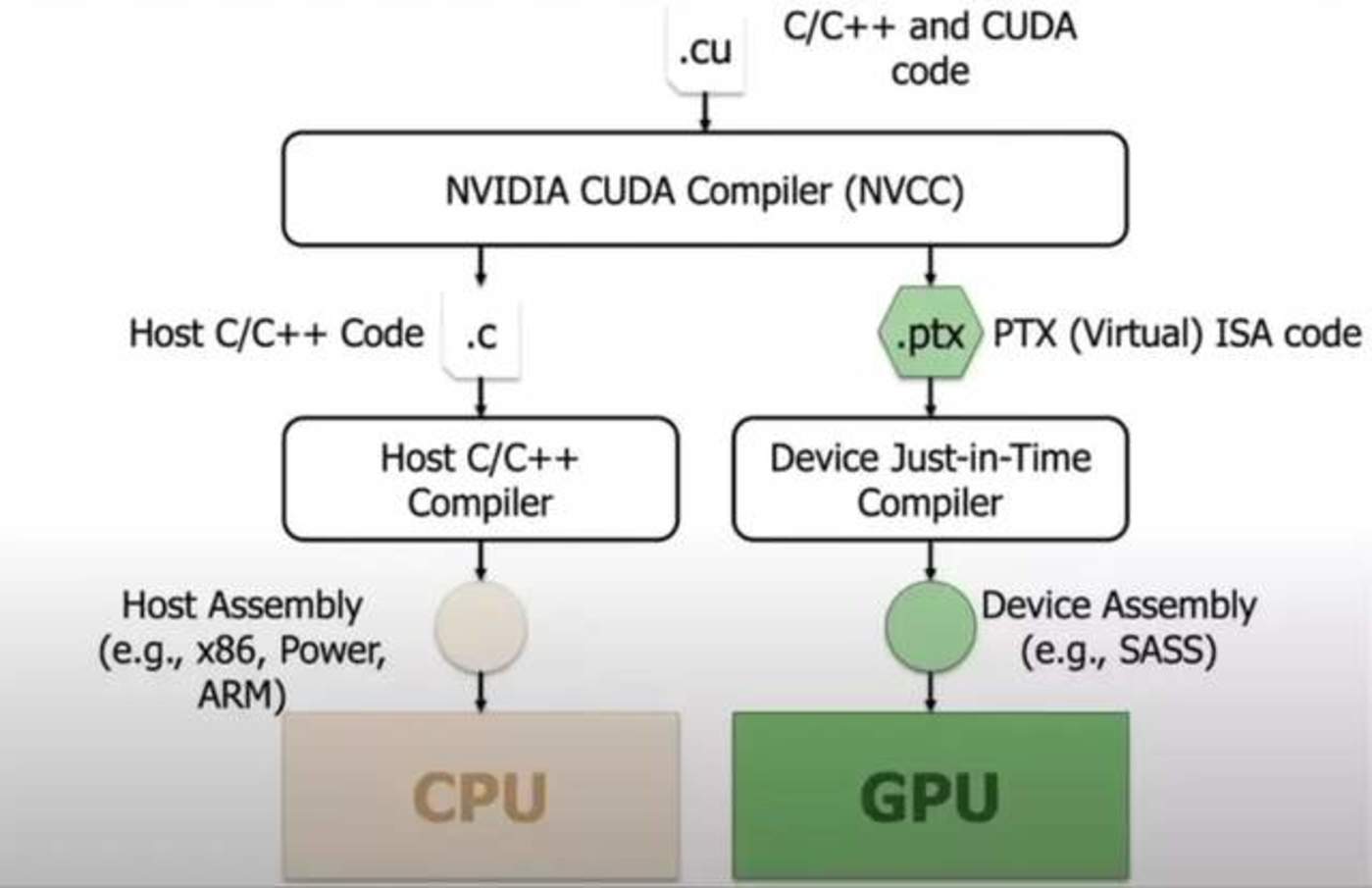

John Yue: Nvidia has two biggest barriers: one is InfiniBand (interconnect technology); the other is CUDA (Compute Unified Device Architecture). Its complete system for calling GPUs puts it on a different level from other chip companies such as AMD. While other companies compete on single-card performance, Nvidia competes on chip interconnect, software, and ecosystem maintenance. DeepSeek did dent the premium on these two barriers slightly, but it did not completely break them.

Specifically, the impact on Nvidia’s premium is reflected in:

- MoE optimization actually weakens the importance of Nvidia’s interconnect to some extent. Now I can put different experts on different compute cards, which makes the interconnect between cards less critical. Moreover, experts that are not needed for the moment can sit idle, which has indeed had some impact on demand for Nvidia’s interconnect technology.

- On the CUDA side, this tells everyone that a new possibility exists. In the past, everyone might have thought CUDA was unavoidable, but the DeepSeek team has now shown that it is indeed possible to bypass CUDA and optimize directly with PTX. This does not mean every team will have that capability, but at least it shows a feasible path exists. And that possibility means that in the future I might not need to buy Nvidia graphics cards, or not the most advanced ones, or I could run the model on smaller Nvidia graphics cards.

Hong Jun: What does it mean to bypass CUDA? Does it really bypass CUDA? What I heard was that it didn’t use CUDA’s higher-level APIs but still used lower-level ones.

John Yue: Yes, I misspoke. To be precise, they didn’t completely bypass the CUDA ecosystem. Instead of using the high-level APIs, they called a lower level directly: PTX (Parallel Thread Execution), an intermediate instruction set that sits one level above the GPU’s machine code, and optimized directly at that level. But this is also a big project, and not something every small company can do.

Source: Medium

Hong Jun: If DeepSeek has this capability, can other companies gain similar capabilities? Suppose we can’t buy Nvidia GPUs now and switch to AMD GPUs. You mentioned that Nvidia’s two core barriers, NVLink and CUDA, have been hit to some extent. Is this a good thing for companies like AMD?

John Yue: In the short term it is good for AMD, since AMD recently announced that it is porting DeepSeek. But in the long run, Nvidia may still have the advantage. After all, this is just DeepSeek. The great thing about CUDA is that it is a general-purpose GPU programming system that any software can use. DeepSeek’s approach only works for DeepSeek itself; if a new model appears, it has to be adapted all over again.

It comes down to a bet on whether DeepSeek can truly become the industry standard, the next OpenAI on which all startups build. If so, that is really good for AMD, because AMD has already completed the DeepSeek port. But what if it isn’t DeepSeek? DeepSeek’s advantages lie mainly in its improvements to methods such as reinforcement learning and GRPO. If more models later appear that use other methods, they will have to be re-adapted, which is much more troublesome than simply using CUDA.

Hong Jun: So your core view is that it has shaken Nvidia’s two core barriers, NVLink and CUDA. What about GPU demand?

John Yue: I don’t feel these two barriers have been shaken. For now, Nvidia’s two barriers are still very strong. It only affects the premium: Nvidia may not be able to charge such high prices, but that doesn’t mean competing products can suddenly break in.

Hong Jun: Is that a very long process?

John Yue: Competing products still haven’t produced anything that matches these two barriers. CUDA can be bypassed for individual models, but no one has yet come up with a universal alternative. So nothing has actually shaken Nvidia’s barriers. It’s like a wall everyone used to think was unclimbable: DeepSeek has now jumped over it, but can others follow? It mainly provides moral encouragement.

Hong Jun: Will demand for GPUs decrease? Since DeepSeek’s training cost was so low this time, does the stock decline partly reflect the view that better models can be trained with fewer GPUs?

John Yue: If you only look at training, that is indeed the case. But DeepSeek’s real significance is that it rekindled the enthusiasm of AI practitioners. Seen this way, more companies will enter the market and buy more chips. So this may mean a lower premium but higher sales volume. Whether Nvidia’s market value ends up rising or falling depends on the ratio between the two.
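The “lower premium but higher sales” trade-off is simple arithmetic if revenue is treated as unit price times units sold. That framing is our simplification: it ignores costs, margins, and product mix, and the 20% price cut below is purely illustrative, not a forecast.

```python
def revenue_multiplier(price_cut: float, volume_growth: float) -> float:
    """New revenue divided by old revenue after a price cut and a change in units sold."""
    return (1.0 - price_cut) * (1.0 + volume_growth)

def breakeven_volume_growth(price_cut: float) -> float:
    """Growth in units needed so that revenue stays flat after the price cut."""
    if not 0.0 <= price_cut < 1.0:
        raise ValueError("price_cut must be in [0, 1)")
    return 1.0 / (1.0 - price_cut) - 1.0

# Illustrative only: if the premium shrinks prices by 20%, unit sales must grow 25% to break even.
print(breakeven_volume_growth(0.20))
print(revenue_multiplier(0.20, 0.50))   # 20% cheaper, 50% more units: revenue up about 20%
```

The break-even growth rises faster than the price cut itself, which is why the answer hinges on how much new demand cheaper AI actually unlocks.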

Hong Jun: What do you think?

John Yue: That’s hard to say; the key still depends on applications. What kinds of applications can be built in 2025? If the main obstacle to application development used to be GPU prices, then as prices fall to a tenth or even lower, that obstacle disappears and the market value should rise. But if the main obstacle lies elsewhere, it’s hard to say.

Hong Jun: In fact, DeepSeek has lowered the barrier to entry, so AI applications should multiply. From the perspective of GPU demand, that is generally favorable to Nvidia.

John Yue: Right. These application developers won’t form their own teams to repeat DeepSeek’s work, such as bypassing CUDA to call PTX. Small companies need out-of-the-box solutions, so this is good for Nvidia. What Nvidia most hopes to see is more AI companies emerging.

Hong Jun: As more AI companies emerge, do they need GPUs to train models, or do they need more for inference?

John Yue: Personally, I think the inference chip market will also belong to Nvidia. I don’t think these smaller companies have any long-term advantage; everyone has advantages in the short term. In the long run, I feel inference will be Nvidia’s, and training will be Nvidia’s too.

Hong Jun: Why will inference also be Nvidia’s?

John Yue: Because CUDA is still there, and Nvidia is still the industry leader. Neither of the two barriers I just mentioned has been shaken.

Today’s ASIC (application-specific integrated circuit) companies face two main problems: insufficient software support and a lack of hardware barriers. On the hardware side, I don’t see any strong barriers; everyone’s products are largely homogeneous.

Software is another big issue. These ASIC companies are not good enough in software maintenance, and even PTX-level maintenance is not perfect. These two factors have led to Nvidia still occupying a leading position.

Hong Jun: Do inference chips have equally high software requirements? Across GPUs and training chips, Nvidia has an absolute monopoly, because you can’t do without its system, or it is hard to bypass. But for inference, is it easier to bypass?

John Yue: Inference is also very demanding on software; it still requires calling the GPU’s low-level instructions. Groq, for instance, is far behind Nvidia in software. Look at how its business model keeps getting heavier: from just making chips at first, to building its own data centers, to offering its own cloud services. That amounts to building a complete vertical industrial chain, yet its funding is far behind Nvidia’s. How could it do better?

Hong Jun: Are there any chip companies on the market worth watching now?

John Yue: I think AMD has a chance, but other ASIC companies may fare worse. Even AMD still has a long way to go to catch up with Nvidia.

Personally, I feel that if you want to innovate in chips, you may need to focus on the chip’s software maintenance rather than on changing the hardware. For example, adjusting the ratio between memory (DDR, Double Data Rate), Tensor Cores, and CUDA Cores (general-purpose compute cores) means little. Doing so amounts to acting as Nvidia’s scout, testing whether there is a market for a product with that ratio, without building any barriers of your own.

However, there is still a lot of room for optimization in the software sector, such as developing a software system that is better than CUDA. There may be a great opportunity, but it will not be an easy task.

03 Open Source Ecosystem: Reducing entry barriers for AI applications

Hong Jun: What impact do you think DeepSeek’s choice to open-source will have on the industry ecosystem? Recently, on Reddit in the US, many people have started deploying DeepSeek’s models. Having chosen open source, how does that help DeepSeek make its models better?

John Yue: We have recently deployed some DeepSeek models on our platform too. I think its open-sourcing is very good for the whole AI industry. Since the second half of last year, everyone has felt a bit lost, because AI applications didn’t seem to take off. One of the main reasons is that many people believed OpenAI could break down 80 to 90% of all applications’ barriers, and everyone was quite scared: if I build something, OpenAI releases o4 next year and it covers everything I’ve built.

And if I build on OpenAI, it will release a new model that completely absorbs my application; I can’t compete with it on price or on features. As a result, many companies didn’t dare to build, and VCs didn’t dare to invest.

DeepSeek’s open-sourcing benefits the whole industry this time: I am now building on a very good open-source model, and with a degree of continuity, I have much more confidence to build more applications.

If DeepSeek proves able to surpass OpenAI, that will be even better for the whole industry. It is as if a dragon that loomed over everyone were gone, so everyone can develop more freely.

It follows the same logic as Llama: the more people use it, the more feedback it gets, and the better its model becomes. The same holds for DeepSeek. If more application developers build on it, it will certainly collect data much faster than other models.

Hong Jun: We now see an open-source model whose overall performance is basically on par with OpenAI’s o1. So you can expect that after OpenAI releases o3-mini, the open-source models may be upgraded as well, and a next version will surpass these closed-source models. I’m wondering: when an open-source model performs well enough, what is the point of OpenAI’s closed-source models? Everyone can simply take the best open-source model as a base and use it.

John Yue: DeepSeek’s significance is that it has brought prices down a lot, and it is open source.

It’s not that it is better than OpenAI. Closed-source models will likely keep leading. The significance of open source may be that, like Android, anyone can use it and it is very cheap. That lowers the barrier to entering the industry, which is what really makes the industry prosper.

Closed-source models may always be the leaders. If a closed-source model is not as good as open source, it may have no reason to exist; but closed-source companies should have management advantages that let them surpass open-source models.

Hong Jun: So it now seems that there are indeed a number of closed-source products that are not as good as open source.

John Yue: Then they’d better pray. If a closed-source model is worse than open source, I don’t know what that company is doing; it might as well be free.

Chen Yubei: I think the open-source ecosystem is very important. Besides my lab work, I am also involved in a company called AIZip, which builds many full-stack AI applications. One thing you find is that many open-source models can’t be used directly for product-level work. But having such an open-source model can greatly improve your ability to produce a product-level model and greatly improve your efficiency.

So whether it’s DeepSeek or Llama, I think this open-source ecosystem is crucial to the whole community, because it lowers the barrier to entry for all AI applications. For everyone working in AI, seeing more AI applications reach more people is very good news.

So I think what Meta is doing is very important. Llama has consistently stuck with open source so that all AI developers can build their own applications. Although Llama doesn’t build the application for you, it gives you a foundation. As the name suggests, a foundation is a floor, right? On that floor you can build whatever application you want, but it has already done 90% of the work for you.

I think a better foundation is very important to the whole ecosystem. If OpenAI works hard to optimize some of its capabilities, it will still keep such advantages. But we don’t want OpenAI to be the only player in the market; that could be bad news for everyone.

04 The drop in API prices and the imagination of small models

Hong Jun: How did DeepSeek bring its API prices down? I looked at R1’s official website: per million input tokens, a cache hit costs 1 yuan and a cache miss 4 yuan, and per million output tokens it costs 16 yuan. I calculated o1’s overall pricing and found that almost every tier is 26 to 27 times higher. How did it cut the cost of the API?
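The per-tier comparison is easy to reproduce for a concrete workload. The sketch hard-codes only the R1 prices quoted in the question (yuan per million tokens); the workload mix and the comparison table are hypothetical inputs, and a real comparison with o1 would need o1’s current price list converted at an exchange rate of your choosing.

```python
# DeepSeek R1 API prices as quoted above, in yuan per million tokens.
R1_PRICES_YUAN = {"input_cache_hit": 1.0, "input_cache_miss": 4.0, "output": 16.0}

def api_cost(prices: dict, hit_tokens: int, miss_tokens: int, output_tokens: int) -> float:
    """Total cost of a workload, given per-million-token prices for each tier."""
    return (prices["input_cache_hit"] * hit_tokens
            + prices["input_cache_miss"] * miss_tokens
            + prices["output"] * output_tokens) / 1_000_000

def cost_ratio(prices_a: dict, prices_b: dict, **workload) -> float:
    """How many times more the same workload costs under price table A than under B."""
    return api_cost(prices_a, **workload) / api_cost(prices_b, **workload)

# Hypothetical workload: 2M input tokens (half served from cache) and 0.5M output tokens.
workload = {"hit_tokens": 1_000_000, "miss_tokens": 1_000_000, "output_tokens": 500_000}
print(api_cost(R1_PRICES_YUAN, **workload))  # cost in yuan

# Hypothetical competitor whose every tier is 26x more expensive.
other = {tier: 26 * price for tier, price in R1_PRICES_YUAN.items()}
print(cost_ratio(other, R1_PRICES_YUAN, **workload))
```

If every tier differs by the same factor, the blended ratio equals that factor for any workload; when tiers differ by different factors, the blended ratio depends on the input/output mix and the cache-hit rate.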

John Yue: It did a complete set of optimizations from top to bottom: from how it calls PTX on the underlying GPU, to the MoE architecture, to load balancing.

Perhaps the most important point is that it lowers the chip requirements. Where you previously had to run on H100s or A100s, you can now use somewhat lower-end hardware, even Groq, or the cut-down H800 cards sold in China. That significantly reduces the cost per token.

With further optimizations, such as slicing the GPU, costs can drop much more. Moreover, OpenAI may have brought its internal costs down long ago and simply chosen not to lower its retail prices; we can’t be sure.

I think it comes down mainly to these two: the architecture, and the chips, which can be downgraded.

Hong Jun: Will chip downgrading become common in the industry?

John Yue: I don’t think so, because Nvidia has stopped producing its older chips, and only a limited number remain on the market. For example, the model can run on the V100, but the V100 was discontinued long ago. Moreover, depreciation has to be accounted for every year, and V100s may be impossible to find on the market in two years. Nvidia only produces its latest chips.

Hong Jun: Can costs still be kept low?

John Yue: If you optimize on new chips, for example with our GPU slicing scheme, costs can come down, because the models have become smaller. We recently ran its 7B model and it needed only about 20GB, so we can split one H100 into three parts to run DeepSeek, which directly cuts the cost to one-third.
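The arithmetic behind the ~20GB figure and the one-third cost can be sketched as follows. The assumptions are ours: 16-bit weights (2 bytes per parameter), with the gap between weight memory and the ~20GB quoted attributed to the KV cache, activations, and runtime overhead; GPU cost is expressed as 1.0 so the result is a fraction.

```python
def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Memory needed for the model weights alone, in decimal gigabytes."""
    return n_params * bytes_per_param / 1e9

def cost_share_per_instance(gpu_cost: float, slices: int) -> float:
    """Cost attributed to each model instance when one GPU is split into equal slices."""
    if slices < 1:
        raise ValueError("slices must be at least 1")
    return gpu_cost / slices

# 7B parameters at 16-bit (2-byte) precision.
print(weight_memory_gb(7e9, 2))          # weights only; KV cache and runtime add more
print(cost_share_per_instance(1.0, 3))   # one GPU split three ways: one third of the cost each
```

Halving the bytes per parameter (for example, 8-bit weights) halves the weight memory, which is one reason smaller or quantized models pack onto shared GPUs so easily.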

I think more virtualized GPUs may be used to reduce costs in the future. Relying solely on old cards and gaming cards is unrealistic, for several reasons. First, Nvidia has a blacklist mechanism that does not allow these models to be officially deployed on gaming cards. Second, beyond old cards being out of production, they also bring many maintenance problems. So I don’t think chip downgrading will become mainstream.

Hong Jun: So you provide customers with chip optimizations that help them save costs. Your customer base must have grown sharply recently. Is that thanks to DeepSeek, or have you been doing this all along?

John Yue: We’ve been doing this since last year, betting that there would be more small models in the future. As just mentioned, DeepSeek’s arrival set off a trend of distilling more small models. If you want to run more small models, you need chips of different sizes, and it’s hard to dedicate a whole physical chip every time.

Hong Jun: DeepSeek has reduced overall API costs, and you just analyzed its research methods. Do you think these methods may be applied in more scenarios, such as your GPU slicing and customer models? Will they lead to GPU cost savings across the industry?

Source: DeepSeek Platform

John Yue: It should be possible. DeepSeek has proven to the industry that better reinforcement learning methods now exist, and I think many people will adopt the same approach. No one dared to try bypassing CUDA before; they proved that a few PhD graduates could do it quickly. Many model companies may follow suit, and if everyone does this, costs will definitely drop.

Hong Jun: So as I understand it, training costs have dropped and inference costs have also fallen sharply. When you help customers deploy GPUs like this, what do they mainly need?

John Yue: Simplicity, fast deployment, and a low price. We can solve the deployment cost problem because there is a lot of waste. For example, an A100 or H100 has 80GB, but if you want to distill some small models, or use existing models such as those from Snowflake and Databricks, you may only need 10GB, and some need even less. Deploying 10GB on an 80GB GPU wastes most of the GPU, yet you still pay for the whole card.

In addition, inference workloads are elastic: sometimes there are more customers, sometimes fewer. If every card has wasted space, then when you scale out, that waste is replicated on every card. What we do now is virtualize the GPUs so nothing is wasted, which solves many GPU deployment cost problems in a fairly straightforward way.
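The waste described above (a 10GB model pinned to an 80GB card) is essentially a bin-packing problem. As an illustration only, and not Inference.ai’s actual scheduler, the classic first-fit-decreasing heuristic below places models onto shared GPUs. It treats memory as the only constraint and ignores compute contention and isolation, which real GPU virtualization also has to handle; the model sizes are made up.

```python
from typing import List

def utilization(model_gb: float, gpu_capacity_gb: float = 80) -> float:
    """Fraction of a dedicated GPU's memory that a single model actually uses."""
    return model_gb / gpu_capacity_gb

def first_fit_decreasing(demands_gb: List[int], gpu_capacity_gb: int = 80) -> List[List[int]]:
    """Pack model memory demands onto as few GPUs as possible (a heuristic, not optimal)."""
    gpus: List[List[int]] = []   # models placed on each GPU
    free: List[int] = []         # remaining memory on each GPU
    for d in sorted(demands_gb, reverse=True):
        if d > gpu_capacity_gb:
            raise ValueError(f"a {d} GB model does not fit on one GPU")
        for i, room in enumerate(free):
            if d <= room:        # first GPU with enough room wins
                gpus[i].append(d)
                free[i] -= d
                break
        else:                    # no existing GPU fits: open a new one
            gpus.append([d])
            free.append(gpu_capacity_gb - d)
    return gpus

print(utilization(10))  # one 10 GB model on a dedicated 80 GB card
demands = [10, 10, 20, 10, 40, 10, 20]
packed = first_fit_decreasing(demands)
print(len(demands), "models on", len(packed), "GPUs:", packed)
```

Dedicating a card per model would need seven GPUs here; packing needs two. Sorting largest-first is what keeps the big models from being stranded once the small ones have fragmented the free space.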

Chen Yubei: There is another interesting direction in this field. Small models have progressed very rapidly in the past 6 to 8 months, which may bring about a change. Previously, 99% of the world’s computing power was invisible to everyone; people didn’t realize that ARM chips or Qualcomm chips had AI capabilities. In the future, with large numbers of small language models, vision-language models (VLMs), audio intelligence, and other capabilities, AI may increasingly appear on platforms where it was never used before. Tesla, for example, already uses a lot of it in its cars.

More and more devices, such as phones, earbuds, and smart glasses (now a popular category that many companies are building), will be equipped with on-device AI. This brings huge opportunities to reduce costs and make AI more widely available.

Hong Jun: Are small models actually useful?

Chen Yubei: Small models have many basic applications across many fields. Give a small model enough training and, for those tasks, it will eventually perform similarly to a large model.

Hong Jun: Give me a specific application scenario.

Chen Yubei: Take this microphone. Its noise reduction can be implemented with a very small neural network that fits inside the microphone. Even if the model were made 10 or 100 times larger, the performance would not differ much.

Such functions will be integrated more and more. For example, small language models can run on smart watches to answer basic questions, call APIs, and handle basic work, while more complex tasks are handed off to the cloud, forming a layered intelligent system. Today a smart watch can already do very complex reasoning. The Qualcomm chip in a mobile phone can deliver 50 TOPS (trillion operations per second) of inference compute, which is a lot of computing power and not that far from the A100. Many small models can do what large models already do, which will greatly help reduce costs and spread AI.

Hong Jun: Do these small models run locally or over the network?

Chen Yubei: Locally.

Hong Jun: So in the future there may be all kinds of small models throughout the world, and when a small model isn’t enough, it will call on a large model, greatly reducing this part of the inference cost?

Chen Yubei: Yes, I think future AI infrastructure should be hierarchical. The smallest models can go into terminal devices and do basic computation inside sensors. There will be more AI functions at the edge, and then in the cloud, forming a complete device-edge-cloud system.
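The device-edge-cloud hierarchy can be sketched as an escalation policy. Everything here is a hypothetical illustration: each tier is a function returning an answer and a confidence score, and a query is passed up to the next tier only when the cheaper tier is not confident enough. Real systems may route on task type, latency, or privacy instead of a single confidence threshold.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Tier:
    name: str                                   # e.g. "device", "edge", "cloud"
    model: Callable[[str], Tuple[str, float]]   # hypothetical: returns (answer, confidence)

def answer_hierarchically(query: str, tiers: List[Tier],
                          threshold: float = 0.8) -> Tuple[str, str]:
    """Try the cheapest tier first and escalate while confidence is below threshold.

    The last tier (the cloud) always answers.
    """
    for tier in tiers[:-1]:
        answer, confidence = tier.model(query)
        if confidence >= threshold:
            return tier.name, answer
    answer, _ = tiers[-1].model(query)
    return tiers[-1].name, answer

# Toy stand-ins for real models.
def device_model(q: str) -> Tuple[str, float]:
    return ("lights on", 0.95) if q == "turn on the lights" else ("", 0.1)

def edge_model(q: str) -> Tuple[str, float]:
    return ("3 people detected", 0.9) if q == "count people" else ("", 0.3)

def cloud_model(q: str) -> Tuple[str, float]:
    return (f"detailed answer to: {q}", 0.99)

tiers = [Tier("device", device_model), Tier("edge", edge_model), Tier("cloud", cloud_model)]
for q in ("turn on the lights", "count people", "plan a three-day trip"):
    print(answer_hierarchically(q, tiers))
```

Every query the device or edge tier answers confidently is one the cloud never pays for, which is where the inference savings in this layered design come from.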

I mentioned a figure before: if you do a simple calculation and add up the computing power of all the terminal and edge devices in the world, it comes to 100 times that of the GPUs in global HPC (High Performance Computing). That is a staggering figure. Shipments of high-performance GPUs may be on the order of a million units, but phones and edge devices may reach tens of billions, and sensors may be two orders of magnitude more. At that volume, the aggregate computing power is enormous.

Hong Jun: Are there enough chips? Qualcomm chips, for example?

Chen Yubei: Another point is that 90% to 99% of the world’s data actually sits on devices and at the edge. But in most cases today it is use-it-or-lose-it: you can’t send all the video from your camera to the cloud, for example. If devices and the edge have AI capabilities, they can filter out the most valuable data to upload, which is hugely valuable. At present, this data is largely untapped.

In the future, as basic on-device AI capabilities grow, these basic AI models can in turn serve as a data compression tool for large models.
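Using on-device models as a filter can be sketched as choosing what to upload under a bandwidth budget. The scoring function stands in for a hypothetical small on-device model that rates how valuable each clip is; the clips, sizes, and scores are invented. Greedy selection by score is a simple heuristic, not an optimal knapsack solution.

```python
from typing import Callable, List, Tuple

def select_for_upload(items: List[Tuple[str, int]],
                      score: Callable[[str], float],
                      budget_bytes: int) -> List[str]:
    """Greedily keep the highest-scoring items that still fit in the upload budget.

    items: (item_id, size_in_bytes) pairs captured on the device.
    score: hypothetical on-device model estimating how valuable each item is.
    """
    chosen, used = [], 0
    for item_id, size in sorted(items, key=lambda it: score(it[0]), reverse=True):
        if used + size <= budget_bytes:
            chosen.append(item_id)
            used += size
    return chosen

# Toy camera clips and scores (made up for illustration).
clips = [("clip_a", 400), ("clip_b", 300), ("clip_c", 500), ("clip_d", 200)]
scores = {"clip_a": 0.1, "clip_b": 0.9, "clip_c": 0.7, "clip_d": 0.4}
print(select_for_upload(clips, scores.get, budget_bytes=800))
```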

Hong Jun: Are you deploying DeepSeek’s small models now, or Llama’s?

Chen Yubei: Actually, not necessarily either. The ecosystem includes Qwen, Llama, and DeepSeek, plus many self-developed models. Across the whole ecosystem, more and more such small models are emerging, and their capabilities are improving rapidly.

Hong Jun: What are the key criteria for selecting a model?

Chen Yubei: The first is efficiency: the model must be fast and small.

But quality matters more: no one will pay for a fast, small model that doesn’t work well. The model must be competent at its task. This is what I call AI robustness, and it is very important. A microphone’s noise reduction, for example, must preserve sound quality. If the processed sound is poor, no one will use it; people will still choose post-processing software instead.

Hong Jun: So on the application side, people aren’t looking for the most cutting-edge model, but for the model best suited to their needs, and then choosing the lowest-cost option.
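The selection rule described here, quality first and then cost, fits in a few lines. The candidate names, scores, and costs below are invented; in practice the quality score would come from your own task-specific evaluation, such as the robustness checks described above.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Candidate:
    name: str
    quality: float        # task-specific score, e.g. accuracy on your own eval set
    cost_per_call: float  # any consistent unit (latency, energy, money)

def pick_model(candidates: List[Candidate], min_quality: float) -> Optional[Candidate]:
    """Cheapest candidate that clears the quality bar, or None if none does."""
    good_enough = [c for c in candidates if c.quality >= min_quality]
    if not good_enough:
        return None
    return min(good_enough, key=lambda c: c.cost_per_call)

candidates = [
    Candidate("tiny", quality=0.62, cost_per_call=0.1),
    Candidate("small", quality=0.81, cost_per_call=0.3),
    Candidate("large", quality=0.90, cost_per_call=2.0),
]
choice = pick_model(candidates, min_quality=0.8)
print(choice.name if choice else "no model is good enough")
```

Treating quality as a hard constraint rather than a weighted term reflects the point above: a cheap model that fails the task has no value, however fast it is.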

05 Questions about DeepSeek: data and continued innovation

Hong Jun: Now that a lot of information about DeepSeek has been made public, is there anything about the company you are still very curious about?

Chen Yubei: Their published paper did not disclose the specific data composition in detail, and many training details were only described at a high level. Of course, I understand that not everything should be made public, and demanding that would be unreasonable. But providing more details would make it easier for others to replicate the work. This is common across frontier research labs: they are vague when it comes to data.

Hong Jun: Even OpenAI doesn’t dare to write about it; no large-model company dares to answer questions about data.

Chen Yubei: They don’t even write how the data is balanced, over what time span, or the specific processing pipeline. I understand not disclosing the exact data composition, but they could at least describe how the data is organized. Yet often no one writes these details, and I think they are the most critical part. The other methods are easy to come up with, such as using search for reasoning and planning, using bootstrapping to improve performance once the model is good enough, or distilling a large model’s results directly into a small model.

What is really hard to come up with are two things: the specific composition of the data and low-level architectural innovation. I think these are the most critical parts.

John Yue: I care more about whether DeepSeek can keep surprising everyone and keep challenging OpenAI. If it keeps surprising us and everyone eventually builds applications on DeepSeek, it will indeed bring major changes to the entire chip and infrastructure landscape.

As I said, DeepSeek bypassed CUDA to adapt many things. If it can hold this position, other chip makers may also get opportunities, which would pose some challenges to Nvidia’s ecosystem, and the premium would certainly decline. But if the next model, say Llama 4, comes out much better than DeepSeek, things may go back to square one.