Article source: Zhidongxi

Image source: Generated by AI

The center of attention in large models right now is undoubtedly DeepSeek, which has taken the world by storm. But DeepSeek is essentially a pure language model, and the competition among multimodal large models is just as fierce.

Zhidongxi reported on February 18 that Kunlun Wanwei today open-sourced SkyReels-V1, China’s first video generation model for AI short drama creation, and SkyReels-A1, China’s first SOTA-level controllable expression and motion algorithm built on a video foundation model.

Competition among video generation models has reached new heights.

SkyReels-V1 is trained on top of the HunYuan model and is a Human-Centric Video Foundation Model. It supports text-to-video and image-to-video generation, and its performance is comparable to closed-source models such as Kuaishou’s Kling and MiniMax’s Hailuo AI. Micro-expression rendering, professional camera movement, and Hollywood-level composition can all be produced with one click.

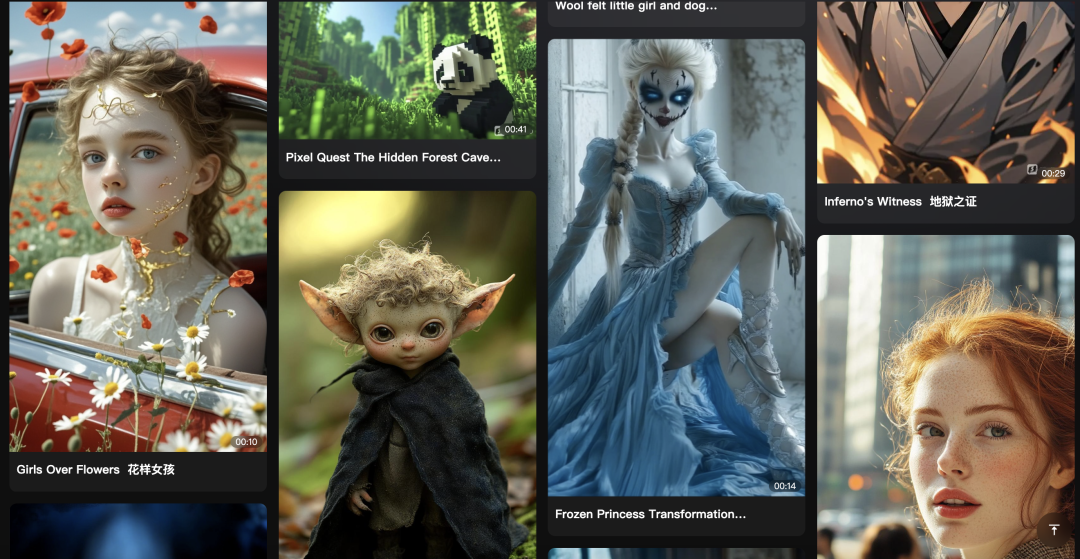

Both models can currently be tried on SkyReels, Kunlun Wanwei’s AI short drama platform. The bottom of the platform’s homepage already showcases many examples of complex generation tasks, including blockbuster-style videos that users created in just a few minutes.

▲SkyReels platform homepage

AI short dramas are currently one of the hottest applications of video generation. SkyReels covers the whole workflow, from script generation and storyboarding to BGM and character dialogue. “One person making a feel-good drama” has become a reality, lowering the barrier to short drama creation and accelerating the adoption of video generation models.

Against this backdrop, how well does the SkyReels-V1 video model actually perform? Can everyone really become a short drama creator? With these questions in mind, Zhidongxi got early access to Kunlun Wanwei’s newly upgraded AI short drama platform, SkyReels.

Open source address:

https://github.com/SkyworkAI/SkyReels-V1

https://github.com/SkyworkAI/SkyReels-A1

Technical report address:

https://skyworkai.github.io/skyreels-a1.github.io/report.pdf

01.

Generate a short drama with one click! Facial expressions hold up in close-ups

Camera work and composition are Hollywood-level

The box office of “Nezha: The Devil Boy Coming into the World”, a hit during the Spring Festival, has passed 10 billion yuan. Many users have started making fan videos built around the main characters of Nezha 2.

SkyReels’ image-to-video generation handles this without trouble. After I uploaded a still of Nezha riding the “Flying Pig” as a car (the still appears at the top of the video) and entered a prompt, details such as Nezha’s hair blowing in the wind as he drives were fully rendered. The “wind-fire wheels” on the Flying Pig’s feet also appeared, and the characters blended well with the background.

When video generation models first appeared, users often faced slow generation, results that strayed far from their prompts, and characters with stiff, unnatural expressions. As the technology has matured, products such as Kunlun Wanwei’s SkyReels, Kuaishou’s Kling, and MiniMax’s Hailuo AI have made video generation models far more usable.

First, for videos and short dramas, characters’ micro-expressions are a major challenge. Micro-expressions are typically brief and subtle, but they are crucial for conveying a character’s true emotions.

In the hospital scene generated by SkyReels, the male protagonist on the hospital bed talks with the female protagonist beside him, and both of their expressions change very naturally. The female protagonist’s expression does not break down at all, even when she faces the camera directly. As her voice chokes up, her chin trembles slightly when she purses her lips. When the male protagonist speaks from the hospital bed, the wrinkles and skin on his face and neck move accordingly. The handling of these details is key to accurately conveying what the characters are feeling in the moment.

The second point is using the language of the camera to strengthen the storytelling. Within a video, different camera positions are often used to emphasize key points, such as a wide establishing shot to show where events take place, or moving the camera between characters to reflect a change in the subject of the shot.

For example, the following video opens on a church scene to establish the male protagonist’s surroundings, then explains his identity through a scene change and the shifting characters around him. The female protagonist’s introduction works the same way, advancing the plot through the environment and character close-ups. During the dialogue between the two, the camera follows the speaker and gives each protagonist a close-up.

The third point covers character positioning, composition, and switching the subject of the shot. In live-action shooting, actors and directors need to coordinate closely, and crews must build sets or wait for the right weather to get the best result. AI can now do this anytime, anywhere.

In the video below, the camera transitions are silky smooth. At the beginning, the protagonist’s voice is intercut with memories such as a family photo, a little girl lying down, and wounded people running, which enrich the details of the story. As the camera slowly moves, the protagonist appears, and a visual effect then cuts from the character to the final revenge scene.

The video generation model produces these realistic, richly detailed story elements on its own from its understanding of the text, ultimately forming a complete short drama.

SkyReels’ output has clearly improved in character expressions, shot transitions, and composition.

02.

Key breakthroughs in the video generation model:

Accurate generation, faster speed, and controllable results

The core behind the upgrade of the SkyReels platform is Kunlun Wanwei’s latest SkyReels-V1 video model.

Video generation has evolved from simple, slideshow-like clips that often went wrong to fluid videos with smooth camera moves and angle changes. For AI short dramas, users care most about characters’ micro-expressions, because only these can convey the plot accurately. This raises the bar for micro-expressions and for accurate, controllable generation. How to improve output quality to the point where users can use the results right away has become a hard problem for everyone building video generation models.

When generating videos and short dramas, the most critical requirements are accurate generation, a high degree of control, and fast enough inference. These are also the core strengths of the technology behind the SkyReels-V1 video model.

First, in terms of accurate generation, the video must precisely render characters’ micro-expressions while also handling the overall composition, subtle changes in light and shadow, and many other elements.

SkyReels-V1 currently supports 33 fine-grained character expressions and more than 400 natural movement combinations, which lets it faithfully reproduce most character expressions. Its training data is Hollywood-level film and television footage, so the composition, actor positioning, and camera angles all have a more cinematic quality.

In the video below, the crying girl’s sadness comes through fully in her slightly reddened eyes, the tears welling up, her trembling lips, her frown, her slightly red nose, and her swaying hair.

Behind the realistic rendering of expressions, movements, composition, and camera language is Kunlun Wanwei’s self-developed intelligent character analysis system, which includes film-grade expression recognition, character spatial position awareness, behavioral intent understanding, and performance scene understanding.

Working together, these components let the model understand 11 kinds of character expressions and accurately render expressions such as disdain, impatience, helplessness, and disgust. Using 3D human reconstruction, the model also understands character positions and behavior at a film and television level. In addition, it analyzes the relationships among characters, clothing, scenes, and plot, which keeps the resulting video complete, consistent, and realistic.

The short 4-second video below contains many key elements. The character seen only from behind trembles slightly while speaking, and the front-facing character’s changing gaze and slight frown convey his feelings. The light and shadow on the male protagonist’s shoulder also shift, tying the character into the surrounding environment.

Accuracy is also reflected in precise controllability. The generated video accurately extracts the expressions and motion features from the driving video, and fully reproduces the speaker’s mouth shapes, changes in facial features, and even slight head movements. Runway, by contrast, failed to generate this video.

Even when the facial angle is completely different from the one in the driving video, the generated video still renders vivid changes in expression accurately. Compared with Runway’s output, the video generated by SkyReels-A1 is richer in expression. As the character speaks, not only do the eyebrows and eyes move, but forehead wrinkles also appear, making the character more realistic.

Second is speed. Generation speed is also an important factor in how users perceive the experience. Using SkyReels-Infer, Kunlun Wanwei’s self-developed inference optimization framework, SkyReels-V1 runs 544p inference in only 80 seconds on a single RTX 4090. It also supports distributed multi-GPU parallelism, including Context Parallel, CFG Parallel, and VAE Parallel.
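
To make the “CFG Parallel” idea more concrete, here is a minimal Python sketch. It assumes the usual classifier-free guidance setup, in which each denoising step needs one conditional and one unconditional model pass that do not depend on each other and can therefore run side by side. The `toy_denoiser` function, its weights, and the thread pool are hypothetical stand-ins for illustration only; this is not SkyReels-Infer’s actual implementation, which would place the two passes on separate GPUs.

```python
from concurrent.futures import ThreadPoolExecutor


def toy_denoiser(latent, prompt_weight):
    # Hypothetical stand-in for one forward pass of a video diffusion model.
    return [value * prompt_weight for value in latent]


def combine(cond, uncond, guidance_scale):
    # Standard classifier-free guidance: push the result away from the
    # unconditional prediction, toward the conditional one.
    return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]


def cfg_step_sequential(latent, guidance_scale):
    cond = toy_denoiser(latent, 1.0)    # pass with the prompt (assumed weight)
    uncond = toy_denoiser(latent, 0.5)  # pass without the prompt (assumed weight)
    return combine(cond, uncond, guidance_scale)


def cfg_step_parallel(latent, guidance_scale, executor):
    # The two passes are independent, so submit both before waiting on either.
    cond_future = executor.submit(toy_denoiser, latent, 1.0)
    uncond_future = executor.submit(toy_denoiser, latent, 0.5)
    return combine(cond_future.result(), uncond_future.result(), guidance_scale)


with ThreadPoolExecutor(max_workers=2) as pool:
    parallel_out = cfg_step_parallel([1.0, 2.0, 4.0], 6.0, pool)

sequential_out = cfg_step_sequential([1.0, 2.0, 4.0], 6.0)
```

Both paths produce the same result; the parallel version only changes where the two passes run, which is why this kind of split can cut per-step latency when two devices are available.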

On the same RTX 4090 setup, Kunlun Wanwei’s solution improves end-to-end latency by 58.3% compared with the large video generation model HunYuan Video.

To reduce the impact of users’ own hardware on generation speed, and to let more users benefit from faster inference, Kunlun Wanwei uses several strategies to fit consumer graphics cards with limited memory and to optimize latency. These include FP8 quantization and parameter-level offloading, along with support for Flash Attention, SageAttention, and model compilation optimizations to reduce latency further.
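
The idea behind parameter-level offloading can be illustrated with a toy sketch: keep all weights in host memory and move only the layer currently being computed onto the GPU. The example below simulates this in plain Python, so the “GPU” is just an attribute, and the class name, layer shapes, and the `scale * x + bias` computation are all hypothetical. It shows the memory trade-off only and does not reflect how SkyReels-Infer implements offloading.

```python
class OffloadedModel:
    """Toy model that keeps at most one layer's parameters 'on the GPU'."""

    def __init__(self, layers):
        # All parameters start in (simulated) host memory.
        self.host_layers = [tuple(layer) for layer in layers]
        self.gpu_layer = None     # simulated GPU residency slot
        self.peak_gpu_params = 0  # most parameters resident at any one time

    def total_params(self):
        return sum(len(layer) for layer in self.host_layers)

    def forward(self, x):
        for layer in self.host_layers:
            self.gpu_layer = layer  # "upload" only this layer
            self.peak_gpu_params = max(self.peak_gpu_params, len(self.gpu_layer))
            scale, bias = self.gpu_layer
            x = scale * x + bias    # toy layer computation
            self.gpu_layer = None   # "offload" before the next layer
        return x


model = OffloadedModel([(2.0, 1.0), (0.5, 0.0), (3.0, -1.0)])
output = model.forward(1.0)
```

In this toy case the model has six parameters in total, but never more than two are resident at once. In a real system the price of that saving is the transfer time between host and GPU memory.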

At the same time, to make user-generated video content more precisely controllable, the researchers open-sourced SkyReels-A1, a SOTA-level controllable expression and motion algorithm built on a video foundation model. It is positioned against Act-One, Runway’s generative character performance tool, and achieves film-level expression capture.

Although the girl in the video shakes her head widely from side to side, the horrified expression on her face is rendered accurately from start to finish.

These results rest on Kunlun Wanwei’s self-developed core technology, which users can easily run on their own computers. Its advantages are cost-effectiveness and controllable generation. The wave of low-cost AI short dramas has arrived.

On the other hand, advanced self-developed technology and comprehensive product layout are also driving Kunlun Wanwei to become a leader in the application field of video generation models.

03.

Large model innovation and real-world applications both flourish

Staying committed to open source

The popularity of short dramas has spread to video generation platforms, and users have begun experimenting with making their own short videos.

SkyReels, the AI short drama product Kunlun Wanwei released in August last year, brings together a full set of video generation tools, covering every step from script creation to the finished short drama.

The SkyReels platform integrates Kunlun Wanwei’s self-developed script model SkyScript, its self-developed storyboard model StoryboardGen, its self-developed 3D generation model Sky3DGen, and WorldEngine, the industry’s first platform to deeply integrate an AI 3D engine with a video model.

SkyReels can generate complete scripts, storyboards, character dialogue, and BGM with one click. It supports custom adjustments to character appearance, voice, and shots, and can automatically convert the content into 1080p, 60 fps HD video, with a single generation producing up to 180 seconds of video.

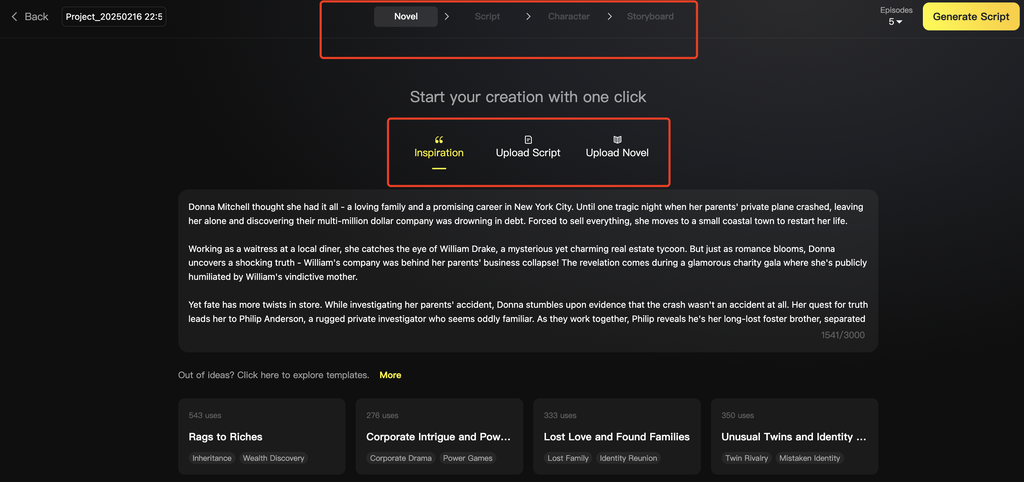

▲SkyReels short drama creation homepage

Users can upload short drama ideas, scripts, or novels to the platform, and SkyReels automatically analyzes the content to generate a matching script and main characters. Users who are not satisfied can reset a character’s voice and appearance. Finally, SkyReels combines the different shots into a complete short drama. Users can make changes at any point in this process.

Short dramas on short video platforms rely on intense pacing and payoff moments, blending genres such as revenge and rebirth. With this in mind, Kunlun Wanwei built SkyScript-100M, a hundred-million-scale, high-quality structured short drama dataset with high-quality annotations of the plot rhythm, payoff moments, and emotional shifts of a large number of popular short dramas.

SkyReels represents a major direction for applying video generation models at scale. On the one hand, this all-in-one short drama product lowers the barrier to short drama production and sparks users’ creative interest; on the other hand, the SkyReels platform is an effective path for turning large model capabilities into application products.

These achievements are strong proof of Kunlun Wanwei’s technical strength, and they also show that the company is becoming an important bellwether of the AIGC era.

In April 2023, Kunlun Wanwei announced its “All in AGI and AIGC” strategy. The strategy is not limited to a single product or technology. It aims to build a complete AI ecosystem, and has gradually taken shape as six business lines: AI large models, AI search, AI music, AI social, AI games, and AI short dramas. The company has also repeatedly launched China’s first products in new AI application segments, from “Tiangong AI Search”, China’s first AI search product, to “Tiangong SkyMusic”, China’s first AI music generation product.

In fact, Kunlun Wanwei’s work on AIGC and large models dates back to 2020, and its R&D investment has consistently been at a leading level. Kunlun Wanwei’s financial report for the third quarter of 2024 shows that its R&D expenses for the first three quarters rose to 1.144 billion yuan, a year-on-year increase of 84.47%.

The company has now completed a full industry chain layout spanning “computing infrastructure, large model algorithms, and AI applications”. With its strategic positioning, technical accumulation, and accurate market insight working together, Kunlun Wanwei’s advantages in the large model industry have steadily grown.

More importantly, DeepSeek’s explosive popularity has once again prompted the industry to reconsider the open source model. Many companies have shifted from closed source to open source, while Kunlun Wanwei has attached great importance to the open source ecosystem from the beginning.

As early as 2022, Kunlun Wanwei open-sourced the full range of Kunlun Tiangong AIGC algorithms and models. Since then, it has open-sourced the 200-billion-parameter sparse large model Skywork-MoE, a 400-billion-parameter MoE model, and AgentStudio, a full-process R&D toolkit for digital agents, among others.

This consistent open source strategy is accelerating the healthy, rapid development of the large model ecosystem.

04.

Conclusion: Kunlun Wanwei’s full-stack AI layout

adds an acceleration engine for the AGI era

While holding to its “All in AGI and AIGC” strategy, Kunlun Wanwei has already delivered many industry applications. Through its open source strategy, these results are accelerating the development of China’s large model industry. The company continues to pursue breakthroughs in self-developed core technology, and has now released a powerful open source video generation model that advances several key areas of video generation. In terms of large model applications, Kunlun Wanwei’s efforts also point to a path toward killer applications for large models.

In the past, creating a short drama required a professional team of writers, directors, cinematographers, and actors, along with substantial funding. SkyReels-V1 and SkyReels-A1 are breaking this process open and meeting more diverse, personalized creative needs. As products like SkyReels emerge and improve, the creative ecosystem for AI short dramas will gradually mature, which in turn will improve model performance and short drama quality and promote technological and content innovation across the industry.

Kunlun Wanwei has been at the forefront of the industry in exploring large model applications. As video generation models improve further in fluency, realism, and resolution, and as issues such as motion accuracy and scene logic are gradually resolved, video generation will have great potential. This is especially true in the currently popular short drama field, where products such as SkyReels help users quickly turn ideas into finished short dramas, making creation freer and more accessible, helping build the short drama content ecosystem, and reshaping the AI short drama industry.

What is certain is that the release of the first AI short drama creation model shows the huge potential of large model technology. As the open source SkyReels-V1 and SkyReels-A1 models are adopted across industries and fields, even individuals or small teams without a strong R&D team or substantial funding can access advanced video generation technology, accelerating the arrival of the era of general artificial intelligence.