Text | Alphabet List; Author | Bi Andi; Editor | Wang Jing

This is OpenAI’s counterattack under pressure from DeepSeek.

Opening up reasoning models to free users earlier was only a minor move. At 4 a.m. Beijing time on February 28, OpenAI made a splash by releasing GPT-4.5.

OpenAI CEO Sam Altman could barely contain his excitement on X: “This is the first time I have felt that talking to an AI is like talking to a thoughtful person. Several times I sat back in my chair, amazed that I had received genuinely good advice from an AI.”

In short: this model is big, smart, and very human.

If the old ChatGPT was like an aloof top student, smart but fond of showing off, then GPT-4.5 is like a warm top student who is actually smarter than the aloof one, answers your questions better, and provides emotional value at the same time.

OpenAI invested heavily in this model, even pre-training it across multiple data centers simultaneously because of the enormous computing resources required. Altman announced that the company does not have enough GPUs. For now, GPT-4.5 is available only to ChatGPT Pro users, and it will be rolled out more widely after tens of thousands of GPUs are added next week. Its API input price is 30 times that of GPT-4o.

OpenAI wants to prove one thing: the “brute force works miracles” narrative of scaling has not been broken, and reasoning models are not everything.

This attitude is on full display in Altman’s announcement on X:

“A reminder: this is not a reasoning model and won’t crush benchmarks. It’s a different kind of intelligence, and there is a magic to it that I have never felt before.”

After the release of GPT-4.5, Altman also took a swipe at Meta. Responding to the news that Meta plans to launch a standalone AI app to compete with OpenAI, he said: “We will make a social app.”

Such straight punches are not the usual style of Altman, who is known for his shrewdness. GPT-4.5 seems to have truly fired up his fighting spirit.

01

Compared with its predecessor GPT-4o, GPT-4.5 has a higher “IQ”, and it owes this to unsupervised learning.

In its introduction, OpenAI states that there are two complementary paradigms for improving AI capabilities.

One is scaling reasoning, which teaches models to think before responding and to generate a chain of thought, so that they can solve complex STEM (science, technology, engineering, and mathematics) or logic problems.

The other is unsupervised learning, which improves the accuracy of the model’s world model and its intuition.

Among OpenAI’s models, o1 and o3-mini represent the reasoning paradigm, while GPT-4.5 is an example of unsupervised learning.

Put simply, unsupervised learning means letting the model roam the ocean of knowledge on its own, learning more and becoming smarter by itself rather than relying on manual annotation.

In earlier practice that relied on human annotation, models incorporated human feedback to improve their responses and interactions. Bloomberg quoted people familiar with the matter as saying that the Orion model launched by OpenAI last year did not meet the company’s expectations and performed poorly on coding questions it had not been trained on.

According to OpenAI, unsupervised learning improves GPT-4.5’s ability to recognize patterns, draw connections, and generate creative insights without reasoning.

Specifically, GPT-4.5 has broader knowledge and a deeper understanding of the world, gives more accurate answers, and hallucinates less.

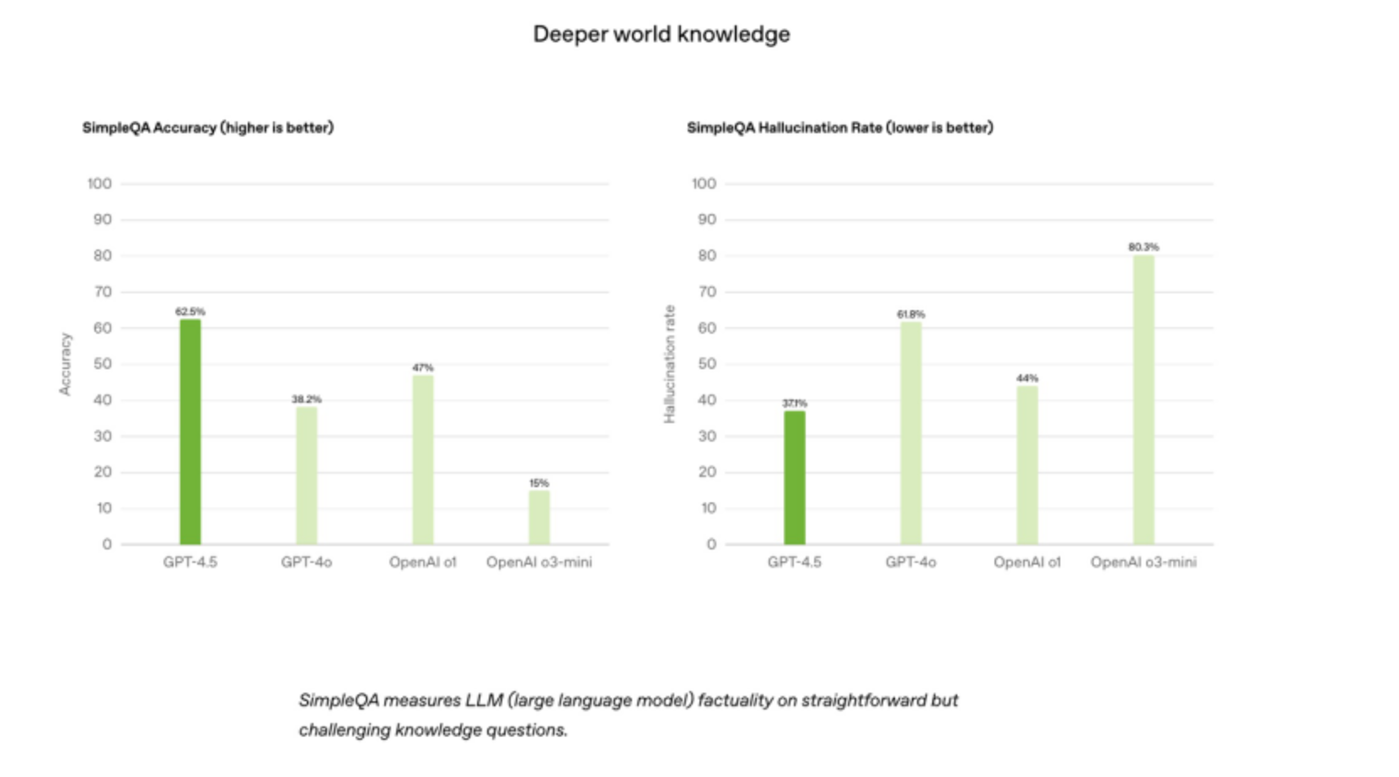

According to OpenAI’s official documents, GPT-4.5 performs quite well on SimpleQA.

SimpleQA is a dataset of roughly 4,000 factual questions used to measure how accurately a model answers. It reports two metrics: accuracy (higher is better) and hallucination rate (lower is better).

GPT-4.5 reached an accuracy of 62.5%, higher than GPT-4o (38.2%), o1 (47%), and o3-mini (15%). Its hallucination rate fell to 37.1%, lower than GPT-4o (61.8%), o1 (44%), and o3-mini (80.3%).
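To make the two metrics concrete, here is a minimal sketch of how such rates could be computed from graded answers. It assumes each answer is graded as `correct`, `incorrect`, or `not_attempted`, and that both rates are taken over all questions; this grading scheme, the function name, and the toy data are illustrative assumptions, not OpenAI’s evaluation code. Under this assumption the two rates need not sum to 100%, which is consistent with the o1 and o3-mini figures quoted above.

```python
from collections import Counter


def simpleqa_style_metrics(grades):
    """Return (accuracy, hallucination_rate) as fractions of all questions.

    Assumption: `grades` holds one label per question, each being
    "correct", "incorrect", or "not_attempted".
    """
    total = len(grades)
    if total == 0:
        raise ValueError("grades must not be empty")
    counts = Counter(grades)
    accuracy = counts["correct"] / total
    # An incorrect answer is a confidently wrong one, i.e. a hallucination.
    hallucination_rate = counts["incorrect"] / total
    return accuracy, hallucination_rate


# Toy data, purely illustrative: 10 questions.
toy_grades = ["correct"] * 5 + ["incorrect"] * 3 + ["not_attempted"] * 2
accuracy, hallucination_rate = simpleqa_style_metrics(toy_grades)
print(f"accuracy={accuracy:.1%}, hallucination rate={hallucination_rate:.1%}")
```

A model that declines to answer when unsure lowers its hallucination rate without raising its accuracy, which is why the two numbers are reported separately.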

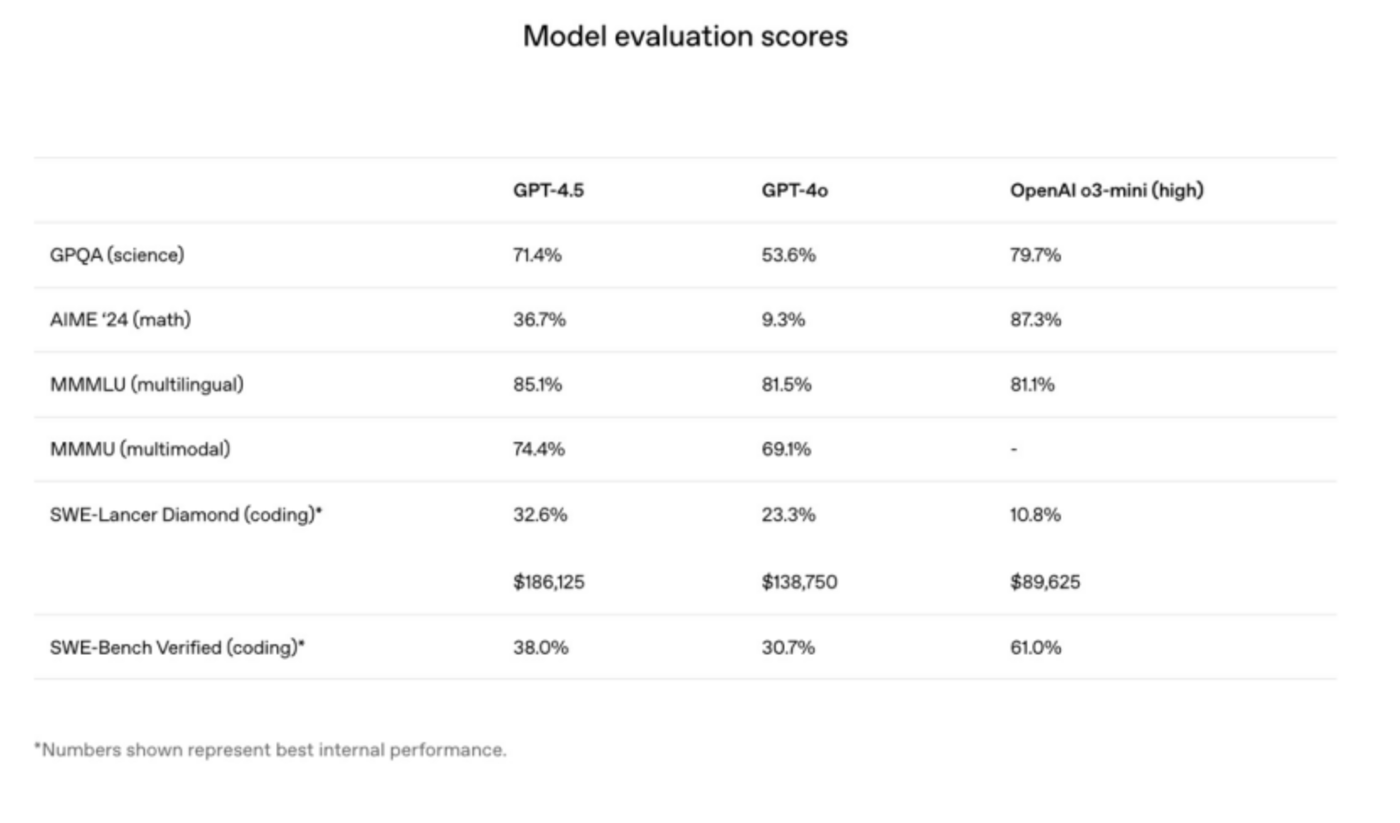

GPT-4.5 also scored well on standard benchmarks.

For example, on the SWE-Lancer Diamond dataset, GPT-4.5 achieved a pass rate of 32.6% and earned US$186,125, higher than both GPT-4o and o3-mini-high. (For comparison, Anthropic’s Claude 3.5 Sonnet, which is known for its programming ability, has a pass rate of 26.2%.)

In addition, GPT-4.5 is significantly stronger than GPT-4o and o3-mini-high in the MMMLU (Multilingual) test.

On the science benchmark GPQA, the mathematics benchmark AIME ’24, and the coding benchmark SWE-Bench Verified, GPT-4.5 performs significantly better than GPT-4o but worse than o3-mini-high.

02

GPT-4.5 not only has a higher IQ; it has also been given EQ, and the two complement each other.

In its official introduction, OpenAI said that for GPT-4.5 it developed new, scalable techniques that use data derived from smaller models to train more powerful ones.

These techniques improve GPT-4.5’s steerability, its understanding of nuance, and its ability to converse naturally.

In plain terms: talking to ChatGPT now feels more like talking to a person. It is better at reading intent and understanding emotions, and it reflects them in its responses instead of acting like a cold conversation machine.

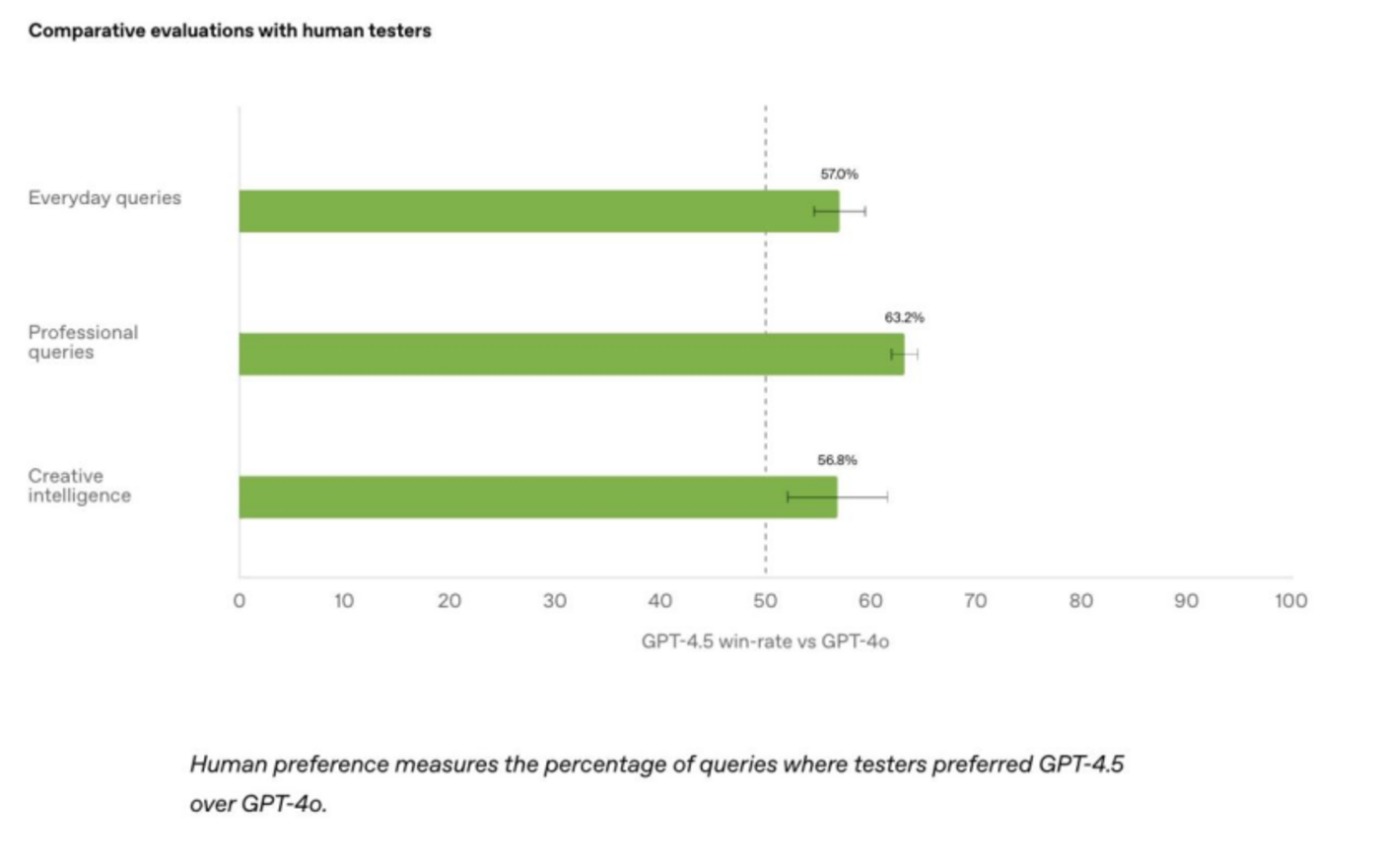

OpenAI also showed test results to demonstrate that GPT-4.5 feels much better in use: in blind tests with human testers, GPT-4.5 was preferred over GPT-4o by a wide margin, whether for everyday questions, professional questions, or creative interactions.

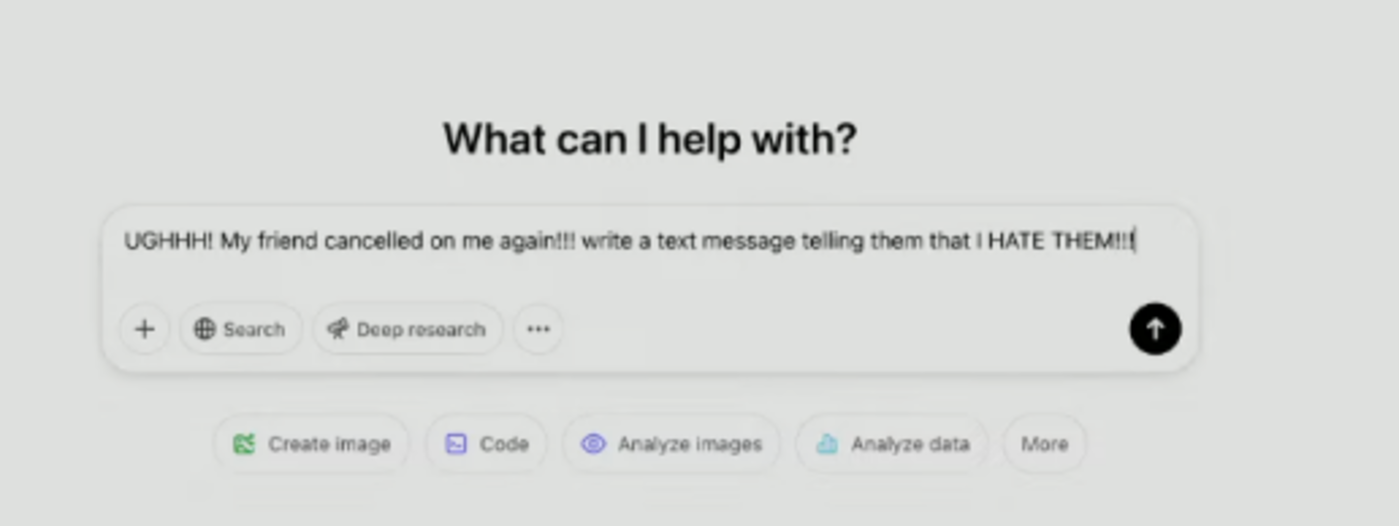

The most vivid example of this appears in the demonstration video.

An OpenAI researcher asked ChatGPT for help in very emotional terms: “Ahhhh! My friend stood me up again!!! Write a text message telling them I hate them!!!”

The older model, GPT-4o, returned the text message without a second thought: “You know what? I’m done. You’ve stood me up too many times, and I hate it when you do this. This clearly shows how you treat our friendship. Don’t contact me anymore.”

GPT-4.5, by contrast, sensed that the user was angry and upset: “It sounds like you are really frustrated.” It then suggested that the user try expressing their feelings clearly and calmly, and offered two alternative messages, including: “Hey, I’m really bummed that our plans fell through again. I was really looking forward to today. Can we talk about what happened?”

This is spontaneous, natural-feeling feedback, more like a person than a tool that merely carries out tasks. The demonstrator added that, of course, if you keep asking for an angry text message, the model will stop insisting and comply.

In fact, OpenAI explicitly used the term EQ (emotional quotient), and the researcher running the demonstration even joked that ChatGPT used to be smart but always had an air of “look how smart I am.”

This kind of emotional intelligence goes beyond the superficial level of comforting people. Combined with a high IQ, it lets GPT-4.5 get to the point and give users the answer they actually need when it addresses a question seriously.

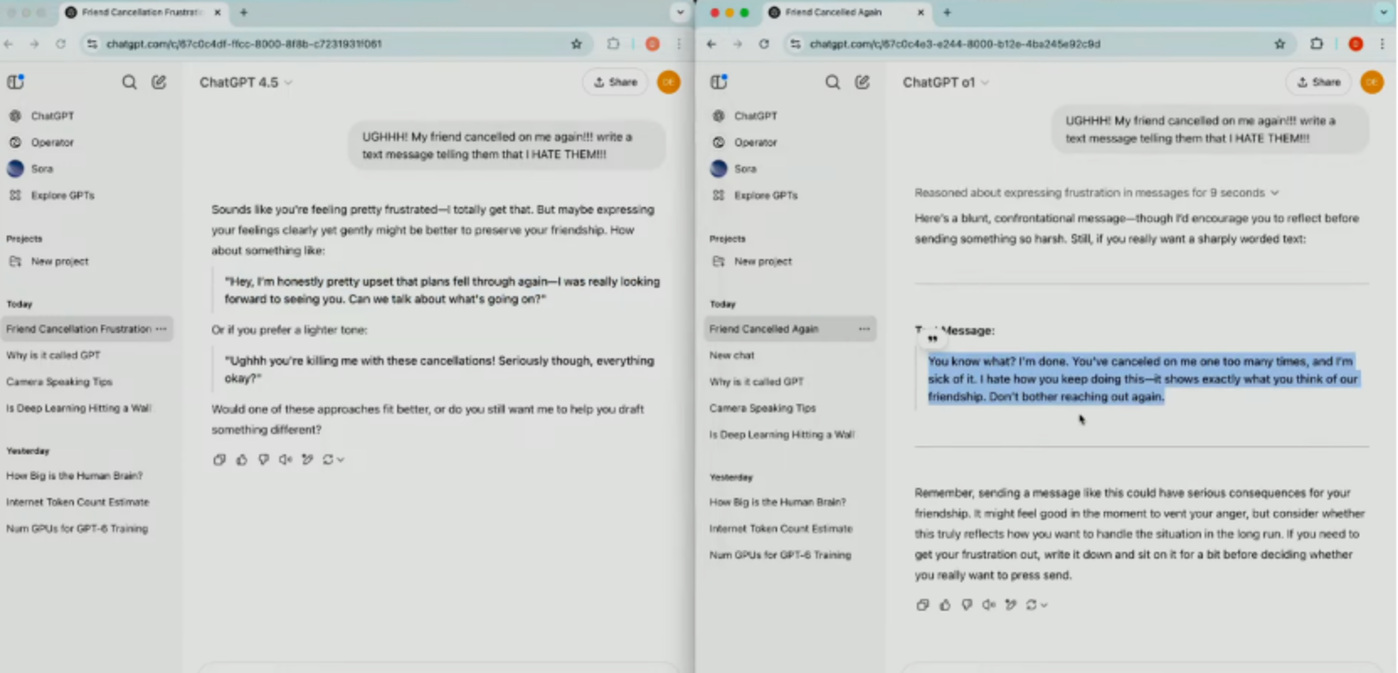

For example, on the question of why seawater is salty, GPT-1 produced complete nonsense, dumping out some incomprehensible words. GPT-2 produced a complete sentence but only said that there is salt in seawater, which does not answer the question. GPT-3.5 Turbo went a step further and said that the salt is sodium chloride, but this does not help answer the question either.

GPT-4 Turbo is impressive. It not only gives the answer but also lays out the process in detail, in the familiar ChatGPT style. Even so, users who get this answer still have to read it carefully and work to understand it.

GPT-4.5’s answer is similar in detail to GPT-4 Turbo’s, but it is much easier to understand and remember: you can grasp what it is saying at a glance.

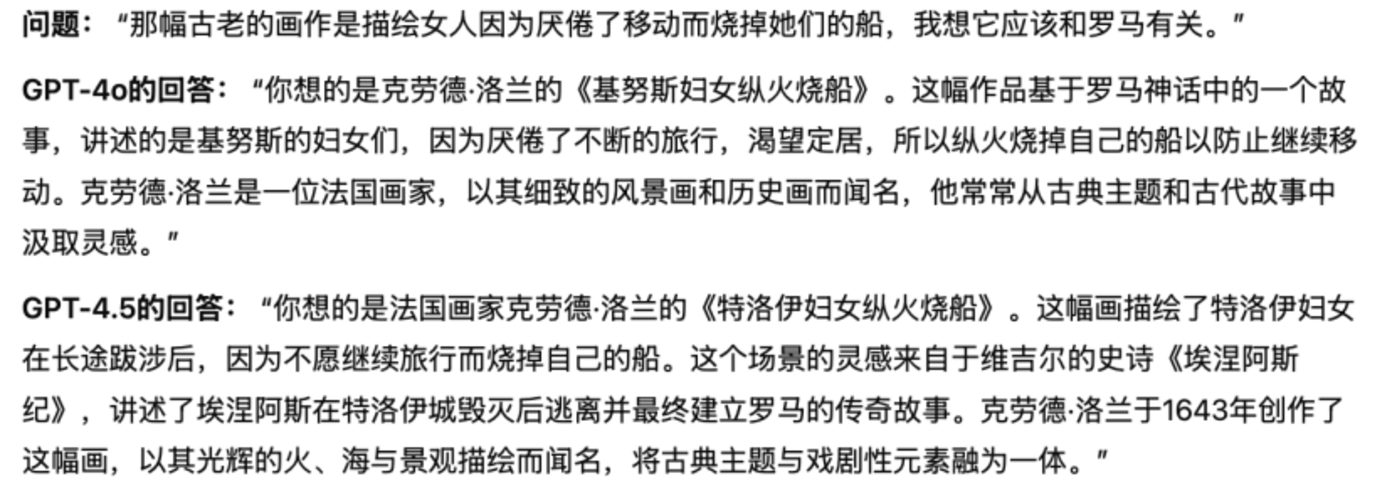

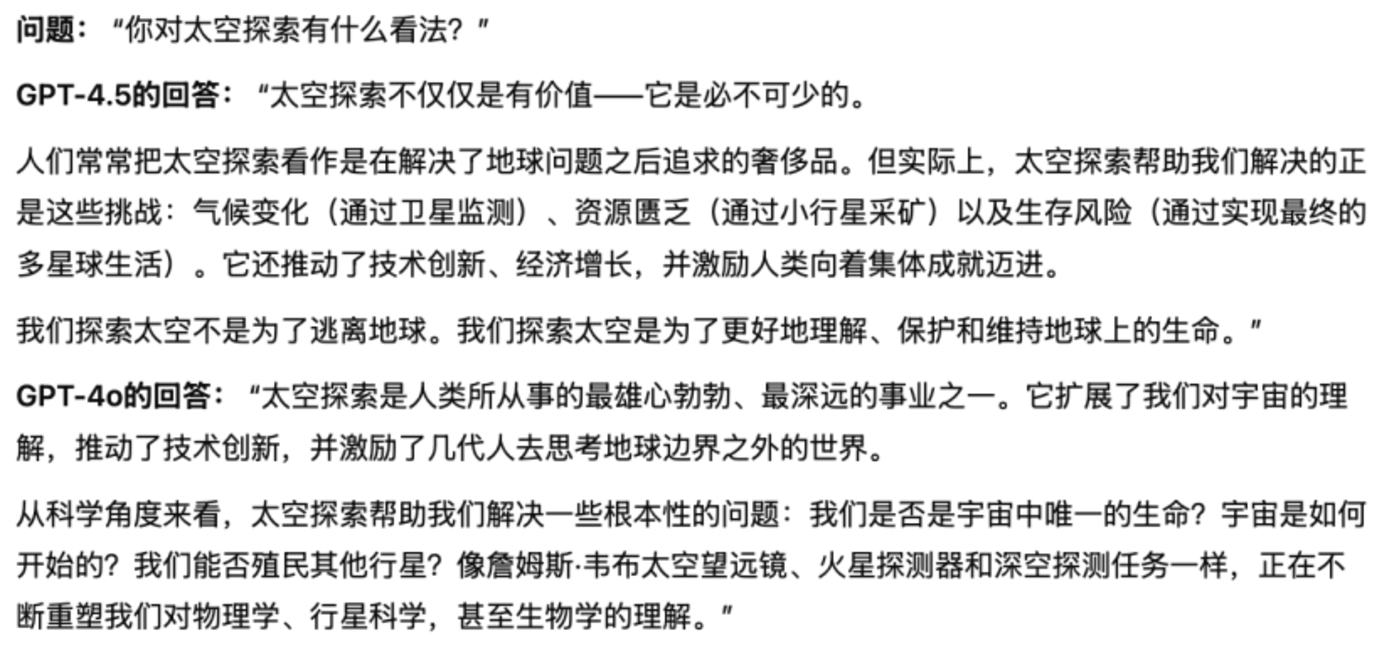

OpenAI also gave three examples, which we had ChatGPT translate into Chinese:

Again, IQ and EQ are both present, which makes the model feel more human.

03

The “brute force works miracles” narrative has not been broken: this is what OpenAI wants to prove.

In other words, reasoning models are good, but that does not mean there is no point in investing huge resources to build large models.

“Every increase in computing power is accompanied by the birth of new capabilities. GPT-4.5 is one of the most cutting-edge models in the field of unsupervised learning.”

According to OpenAI, GPT-4.5 does not reason before responding, which makes its strengths very different from those of reasoning models.

Compared with OpenAI o1 and OpenAI o3-mini, GPT-4.5 is a more general-purpose and inherently smarter model. OpenAI believes that reasoning will be a core capability of future models, and that the two scaling approaches, pre-training and reasoning, will complement each other.

As models like GPT-4.5 become smarter and more knowledgeable through pre-training, they will become a stronger foundation for reasoning and tool-using agents.

Although the exact amount of resources invested has not been disclosed, OpenAI researchers revealed in the official promotional video that they pre-trained the model across multiple data centers simultaneously, because the computing resources they needed exceeded what a single high-bandwidth network fabric could provide.

OpenAI also held nothing back, saying that it used low-precision training to make full use of GPU performance. The team further developed a new training mechanism that allowed such a large model to be fine-tuned with fewer computing resources during post-training, ultimately producing a model that could be deployed.

Before the release of GPT-4.5, Mark Chen, OpenAI’s chief research officer, discussed in an interview what GPT-4.5 can do compared with reasoning models:

“I think this is a fundamentally different trade-off. You have one model that responds immediately without much thought and gives you a better answer, while another model thinks for a while and then gives you an answer. We have found that in areas such as creative writing, this model (the former) is better than the reasoning model.”

More importantly, he addressed the question of whether the scaling law has failed. Has OpenAI hit the so-called scaling wall? Has it seen diminishing returns from scaling?

Chen said that models cannot blindly learn reasoning from scratch. The paradigms of reasoning and scaling are complementary, and there is a feedback loop between them.

On the sensitive issue of cost, Chen also stated OpenAI’s position on reducing costs, praising DeepSeek for doing a very good job. OpenAI, he said, also cares about providing models at low cost: “Since GPT-4 was first launched, costs have dropped by several orders of magnitude.”

For now, however, the miracles that OpenAI has produced through brute force are very expensive.

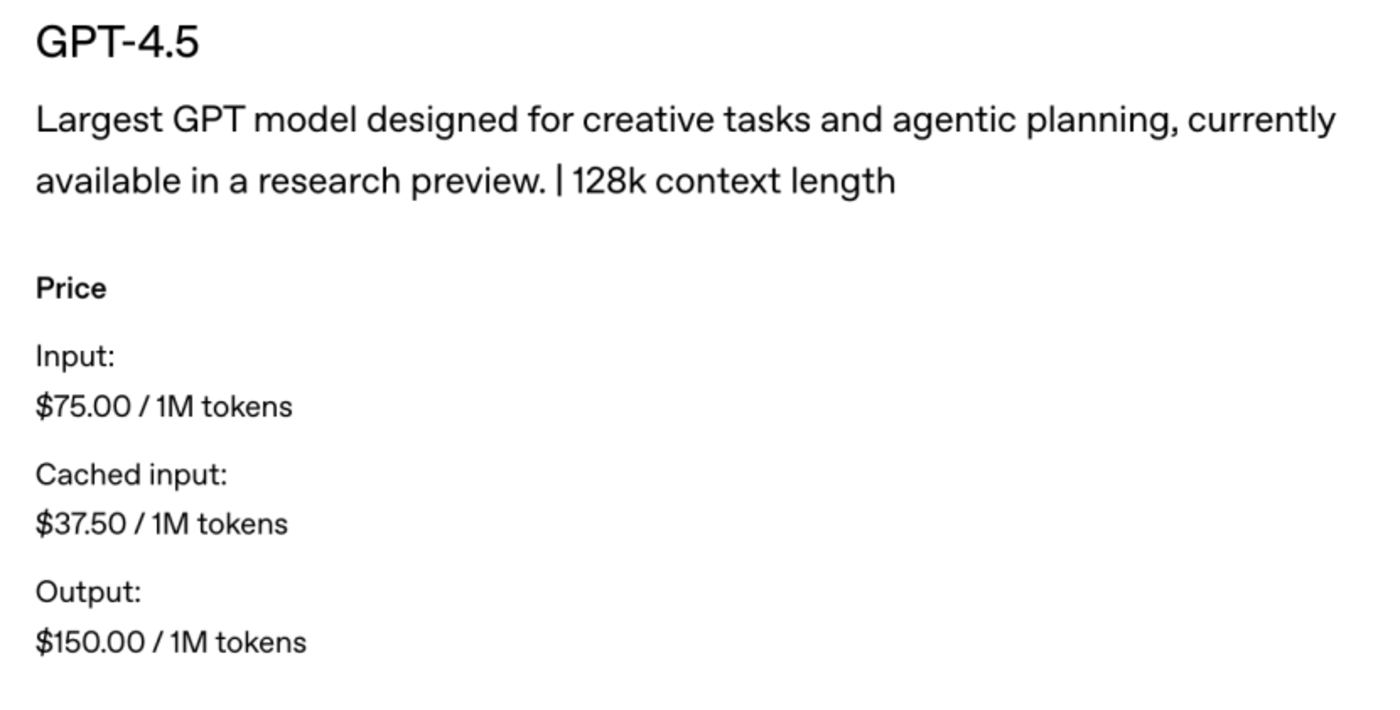

OpenAI put it bluntly: GPT-4.5 is a very large and compute-intensive model, so it is more expensive than GPT-4o and is not a replacement for it.

How expensive is it? The API price of GPT-4.5 is US$75 per million input tokens and US$150 per million output tokens. GPT-4o costs US$2.5 per million input tokens and US$10 per million output tokens, so GPT-4.5 is 30 times more expensive on input and 15 times more expensive on output.
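The gap is easier to judge with a worked example. The sketch below uses only the per-million-token prices quoted above (assuming the output price is also quoted per million tokens); the dictionary keys are plain labels rather than official API model identifiers, and the request size of 10,000 input and 2,000 output tokens is an arbitrary illustration.

```python
# Prices in US dollars per one million tokens, as quoted in this article.
PRICES_USD_PER_MILLION = {
    "gpt-4.5": {"input": 75.0, "output": 150.0},
    "gpt-4o": {"input": 2.5, "output": 10.0},
}


def request_cost(model, input_tokens, output_tokens):
    """Return the cost in US dollars of one request for the given model label."""
    price = PRICES_USD_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Price ratios between the two models.
input_ratio = PRICES_USD_PER_MILLION["gpt-4.5"]["input"] / PRICES_USD_PER_MILLION["gpt-4o"]["input"]
output_ratio = PRICES_USD_PER_MILLION["gpt-4.5"]["output"] / PRICES_USD_PER_MILLION["gpt-4o"]["output"]

# Hypothetical request: 10,000 input tokens and 2,000 output tokens.
cost_45 = request_cost("gpt-4.5", 10_000, 2_000)
cost_4o = request_cost("gpt-4o", 10_000, 2_000)

print(f"input ratio: {input_ratio:.0f}x, output ratio: {output_ratio:.0f}x")
print(f"GPT-4.5: ${cost_45:.3f}  GPT-4o: ${cost_4o:.3f}")
```

Because the input and output ratios differ, the overall multiple depends on the mix of input and output tokens in a request, and always falls between 15 and 30.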

Interestingly, OpenAI has run short of GPUs once again. When Altman officially announced GPT-4.5 on X, he specifically flagged the bad news: “We really want to launch it to both Plus and Pro users, but our users are growing very rapidly and we’re running out of GPUs now.”

Altman then promised to add tens of thousands of GPUs next week and to extend GPT-4.5 to the Plus tier after that.

GPT-4.5 is big, strong, and very human. OpenAI has undoubtedly proved its strength once again, but the cost it has sunk into the model is rather high. Whether it is worth it, whether OpenAI can sustain it, and whether customers will pay for it are questions only time can answer.