Image source: Generated by AI

DeepSeek R1 is having a huge impact across the technology sector and upending people’s perceptions of AI. On the mobile side, innovation is moving quickly.

On February 20, Qualcomm released its latest AI white paper, “AI change is driving end-side reasoning innovation”, which introduces the prospects for high-quality small language models and multimodal reasoning models on the end side.

As AI is deployed at scale, it has become clear that running large-model inference on the end side can bring greater reliability and improve data security. As the technology develops rapidly, more advantages are emerging.

Qualcomm pointed out that four major trends are driving end-side AI transformation:

- Today’s advanced small AI models already perform very well. New techniques such as model distillation and novel AI network architectures can simplify development without compromising quality, so new models improve quickly and approach the performance of cloud models;

- Model parameter counts are shrinking rapidly. Advanced quantization and pruning techniques allow developers to reduce the number of model parameters without materially affecting accuracy;

- Developers can create richer applications on the edge side. The rapid proliferation of high-quality AI models means that features such as text summarization, programming assistants, and real-time translation are becoming common on terminals such as smartphones, allowing AI to support commercial applications deployed at scale across the edge;

- AI is becoming the new UI. Personalized multimodal AI agents will simplify interactions and efficiently complete tasks across various applications.

While cutting-edge large-model technology continues to make breakthroughs, the technology industry has also begun to devote its energy to efficient deployment on the edge side. Driven by falling training costs, rapid inference deployments, and innovation for edge environments, the industry has spawned a large number of smarter, smaller, and more efficient models.

These technological advances are gradually reaching chip manufacturers, developers and consumers, forming new trends.

Smaller models have become an inevitable direction of development

Looking at the development of large language models in recent years, several significant trends stand out: a shift in focus from parameter scale to applications, a move from single-modal to multimodal models, the rise of lightweight models, and deployment on the terminal side.

In particular, the recent launch of DeepSeek V3 and R1 reflects these trends in the AI industry. The resulting reduction in training costs, rapid inference deployment and innovation for edge environments are driving the proliferation of high-quality small models. Looking more closely, the current shift to small models is the result of several factors combined.

First, model network architectures continue to innovate. From the initially dominant Transformer to the later coexistence of mixture-of-experts (MoE) models and state space models (SSM), the computing overhead and power consumption of large-model development have been continuously reduced. As a result, more and more models are adopting new architectures.

Second, the use of knowledge distillation has become the key to developing efficient “basic and task-specific” small models. Transferring the knowledge of a complex teacher model to a smaller student model has two benefits. On the one hand, the model’s parameter count and computation are significantly reduced, the training process is simplified, and less storage space is occupied, making the model suitable for deployment on resource-limited devices. On the other hand, the student model still acquires rich knowledge, which preserves its accuracy and generalization capabilities.

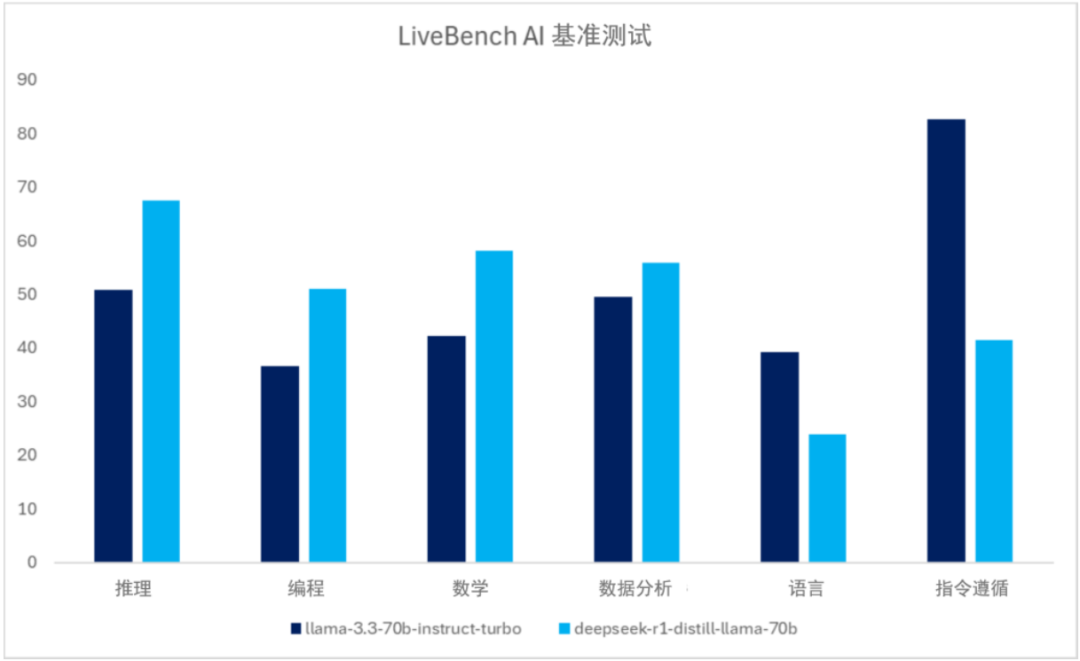

Comparison of average LiveBench AI benchmark results between Meta Llama’s 70-billion-parameter model and DeepSeek’s corresponding distilled model. Source: LiveBench.ai
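To make the teacher-to-student transfer described above more concrete, here is a minimal sketch of one common textbook formulation of distillation: the student is trained to match the teacher’s temperature-softened output distribution. This is an illustration only. The function names, the temperature value and the example logits are assumptions, and the models discussed in this article may well use different recipes.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Illustrative logits (made up for this sketch, not measured data).
teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.6]   # close to the teacher
poor_student = [0.5, 1.0, 4.0]   # far from the teacher

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

A student whose outputs track the teacher closely gets a loss near zero, while one that disagrees gets a larger loss. Minimizing this quantity during training is what pushes the smaller model toward the larger model’s behavior.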

Third, optimization and deployment techniques for large models, such as quantization, compression and pruning, continue to improve, pushing model sizes down further. These techniques can significantly reduce a model’s computation and storage requirements while maintaining high performance.
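As a rough illustration of two of the techniques named above, the sketch below applies symmetric 8-bit quantization and magnitude pruning to a tiny list of weights. It is a simplified sketch under stated assumptions: real toolchains work on whole tensors, often per channel, and usually fine-tune afterwards. The function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights, chosen only for illustration.
weights = [0.1, -0.5, 0.03, 0.9]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print("int8 values:", q)
print("max round-trip error:", max(abs(a - b) for a, b in zip(weights, restored)))
print("pruned (50% sparsity):", prune_by_magnitude(weights, 0.5))
```

Each weight is stored in 8 bits instead of 16 or 32, at the cost of a rounding error of at most half the scale step, and pruning removes the weights that contribute least by magnitude.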

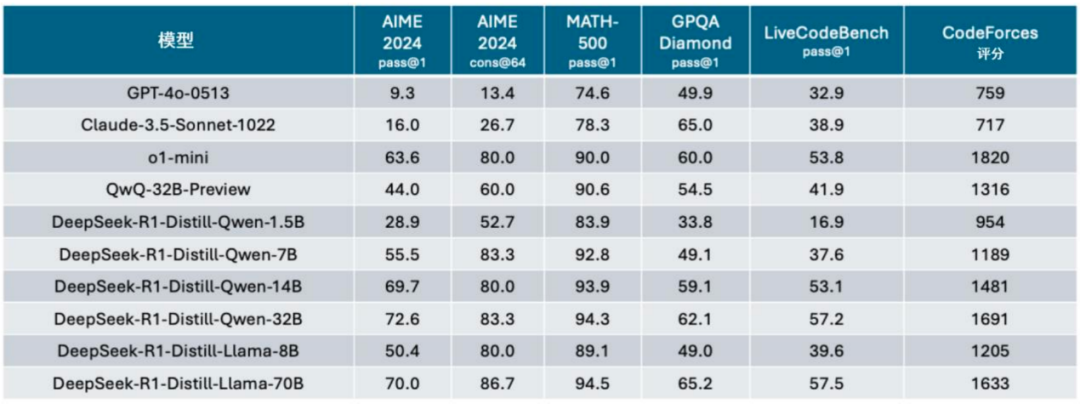

With these innovations and advances in underlying architecture and technology, the capabilities of small models are approaching or even surpassing those of much larger cutting-edge models. For example, on the GPQA benchmark, DeepSeek’s distilled versions based on the Tongyi Qianwen (Qwen) and Llama models achieved performance similar to or higher than GPT-4o, Claude 3.5 Sonnet, and GPT-o1 mini.

Source: DeepSeek, January 2025.

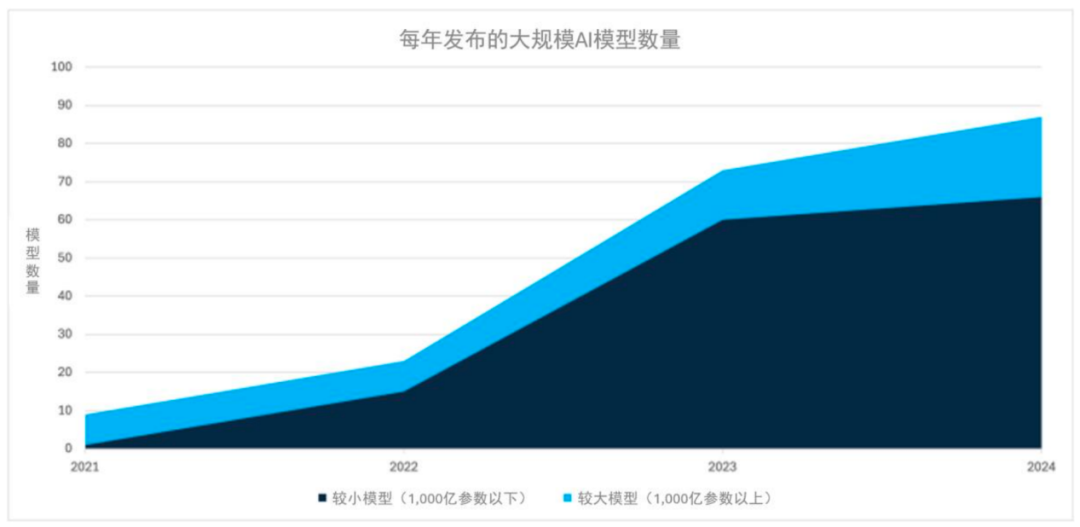

From an industry-wide perspective, advances in technology have driven the proliferation of high-quality generative AI models. According to Epoch AI statistics, more than 75% of the AI models released in 2024 have fewer than 100 billion parameters, making models of this scale the mainstream.

Source: Epoch AI, January 2025.

Therefore, driven by factors such as cost, computing power requirements and performance trade-offs, small models are replacing large models as the first choice for many companies and developers. Mainstream model families including DeepSeek R1 and Meta Llama have released small versions that perform well in mainstream benchmarks and domain-specific task tests.

In particular, small models offer faster inference, lower memory usage and lower power consumption, making them the first choice for terminal-side deployments such as mobile phones and PCs.

Terminal-side models typically have between 1 billion and 10 billion parameters, and some recently released models have dropped below 2 billion. As parameter counts continue to fall and the quality of small models improves, parameter count is no longer an important indicator of model quality.

Meanwhile, current flagship smartphones are configured with more than 12GB of RAM, which is theoretically enough to run many of these models. Small models designed for mainstream mobile phones also continue to emerge.
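A back-of-the-envelope calculation shows why these parameter counts and the 12GB figure fit together. The sketch below estimates the memory needed for the weights alone as parameters times bits per weight, divided by eight. It deliberately ignores activations, caches and the operating system’s own memory use, so treat the numbers as a lower bound. The function name and the choice of bit widths are assumptions made for illustration.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


DEVICE_RAM_GB = 12  # flagship-phone figure quoted in the text

for params in (1e9, 2e9, 10e9):
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{params / 1e9:.0f}B params @ {bits}-bit: {gb:.1f} GB "
              f"({gb / DEVICE_RAM_GB:.0%} of {DEVICE_RAM_GB} GB)")
```

Under these assumptions, a 10-billion-parameter model at 16 bits per weight needs about 20 GB for its weights and would not fit, while the same model at 4 bits needs about 5 GB, and a 2-billion-parameter model at 4 bits needs about 1 GB.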

As high-quality small models are deployed at scale on mobile phones, PCs and other terminals, AI inference features and multimodal generative AI applications (such as document summarization, AI image generation, and real-time language translation) are spreading further. The broad rollout of AI on the terminal side is an important step in bringing AI to a wider range of ordinary users.

In the process of promoting the implementation of end-side AI, Qualcomm has been paving the way for the industry.

In the era of AI inference, Qualcomm will lead industry transformation

Qualcomm is leading and benefiting from this change with technical expertise such as energy-efficient chip design, advanced deployment of AI software stacks, and comprehensive development support for edge applications.

Durga Malladi, senior vice president and general manager of technology planning and edge solutions at Qualcomm Technologies, said that today’s small models already outperform the large cloud models launched a year ago. “Our focus is no longer on the model itself, but has shifted to application development on the terminal. As more and more high-quality AI models can run on the terminal side, AI applications are beginning to emerge. AI is redefining the user interface of all terminals, which also means that AI is becoming the new UI on the terminal side.”

Qualcomm believes that in the new AI-defined era, data from multiple sensors, including voice, text, and images, will first be processed by AI agents rather than being fed directly into a particular app. After the agent obtains the information, it assigns tasks to different background applications, a process that is invisible to the user.

In conventional mobile phone systems, the number of terminal-side models available to developers is increasing rapidly, and an AI agent must select the model it needs from the many available on the terminal to complete a task. This approach will significantly reduce the complexity of interactions, enable highly personalized multimodal capabilities, and complete tasks across various applications.

For end users, the AI agent is the only UI they interact with on the front end; all actual application processing is completed in the background.
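The model-selection step described above can be sketched in a few lines. The article does not say how an agent chooses among the models on a device, so the policy here (pick the smallest model that supports the task and fits a memory budget) is purely an assumption, and every model name, task label and memory figure is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OnDeviceModel:
    name: str          # hypothetical model name
    tasks: frozenset   # task labels the model can handle
    memory_gb: float   # approximate memory needed to run it


def select_model(task, models, memory_budget_gb):
    """Pick the smallest model that supports the task and fits the budget."""
    candidates = [m for m in models
                  if task in m.tasks and m.memory_gb <= memory_budget_gb]
    if not candidates:
        return None  # nothing on the device can serve this request
    return min(candidates, key=lambda m: m.memory_gb)


# Hypothetical registry of models installed on the terminal.
MODELS = [
    OnDeviceModel("tiny-translator", frozenset({"translate"}), 1.0),
    OnDeviceModel("general-assistant", frozenset({"translate", "summarize"}), 3.5),
    OnDeviceModel("image-generator", frozenset({"image_generation"}), 2.5),
]

for task in ("translate", "summarize", "image_generation"):
    chosen = select_model(task, MODELS, memory_budget_gb=4.0)
    print(task, "->", chosen.name if chosen else "no suitable model")
```

The user only states the task. Which model runs, and in which background application, stays hidden behind the agent, which matches the "agent as the only UI" idea in the text.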

With the capabilities of high-quality small models, terminals such as smartphones can deliver new kinds of interaction. Qualcomm has certain strategic advantages as AI shifts from training to large-scale inference and expands from the cloud to the terminal:

- Scalability covering all key edge segments: Qualcomm’s scalable hardware and software solutions power billions of smartphones, cars, XR headsets and glasses, PCs, Industrial Internet of Things devices and other terminals, providing a basis for a wide range of transformative AI experiences;

- Active ecosystem: Through the Qualcomm AI Software Stack, Qualcomm AI Hub and strategic developer collaboration, Qualcomm provides tools, frameworks and SDKs for model deployment across different edge terminal domains, empowering developers to accelerate the adoption of AI agents and applications on the edge side.

Qualcomm not only predicted the explosion of terminal-side models, but has also promoted the rollout of edge AI inference across terminal devices.

Cristiano Amon, President and CEO of Qualcomm, shared his views on current AI industry trends during a recent first-quarter earnings conference call: “The recent DeepSeek R1 and other similar models demonstrate that AI models are evolving faster and faster. They are becoming smaller, more powerful and more efficient, and can run directly on the terminal side. In fact, DeepSeek R1’s distilled models were running on smartphones and PCs equipped with the Snapdragon platform within just a few days of release.”

As we enter the era of AI inference, model training will still take place in the cloud, but inference will increasingly run on the terminal side, making AI more convenient, customizable and efficient. This will promote the development and adoption of more targeted, dedicated models and applications, and thus drive demand for computing platforms across various terminals.

The breakout success of DeepSeek R1 has validated Qualcomm’s earlier judgment on terminal-side AI. With its advanced connectivity, computing and edge AI technologies and its unique product portfolio, Qualcomm not only maintains a highly differentiated advantage in terminal-side AI, but also has strong support for realizing its hybrid AI vision.

In the future, end-side AI will play an increasingly important role in various industries.