Article source: Silicon-Based Research Laboratory

Image source: Generated by AI

As the large-model race enters its third year, DeepSeek, a home-grown start-up from Hangzhou, China, has acted like a catfish in a fish tank, upending the global large-model ecosystem.

Since the Spring Festival of the Year of the Snake, a race to “connect to DeepSeek” has swept China’s AI industry. In just over a month, more than 100 Chinese companies, from chip makers, cloud providers and computing-service providers to software vendors and consumer-hardware makers, have announced that they are joining DeepSeek’s “circle of friends”, using its open-source models to expand their business ambitions.

There is no doubt that DeepSeek has made large models more popular, but surging traffic brings a more critical issue with it: computing power.

On this key issue, market sentiment has swung back and forth. At first, DeepSeek’s low computing cost hit the share prices of computing-power suppliers such as Nvidia; later, as user visits and demand for private deployment surged, computing-power stocks strengthened again and demand outstripped supply. Recently, DeepSeek also disclosed its theoretical costs and profit margin, reigniting discussion in the AI community.

As DeepSeek’s circle of friends keeps growing, can the chip-hardware arms race started by OpenAI continue? And what new rules will this computing-power game bring?

DeepSeek is known for its low cost and high performance. According to the company’s official figures, each H800 node handles about 73.7k input / 14.8k output tokens per second, for a theoretical total daily revenue of US$562,027 and a cost-profit margin of 545%. Its “China-style innovation” has energized the domestic computing-power ecosystem.
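The margin figure can be unpacked with a little arithmetic. Assuming the usual definition of cost-profit margin, (revenue - cost) / cost, the daily cost implied by the two published numbers is revenue / (1 + margin). Because 545% is rounded, the result is approximate; it lands close to the roughly US$87,072 daily cost DeepSeek itself cited (based on an assumed rental price of US$2 per H800 GPU-hour). The sketch also converts per-node throughput into daily token volume, assuming a node runs at that rate for a full 24 hours. It is a back-of-envelope check, not DeepSeek’s accounting.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def implied_daily_cost(revenue: float, cost_profit_margin: float) -> float:
    """Back out cost from revenue, assuming margin = (revenue - cost) / cost."""
    return revenue / (1.0 + cost_profit_margin)

def tokens_per_day(tokens_per_second: float) -> float:
    """Daily volume for one node, assuming it sustains this rate all day."""
    return tokens_per_second * SECONDS_PER_DAY

revenue_usd = 562_027   # theoretical daily revenue quoted in the article
margin = 5.45           # 545% cost-profit margin quoted in the article

cost_usd = implied_daily_cost(revenue_usd, margin)
print(f"implied daily cost ~ US${cost_usd:,.0f}")            # ~ US$87,136
print(f"input tokens/node/day  ~ {tokens_per_day(73_700):,.0f}")
print(f"output tokens/node/day ~ {tokens_per_day(14_800):,.0f}")
```

The small gap between the back-calculated cost and the disclosed figure comes from rounding the margin to a whole percentage.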

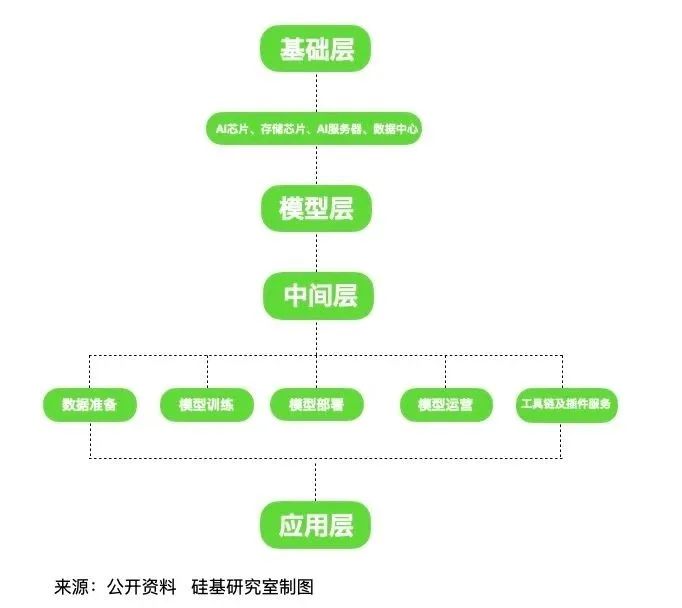

Judging from DeepSeek’s “circle of friends”, the most active and fastest-moving players fall into four categories: the foundation layer (domestic chip makers and cloud providers), the middle layer (AI Infra vendors), and software and hardware vendors serving the business (B) and consumer (C) ends.

According to incomplete statistics from the “Silicon-Based Research Laboratory”, nearly 100 companies were in the first batch to connect to DeepSeek.

“We get more than a dozen inquiries a day, and we haven’t had a break since work resumed after the Spring Festival,” a business development (BD) staffer at an AI Infra vendor told the “Silicon-Based Research Laboratory”.

As the middle layer connecting large models’ underlying computing power with downstream applications, AI Infra vendors were not only the first to catch DeepSeek’s traffic but also the first beneficiaries of its spillover.

Yuan Jinhui, founder of AI Infra vendor SiliconFlow, recounted in a WeChat Moments post that after DeepSeek broke into the mainstream, his team quickly approached Huawei and, on February 1, completed adapting DeepSeek-R1 and V3 to the Ascend ecosystem.

Domestic chip makers reacted just as sharply as AI Infra vendors. Li Yang (a pseudonym), who works at an intelligent computing center service provider, observed firsthand that in this DeepSeek craze, “domestic AI chip makers responded very quickly this time, connecting almost in sync with Nvidia and the other international players.”

Cloud vendors and device-oriented software and hardware vendors followed close behind.

Among cloud vendors, nearly every internet company’s cloud launched DeepSeek-based API services during the Spring Festival, kicking off a new round of “low API prices + open-source models” competition. Cloud computing power has helped DeepSeek spread faster across industries.

On the hardware side, smartphone makers were among the first to bring DeepSeek onto devices. On the application side, where users feel the change most directly, major companies including Tencent joined the race with “super app + DeepSeek” offerings, giving large-model competition another boost.

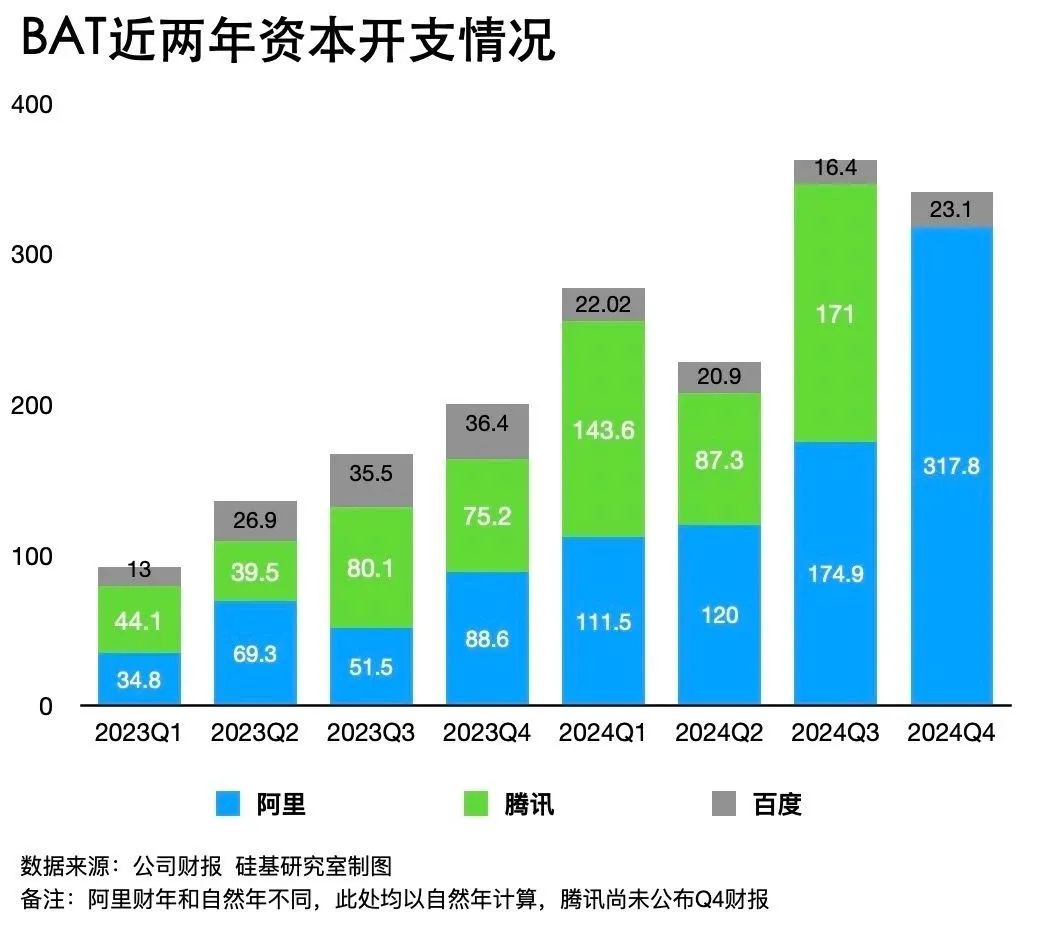

Behind the scramble for DeepSeek’s traffic, each player has its own agenda. From a computing-power perspective, cloud vendors with relatively ample computing reserves are clearly the most motivated. Cloud vendors are the main investors in China’s computing-power market: externally, they make large fixed-asset investments, including buying chips and servers and leasing land to build data centers; internally, they develop their own chips.

Take Alibaba and Baidu: in 2024, Alibaba’s capital expenditure totaled more than 72.4 billion yuan, and Baidu’s exceeded 8.2 billion yuan. According to earlier reports by Caijing, Alibaba and ByteDance have each already built computing reserves at the 100,000-card level.

In addition, DeepSeek has shown that high-performance models can be reproduced with less computing power and at lower cost through techniques such as model compression, sparse computation and mixed-precision training, which also creates “self-supply” opportunities for large companies that develop their own chips.

“With self-supply plus external leasing, cloud vendors have a closed business loop of their own,” Yu Hao, vice president of Legend Holdings, told the “Silicon-Based Research Laboratory”.

Second, at the strategic level, BAT (Baidu, Alibaba, Tencent) and ByteDance, which run their own cloud services, can use DeepSeek to pursue two goals. The first is to treat DeepSeek as a super traffic portal and pair it with their own products to capture its traffic in the short term. The second is to spur their internal teams and improve their own models through direct comparison.

The former is a playbook big companies mastered in the mobile-internet era. Chen Wei, a senior chip expert and chairman of China Deposit Computing, believes DeepSeek has changed how the public thinks about the business nature of large models: “The public used to think a large model was a tool for everyday conversation and office work. After DeepSeek, it is clear that a large model can also become a super traffic portal that surpasses the internet and even existing operating systems.”

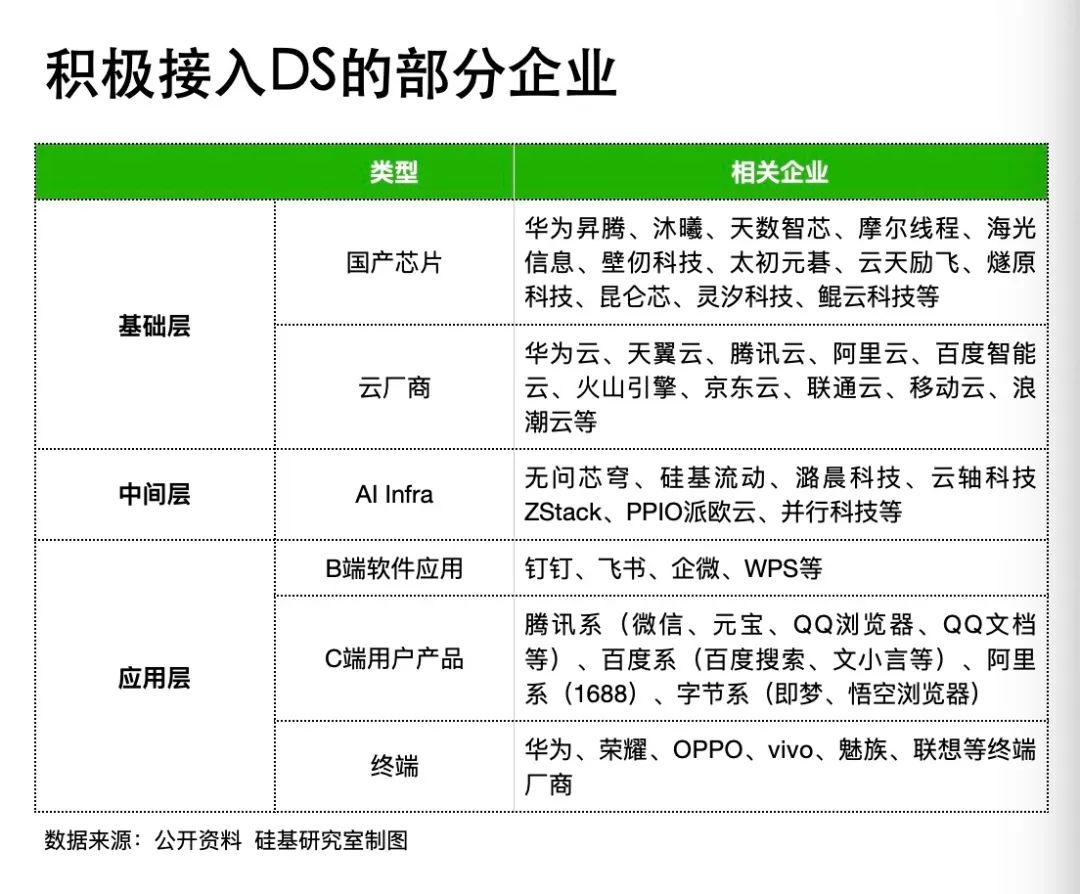

DeepSeek mobile daily active users (DAU) over the past 30 days. Source: Sensor Tower

Sensor Tower data shows that as of February 24, DeepSeek’s daily active users had fallen from a peak of more than 15 million to around 7 million. Meanwhile, Yuanbao, Doubao, Tongyi and others all saw significant increases in visits and daily active users.

Yu Hao noted that DeepSeek’s model capabilities are plain for all to see: it has traffic and a market, and in its early stage it achieved lightning-fast DAU growth with a free consumer-facing (C-side) product. Leading companies naturally moved quickly to follow.

Across the entire history of China’s internet, it is hard to find another product like DeepSeek that the whole industry has scrambled to connect to, apart from last year’s “native craze”.

Finally, on the application side, DeepSeek supports complex AI tasks at low cost and low power consumption, pushing AI further down into smart devices, intelligent driving and industry.

Taking phone makers as an example, brands such as Huawei, Honor, OPPO, vivo, Meizu and Nubia have announced access to DeepSeek through their AI assistants.

In the long run, phone makers’ collective embrace of DeepSeek will, on the one hand, help expand its cloud AI ecosystem; on the other, it is expected to keep driving demand for mobile SoCs and spur more software and hardware upgrades on the device and edge sides.

Qualcomm CEO Cristiano Amon said on a recent earnings call: “DeepSeek-R1 and other similar models have recently shown that AI models are becoming faster, smaller, more powerful, and more efficient, and can now run directly on devices.”

Along with the “connect to DeepSeek” wave came the familiar “server busy” message.

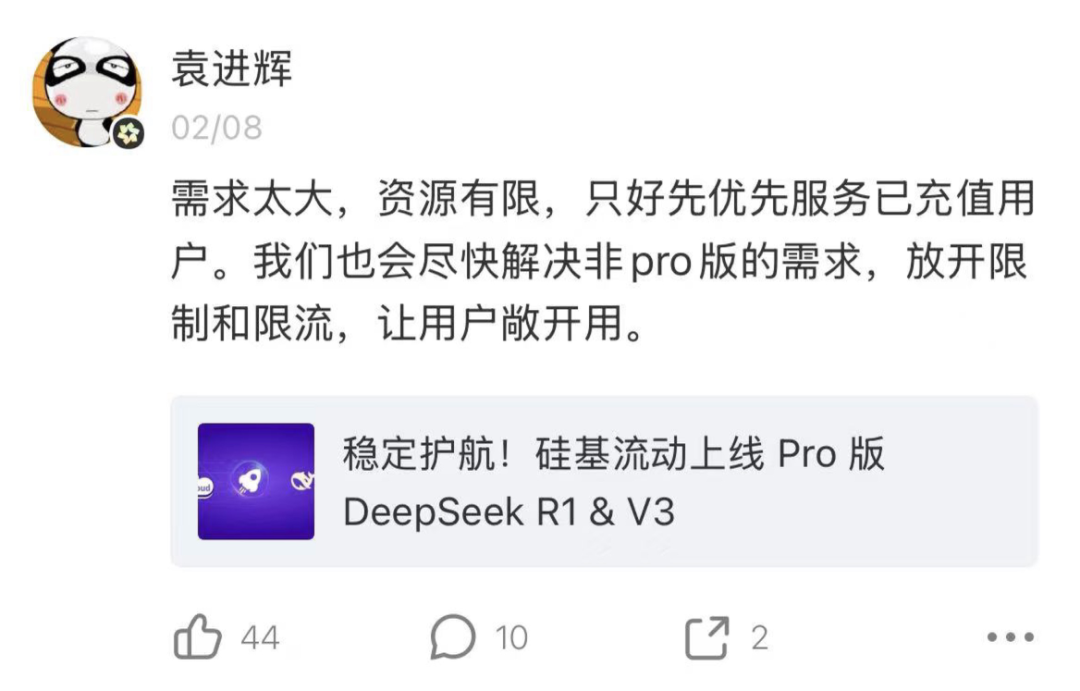

From users’ direct experience, servers are busy and latency is rising; even SiliconFlow has had to start rate-limiting traffic and seek more computing resources. With large companies bringing in super apps from the mobile-internet era, their huge user bases have deepened anxiety about computing power.

SiliconFlow’s Yuan Jinhui announced that rate limiting had begun due to strong demand

Does DeepSeek’s “circle of friends” have enough computing power?

The “Silicon-Based Research Laboratory” has learned from various parties that the current industry consensus on this issue is: in the short term, computing power is being reshuffled; in the long term, computing power remains in short supply.

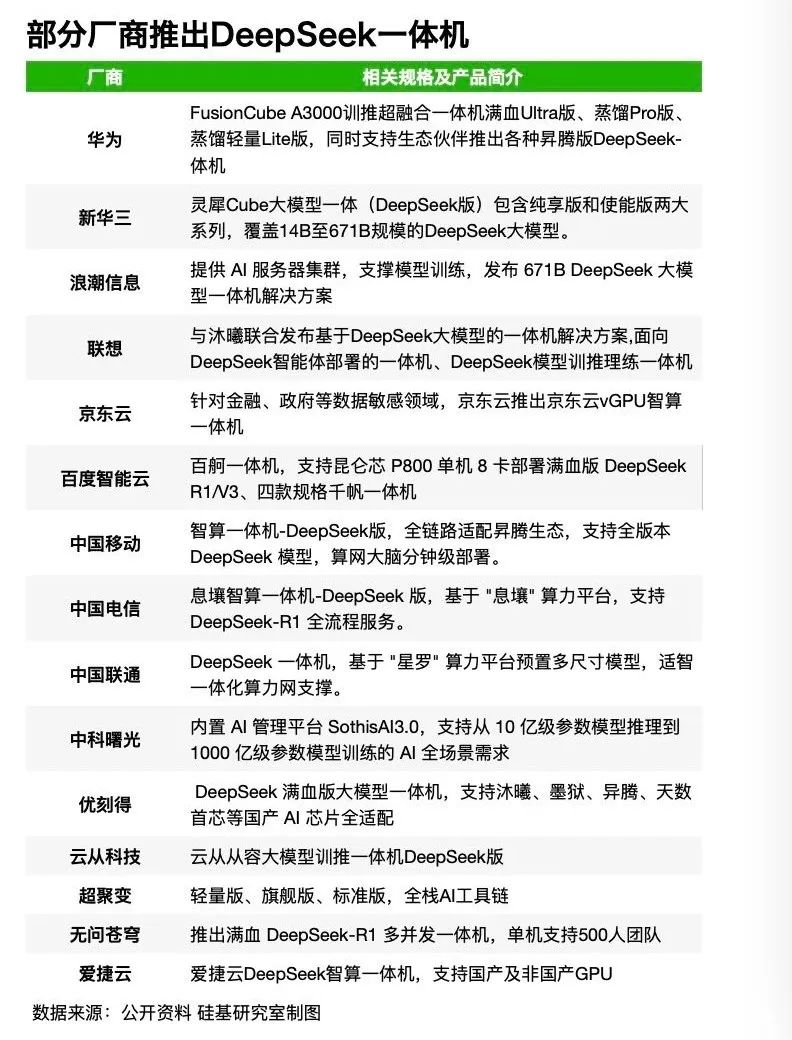

“Short-term reshuffle” means that DeepSeek has broken model makers’ old narrative that “brute force works miracles”. Its system-level engineering across model architecture, pre-training and inference has lowered the computing threshold for deploying models. In the short term, this opens a window for domestic chip makers, computing-service providers and others to consolidate domestic computing power and reshuffle the market.

Specifically, this has two aspects: first, it gives domestic chips more opportunities; second, it eases the problem of idle computing power at some smart computing centers.

On the first point, strong performance has always been the moat of Nvidia’s high-end GPUs, and a major weakness of domestic chips, which got a late start.

DeepSeek’s emergence has reduced reliance on high-performance chips to some extent. As a large model built on a Mixture-of-Experts (MoE) architecture, DeepSeek does not place high demands on a chip’s training performance. Even performance-capped GPUs such as Nvidia’s H20 can meet DeepSeek’s on-premises deployment needs, and the H20 has even become a hot seller in distribution channels.
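The reason an MoE model needs less compute per token is that a router sends each token to only a few experts, so most parameters sit idle on any given step (DeepSeek-V3, for instance, has 671 billion parameters in total but activates about 37 billion per token). The sketch below shows generic top-k softmax gating in plain Python. It is a simplified illustration, not DeepSeek’s implementation, which adds shared experts and uses a different gating function; the toy experts and router logits are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]

def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(token: Vector, router_logits: Sequence[float],
                experts: Sequence[Expert], k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token to its top-k experts and return the gated mix of their outputs."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)  # renormalize gates over the chosen experts
    out = [0.0] * len(token)
    for i in chosen:                      # only k of the experts do any work
        y = experts[i](token)
        w = probs[i] / norm
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen

# Hypothetical toy experts: expert s simply scales its input by s.
experts = [lambda v, s=s: [s * x for x in v] for s in range(8)]
out, chosen = moe_forward([1.0, 2.0], [0, 0, 0, 5, 0, 5, 0, 0], experts, k=2)
print(chosen, out)  # [3, 5] [4.0, 8.0]
```

Here six of the eight experts are never called for this token, which is the property that keeps per-token compute low even as total parameter count grows.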

A chip distributor told the “Silicon-Based Research Laboratory” that eight-card H20 servers with 141GB per card currently sell for 1.2 million yuan each, but only as forward orders with a 4-6 week wait. Eight-card servers with the 96GB H20 are still widely in stock, but “the price changes every day.”
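Why memory per card matters so much here can be shown with rough arithmetic. The sketch below assumes the publicly stated 671 billion total parameters of DeepSeek-V3/R1, 1 byte per parameter for FP8 weights and 2 bytes for BF16, and 1 GB = 10^9 bytes. It ignores the KV cache, activations and framework overhead, all of which need additional room, so the headroom it reports is an upper bound.

```python
TOTAL_PARAMS_BILLION = 671   # DeepSeek-V3/R1 total parameter count
CARDS_PER_SERVER = 8

def weight_gb(params_billion: float, bytes_per_param: float) -> float:
    """Weight footprint in GB (1e9 params * bytes per param / 1e9 bytes per GB)."""
    return params_billion * bytes_per_param

def headroom_gb(gb_per_card: float, bytes_per_param: float) -> float:
    """Memory left on one 8-card server after loading all weights (negative = does not fit)."""
    return CARDS_PER_SERVER * gb_per_card - weight_gb(TOTAL_PARAMS_BILLION, bytes_per_param)

for gb_per_card in (96, 141):
    for fmt, nbytes in (("FP8", 1), ("BF16", 2)):
        left = headroom_gb(gb_per_card, nbytes)
        verdict = "fits" if left >= 0 else "does not fit"
        print(f"8 x {gb_per_card} GB, {fmt}: {verdict} ({left:+.0f} GB headroom)")
```

On these assumptions, FP8 weights fit on a 96GB-per-card server with under 100 GB left for the KV cache, while the 141GB version leaves several times more room; that gap is one plausible reason buyers are willing to wait for the larger cards.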

Compared with training scenarios, DeepSeek’s hardware requirements lean more toward “stacking up” resources, especially memory.

Chen Wei told the “Silicon-Based Research Laboratory” that for very large, ultra-sparse MoE models like DeepSeek, GPU memory is far from sufficient, and from an industry perspective, the high-bandwidth memory (HBM) paired with high-end GPUs is expensive.

This is why the industry has been exploring more cost-effective deployment options. “One reasonable approach is to spread multiple experts across CPUs and multiple GPUs. Another is to use CPU memory directly as a cache for fine-grained segmented experts that are used infrequently,” Chen Wei said.

These two approaches draw on “cost-effective computing” and “heterogeneous collaboration”, capabilities that have long been the differentiating strengths of domestic chips.
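The second approach Chen Wei describes, keeping rarely used experts in CPU memory and pulling them onto the GPU on demand, behaves like a cache. The sketch below models it as a least-recently-used (LRU) cache in plain Python. The class name, slot count and string “weights” are hypothetical placeholders, and the dictionary lookup stands in for what would really be a host-to-device memory transfer. LRU is only one eviction policy, chosen here for simplicity; a real system might rank experts by routing frequency instead.

```python
from collections import OrderedDict
from typing import Dict

class ExpertCache:
    """Hypothetical LRU cache: up to `gpu_slots` experts live 'on the GPU';
    the rest stay in (simulated) CPU memory and are copied in on demand."""

    def __init__(self, cpu_store: Dict[int, object], gpu_slots: int):
        self.cpu_store = cpu_store
        self.gpu_slots = gpu_slots
        self.gpu: "OrderedDict[int, object]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, expert_id: int) -> object:
        if expert_id in self.gpu:
            self.gpu.move_to_end(expert_id)   # mark as most recently used
            self.hits += 1
        else:
            self.misses += 1
            if len(self.gpu) >= self.gpu_slots:
                self.gpu.popitem(last=False)  # evict the least recently used expert
            # Stands in for a CPU-to-GPU copy of the expert's weights.
            self.gpu[expert_id] = self.cpu_store[expert_id]
        return self.gpu[expert_id]

store = {i: f"expert-{i}-weights" for i in range(16)}  # 16 experts in "CPU memory"
cache = ExpertCache(store, gpu_slots=2)                 # room for 2 on the "GPU"
for eid in [0, 1, 0, 2, 0, 1]:
    cache.get(eid)
print(cache.hits, cache.misses, list(cache.gpu))  # 2 4 [0, 1]
```

The hit rate depends entirely on how skewed expert usage is: the more often a few experts are routed to, the less often slow CPU-to-GPU transfers are needed.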

Another large-model industry insider noted that the domestic ecosystem is no stranger to DeepSeek. When DeepSeek released its second-generation open-source model, DeepSeek-V2, in 2024, SiliconFlow launched an inference service for it on the Nvidia ecosystem and has been very familiar with its model architecture ever since.

The work of AI Infra vendors spans data preparation, model training, deployment and application integration. SiliconFlow mainly uses products such as its model cloud platform SiliconCloud and its large-language-model inference engine SiliconLLM to make model capabilities available on demand. Put simply, such vendors act like a “chef” who turns raw computing resources into “finished dishes” that meet user needs. SimilarWeb data shows that since SiliconFlow launched its DeepSeek R1/V3 inference service, its traffic has grown dozens of times over, and SiliconCloud now has more than 3 million users.

For some smart computing centers built on domestic chips, DeepSeek’s release has also eased idle and fragmented computing power in the short term. Li Yang, the smart computing center service provider mentioned above, estimates that there are currently more than 600 smart computing center projects in China (including those under construction), and localities are also building thousand-card and ten-thousand-card computing resource pools.

According to incomplete statistics from the organization “IDC Circle”, as of November 20, 2024, the number of smart computing center projects in China had reached 634.

Amid this vigorous “computing-power wave”, why does idle capacity still occur?

In Li Yang’s view, before DeepSeek broke out, domestic computing centers lacked a low-cost, high-performance open-source model. “Most smart computing centers run on domestic cards. Every time a large model becomes popular, a whole batch of them has to be adapted. With limited manpower and no good open-source models, much of that capacity ended up unused.”

Another reason is that computing centers mainly serve industry and academia, customers that are highly sensitive to computing costs. In the past, smart computing centers sold inference capacity card by card, so their cluster-scale advantages never materialized and domestic computing power went underused.

“Cost and production capacity are the main problems. Chips made in low volumes are expensive. Only when production capacity stabilizes can economies of scale bring down the cost of smart computing centers,” Li Yang told the “Silicon-Based Research Laboratory”.

DeepSeek broke this deadlock. First, domestic chip makers responded quickly. Second, as what Li Yang calls a truly “good open-source model”, it has energized the upstream and downstream ecosystem and, combined with policy support, put previously idle domestic computing power to real use.

In addition, AI Infra vendors in the middle layer are rapidly integrating the ecosystem and accelerating the reshuffle of the computing market. Spurred by DeepSeek, for example, they have launched agile, high-concurrency all-in-one appliances that integrate software and hardware to offer more diverse and efficient inference solutions.

However, once the short-term reshuffle is over, computing power will remain scarce in the long run.

Training and inference are the main workloads for AI chips. DeepSeek is reshaping the structure of intelligent computing power, shifting the Scaling Law toward the post-training and inference stages.

According to IDC data, China’s intelligent computing power reached 725.3 EFLOPS (exaFLOPS, or 10^18 floating-point operations per second) in 2024, up 74.1% year on year and more than three times the 20.6% growth of general-purpose computing power over the same period. Structurally, the share of training in intelligent computing power is expected to fall to 27.4%, while the share of inference rises to 72.6%.

Based on current daily active users and average daily token calls, Minsheng Securities made a conservative estimate of what connecting “super apps” to DeepSeek would require: once apps with DAU on the order of 1 billion are connected to DeepSeek and fully rolled out, the required inference capacity is about 280,000 H20s.
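A bottom-up estimate of this kind can be sketched as follows: convert daily token volume (DAU times tokens per user) into an average rate, scale it up for peak load, and divide by what one accelerator can sustain. This is an assumed method, since the report’s exact formula and inputs are not given here. All input values in the example are hypothetical round numbers, not Minsheng Securities’ assumptions, so the result is not meant to reproduce the 280,000 figure.

```python
import math

SECONDS_PER_DAY = 86_400

def accelerators_needed(dau: float, tokens_per_user_per_day: float,
                        tokens_per_sec_per_card: float, peak_to_avg: float = 1.0) -> int:
    """Cards needed to serve daily token demand at a given peak-to-average ratio."""
    daily_tokens = dau * tokens_per_user_per_day
    avg_tokens_per_sec = daily_tokens / SECONDS_PER_DAY
    peak_tokens_per_sec = avg_tokens_per_sec * peak_to_avg
    return math.ceil(peak_tokens_per_sec / tokens_per_sec_per_card)

# Hypothetical inputs: 1 billion DAU, 2,000 tokens per user per day,
# 1,000 tokens/s sustained per card, peak load 3x the daily average.
print(accelerators_needed(1e9, 2_000, 1_000, peak_to_avg=3.0))  # 69445
```

The peak-to-average factor matters as much as DAU: usage is bursty, so sizing for the daily average alone would likely understate the number of cards required.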

Soochow Securities, taking AI phones as an example, calculated that demand for on-device computing power will roughly keep more than doubling each year from 2024 to 2027. Converting AI phones’ demand for cloud computing power into the FP8 compute of Blackwell GPUs, annual demand in 2025 comes to roughly 120,000 cards.

“Computing never sleeps,” a large-model industry insider told the “Silicon-Based Research Laboratory”, explaining that long-term demand for computing power can be roughly gauged from the token consumption figures announced by major companies.

On December 18 last year, ByteDance announced that average daily token consumption of its Doubao general-purpose model had exceeded 4 trillion. Baidu announced in August last year that its Wenxin Yiyan general-purpose model processed more than 1 trillion tokens of text per day. Wu Di, head of intelligent algorithms at Volcano Engine, previously predicted that Doubao’s daily token consumption would exceed 100 trillion by 2027, more than 100 times its level at the time.

The same insider said that, factoring in future video inference and user growth, long-term demand for inference computing could approach one million cards, adding that “long-term computing demand is hard to estimate accurately.”

In fact, China’s major tech companies have entered a new expansion cycle. Alibaba’s capital expenditure over the past two years, for example, has grown strongly every quarter, with triple-digit year-on-year increases in some quarters. On its latest earnings call, Alibaba’s management guided that its investment in cloud and AI infrastructure over the next three years will exceed the total of the past ten years, at roughly 380 billion yuan.

According to incomplete statistics from the “Silicon-Based Research Laboratory”, since the start of the new year, many Chinese cloud vendors have announced plans for new nodes, and Alibaba Cloud has announced new data centers in Thailand and Mexico.

Overseas tech companies began ramping up computing investment earlier than China’s tech giants. As the “Silicon-Based Research Laboratory” noted in its article “DeepSeek Panic: Why Is It Hard to Stop Microsoft’s Spending Spree?”, on a longer timeline the capital expenditures of Microsoft, Meta, Amazon and Google have trended clearly upward since Q2 2023.

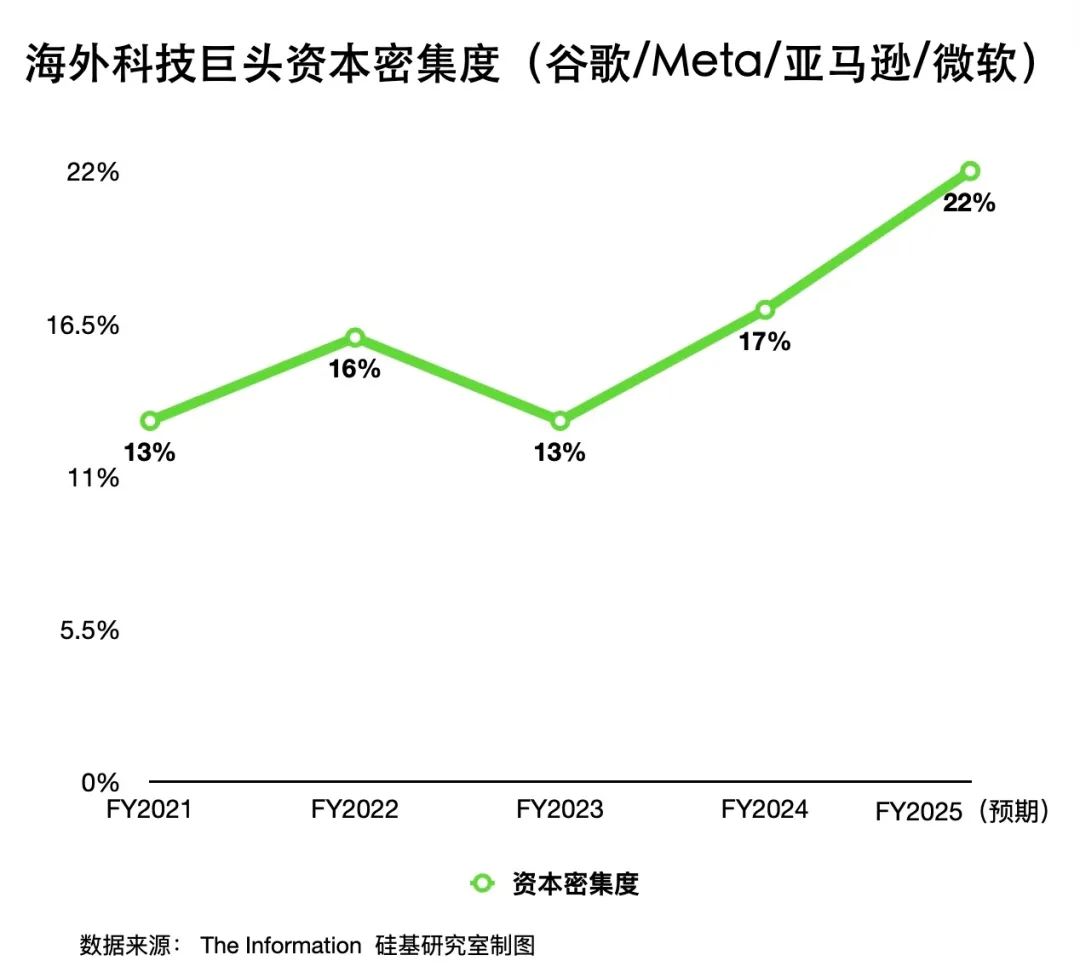

Measured by “capital intensity” (capital expenditure as a share of revenue), the combined capital expenditure of Microsoft, Meta, Amazon and Google reached 17.2% of their total revenue in 2024, even higher than the capital intensity of large energy companies during their previous investment cycle.

Is this fear of missing out, or the popular “Jevons Paradox” at work? No one can say for sure.

Meta founder Mark Zuckerberg was once asked by a Bloomberg host, “Is investment in data centers a bubble?” Unable to answer directly, he drew an analogy to the dot-com bubble: “Many so-called bubbles eventually turn out to be very valuable. It’s only a matter of time. I don’t know how AI will eventually develop. It’s still difficult to predict.”

Rather than debating whether it is a bubble, domestic computing power has more pressing work to do at this stage.

Yu Hao believes domestic computing power has gone through two stages in recent years. The first was “a pony pulling a big cart”: playing catch-up, with everyone benchmarking against OpenAI, and star companies raising large sums only to disappoint. The second is “millet plus rifles”: DeepSeek changed the game by putting every grain to its best use and aiming every bullet at the target, combining software and hardware to squeeze computing power and optimize it to the extreme.

“The industry needs more cost-effective computing power,” concluded Chen Wei, who has worked on artificial intelligence in a Tsinghua University laboratory since 2003.

In Chen Wei’s view, two things matter: first, firmly supporting valuable home-grown independent innovations like DeepSeek; second, actively replicating DeepSeek’s technical route and its approach of running more cost-effectively in specific business scenarios. “Everyone should support more cross-disciplinary joint innovation like DeepSeek, rather than isolated single-point innovation.”

DeepSeek alone, however, can hardly shake the hardware moat of the international giants.

Take earlier media reports that “DeepSeek has broken the CUDA ecosystem”. In reality, DeepSeek used PTX (Parallel Thread Execution, an intermediate instruction set within the CUDA ecosystem) to bypass CUDA’s high-level APIs and control and optimize the underlying hardware more directly. This route, however, still seeks breakthroughs within Nvidia’s framework.

Even DeepSeek, for all its innovation in technical approach, has not fully escaped Nvidia’s CUDA ecosystem. For domestic AI chip makers, this means that riding DeepSeek’s momentum to band together and build an independently controllable domestic counterpart to CUDA is a long-term task.

A server expert who asked not to be named told the “Silicon-Based Research Laboratory” that domestic AI chips still need designs tailored to large models, such as “low precision with large caches”, and should speed up support for the FP8 data type (DeepSeek uses FP8 mixed-precision training). This would make inference cheaper and simplify designs for downstream server makers.
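To make the FP8 point concrete: FP8 stores each value in one byte instead of BF16’s two, halving memory and bandwidth per value at the cost of much coarser precision. The sketch below simulates rounding to the common E4M3 variant (4 exponent bits, 3 mantissa bits, largest finite value 448) with one scale factor per block, in pure Python. It is an illustrative simulation under those assumptions, not DeepSeek’s training recipe or any vendor’s kernel, and the block size is an arbitrary parameter.

```python
import math
from typing import List, Sequence, Tuple

E4M3_MAX = 448.0               # largest finite E4M3 value
E4M3_MIN_NORMAL = 2.0 ** -6    # smallest normal E4M3 value
E4M3_SUBNORMAL_STEP = 2.0 ** -9

def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3 value (ties to even), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = abs(x)
    if a >= E4M3_MIN_NORMAL:
        _, e = math.frexp(a)           # a = m * 2**e with 0.5 <= m < 1
        step = 2.0 ** (e - 1 - 3)      # 3 mantissa bits: 8 steps per power of two
    else:
        step = E4M3_SUBNORMAL_STEP     # subnormal range has a fixed spacing
    q = round(a / step) * step         # Python's round() breaks ties to even
    return sign * min(q, E4M3_MAX)

def quantize_blocks(values: Sequence[float], block: int = 128) -> Tuple[List[float], List[float]]:
    """Scale each block so its largest magnitude maps to 448, then round to E4M3."""
    q: List[float] = []
    scales: List[float] = []
    for start in range(0, len(values), block):
        chunk = values[start:start + block]
        amax = max(abs(v) for v in chunk) or 1.0   # avoid dividing by zero on all-zero blocks
        scale = E4M3_MAX / amax
        scales.append(scale)
        q.extend(round_to_e4m3(v * scale) for v in chunk)
    return q, scales

def dequantize_blocks(q: Sequence[float], scales: Sequence[float], block: int = 128) -> List[float]:
    """Undo the per-block scaling to recover approximate original values."""
    return [v / scales[i // block] for i, v in enumerate(q)]

weights = [0.02, -0.5, 1.3, 0.0007]
q, scales = quantize_blocks(weights, block=4)
print(dequantize_blocks(q, scales, block=4))
```

Per-block scaling keeps one outlier from crushing the precision of every other value in a large tensor, which is why block-wise or tile-wise scales are a common companion to FP8.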

For cloud vendors and computing-service providers, big bets are not enough; they also need to do the economic math on large models. Besides buying chips, building data centers incurs costs for supporting energy infrastructure, staffing (operations and maintenance, R&D) and data assets.

In addition, before a large model reaches its final training run, the trial-and-error costs of preliminary research, the data used for training, staff compensation and so on are also part of the total cost. Model makers do not disclose these hidden costs.

Reducing resource waste at every stage of computing buildout therefore tests not only each major company’s Infra capabilities but also its expectation management.

Common practices among overseas cloud vendors include extending server depreciation periods and partnering with energy companies; some also cut their losses promptly. It was reported earlier that Microsoft had paused construction of part of an AI data center in Wisconsin that OpenAI planned to use, having overestimated computing demand in some regions.
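Why a longer depreciation period helps is simple arithmetic. Under straight-line depreciation, the annual expense is the asset’s cost minus its salvage value, divided by its useful life, so stretching the life spreads the same outlay over more years and lowers the expense booked each year. The sketch below uses a hypothetical 12-billion outlay with zero salvage value purely for illustration; it does not reflect any company’s figures, and it changes reported profit rather than the cash actually spent.

```python
def straight_line_depreciation(cost: float, useful_life_years: int, salvage: float = 0.0) -> float:
    """Annual depreciation expense under the straight-line method."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    return (cost - salvage) / useful_life_years

capex = 12e9  # hypothetical server outlay
for years in (4, 5, 6):
    annual = straight_line_depreciation(capex, years)
    print(f"{years}-year life: {annual / 1e9:.1f} billion per year")
```

Moving from a 4-year to a 6-year life cuts the annual charge by a third in this example, which flows straight into reported operating margin.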

Planning resources more rationally to raise computing utilization on the one hand, and using financial tools to protect profit margins on the other, is the direction cloud vendors are likely to take in responding to the fundamentals. On the model side, embracing open source and continually optimizing algorithms has become the consensus among major players.

At the same time, driving breakout products and real-world deployment on the application side has become the focus as companies expand their “computing-power stories”. According to the “Silicon-Based Research Laboratory”, personal agents on the consumer (C) side, private enterprise deployment on the business (B) side and public intelligent computing clouds for government on the G side are the three main directions the industry is currently focusing on.

If OpenAI set off the computing-power game, that game will continue now that DeepSeek has broken out, but with a new character: it is a game of “computing efficiency”.

Although Nvidia once lost some US$600 billion in market value, Jensen Huang has repeatedly praised DeepSeek. On the latest earnings call, he said: “Thanks to DeepSeek for open-sourcing an absolutely world-class reasoning model.”