Text | Extra studio, Author | Lin Shu, Editor | Emperor Bai

Now that Internet giants such as Tencent and Baidu have integrated DeepSeek, the model not only sits behind two super-entrances, WeChat and Baidu Search, but is also fully embedded in the Tencent and Baidu ecosystems, where its reach covers hundreds of millions of users.

The giants' rush to integrate DeepSeek reflects, on the one hand, the helplessness and passivity of traditional Internet companies that are forced to rely on a third party. DeepSeek's advantages in performance, cost, and ease of use do dwarf their self-developed models, so the giants have to integrate it to stay competitive.

On the other hand, it is also a strategic choice: the giants are using their existing ecosystems to absorb advanced technology quickly and consolidate their market position. Through ecosystem integration and organizational change, they are trying to turn DeepSeek into a moat of their own.

Essentially, this is a contest between a rising star of the AI era and the established forces of the traditional giants. It is also the only way for those giants to rebuild their competitive advantage in the AI era.

Ecosystem reorganization in the AI era

Since the advent of generative AI, one problem has troubled AI companies and users of large models alike: in terms of functional integration, most earlier large models struggled to support deep, scenario-based applications.

This is because truly scenario-based AI applications require connecting a large number of data islands and application scenarios, and those data and scenarios are usually divided among different Internet platforms.

According to IDC, 73% of the data stored by China's Internet industry is concentrated on the top 10 platforms, such as Tencent, Alibaba, and ByteDance, yet the success rate of cross-platform data calls is less than 5%.

For example, user behavior data such as browsing and purchase history on e-commerce platforms like Taobao and JD.com is isolated from the interest-tag data held by social platforms like Douyin and Weibo. A user's behavior when ordering meals on Meituan, watching videos on Douyin, and managing money on Alipay is scattered across different platforms, so AI cannot build a complete user profile.

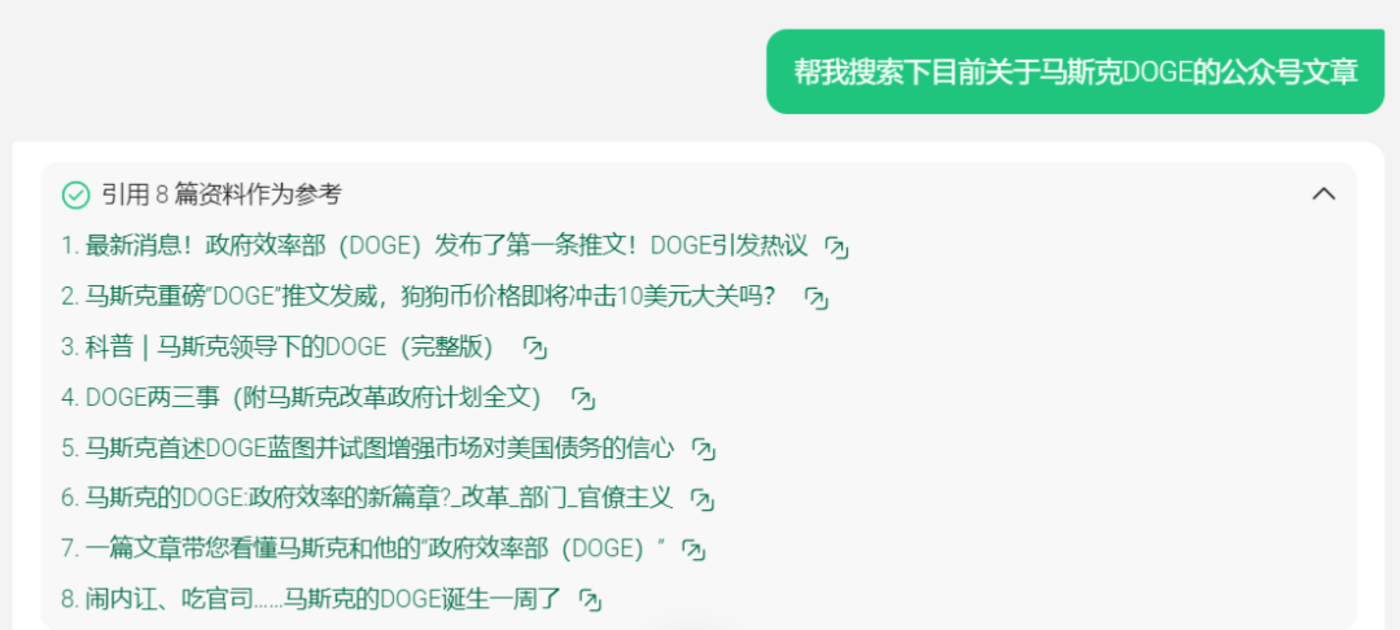

Take search specifically. Leading models such as Kimi and Jimu all offer online search, but almost every Internet-connected AI can only capture public-domain information. They cannot reach private-domain content such as the WeChat Official Accounts platform; only Tencent's own Yuanbao can.

In effect, this private traffic pool keeps the reading interests, browsing records, and other data of a huge number of users firmly in the platform's own hands.

Source: Tencent Yuanbao

Similarly, as the world's largest Chinese-language search engine, the Baidu app currently has 700 million users. It integrates more than 20 functions, including search, maps, Tieba forums, cloud storage, a document library, knowledge, and health.

This means that when users type into the Baidu search box, what they need may not be a simple information lookup but a result that draws on several of these functions and scenarios at once.

This cross-scenario connectivity, and the data associations behind it, are difficult for a third-party AI to achieve by loading plug-ins.

However, for quite some time, the giants that control this vast data and these application scenarios have fielded AI models that perform poorly and cannot meet users' more complex requirements.

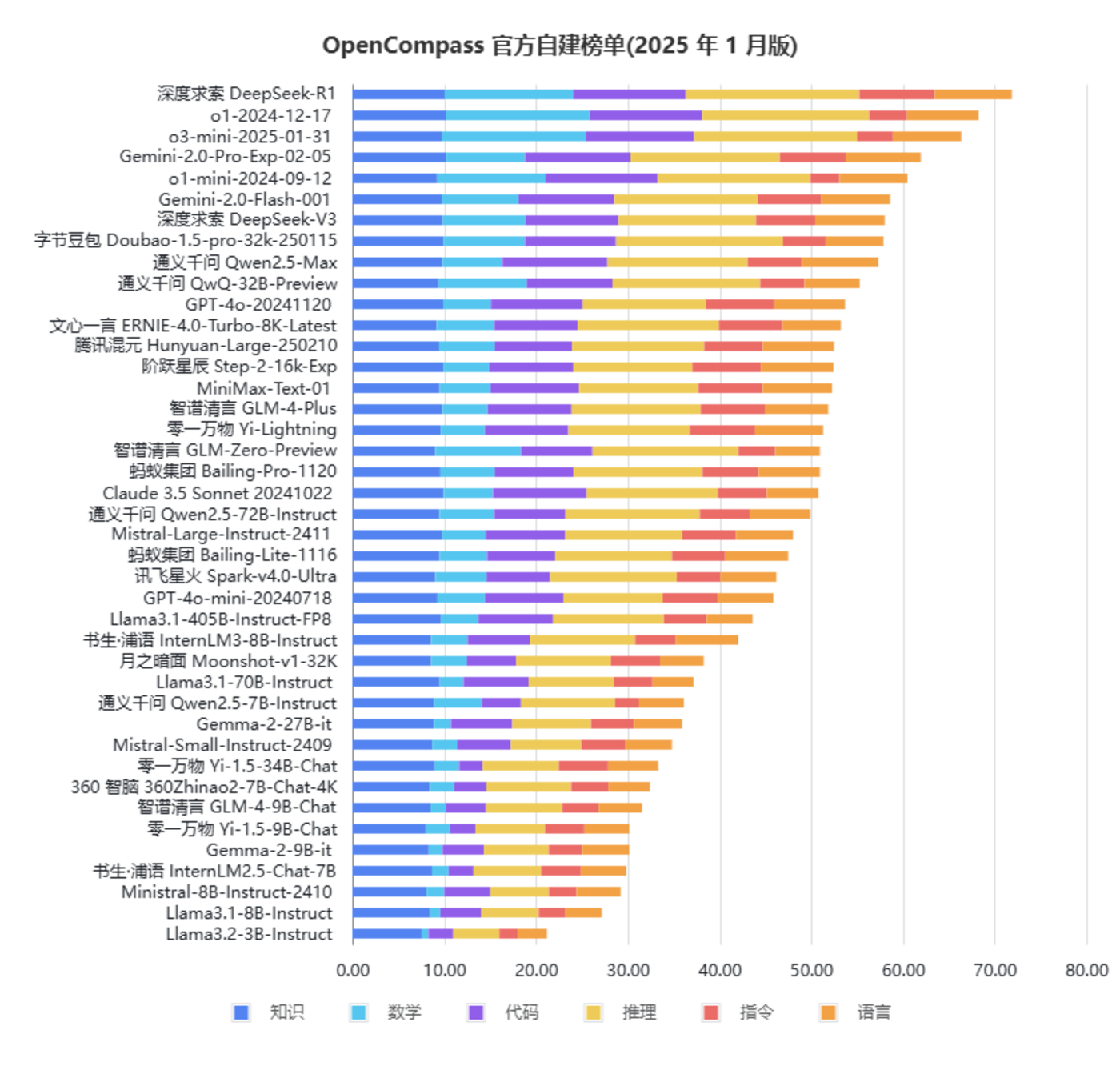

The Sinan model leaderboard shows that Wenxin Yiyan and Hunyuan clearly trail DeepSeek-R1

The result is a split: traditional Internet giants have the entrances but weak models, while AI companies have strong models but no entrances.

This creates a paradox. Technically, today's large models are already very strong at reasoning, mathematics, and programming; OpenAI's o1 and DeepSeek-R1, for example, have reached doctoral level in some tests. Yet because they lack situational data, they still do not feel human enough, do not understand users well enough, and struggle to enter everyday life.

Previously, the most successful AI applications tended to appear in closed, isolated scenarios, while cross-scenario intelligent applications were slow to break through because they are far harder to coordinate among multiple parties.

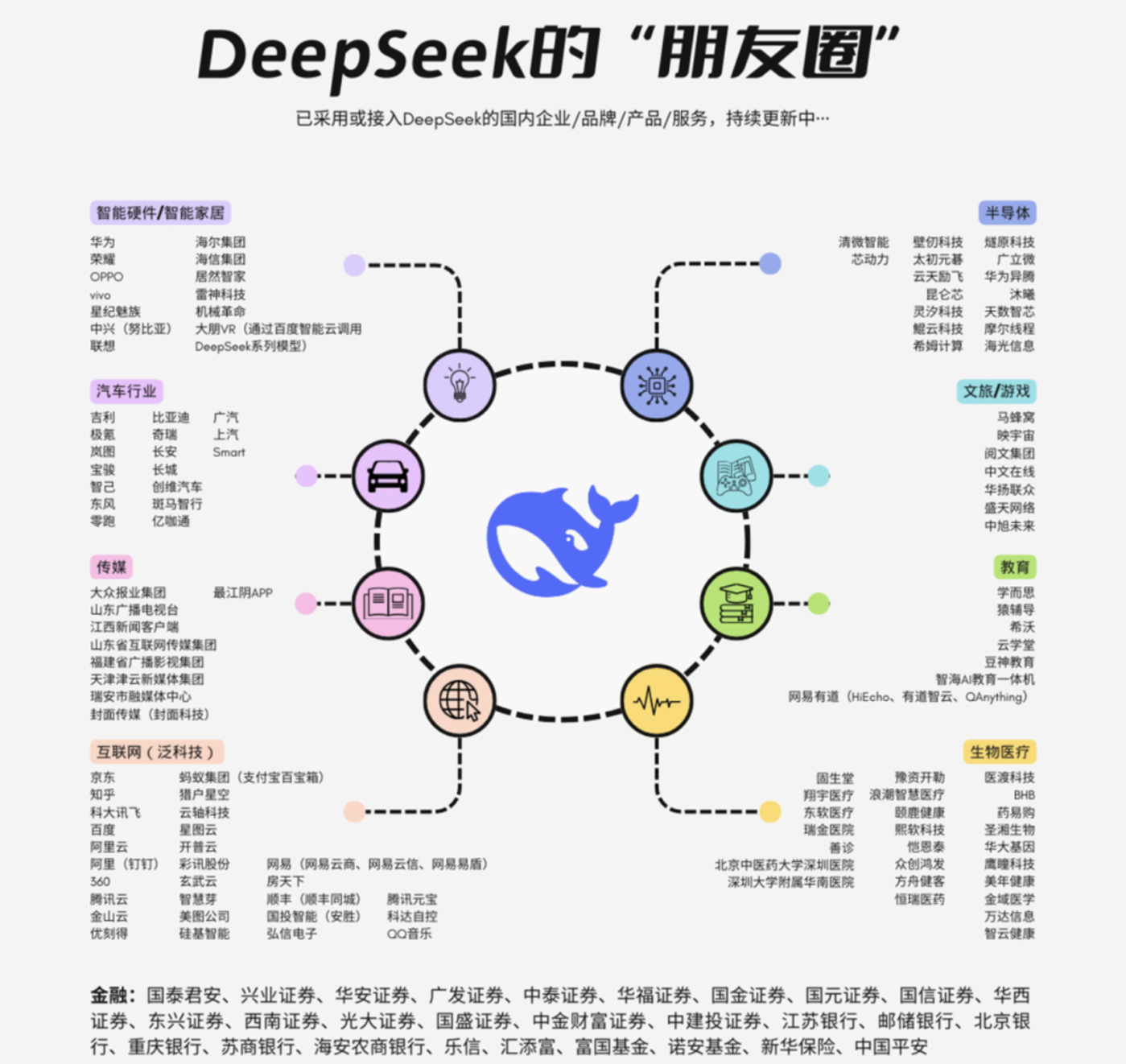

All of this changed completely with the arrival of DeepSeek-R1. Thanks to its strong performance and low cost, DeepSeek's circle of ecosystem partners now spans industries such as smart hardware, automobiles, media, the Internet, and semiconductors.

Source: Zidian Technology

Given this trend, the major giants may have realized that, although there are many large models on the market, the ones that truly dominate in the future are likely to be one or two super models that, like DeepSeek-R1, combine strong performance with low cost.

Therefore, this strategy of stocking your own fish pond with other people's technology amounts to using open-source models as scaffolding to sustain the giants' own huge ecosystems in the AI era. It resembles Android: although Android was open source, Google still controlled the overseas Android ecosystem through GMS, that is, core services such as Google Play Store, Gmail, Google Maps, and YouTube.

After all, technology may become increasingly homogeneous, but scenario innovation and ecosystem synergy will still determine whether the giants can keep leading. The question is whether such a strategy will still work in the AI era.

The secret battle between platform and AI

If they can keep their traffic advantage by relying on the performance of third-party models, will Tencent, Baidu, and other major companies give up their own models?

The answer is no.

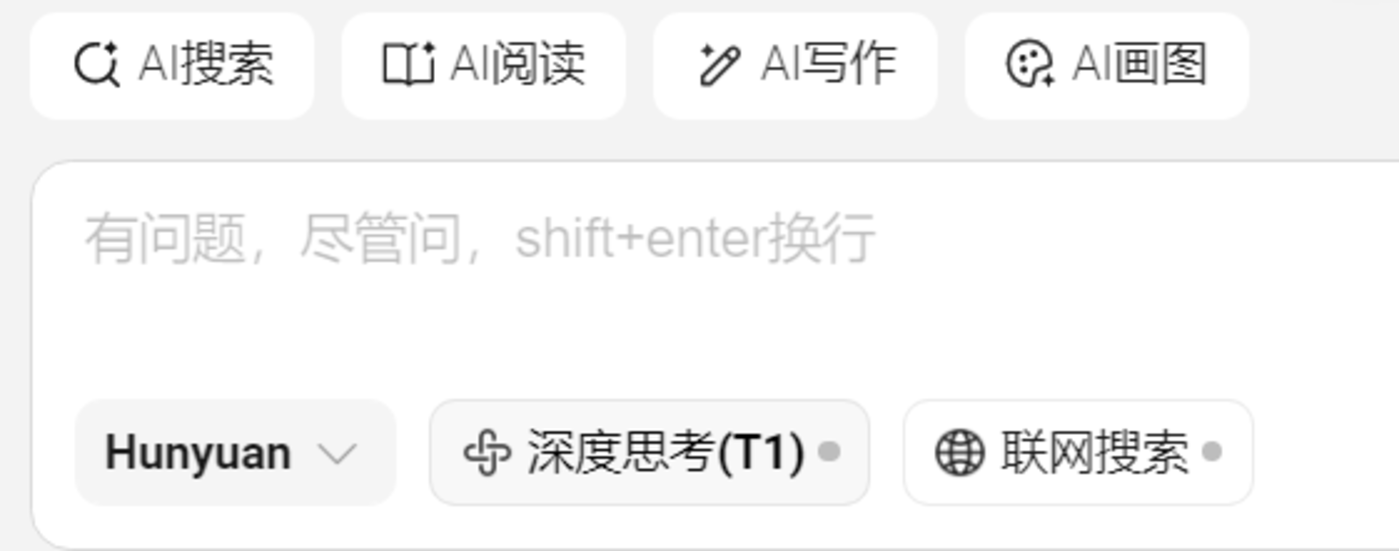

On February 17, shortly after connecting to DeepSeek, Tencent Yuanbao updated its app and launched a grayscale test of T1, the Hunyuan reasoning model.

According to Tencent, T1 is a stronger and faster reasoning model suitable for handling complex tasks. It combines high-quality information sources such as Weixin Official Accounts to make answers more timely and authoritative.

Source: Tencent Yuanbao

Tencent is maintaining the Hunyuan model in parallel with DeepSeek, which shows that the giant has not given up on in-house research. Instead, it is using external technology to cover its shortcomings while trying to balance technological dependence against independent control.

Relying blindly on third-party models would not only strengthen potential competitors but could also cost the giants the technological high ground of the AI era. The precedent is Yahoo, which outsourced its search engine to Google and gradually declined.

Since self-research is a must-go path, can giants easily catch up with DeepSeek-R1?

One might assume that because DeepSeek-R1 is open source, it should be easy to replicate, and that latecomers will easily reach a similar level.

In fact, this view ignores the complexity of model training.

Technically speaking, DeepSeek-R1's MoE (Mixture-of-Experts) architecture contains 671 billion total parameters, but only 37 billion of them are activated for each token during inference (about 5.5% of the total). Although the model is large overall, it uses only a small part of its capacity at any given moment.

In this way, tasks can be completed efficiently without taking every tool out of the toolbox, which saves time and effort.
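
As a rough illustration of this kind of sparse activation, the sketch below routes one input to the top 2 of 8 toy experts and mixes their outputs. The expert functions, the gate scores, and the choice of k are hypothetical values picked for illustration; this is not DeepSeek's actual routing code. The last lines simply apply the article's 5.5% share to the 671 billion total.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_scores, k):
    """Return the indices of the k highest-scoring experts, best first."""
    order = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    return order[:k]

def moe_forward(x, experts, gate_scores, k):
    """Run only the k selected experts and mix their outputs by gate weight."""
    chosen = top_k_route(gate_scores, k)
    weights = softmax([gate_scores[i] for i in chosen])
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen

# Eight hypothetical experts; each one just scales its input differently.
experts = [lambda x, scale=scale: scale * x for scale in range(1, 9)]
# Hypothetical gate scores for a single input.
gate_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

out, chosen = moe_forward(2.0, experts, gate_scores, k=2)
active_fraction = len(chosen) / len(experts)  # share of experts that actually ran

# The article's figures: about 5.5% of 671 billion parameters are active.
total_params_b = 671
active_params_b = total_params_b * 0.055

print(chosen, active_fraction, round(active_params_b, 1))
```

Only the two selected experts are ever called, so compute scales with k rather than with the total number of experts, which is the property the paragraph above describes.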

DeepSeek can do this thanks to an efficient way of allocating work among its experts, used alongside its Multi-head Latent Attention (MLA) mechanism, which compresses the attention cache to save memory.

In short, expert allocation works like this: when the model faces many forks in the road (choices), it scores multiple directions at once and then picks the path that works best. There is no need to try every road, which is efficient and saves resources.

However, even though DeepSeek has disclosed the model's weights, competitors will find it difficult to fully replicate this selection logic, because it rests on a large amount of test data and practical experience in reinforcement-learning optimization.

In other words, this is not just an algorithm adjustment; it requires a deep understanding of the inherent mechanisms of the language model.

Open-source code can provide a framework, but it cannot fully convey this empirical knowledge. Put bluntly, behind its apparent openness DeepSeek-R1 embodies deep engineering experience, which makes it a technology that is easy to learn but hard to master.

Therefore, the race to catch up with DeepSeek is destined to be a competition for AI talent, especially top AI talent.

When it comes to talent, a general rule in the technology industry is that attracting the best people takes more than high salaries. What matters even more is a set of intangible factors such as corporate culture and organizational structure.

In this regard, founder Liang Wenfeng revealed in an interview that, unlike many Chinese technology companies, DeepSeek discourages internal competition and overtime, and gives employees great freedom to choose their own tasks and use computing power.

Because of this, DeepSeek has attracted a group of top AI talent with overseas experience. Core team members such as Pan Zizheng and Junxiao Song, for example, have overseas backgrounds. Pan Zizheng interned at Nvidia and received a full-time offer, but he did not hesitate to join the then little-known DeepSeek, where he became a core contributor to DeepSeek-VL2 and DeepSeek-R1.

This flat, flexible organizational structure is an advantage that large companies such as Baidu and Tencent currently do not have.

Baidu, the weakest overall among BAT (Baidu, Alibaba, and Tencent), already serves as a cautionary tale. After Baidu released its full-year 2024 financial report on February 18, the company's share price closed down 7.51% that day. The decline reflects the market's doubts about the veteran search giant's AI transformation.

Who does the future belong to?

In the AI era, large models need more data and broader operating permissions, while each platform's scenario ecosystem remains independent and fragmented. The contradiction between the two is becoming increasingly significant.

Of course, this reflects both bottlenecks in technical capability and deeper constraints in business logic. Data sharing, for example, involves a redistribution of interests, and existing business models struggle to balance the demands of all parties.

So, in the future Internet ecosystem, will the trend be platform-first or AI-first?

Judging from the current AI competition landscape in China, the "data + scenario" moat formed by the traditional Internet giants' super apps is difficult to shake.

Future breakthroughs are more likely to come from balancing several factors, namely data mobility, scenario fit, and commercial feasibility, than from progress along any single dimension.

In the short term, over the next 1-2 years, traditional Internet giants and third-party AI companies may achieve win-win results through cooperation: the giants use third parties' cutting-edge technology to improve their services, and third parties use the giants' user bases and scenarios to speed up deployment.

This model may blur the boundaries of traditional ecosystems and lead to more cross-border collaboration rather than pure competition or dependence.

However, in the long term, over 3-5 years, third parties may strike back if disruptive technologies appear. For example, if AI greatly improves cognitive efficiency and its decision-making and operational efficiency in specialized areas far exceed those of humans, the giants will be forced to open their interfaces further.

For the foreseeable future, under the shifting interplay of technological breakthroughs, policy, and business models, the moat of the traditional giants is not absolutely unshakable, and an AI-first trend may gradually emerge in specific fields.