(Photo: Zhijia Lin, editor of GuShiio.comAGI)

On March 6, GuShiio.comAGI learned that real-time interactive technology company SoundNet released a conversational AI engine in Beijing that afternoon.

The engine reportedly offers ultra-low-latency responses of 650 ms, graceful interruption handling, compatibility with all models, and a selective attention locking feature that blocks 95% of ambient voices. From the engine's console, it reportedly takes only two lines of code and 15 minutes to build a speaking AI agent on top of any model, and the engine supports upgrading text-based large models into conversational multimodal large models.

On pricing, SoundNet's internal calculations put the conversational AI engine at less than 0.1 yuan per minute, specifically 0.098 yuan per minute. A conversation between a user and the AI averages about 3 rounds of Q&A and lasts about 21.1 seconds, so a single conversation costs only about 0.03 yuan. At 15 conversations a month, the monthly cost is less than 0.5 yuan and the annual cost is only 5 yuan.
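The pricing figures can be checked with a short calculation. The sketch below is only an illustration: it assumes that usage is billed pro rata by the second, which the article does not state, and the function and variable names are hypothetical. Only the per-minute price, the average conversation duration, and the monthly conversation count come from the article.

```python
# Figures quoted in the article.
PRICE_PER_MINUTE_YUAN = 0.098
AVG_CONVERSATION_SECONDS = 21.1
CONVERSATIONS_PER_MONTH = 15


def cost_per_conversation(price_per_minute, seconds):
    """Cost of one conversation, assuming pro rata per-second billing."""
    return price_per_minute * seconds / 60


def monthly_cost(per_conversation, conversations_per_month):
    """Cost of a month of conversations at a fixed per-conversation cost."""
    return per_conversation * conversations_per_month


# Unrounded per-conversation cost, and the same value rounded to 0.01 yuan.
exact = cost_per_conversation(PRICE_PER_MINUTE_YUAN, AVG_CONVERSATION_SECONDS)
rounded = round(exact, 2)

monthly_exact = monthly_cost(exact, CONVERSATIONS_PER_MONTH)
monthly_rounded = monthly_cost(rounded, CONVERSATIONS_PER_MONTH)

annual_exact = monthly_exact * 12
annual_rounded = monthly_rounded * 12

print(f"per conversation: {exact:.4f} yuan (rounded: {rounded:.2f})")
print(f"monthly: {monthly_exact:.2f} yuan (from rounded cost: {monthly_rounded:.2f})")
print(f"annual: {annual_exact:.2f} yuan (from rounded cost: {annual_rounded:.2f})")
```

Under these assumptions, one conversation comes to roughly 0.034 yuan. Carrying that unrounded value forward gives about 0.52 yuan a month and 6.2 yuan a year, while starting from the rounded 0.03 yuan gives 0.45 yuan a month and 5.4 yuan a year. The article's monthly and annual figures are therefore closer to the rounded per-conversation cost.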

After the event, Yao Guanghua, head of SoundNet's AI RTE product line, told GuShiio.comAGI and other outlets that this is the world's first conversational AI engine. The team began developing it during the Spring Festival. He said the DeepSeek craze in particular has had a positive effect on domestic companies and the technology community. As if preparing for the college entrance examination, the company brought its core product and R&D teams together and moved quickly on execution and decision-making. The public beta was released on February 18, and the product is now officially available to the public.

Speaking of the newly released Manus AI Agent product, Yao Guanghua said that Manus and conversational AI products are not the same species. In terms of underlying logic, conversational AI is a disruptive mode of interaction, mainly because dialogue produces emotional value. If it develops well, it may become something closer to companionship than a tool, whereas AI agents are currently still understood as tools. Replacing many existing kinds of tools is not the ultimate goal, he said. In developing conversational AI products, the emphasis on emotional companionship and on the human element has increased. “But we feel that the one released this morning (Manus) is a tool,” he said.

SoundNet is reportedly committed to building critical infrastructure for AI voice agents. The company's TEN (Transformative Extensions Network) service has been used with AI companies and platforms including DeepSeek, Alibaba's Tongyi Qwen, Step Star, MiniMax, Amazon Bedrock, Baidu, and iFlytek. For example, on October 24 last year, SoundNet announced that it was working with MiniMax to refine China's first Realtime API.

The financial report released on February 24 showed that in the fourth quarter of 2024, Agora, Inc. (NASDAQ: API) recorded total revenue of US$34.5 million, a year-on-year decrease of 4.4%. Net profit under U.S. Generally Accepted Accounting Principles (GAAP) was US$160,000 (approximately RMB 1.1597 million), a turn from loss to profit. For fiscal year 2024, the group's total revenue was US$133.3 million, a decrease of 5.9% from the previous year.

In Yao Guanghua’s view, the value brought by conversational Agents to users includes the intelligent value of solving problems, the emotional value of emotional resonance, and the time value of improving efficiency.

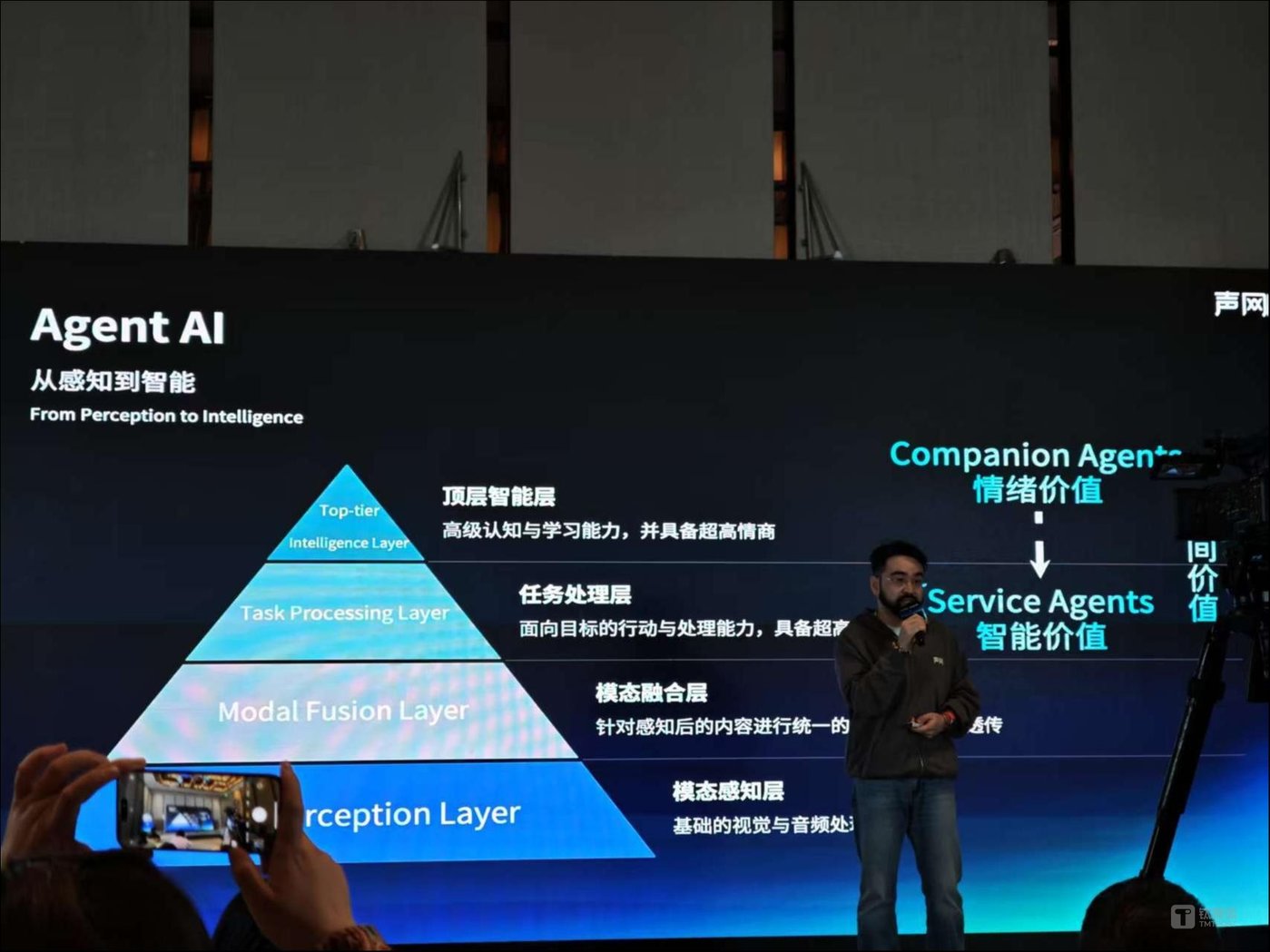

From perception to intelligence, an AI agent mainly comprises four layers. The modal perception layer covers the agent's need to perceive information in the physical world and to process and transform audio and video. The modal fusion layer applies unified multimodal processing to the perceived information. The task processing layer handles and resolves goals and tasks. The top-level intelligence layer provides advanced cognitive and learning capabilities along with high emotional intelligence. Of these, the SoundNet conversational AI engine covers the modal perception layer and the modal fusion layer.

On the topic of hallucinations, He Lipeng, a product lead at SoundNet, told GuShiio.comAGI that large-model hallucinations are not something to be (completely) eradicated but something to be reduced. Doing so requires the model itself to keep iterating. On the conversational AI engine in particular, the team has done a great deal of work on noise reduction and audio processing.

“It is just like us talking today. You may feel that my answer is not what you were looking for, because misunderstandings happen between people. But the best thing about dialogue is that when you find the other person has misunderstood, you can say ‘that is not what I meant’, interrupt, and state directly what you actually mean. It is the same between people,” He Lipeng said. He added that people also “hallucinate” when communicating, because their knowledge and backgrounds differ. In addition, longer context and the continued strengthening of chain-of-thought (CoT) reasoning are important ways to reduce model hallucinations.

Yao Guanghua pointed out that the conversational AI engine can already be applied to many companionship-oriented smart hardware products and educational scenarios.

Zhao Bin, founder and CEO of SoundNet, said that generative AI has brought transformative opportunities, especially in enabling real-time voice interaction between people and AI models. Many large language models do not yet provide voice interaction, and those that do have not yet optimized the experience. To bridge this gap, the company launched its conversational AI engine, which is designed to deliver natural conversational dynamics, including intelligent pause and interruption handling, advanced voice processing, and ultra-low latency.

“Over the past few months, we have seen breakthroughs ranging from AI reasoning models to Google's multimodal models, and this transformation has brought extraordinary opportunities to our business,” Zhao Bin said. “We firmly believe that this breakthrough innovation will accelerate the adoption of interactive AI across industries and become the core driver of the company's future growth.”