(Photo source: The Verge)

The craze for DeepSeek, the Chinese open-source AI company, continues to intensify and has become a nationwide talking point, setting off a new wave of AI enthusiasm. Meanwhile, American business circles, academia and government agencies have launched a counterattack.

The first response came from American companies. In the early morning of February 7, OpenAI announced updates to its o3-mini and o3-mini-high models, making their reasoning steps more transparent for both free and paying users, increasing memory for GPT services, and exposing o3-mini’s chain of thought.

The day before, Google released the full version of its Gemini 2.0 model and brought the reasoning model Gemini 2.0 Flash Thinking into the Gemini app to answer complex questions. Google CEO Sundar Pichai said the company plans to invest US$75 billion in fiscal 2025 to develop AI technology and compete with rivals such as DeepSeek and OpenAI.

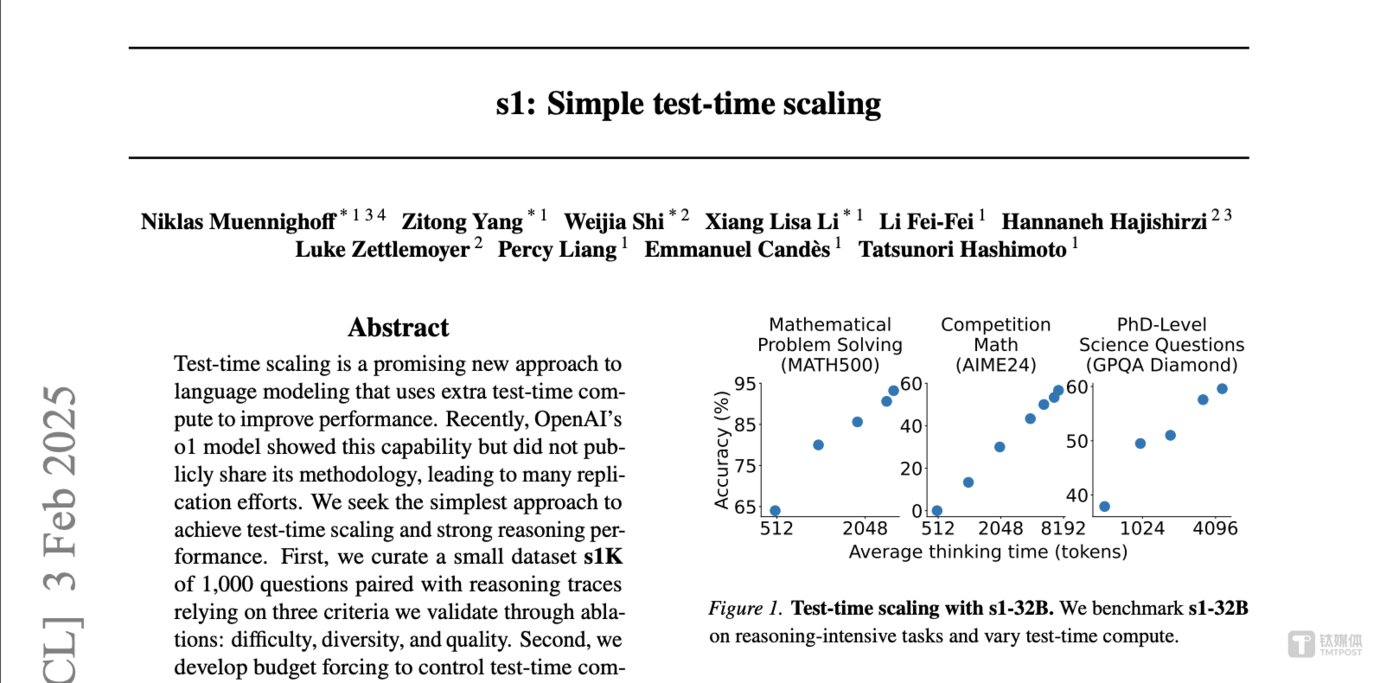

Secondly, in academia, a recent research paper has attracted attention. AI researchers including Li Feifei, a professor at Stanford University, spent less than US$50 (approximately RMB 364.61) on cloud computing resources to train the open-source reasoning model s1, using Alibaba’s Tongyi Qwen2.5-32B-Instruct as the base model and applying supervised fine-tuning (SFT). In mathematics and coding tests, s1 performs comparably to cutting-edge reasoning models such as OpenAI’s o1 and DeepSeek’s R1. One of the paper’s authors said the rental compute needed to train s1 ultimately cost only about US$20 (about 146 yuan).

Finally, there is the legislative branch. According to reports, U.S. Reps. Darin LaHood (R-Ill.) and Josh Gottheimer (D-N.J.), citing data security concerns, will introduce a bill in Congress within the next two days to ban the use of DeepSeek on federal government devices. Earlier, U.S. Senator Josh Hawley proposed the “Decoupling U.S. AI Capabilities from China” bill, under which downloading or using DeepSeek would be a crime punishable by up to 20 years in prison.

Clearly, as DeepSeek’s popularity grows, Americans from the private sector to Congress, and from academia to industry, are looking for ways to confront it. At the same time, Italy, Australia, South Korea and other countries have successively introduced policies restricting or banning DeepSeek.

Li Dan (pseudonym), a domestic AI industry insider, told GuShiio.com AGI on February 6 that DeepSeek’s success at least proves that, at this stage, the United States cannot block China’s AI development by restricting chip exports, and that China can still catch up through open-source technology and limited computing power. In the long run, however, constrained by computing power and data, China’s AI technology will still be unable to surpass American technology, and China needs to do more work on commercial applications.

Fu Cong, China’s Permanent Representative to the United Nations, said: “Never underestimate the ingenuity of Chinese researchers. DeepSeek has caused a global sensation, as well as anxiety and panic among some people, showing that technological containment and restrictions do not work. This is a lesson that the world, especially the United States, needs to learn.”

For less than $50, Li Feifei’s team gave DeepSeek a heavy blow

The embrace, panic and confrontation brought about by the DeepSeek craze in the AI industry continue.

In China, within just six days, dozens of cloud providers including Tencent Cloud, Alibaba Cloud, Huawei Cloud, Baidu AI Cloud and Volcano Engine; more than 10 domestic AI chip companies including Huawei Ascend, MetaX, Moore Threads and Biren; and the three major carriers, China Mobile, China Unicom and China Telecom, successively announced that they had adapted, launched or integrated DeepSeek model services.

However, this widespread use has left the DeepSeek platform short of server computing power. On February 6, DeepSeek confirmed that it had suspended top-ups for its API service: “Server resources are currently tight. To avoid affecting your business, we have suspended API top-ups. Existing balances can still be used. Thank you for your understanding!”

The official price list shows that the DeepSeek-Chat model’s discount period runs until 24:00 on February 8. After it ends, the model will be charged up to 2 yuan per million input tokens and 8 yuan per million output tokens; DeepSeek-Reasoner will cost 4 yuan per million input tokens and 16 yuan per million output tokens.
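To make the per-million-token pricing concrete, here is a minimal Python sketch that estimates the post-discount charge for a workload. It treats the “up to” figures above as flat rates and ignores any cheaper rate for cached input; that simplification, the `PRICES` table and the `api_cost_yuan` helper are assumptions for illustration, not DeepSeek’s actual billing logic.

```python
# Post-discount prices in yuan per million tokens, as quoted above.
# Assumption: the "up to" rates are applied as flat rates (no cache discount).
PRICES = {
    "deepseek-chat": {"input": 2.0, "output": 8.0},
    "deepseek-reasoner": {"input": 4.0, "output": 16.0},
}


def api_cost_yuan(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the charge in yuan for one workload at the quoted rates."""
    rate = PRICES[model]
    return (input_tokens * rate["input"] + output_tokens * rate["output"]) / 1_000_000


# Example workload: 500k input tokens and 250k output tokens.
print(api_cost_yuan("deepseek-chat", 500_000, 250_000))      # 3.0
print(api_cost_yuan("deepseek-reasoner", 500_000, 250_000))  # 6.0
```

At these rates the same token mix costs exactly twice as much on DeepSeek-Reasoner as on DeepSeek-Chat, because both the input and the output price double.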

On the evening of the same day, DeepSeek published a statement saying it had recently noticed counterfeit accounts and false information related to DeepSeek that had misled and troubled the public. “Apart from the official DeepSeek user WeChat group, we have never set up any groups on other domestic platforms. Any fees charged in the name of an official DeepSeek group are fraudulent. Please verify carefully to avoid financial losses. Thank you for your continued support and attention. We will keep working to develop more innovative, professional and efficient models and continue to share them with the open-source community.”

While adoption boomed in China, researchers in the United States began replicating the approach, demonstrating a lower-cost path to AI development.

In early February, Chinese-American scientist Li Feifei and other researchers from Stanford University and the University of Washington used only 1,000 samples and 16 H100 GPUs, at a cloud computing cost of less than US$50, to train s1, a 32-billion-parameter open-source reasoning model that can match o1-preview and DeepSeek R1, in just 26 minutes.

According to the paper, the model uses Qwen2.5-32B-Instruct from Alibaba’s Tongyi team as its base model. The researchers distilled reasoning traces from an experimental version of Google DeepMind’s reasoning model Gemini 2.0 Flash Thinking and applied SFT and other techniques to obtain s1, which performed strongly on mathematics and coding tests. One of the authors said the computing resources needed to train s1 can currently be rented for about 146 yuan.

The paper, “s1: Simple test-time scaling,” has been posted on arXiv, and s1 has been open-sourced on GitHub, where the research team has released the data and code used to train it.

Based on the paper, GuShiio.com AGI highlights three key technical points: distillation, SFT and test-time intervention.

A distilled model is obtained through knowledge distillation. The core principle is to transfer the knowledge of a large, complex teacher model to a small, simple student model. This typically involves softening the teacher’s output probability distribution with a temperature parameter and combining several loss functions to carry out the transfer. Variants such as output-based, feature-based and relation-based distillation, trained offline, online or through self-distillation, are widely used in mobile deployment, real-time inference and edge computing, because they cut computation and storage costs while maintaining good performance. Models such as DeepSeek R1 and s1 both adopt distillation strategies.

In the view of Dr. Wang Weijia, a Silicon Valley investor, distillation means using a large model to teach a small one: knowledge in a particular vertical domain is extracted from the large model and placed into a small model, so the small model does not need to be trained from scratch. “It is like all-round masters such as Socrates, Aristotle and Leonardo da Vinci training a mathematics teacher, a physics teacher and a chemistry teacher. That is distillation. Ordinary people may not really understand the word ‘distillation’, but everyone understands it when you describe it as a master leading an apprentice.”
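The temperature-and-loss mechanism described above can be sketched in a few lines of framework-free Python. This is a minimal illustration of the classic logit-matching form of knowledge distillation: teacher and student logits are softened with a temperature T, the student is penalized by the KL divergence from the teacher’s soft distribution, and that term is mixed with ordinary cross-entropy on the true label. The weighting `alpha`, the temperature value and the example logits are assumptions for illustration. Note that s1 is distilled differently, by fine-tuning on reasoning traces generated by the teacher model, so this is not the s1 training code.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def cross_entropy(probs, label):
    """Negative log-probability assigned to the correct class."""
    return -math.log(probs[label])


def distillation_loss(student_logits, teacher_logits, label, temperature=2.0, alpha=0.5):
    """alpha * soft (teacher-matching) loss + (1 - alpha) * hard-label loss.

    The soft term is multiplied by T^2, a common convention that keeps its
    gradient magnitude comparable as the temperature changes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    hard = cross_entropy(softmax(student_logits), label)
    return alpha * soft + (1 - alpha) * hard


teacher_logits = [4.0, 1.0, 0.5]
student_logits = [2.0, 1.5, 0.2]
print(distillation_loss(student_logits, teacher_logits, label=0))
```

Raising the temperature flattens the teacher’s distribution, exposing how it ranks the wrong answers relative to one another, which is the extra signal the student learns from beyond the hard label.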

According to the paper, the researchers collected 59,029 questions from 16 different sources, including NuminaMath, MATH, OlympicArena and some original datasets. After deduplication and decontamination, quality filtering reduced the pool to 51,581 samples, 384 of which were identified as high quality. Two models, Qwen2.5-7B-Instruct and Qwen2.5-32B-Instruct, were then used to evaluate the difficulty of each question.

The difficulty assessment further reduced the pool to 24,496 samples, from which the final s1 training set of 1,000 samples was selected. It covers problems in mathematics and other scientific fields, each paired with a high-quality reasoning trace distilled from the teacher model.
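The filtering steps above can be sketched as a small pipeline. This Python sketch is only an illustration under stated assumptions: deduplication and decontamination use exact matches on normalized text (real pipelines often use fuzzier n-gram overlap), and the difficulty rule, keeping a question only if none of the reference models solved it, is an assumption rather than a detail given in this article. `Question`, `solved_by` and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    text: str
    source: str  # which source dataset the question came from


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies compare equal."""
    return " ".join(text.lower().split())


def deduplicate(questions: list[Question]) -> list[Question]:
    """Keep the first occurrence of each normalized question text."""
    seen: set[str] = set()
    unique = []
    for q in questions:
        key = normalize(q.text)
        if key not in seen:
            seen.add(key)
            unique.append(q)
    return unique


def decontaminate(questions: list[Question], eval_texts: list[str]) -> list[Question]:
    """Drop questions whose normalized text also appears in an evaluation set."""
    banned = {normalize(t) for t in eval_texts}
    return [q for q in questions if normalize(q.text) not in banned]


def difficulty_filter(questions: list[Question], solved_by: dict[str, list[bool]]) -> list[Question]:
    """Keep questions that none of the reference models solved (hypothetical rule).

    `solved_by` maps question text to one boolean per reference model.
    """
    return [q for q in questions if not any(solved_by.get(q.text, []))]


pool = [
    Question("What is 2+2?", "math_a"),
    Question("what is  2+2?", "math_b"),        # duplicate after normalization
    Question("Prove that x > 0.", "olympiad"),  # also appears in an eval set
    Question("Count the lattice paths.", "original"),
]
kept = difficulty_filter(
    decontaminate(deduplicate(pool), eval_texts=["Prove that x > 0."]),
    solved_by={"What is 2+2?": [True, True], "Count the lattice paths.": [False, False]},
)
print([q.source for q in kept])  # ['original']
```

Each stage only ever removes items, so the pool shrinks monotonically, mirroring how the question count above falls from 59,029 to the final 1,000.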

SFT, or supervised fine-tuning, is a common machine-learning technique. A base model is first pre-trained on large-scale unlabeled data to learn the basic structure of, and knowledge in, that data. A labeled dataset is then collected for a specific task and the pre-trained model is trained further on it: the loss between its predictions and the correct labels is computed, and an optimization algorithm adjusts the model parameters so that its predictions on that task become more accurate. The technique is widely used in natural language processing (for example text classification and dialogue systems), image processing, recommendation systems and other fields.

In the s1 paper, the researchers rely heavily on SFT, fine-tuning the Alibaba Tongyi Qwen base model on the selected samples to produce s1.
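To illustrate the SFT loop described above (start from existing parameters, compute the loss against labels, let an optimizer adjust the parameters), here is a deliberately tiny Python sketch. It fine-tunes a two-feature logistic-regression classifier with plain gradient descent rather than a language model; the data, learning rate and starting weights are invented for illustration. In broad terms, LLM fine-tuning such as s1’s applies the same idea with a token-level cross-entropy loss over billions of parameters.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of label 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def mean_loss(w, b, data):
    """Mean binary cross-entropy between predictions and the correct labels."""
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def fine_tune(w, b, data, lr=0.5, epochs=100):
    """Full-batch gradient descent on labeled data, starting from the given parameters."""
    w = list(w)
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y  # gradient of the BCE loss w.r.t. the logit
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical "pretrained" starting point and a small labeled task dataset.
pretrained_w, pretrained_b = [0.0, 0.0], 0.0
labeled = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([1.0, 0.2], 1), ([0.1, 1.0], 0)]

before = mean_loss(pretrained_w, pretrained_b, labeled)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, labeled)
after = mean_loss(tuned_w, tuned_b, labeled)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

The starting weights play the role of the pre-trained model; the labeled examples play the role of the task-specific dataset, and the falling loss is what "more accurate predictions on the specific task" means in practice.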

The third point is test-time intervention, which shapes how the model reasons at inference time and ultimately determines its performance.

Test-time intervention uses various methods to adjust, optimize or influence a model’s output or decision-making process at inference time. Such interventions can improve performance and prediction accuracy and make the model more stable or interpretable. They may include specific preprocessing of input data, introducing additional information or constraints, adjusting model parameters or hyperparameters, and applying particular post-processing strategies. In s1, test-time intervention is mainly achieved through two methods, budget forcing and rejection sampling, which give s1 better chain-of-thought (CoT) capabilities, better control over its reasoning behavior and stronger problem-solving ability.
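The s1 paper describes budget forcing as appending an end-of-thinking delimiter once a maximum token budget is reached, and suppressing that delimiter and appending “Wait” when the model tries to stop too early. The Python sketch below mimics that control loop over a hypothetical `next_token` function standing in for a real model; `END_THINK`, the function names and the toy models are assumptions. It also includes a simple length-based rejection sampler, which resamples whole traces until one fits a length limit, as one plausible reading of the rejection sampling mentioned above.

```python
from typing import Callable, List, Optional

END_THINK = "<end_think>"  # hypothetical end-of-thinking delimiter


def budget_force(next_token: Callable[[List[str]], str], prompt: List[str],
                 min_tokens: int, max_tokens: int, wait_token: str = "Wait") -> List[str]:
    """Generate a thinking trace whose length is pushed into [min_tokens, max_tokens].

    - If the model tries to stop before min_tokens, the delimiter is suppressed
      and wait_token is appended instead, nudging it to keep reasoning.
    - Once max_tokens thinking tokens exist, generation is cut off and
      END_THINK is appended, forcing the model to move on to its answer.
    """
    trace: List[str] = []
    while len(trace) < max_tokens:
        tok = next_token(prompt + trace)
        if tok == END_THINK:
            if len(trace) < min_tokens:
                trace.append(wait_token)  # suppress the stop and nudge the model on
                continue
            break  # the model stopped on its own within the budget
        trace.append(tok)
    return trace + [END_THINK]  # close the thinking section (forced if the budget was hit)


def rejection_sample(sample_trace: Callable[[], List[str]], max_len: int,
                     max_attempts: int = 100) -> Optional[List[str]]:
    """Resample whole traces until one fits within max_len tokens; None if none do."""
    for _ in range(max_attempts):
        trace = sample_trace()
        if len(trace) <= max_len:
            return trace
    return None


def never_stops(ctx: List[str]) -> str:
    return "step"  # toy model that would reason forever


def stops_at_once(ctx: List[str]) -> str:
    return END_THINK  # toy model that always wants to stop immediately


print(budget_force(never_stops, ["Q"], min_tokens=0, max_tokens=3))
# ['step', 'step', 'step', '<end_think>']
print(budget_force(stops_at_once, ["Q"], min_tokens=2, max_tokens=10))
# ['Wait', 'Wait', '<end_think>']
```

The design difference is that budget forcing edits a single trace in place, while rejection sampling throws away entire traces, which can waste many generations when the length limit is tight.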

As the paper puts it, language models with strong reasoning capabilities have the potential to greatly improve human productivity, from assisting complex decision-making to driving scientific breakthroughs. However, recent advances in reasoning, such as OpenAI’s o1, lack transparency, which limits broader research progress. The field of reasoning therefore needs to be advanced in a fully open manner that promotes innovation and collaboration, accelerating progress that ultimately benefits society.

However, s1’s limitations cannot be ignored. Because it is built on Alibaba’s Tongyi Qwen model, its controllability cannot be guaranteed, and 1,000 high-quality samples are not enough to build the ability to solve complex problems. How to keep improving model performance while reducing training costs therefore remains an important topic in AI research. As technology advances and algorithms are optimized, we may yet see more low-cost, high-performance AI models emerge.

Restrictions on DeepSeek spread worldwide, but Wall Street questions tech giants’ AI investment

On February 7, two major South Korean state-owned energy companies announced bans on the use of DeepSeek. South Korea’s acting president, Choi Sang-mok, called DeepSeek “a new shock” and announced a new fund of 34 trillion won (about 171 billion yuan) to support AI and semiconductor development.

He said South Korea’s goal is to become one of the world’s top three AI powers. However, South Korean media noted that the country has only a little over 2,000 GPUs and is seriously short of computing resources.

Earlier, on February 4, Australia, Ireland, France and Italy all announced sweeping restrictions on the use of DeepSeek’s AI services. In the United States, Congress, the Pentagon, NASA and the Navy have all considered or begun banning DeepSeek, and Texas became the first U.S. state to ban its use on government devices.

White House press secretary Karoline Leavitt said the United States is currently studying possible security implications.

In the early morning of February 7, U.S. Reps. Darin LaHood, Republican of Illinois, and Josh Gottheimer, Democrat of New Jersey, introduced a bill on national security grounds, saying that DeepSeek’s technology poses risks, that the United States cannot afford to lose its technology competition with China, and that DeepSeek is “deeply concerning” for the United States.

LaHood had said earlier in Congress that DeepSeek has been called AI’s “Sputnik moment” for the United States. “DeepSeek all but proves that China is catching up with the United States in AI. Its innovation is shocking, but China has not yet achieved its ultimate goal of beating the United States to AGI, and we cannot allow that to happen. That is why I have made AI a top priority in Congress. American innovation is my north star, and it will remain so. I hope our investment in AI stays strong and that legislation directs more investment toward developing AI technology.”

Clearly, countries led by the United States are questioning and testing the Chinese AI boom sparked by DeepSeek. At the same time, however, American tech giants such as Meta and Google face mounting pressure from Wall Street over their ever-larger AI investments.

So far, the four major technology giants Meta, Microsoft, Google, and Amazon have announced that they will invest a total of more than US$320 billion in 2025 to develop AI technology.

Specifically, Meta plans capital expenditure of US$60 billion to US$65 billion in 2025, up about 40% from 2024, to invest in AI; Microsoft plans to invest US$80 billion in AI infrastructure; Google expects capital expenditure of US$75 billion in 2025, a jump of more than 42.7% from last year; and Amazon plans to invest about US$100 billion, which its CFO said will go mainly toward AI service demand and AWS cloud infrastructure.

However, Futurum Group analyst Daniel Newman said that, given these huge expenditures, U.S. tech giants urgently need to increase the revenue return on AI. “What is happening so far (with DeepSeek) is a wake-up call for the United States … For now, there is too much AI capital expenditure and not enough consumption.”

Data shows that DeepSeek-V3, a large model with 671 billion parameters, was pre-trained on only 2,048 GPUs for about two months at a cost of just US$5.576 million, yet its final performance surpassed models such as OpenAI-o1.
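The US$5.576 million figure can be reproduced with simple arithmetic. The inputs below come from the DeepSeek-V3 technical report rather than this article: about 2.788 million H800 GPU-hours for the official training run (about 2.664 million of them for pre-training), priced at an assumed rental rate of US$2 per GPU-hour. The report notes that this excludes earlier research and ablation experiments, which is worth keeping in mind when comparing it with the tech giants’ budgets. The Python sketch also checks that the pre-training GPU-hours spread over 2,048 GPUs correspond to roughly two months of wall-clock time.

```python
# Figures from the DeepSeek-V3 technical report (H800 GPUs, assumed US$2 per GPU-hour).
TOTAL_GPU_HOURS = 2_788_000     # pre-training + context extension + post-training
PRETRAIN_GPU_HOURS = 2_664_000  # pre-training stage only
PRICE_PER_GPU_HOUR_USD = 2.0
NUM_GPUS = 2048

training_cost_usd = TOTAL_GPU_HOURS * PRICE_PER_GPU_HOUR_USD
# Wall-clock days, assuming all 2,048 GPUs run in parallel around the clock.
pretrain_days = PRETRAIN_GPU_HOURS / NUM_GPUS / 24

print(f"Estimated training cost: ${training_cost_usd:,.0f}")    # Estimated training cost: $5,576,000
print(f"Pre-training wall-clock time: {pretrain_days:.1f} days")  # Pre-training wall-clock time: 54.2 days
```

The result is a rental-price estimate for GPU time only; it says nothing about the cost of owning the hardware or paying the research team.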

Jake Behan, head of capital markets at Direxion, believes the question now is not when AI spending will pay off, but whether it can be justified.

“We don’t think all companies will turn to DeepSeek immediately, but the low-cost, low-resource AI model released by DeepSeek shows that AI will become increasingly commoditized. The real differentiation lies in platform capabilities that support higher accuracy, security and customization for specific needs, which is where Microsoft needs to invest,” said Valoir analyst Rebecca Wettemann.

On the other hand, some analysts argue that DeepSeek actually proves there is strong demand for computing power, and that AI will require massive infrastructure investment to meet market demand.

On February 1, Greg Jensen, co-chief investment officer (CIO) of Bridgewater, and Jas Sekhon, chief scientist of AIA Labs, Bridgewater’s internal team that uses AI for trading, published a note calling DeepSeek’s achievements important and impressive. In a very short time, DeepSeek has become one of the world’s top five AI labs, with results only a few months behind frontier models at significantly lower cost. DeepSeek has now overtaken Meta as the leader in open-source large language models (LLMs).

“Admittedly, the $6 million figure does represent significant progress,” the note said. “However, given advances in AI software and hardware over time, such efficiency gains were foreseeable.”

Bridgewater further argued that gains in inference efficiency mean people will buy more inference capacity, and that demand for inference has not yet reached the point of diminishing returns. Much of the demand for AI, for example, comes not from direct use of large models but from other applications of generative AI, such as robotics, autonomous driving, chip design and biology, for which LLMs are often just one input. As LLMs improve, the computing bottleneck shifts to other parts of the pipeline, and demand from these applications will be unlocked.

Bridgewater noted that DeepSeek’s results show AI development and efficiency are accelerating, which is good news for most participants in the AI ecosystem and favorable for new AI investment. This means demand for computing power has not slowed and may even accelerate. Companies like Microsoft and Google will spare no effort to commit whatever resources are needed to stay at the forefront, and these hyperscale cloud providers will benefit from falling model costs and rising inference demand.

Meta CEO Mark Zuckerberg said he still believes that investing heavily in the company’s AI infrastructure will be a strategic advantage. “It may be too early to judge the trajectory of infrastructure and capital spending. In the long run, investing heavily in capital expenditure and infrastructure will be a strategic advantage.”

Microsoft CEO Satya Nadella believes increased AI spending will help ease the capacity constraints on the company’s AI business. He added: “As AI becomes more efficient and accessible, we will see demand grow exponentially.”

Yann LeCun, Turing Award winner and Meta’s chief AI scientist, stressed that the investor sell-off in American tech stocks after DeepSeek’s rise stems from a major misunderstanding of AI infrastructure investment. A large share of those billions of dollars goes to inference infrastructure rather than training. Serving AI assistants to billions of people requires enormous computation, and once video understanding, reasoning, large-scale memory and other capabilities are built into an AI system, inference costs rise further.

DeepSeek has now become a key force in the AI industry.

Open Source Securities said in a research report that DeepSeek’s release and open-sourcing of its reasoning model DeepSeek-R1 injects new variables into the industry’s development. Given the model’s potential value in applications such as smart driving and smart cockpits, DeepSeek’s release and open-sourcing are expected to accelerate upgrades in related industries.

A CITIC Construction Investment research report said DeepSeek significantly reduces training and inference costs while maintaining excellent model performance, and that such high-performance, lightweight, low-cost model capabilities will significantly boost the development of the on-device AI industry.