Text | Bounded Unknown; Author | Qian Jiang; Editor | Camellia

The long-rumored Apple robot has finally made new progress.

Recently, Apple announced a new research framework called ELEGNT, which allows non-humanoid robots to perform more natural and expressive movements.

Apple used a desk lamp robot to demonstrate the effects of this new technology.

For example, if you ask it what the weather is like outside, it turns to look out the window and then turns back to tell you. If you tell it you are thirsty, it uses its lamp head to gently nudge a cup toward you. If it is asked to do something it cannot do, it hangs its head in chagrin.

▲ A desk lamp equipped with Apple’s ELEGNT technology framework interacts with humans. Source: X @Nacho Mellado

With nothing more than a beam of light, a lamp head, and a flexible mechanical arm with 6 degrees of freedom, the lamp can express all kinds of emotions. It lets the lamp from the animation that opens Pixar’s movies step off the screen and become a partner that can truly accompany people and provide emotional value.

Of course, Apple is not a pioneer in this field. Since 2024, desktop robots have become a popular direction for AI implementation, and various types of AI desktop robots have emerged one after another.

Examples include Looi Robot, which was praised by Musk; XGO Rider, from a Harbin Institute of Technology team; and Emo and Aibi from LIVING.AI.

So why is AI converging on the desktop? What vision are these companies trying to realize through this form factor, and what challenges do they face?

Companion AI, from virtual to real

Desktop robots are not new. The earliest can be traced back to 1999, to an electronic pet dog named Poo-chi launched by Japan’s Fujitsu. Even the better-known Emo and Vector desktop robots are six to seven years old.

This round of the desktop robot craze is therefore not purely the result of breakthroughs in the robots themselves. We prefer to see it as a consequence of AI models moving from the virtual world into the real one.

In 2022, before ChatGPT set off the current wave of AI, two software companies focused on AI companionship, Character.AI and Inflection AI, had already been quietly founded in Silicon Valley.

Unlike encyclopedic AI assistants such as ChatGPT, Character.AI and Inflection AI focused on developing AI chatbots that are compassionate, humorous, and creative, and that can provide emotional companionship. To put it simply, they wanted to bring the 2014 movie “Her” into reality.

Then ChatGPT set off the AI boom. While companies around the world were searching for product-market fit (PMF) for large models, AI companionship became one of the most suitable application scenarios.

Riding the popularity of ChatGPT, Character.AI and Inflection AI were, for a time, in the Silicon Valley spotlight.

In March 2023, Character.AI completed US$150 million in financing at a valuation of US$1 billion, joining the ranks of unicorns. Three months later, Inflection AI completed US$1.3 billion in financing at a valuation of US$4 billion, making it second only to OpenAI in funds raised in the AI field at that time.

In China, emotional companionship applications based on large models have sprung up one after another, such as MiniMax’s Xingye, China Literature’s Dream Island, and ByteDance’s Cat Box.

What this kind of AI companionship software has in common is the pursuit of virtual chat partners with high emotional intelligence, distinct personalities, and feelings. But do humans really need an AI soulmate that understands them without limit? A virtual product that understands your heart completely, yet can be neither seen nor touched, may not be a blessing.

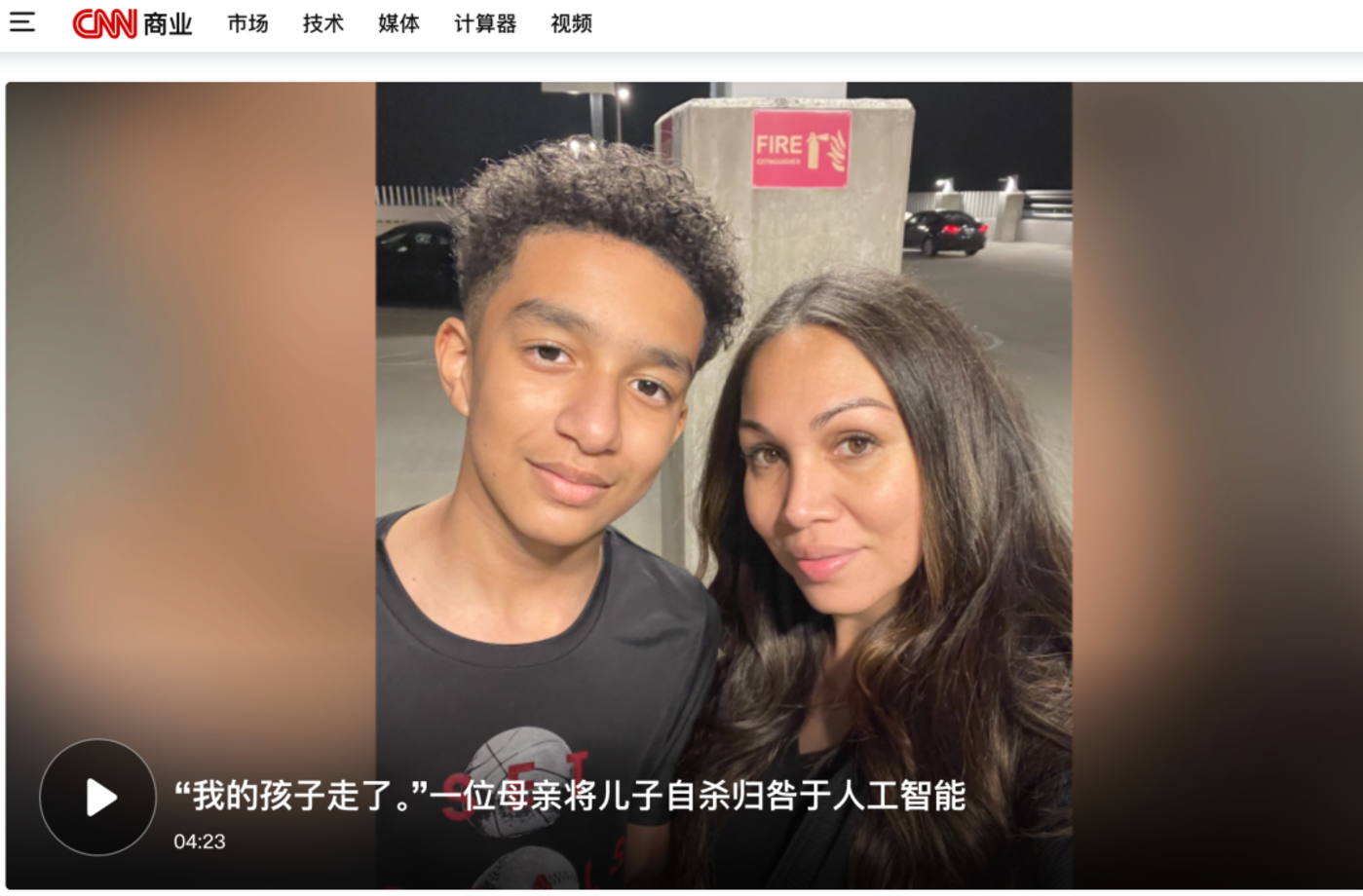

On February 28, 2024, a 14-year-old boy in Florida, USA, shot himself after a final conversation with Dany, a virtual chat partner created on Character.AI, because he hoped to be with Dany forever. The boy’s mother subsequently sued Character.AI.

▲ CNN Business report on a teenager’s suicide linked to Character.AI

This human tragedy became one of the straws that broke Character.AI’s back. A more practical problem was that, lacking sources of revenue, these companies quickly found themselves running short of cash.

In 2024, both Character.AI and Inflection AI ran short of funding, and their founders and some employees were hired into the artificial intelligence divisions of Google and Microsoft, respectively.

And so the story of enriching human emotional companionship with AI came to a hasty end. The industry’s enthusiasm for projects that can provide emotional companionship, however, has not faded.

The question many people are now asking is this: if a purely virtual story like “Her” cannot be made to work commercially, can the story continue once virtual companionship takes physical form?

Desktop robots, from toys to tools

Yann LeCun, chief AI scientist at Meta, has pointed to a direction for emotional companionship AI: companion robots. He believes that real AGI should be able to interact with the physical environment.

At the beginning of 2024, when large models were broadly struggling to find applications, embodied intelligence began to attract more and more attention and became a new hot direction. With the support of AI, companion robots emerged at the same time.

Of course, as exploration has continued, companion robots have begun to diverge into distinct types.

The first type is a group of veteran emotion-based companion robots. Their makers use large models to upgrade existing products and strengthen the robots’ ability to keep people company through interaction.

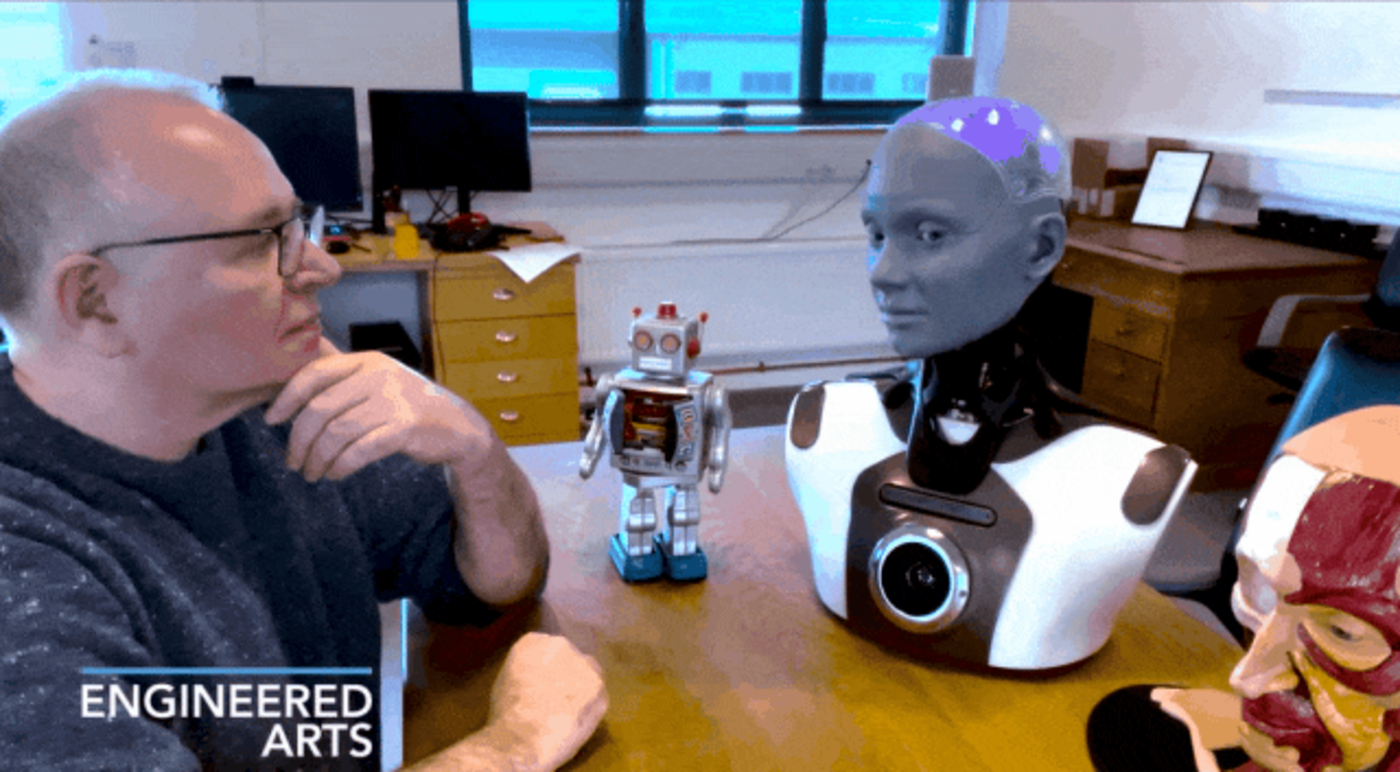

In early 2024, Engineered Arts announced the second-generation Ameca, which has visual perception and voice cloning capabilities. When answering a person’s questions, she can look directly at them, tilt her head, and lower her eyes as if thinking. So far, however, Ameca has been unable to move around.

▲ Ameca, an expressive robot from Engineered Arts

Emotion-based AI companion robots are generally built in human form. Their makers must not only master bionic skin technology but also achieve expressions realistic enough to cross the uncanny valley. This takes a great deal of time and money to explore, and the results are not very practical.

As a result, a number of more lightweight robots have poured into the AI companion robot track: companion robots in the form of pets.

The family companionship robot LOVOT created by Japanese robot company GROOVE X is a typical pet companionship robot.

LOVOT’s eyes are composed of 6 layers of dynamic lights and can show expressions such as blinking, pupil dilation, and eye tracking. Touch sensors all over its body can sense human tapping and stroking. Although it will not help people do things or talk with them, it can keep them company.

LOVOT’s body temperature stays between 37°C and 39°C all year round, close to human body temperature, so it feels warm when held in your arms. When you touch its nose, its eyes squint into crescent moons and it makes an adorable baby-like sound.

Besides cute looks, another approach in the pet category is to build companion robots around well-known IPs. For example, Engineering AI’s modified Megatron from Transformers also won praise from Musk.

▲Elon Musk likes Engineering AI

Whether emotion-based or pet-based, companion robots are all products of the human pursuit of emotional companionship. But people seem not to be satisfied with a robot that can only smile and make small talk. They want some practicality in addition to companionship.

From a demand perspective, people don’t want a product they have paid for to be just a toy. They want an assistant that can help with daily life and work. This is precisely the appeal of AI. After all, pure emotional companionship can neither tap users’ deeper needs nor open up a broader market.

As a result, developers began to turn their attention to the desktop, which is often where people work and play. Building a robot that not only helps people decompress but also completes simple tasks on the desktop has therefore become a new goal for developers of AI companion robots.

Of course, the desktop is not the only place these robots operate, but it marks a subtle shift in companion robots from pure companionship toward practical use.

Toy Story is coming into reality

At CES 2025, a number of desktop robots drew attention, the most popular being the above-mentioned Looi Robot. In 2024 it raised $648,000 in crowdfunding, selling more than 4,000 units in total on Kickstarter and Indiegogo at a unit price of $129.

Soon after, other desktop robots like Looi Robot began to attract widespread attention. The Harbin Institute of Technology team’s XGO Rider and LIVING.AI’s Emo and Aibi, for example, have also gained more intelligent interactive capabilities.

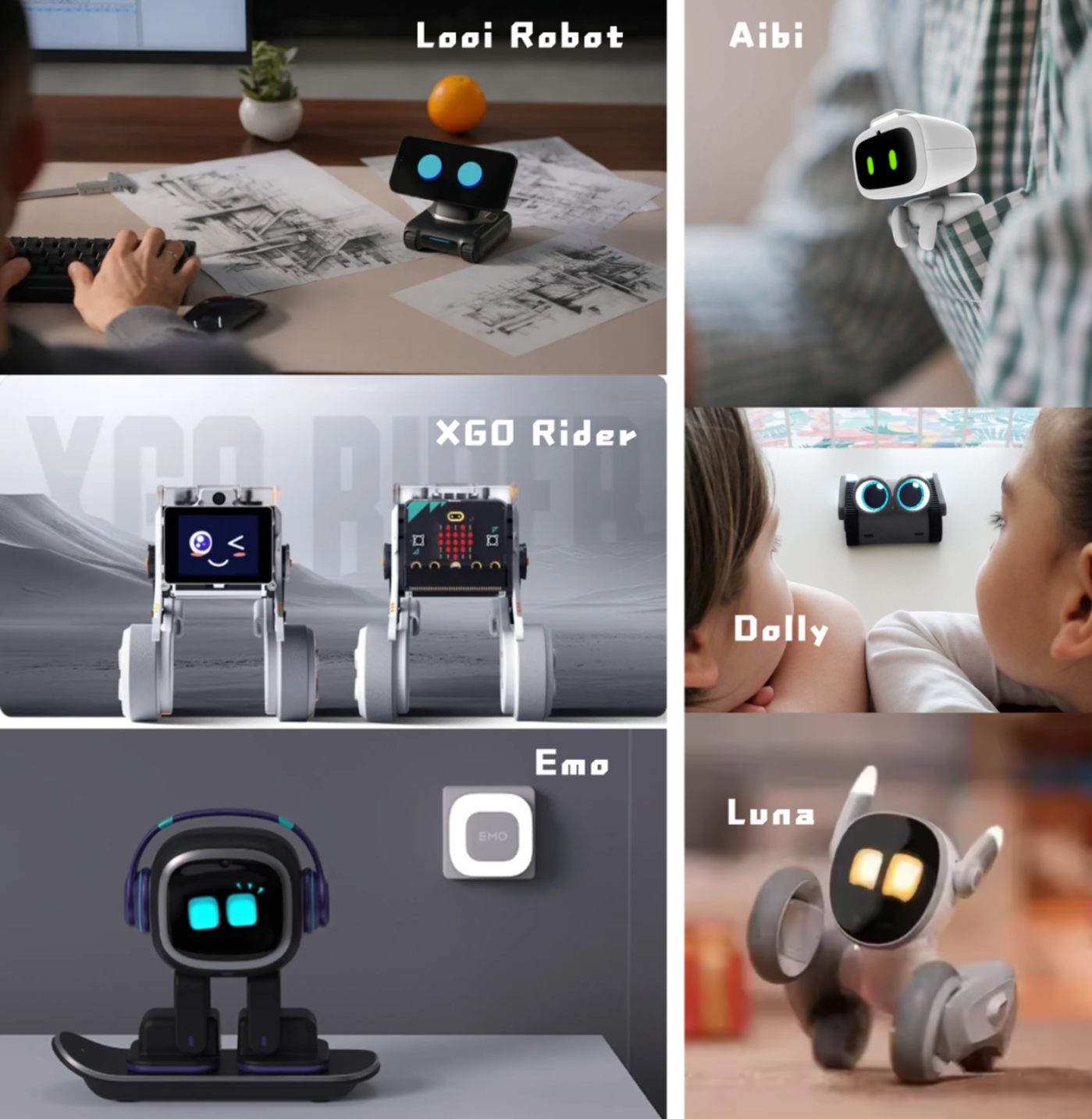

▲ Desktop two-wheeled (legged) robots. Graphic by Bounded Unknown

Their appearance shares some common traits: a large head and short legs. The head usually consists of a screen that conveys expressions through a pair of animated eyes, and wheels or legs serve as the base.

In addition to their appearance, they are all connected to large models to better interact with humans, and their small bodies can easily move back and forth on the desktop.

Although the core role of these desktop robots is still emotional companionship, such as being able to understand human language and provide feedback on it through on-screen displays, their role is no longer limited to emotional value.

For example, Looi Robot combines a mobile phone and a robot into one, which effectively opens up a new application scenario for the phone: in robot form, it can take meeting notes, shoot photos automatically, and handle other tasks.

XGO Rider, developed by the Harbin Institute of Technology team, not only offers its own set of expressions to amuse people but can also recognize images, faces, and body skeletons. It also has educational features that let users program it with Blockly and Python.

LIVING.AI’s Emo and Aibi also have practical functions such as switching lights on and off and taking photos.

It is not only startups that are entering the field. Big tech companies also see the future potential of such robots. In August 2024, Apple’s secret robot project, codenamed J595, came to light. According to Medium, it is also a desktop robot, expected to debut as early as 2026 at a price of $1000. It is said to resemble this kind of small toy that can roll around on the desktop.

Although the desktop robot track is already very lively, it faces a fatal problem: the technical threshold is not high, and some products are little more than a large model wrapped in a shell. Ordinary users can even build a similar desktop robot themselves, which makes it hard for companies to form an effective moat and has led to serious homogenization of the desktop robots on the market.

So, how do they differentiate and build core barriers?

Besides continuously improving large models to enhance the interactive capabilities of these new species, another idea is to build an embodied model that transforms objects already on the desktop.

For example, Apple’s ELEGNT technology allows table lamps to have body language to interact with objects and humans. The same idea can also transform other home products on the desktop.

In addition to ELEGNT, Apple released another piece of research, EMOTION, a few months ago. This technology lets robots interact with humans naturally through gestures. A robot can flash you a V sign for victory, as a good friend would. Although EMOTION focuses mainly on robotic hands, the gesture interaction it achieves could also be used in desktop robots for tasks such as pointing at and grabbing objects.

The English names of the two frameworks hint at their aims: one is close to “elegant” (ELEGNT), the other is “emotion” (EMOTION). Both use AI technologies such as large language models and vision-language models to make robots more humanlike.

Once mature, this approach to anthropomorphizing objects could be applied to almost any household product. The entire home would be like a small Disneyland, filled with all kinds of gadgets that can interact with people.

This may be an important way to address the homogenization and lack of barriers among AI desktop robots.

Conclusion

Try to imagine this scene: besides your cat and dog coming to the door to welcome you, your floor lamp automatically lights up and nods to you.

Your coat rack reaches out to take the clothes and bag you take off, the robot dog hands you your slippers, and your little desktop robot greets you, thoughtfully turns on the lights and TV, and then reports what happened at home while you were away.

The clothes have been washed and dried, the food in the pan was timed to finish just as you got home, hot tea is waiting on the coffee table, and you can have dinner after resting for a while.

How does that sound? Even if you are the only person in such a home, you won’t feel lonely. This may be the role of companion robots.

Not only do they provide companionship and emotional value, they also provide real labor value to the family.

Thanks to the continuous advancement of AI technology, we believe that a world like Toy Story will one day become reality.