Text | Silicon Valley 101

“Advanced semiconductors are the legendary past, AI is the booming present, and quantum computing is the inevitable future.”

Quantum computing, long regarded as a technology of the future, is accelerating from the laboratory into reality. On February 11, quantum computing company QuEra announced that it had raised US$230 million, one of the largest single investments in quantum computing to date.

The company, a spin-off from Harvard University and the Massachusetts Institute of Technology, uses atoms and lasers to build qubits. This approach was once considered too error-prone. Compared with the superconducting-circuit route taken by giants such as Google and IBM and with the ion-trap route, however, neutral-atom technology needs no superconducting circuits, offers high stability, and does not require bulky refrigeration systems.

Just three months ago, Google announced that its new-generation quantum computer based on the Willow chip completed, in under five minutes, a standard benchmark computation that would take today’s fastest classical supercomputers 10²⁵ years, while also tackling the problem of high error rates. The industry has called this breakthrough the “Transformer moment” of quantum computing.

However, predictions differ within the industry about how long it will take to achieve truly useful quantum computing. Nvidia CEO Jensen Huang predicts that large-scale commercial applications of quantum computing are roughly twenty years away, while Google aims to launch usable quantum computing services within five years.

In this episode of Silicon Valley 101, host Hong Jun invited Roger Luo, founder and CEO of Anon Technologies, who completed his PhD and postdoc at UC Berkeley, and Jared Ren, founder and CTO of Anon Technologies, who completed his PhD and postdoc at Caltech, to discuss Jensen Huang’s timeline for quantum computing, the significance of Google’s Willow chip, the strategies and technical paths of Silicon Valley companies in quantum computing, and how quantum computing could affect cryptocurrency, the banking system, and the entire field of cryptography.

The following are excerpts from the interview.

01 Understanding quantum computing: superposition and entanglement of qubits

Hong Jun: Maybe we can start with a general question. Can you explain to the audience, in the plainest possible language, what quantum computing is and what it is used for?

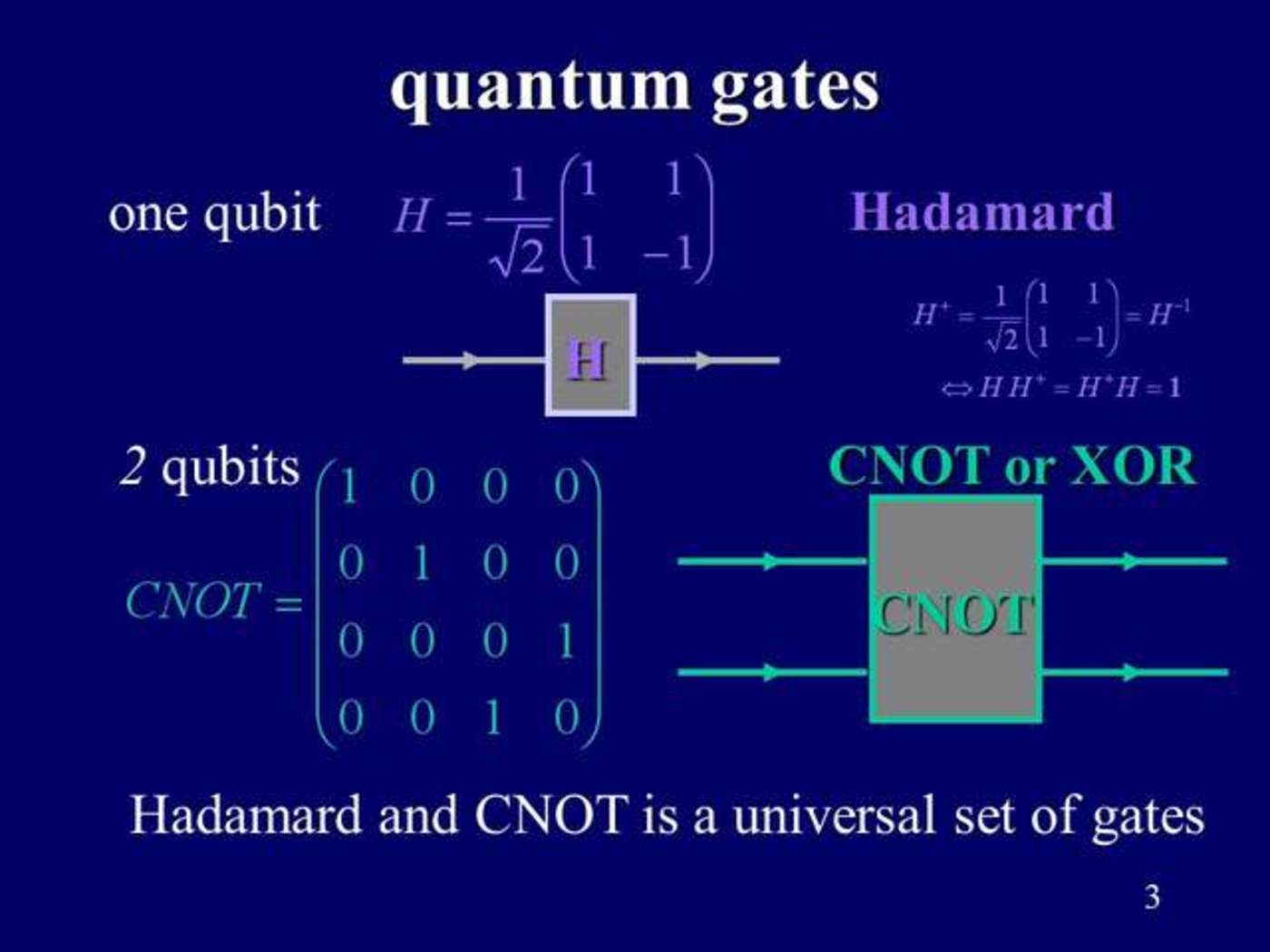

Jared: Quantum computing is easiest to understand by analogy with classical computers. Almost every computing device on the market today, whether a CPU, a GPU, a mobile phone, or a simple calculator, is essentially a classical computer.

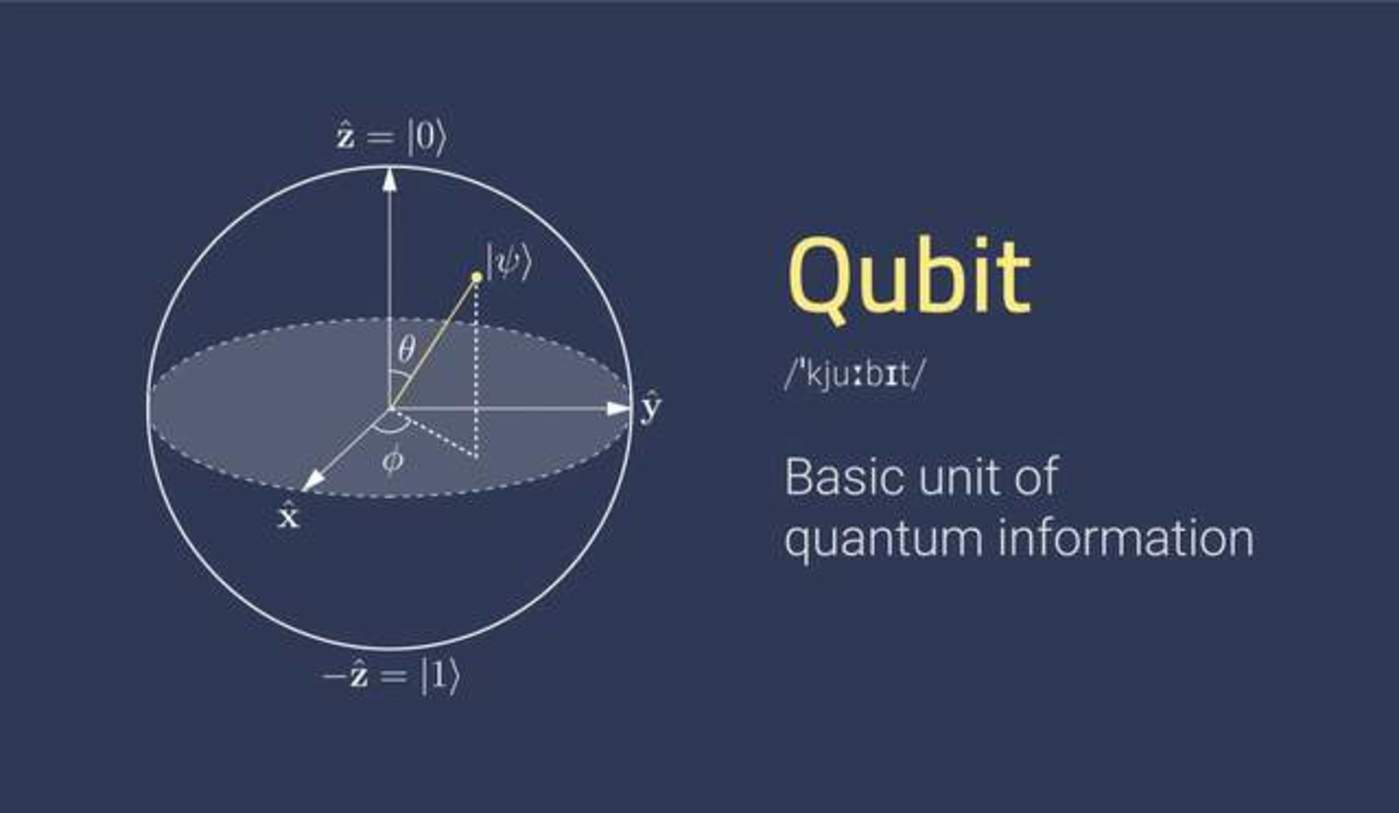

We can think of a classical computer as working on a long string of 0s and 1s, where each unit is called a bit. A bit can only be in state 0 or state 1, and all computation, however complex or simple the device, is essentially the processing of these strings of 0s and 1s. By analogy, a quantum computer also works on a long string, but its basic unit is the quantum bit (qubit).

So how do qubits differ from bits? A qubit can be not only 0 or 1 but also a combination of 0 and 1 at the same time, which is called a superposition state. In other words, a qubit can exist in several states at once, whereas a classical bit must be either 0 or 1.

Source: devopedia.org
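To make superposition concrete: a single qubit’s state can be written as α|0⟩ + β|1⟩, where α and β are complex amplitudes with |α|² + |β|² = 1. The minimal sketch below is an illustration only (plain Python, no quantum library or vendor API assumed; the function names are hypothetical). It shows that measuring such a qubit returns 0 with probability |α|² and 1 with probability |β|², so a superposition is a set of weighted possibilities rather than a value literally halfway between 0 and 1.

```python
import math
import random


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for the single-qubit state alpha|0> + beta|1>."""
    p0, p1 = abs(alpha) ** 2, abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0, abs_tol=1e-9):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return p0, p1


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement: the superposition collapses to 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# Equal superposition (|0> + |1>) / sqrt(2)
amp = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(amp, amp)
print(round(p0, 3), round(p1, 3))  # 0.5 0.5

# Repeated measurements: each run gives a single 0 or 1, split roughly 50/50
rng = random.Random(0)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(amp, amp, rng)] += 1
print(counts)
```

Each individual measurement still yields a single 0 or 1; the superposition only shows up in the statistics over many runs.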

A second property of qubits comes from another quantum principle: entanglement. Qubits are not just independent units; different qubits can become entangled so that their states are correlated and change together. When superposition and entanglement work together, they make quantum computers fundamentally different from classical computers.
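Entanglement can be illustrated with the textbook two-qubit Bell state. The sketch below is a hand-written four-amplitude state-vector simulation (the gate helpers are hypothetical names written for this example, not a library API): a Hadamard gate puts the first qubit into superposition, and a CNOT gate then ties the second qubit to it. Measuring the pair afterward only ever gives 00 or 11, so reading one qubit tells you the other, even though neither qubit has a definite value beforehand.

```python
import math
import random

# Two-qubit basis order (q0, q1): index = 2*q0 + q1 -> |00>, |01>, |10>, |11>


def hadamard_on_q0(state: list[float]) -> list[float]:
    """Apply a Hadamard gate to the first qubit of a 2-qubit state vector."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot_q0_to_q1(state: list[float]) -> list[float]:
    """Flip q1 whenever q0 is 1, i.e. swap the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def sample(state: list[float], rng: random.Random) -> str:
    """Measure both qubits and return the outcome as a bit string such as '01'."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=probs)[0]
    return format(index, "02b")


bell = cnot_q0_to_q1(hadamard_on_q0([1.0, 0.0, 0.0, 0.0]))  # start from |00>
print([round(a, 3) for a in bell])  # [0.707, 0.0, 0.0, 0.707]

rng = random.Random(1)
outcomes = {sample(bell, rng) for _ in range(1000)}
print(sorted(outcomes))  # ['00', '11']: the two qubits always agree
```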

The state of a classical computer is made up of bits. For example, a classical computer with only three bits has 8 possible states: 000, 001, 010, 011, 100, 101, 110, 111. It can only work through them one at a time, so to visit all eight states it needs eight operations.
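The classical side of this comparison is easy to check directly. The short sketch below (plain Python; the helper name is illustrative) lists every configuration of an n-bit register. A classical register holds exactly one of these configurations at any moment, so checking them one by one takes 2ⁿ steps.

```python
from itertools import product


def classical_states(n_bits: int) -> list[str]:
    """List every configuration an n-bit classical register can hold."""
    return ["".join(bits) for bits in product("01", repeat=n_bits)]


print(classical_states(3))
# ['000', '001', '010', '011', '100', '101', '110', '111']

# Each extra bit doubles the number of states a one-at-a-time search must visit
print({n: len(classical_states(n)) for n in (3, 4, 10)})  # {3: 8, 4: 16, 10: 1024}
```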

Quantum computers are different. A quantum computer made of three qubits can, thanks to superposition and entanglement, exist in all eight states at the same time and compute on them simultaneously. With only three bits, the difference may not look dramatic: one machine needs eight operations, the other needs one.

But as bits are added, the gap grows exponentially, because each extra bit doubles the number of states. With four bits, a classical computer needs 16 operations to go through all 16 states, while the quantum computer still needs only one. This is what is meant by the exponential speedup of quantum computing over classical computing.
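The exponential growth described here is in the size of the quantum state: n qubits are described by 2ⁿ amplitudes. The sketch below (plain Python, assuming each qubit starts in |0⟩ and receives one Hadamard gate) builds that state by repeated tensor products and confirms that 3 qubits carry 8 equal amplitudes and 4 qubits carry 16. One caveat the simplified wording glosses over: a measurement still returns only one of those states, so practical quantum algorithms rely on interference to steer probability toward useful answers rather than reading out all 2ⁿ results at once.

```python
import math


def kron(a: list[float], b: list[float]) -> list[float]:
    """Tensor (Kronecker) product of two state vectors."""
    return [x * y for x in a for y in b]


def uniform_superposition(n_qubits: int) -> list[float]:
    """State obtained by applying a Hadamard to every qubit of |00...0>."""
    plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # H|0> = (|0> + |1>) / sqrt(2)
    state = [1.0]
    for _ in range(n_qubits):
        state = kron(state, plus)
    return state


for n in (3, 4):
    psi = uniform_superposition(n)
    # 2**n amplitudes, each with probability 1 / 2**n, summing to 1
    print(n, len(psi), round(psi[0] ** 2, 4), round(sum(a * a for a in psi), 6))
# 3 8 0.125 1.0
# 4 16 0.0625 1.0
```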

Hong Jun: So can I put it this way: quantum computers are well suited to complex, difficult calculations, and the harder the calculation, the bigger their advantage?

Jared: You can think of it this way: its core advantage is the ability to achieve exponential speedups on certain problems. Quantum computers are not a replacement for classical computers, just as GPUs cannot fully replace CPUs; the two coexist and work together. Quantum computers are especially good at delivering exponential speedups on specific problems, such as matrix operations, integer factorization, quantum chemistry simulation, and combinatorial optimization, and they will play a very important role in these high-complexity computing tasks.

Hong Jun: Where will these highly complex calculations be used? If you imagine the future applications of quantum computing, in which scenarios and problems does it have the biggest advantage over traditional computers? Can you give a specific example?

Jared: There are several special algorithms. Some deliver a particularly strong exponential speedup on matrix operations, and some deliver a strong speedup on factorization. On top of that, the world itself is quantum, and simulating the quantum world with a classical computer is very difficult. Many chemical processes, for example, are inherently quantum, so they are easier to compute on a quantum computer. The three examples I just mentioned map onto very obvious applications. All of today’s artificial intelligence learning is, at its core, matrix operations.

When it comes to factorization, the obvious association is Shor’s algorithm and the security and encryption problems that come with it. Most current public-key encryption relies on the difficulty of factoring or closely related problems, so quantum computing has a great advantage in breaking such encryption.
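Shor’s algorithm attacks RSA-style encryption by turning factoring into a period-finding (order-finding) problem. Only the period-finding step needs a quantum computer; the rest is classical number theory. The sketch below shows that classical scaffolding on the toy modulus N = 15, with a brute-force loop standing in for the quantum subroutine, so it gains no speedup and is only practical for tiny numbers. The function names are illustrative, not from any library.

```python
from math import gcd
from typing import Optional


def order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step Shor's algorithm hands to a quantum computer; done
    classically like this, the cost grows exponentially with the size of n.
    Assumes gcd(a, n) == 1, otherwise no such r exists.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_order(a: int, n: int) -> Optional[tuple[int, int]]:
    """Classical pre- and post-processing around order finding."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g  # lucky guess: a already shares a factor with n
    r = order(a, n)
    half = pow(a, r // 2, n)
    if r % 2 == 1 or half == n - 1:
        return None  # unlucky choice of a: pick a different a and retry
    return gcd(half - 1, n), gcd(half + 1, n)


print(order(7, 15))             # 4, since 7**4 = 2401 = 160 * 15 + 1
print(factor_via_order(7, 15))  # (3, 5)
```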

Quantum chemistry simulation points directly to R&D in oil and gas, chemicals, pharmaceuticals, and so on, where its role is very direct.

Another area is combinatorial optimization, which quantum computers can solve very quickly with exponential speedups. That makes them very helpful for anything that needs optimizing, such as logistics.
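Why combinatorial optimization is hard classically shows up in the size of the search space. The sketch below uses MaxCut (split a network’s nodes into two groups so that as many links as possible cross between them) as a stand-in for logistics-style problems; that choice of example is an assumption, not something the speakers named. Exhaustive search has to try 2ⁿ splits. The code says nothing about how large a quantum speedup would be in practice; it only shows the classical search space that any faster method has to beat.

```python
from itertools import product


def max_cut_brute_force(n_nodes: int, edges: list[tuple[int, int]]):
    """Try all 2**n ways to split the nodes into two groups; return the best cut."""
    best_value, best_split = -1, None
    for split in product((0, 1), repeat=n_nodes):
        # An edge is "cut" when its two endpoints land in different groups
        value = sum(1 for u, v in edges if split[u] != split[v])
        if value > best_value:
            best_value, best_split = value, split
    return best_value, best_split


# A 4-node cycle 0-1-2-3-0: the best split alternates sides
square = [(0, 1), (1, 2), (2, 3), (3, 0)]
print(max_cut_brute_force(4, square))  # (4, (0, 1, 0, 1))

# The number of candidate splits for just 50 nodes
print(2 ** 50)  # 1125899906842624
```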

Hong Jun: You mentioned several points. Take matrix computation: where exactly would it accelerate artificial intelligence? With quantum computers, would there be a special advantage when training large models? Or would it only offer specific solutions to specific problems in a very narrow field?

Jared: As I understand it, no matter what the AI model is, it is essentially matrix operations. That brings us back to a fundamental question: why are GPUs so much faster than CPUs at running AI models?

Essentially, GPUs perform matrix operations much faster than CPUs. CPUs are good at complex operations spread over relatively few threads, whereas matrix operations do not need complex logic; they need a very large number of cores repeating simple operations in parallel. That is exactly what GPUs provide. So in AI, GPUs have a big advantage over CPUs because they perform matrix operations much faster.

If algorithms such as HHL (Harrow-Hassidim-Lloyd) running on quantum computers can speed up matrix operations as well, that could bring a leap in large AI model development comparable to the jump from CPUs to GPUs.

Source: yanwarfauziaviandi.com

Roger: The key role of quantum computing on this specific issue is that by accelerating algorithms related to matrix inversion, it can speed up AI training and inference as a whole. For example, for a model with 100 billion parameters running on GPUs, the corresponding algorithmic complexity is roughly 100 billion times 100 billion. A quantum computer effectively takes the logarithm instead, giving a complexity on the order of log(100 billion). So for a huge model, the number of operations drops exponentially, and the model can be computed with far less energy and fewer resources.
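The arithmetic behind this comparison can be worked through directly. The sketch below takes the 100-billion-parameter figure from the answer and compares n² with log₂ n; the choice of base 2 is an assumption, and the figures are idealized. HHL-style logarithmic scaling only holds under specific conditions (a sparse, well-conditioned matrix, efficient loading of the input into quantum states, and reading out only summary properties of the solution rather than the full vector), and none of those overheads are counted here.

```python
import math

n = 100e9  # 100 billion parameters, the figure used in the interview

quadratic_ops = n * n           # "100 billion times 100 billion"
logarithmic_ops = math.log2(n)  # idealized log scaling; base 2 is an assumption

print(f"{quadratic_ops:.1e}")                    # 1.0e+22
print(round(logarithmic_ops, 1))                 # 36.5
print(f"{quadratic_ops / logarithmic_ops:.1e}")  # 2.7e+20, the idealized ratio
```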

02 Nvidia’s public skepticism and private enthusiasm: a conservative forecast on the surface, rapid positioning behind the scenes

Hong Jun: How close is quantum computing to being realized, and what stage has it reached? Nvidia founder Jensen Huang raised this question at this year’s CES. As I recall, he said that if “very useful” quantum computing arrived in 15 years, that would be on the early side; 30 years would probably be on the late side; so he thought about twenty years was reasonable. But as soon as he said this, quantum computing stocks fell one after another. So I’d like to hear how you two view Jensen Huang’s comments on the timeline for quantum computing.

Roger: First of all, Jensen Huang was asked this at an NVIDIA investor meeting. He answered it head-on, and the timeline he gave stretches to 20 years or more.

Once you answer this question at such a meeting, investors will start weighing whether to price the impact of quantum computing into the company’s stock over the next decade. As we all know, when Wall Street values a stock, it prices in expected growth and market dominance over the next ten years.

So he actually has a conflict of interest. If he suggested that quantum computing could affect GPUs within the next decade, Nvidia’s share price would fall the next day. Compared with letting Nvidia’s share price fall, letting quantum computing stocks fall is a very reasonable choice for him. After all, where you sit determines how you answer.

From another angle, I respect Jensen Huang very much; he is indeed a very capable person. But don’t just listen to what he says; look at what he does. Nvidia is already a big player in quantum computing, and they are also our partner. They have been very proactive on every front; for example, at the supercomputing conference we are about to attend, we are doing a demo together. So overall, his actions point to a different logic.

Looking at what he has actually done, we can review history. CUDA was released in 2007, cuDNN in 2014, the Transformer in 2017, and GPT-3.5 in 2022. Even counting from CUDA, only fifteen years passed between Nvidia starting to position itself in this direction and the technology being fully realized.

Logically speaking, at the pace of technological development in the 21st century, if a technology takes 15 years to mature, half of its development history may be over before I even hear of it. So the claim that this technology will take 20 years is not realistic. At the very least, the CEO of one of the top three companies on Nasdaq is unlikely to respond so actively to a technology that far off, let alone formally commit the company to it; that goes against logic. Of course, as I said, what he does matters more than what he says, because what he says has to take the secondary market into account, and he himself has a stake in Nvidia’s stock.

Third, he chose his words very precisely when he said “very useful quantum computer.” In this context, it can be understood as a large-scale fault-tolerant quantum computer, the ultimate form of quantum computing. This is a bit like AGI (artificial general intelligence) in the AI field: a very long-term goal. If it were achieved, it would basically redefine the next stage of human civilization, because we would be able to develop countless new materials and possibly AI systems that far exceed human capabilities.

So by “very useful” he means quantum computing that has completely taken over the core role GPUs play today. I personally don’t think that is very likely within 15 years. I think the industry consensus is that quantum computing at that dominant stage, such as a large-scale fault-tolerant quantum computer, may take about 15 years. I don’t think his statement fundamentally deviates from his own company’s internal research or from the industry consensus; he just phrased it in a way that sounds friendlier to investors.

Hong Jun: Can we understand it this way: if quantum computing really arrives, computations may not need as many GPUs, so demand for GPUs and chips would fall? I’d like to know what impact this would have on NVIDIA’s share price and how you think about this logic.

Roger: Yes. NVIDIA now positions itself as “a supercomputer infrastructure company.” They believe future supercomputing will cover problems such as AI, weather forecasting, and chemical simulation, and from their perspective this will be the core business of the future. But interestingly, the quantum computing industry says exactly the same thing.

In fact, quantum computing will indeed erode, or take part in, Nvidia’s share of the complex-computing market to some extent. For example, in extremely complex protein reaction simulations, rather than making predictions the way AlphaFold does, one might use first-principles simulation to discover new drugs precisely. For tasks like this, relying on Nvidia hardware is clearly unrealistic.

Hong Jun: Because the cost would be too high for companies.

Roger: If a computing center were willing to buy 1 million of NVIDIA’s future GPUs to crack this problem, that would certainly be good for the stock. But if quantum computing sets the expectation that 1 million GPUs are not needed, and that around 10,000 GPUs can achieve the same goals, then that demand never materializes in the market. It would mean the quantum computer handles these complex problems largely by itself, with little of the workload shared by GPUs.

So I think that by placing quantum computing 15 to 20 years out, Jensen Huang is effectively telling Wall Street not to price future uncertainty into today’s valuation. From NVIDIA’s perspective, computing demand will keep rising over the next ten years, and the company’s share price should rise with it. That logic is reasonable.

Over the next decade, quantum computing will itself need GPUs for hybrid quantum-classical computing, which is also why we want to work with NVIDIA. So the next ten to fifteen years will really be a stage of coexistence and shared growth, not a question of who replaces whom. Strictly speaking, this is an expansion of the overall market rather than a fight over share of the existing market.

Hong Jun: What does your collaboration with Nvidia involve?

Roger: We focus on the product and technical side. As a hardware company, we mainly work in two directions.

The first is using GPU software and algorithms to optimize chip design and quantum measurement and control, in other words using GPUs to make quantum computers run better. The second is using quantum computers together with GPUs to improve the generalization of AI models, that is, training models that generalize better with fewer parameters and less data. This is the so-called quantum-enhanced AI path.

You may also have heard that some very well-known AI companies have recently been hiring quantum machine learning talent for related development. That is a trend in itself. One example is SSI (Safe Superintelligence), the company founded by Ilya Sutskever.

Our collaboration with Nvidia is not at the software level but in building a computing platform. We use their CUDA Quantum software as glue to combine their GPUs and our QPU (quantum processing unit) into one complete quantum computing platform. Through high-speed direct interconnects, the quantum processor and the GPUs exchange data in real time, which increases overall computing power. While the quantum computer runs, the platform can also improve its solutions in areas such as AI learning.

Within this system, we are positioned as a quantum backend provider in Nvidia’s ecosystem, while they are the GPU backend provider, so the two of us sit side by side and together form a complete quantum-enhanced computing platform. We have our own quantum chip and our own complete quantum computer. We interconnect our quantum computer with NVIDIA’s GPU system, use their software to coordinate both sides, and use our quantum hardware to strengthen what GPUs can do on AI learning problems.

Hong Jun: Do you make your own chips, or are there chips on the market designed specifically for quantum computing?

Roger: Good question. We make our own chips, based on our own independent designs, patents, and processes. There are companies selling quantum chips, and I won’t comment on whether they are good or not. But basically, American companies make their own chips. To put it another way, many of these technologies still need breakthroughs on a fast track, and it would be unwise to hand chip fabrication this early to teams that may not have the necessary technical depth.

03 The Willow chip and the Transformer moment of quantum computing

Hong Jun: What do you think of Google’s new Willow chip?

Roger: They have been working toward this since 2014: publishing a roadmap and realizing quantum error correction, to prove that a scalable system can deliver what Jensen Huang called a very useful quantum computer. That has always been their goal.

This may seem a little confusing because different companies have different goals. For example, some companies target AGI directly, while others, like OpenAI, consider it fine to release something like GPT-3.5 along the way.

So from our perspective, Google’s launch of Willow as the successor to Sycamore is a continuation. In earlier Sycamore demonstrations, Google found that Sycamore, in both scale and chip performance, was not enough to truly verify the scalability of quantum computing, especially of error-corrected quantum computing.

Proving scalability means showing that more is better: the bigger the chip, the more reliable and powerful the computer should be. Earlier experiments found that when you build a larger chip, overall performance does not improve accordingly, because the overall error rate rises too.

Therefore, to turn quantum computers into the so-called very useful quantum computer, quantum error correction has become a crucial frontier technology. Willow represents a decade of Google’s development finally proving the scalability of quantum computing combined with error correction.

Hong Jun: What stage is the Willow chip at? Has it gone into manufacturing?

Roger: Yes, and they have published papers. They did not use the world’s best qubits or highest fidelities, but thanks to various engineering improvements, the chip was able to verify the scalability of quantum computing at the level of hardware and experimental implementation.

I want to emphasize one point: experimental scalability. That quantum computing can in principle perform complex supercomputing tasks is not in question; at the level of algorithms and principles, that has been clear for about ten to twenty years. What Google, IBM, and the whole industry have done over the past decade is prove it at the level of experiments and physically realizable devices, showing that we can actually build a chip good enough to scale up to large computations. So the significance of Google’s Willow chip is that it demonstrates this at the actual physical level.

Hong Jun: Will Google sell it to third-party partners, or only use it internally?

Roger: Google has never been a hardware company and has never relied on selling hardware. The earliest TPUs they made were not sold; they were basically for internal use. For them, Willow is a very good validation, a milestone showing that they can reach the so-called very useful quantum computer within ten years.

Hong Jun: Do you think that, now that the chip has been built, it will accelerate quantum computing research as a whole?

Roger: In terms of acceleration, it definitely will. But more importantly, they proved they can do it. That will help their team, a department under Google AI, get more resources, build bigger and bigger chips, and solve practical problems.

So the acceleration does not come from using this chip to speed up their other development; it comes from using the chip as living proof to obtain more resources and scale the effort up into a commercial, very useful quantum computer. It will definitely speed things up, because management is now convinced.

Hong Jun: Convinced of what? That it works?

Roger: Google’s CEO also posted about it, because management needed to see the concept verified. If you say you can scale, show me the scaling. Now, from Google management’s perspective, scalability has been verified at a basic level.

This is a bit like the Transformer moment: you prove that your machine learning model can scale, then scale it up far enough to see whether you can build a model like GPT.

Hong Jun: So the Willow chip is the quantum computing community’s Transformer moment.

Roger: Yes, and I’ll take a little credit for that framing, because an investor asked me whether this was a Transformer moment, and after thinking it over, it did seem like one. Google has produced living proof that this can scale, so now let’s scale it. Looking at the path AI took, I’m actually more optimistic that it will happen within the next ten years. I think Jensen Huang’s estimate is a bit too conservative.

Hong Jun: Your estimate is more optimistic than Jensen Huang’s. Especially after the release of Google’s Willow chip, how many years do you think it shaves off the path to truly useful quantum computing? What is the essential difference between having this chip and not having it?

Roger: I think that without this chip, people would lean toward Jensen Huang’s prediction of about 15 to 20 years. But with this living proof, everyone’s predicted timelines will converge to under 15 years. That may also be why investors formally asked him this question at that meeting: they are starting to consider whether to factor it into stock valuations.

Hong Jun: You mentioned earlier that the Willow chip demonstrates the scalability of quantum error correction in an experimental implementation. Can you explain which core problem in quantum computing it solves, and how it works?

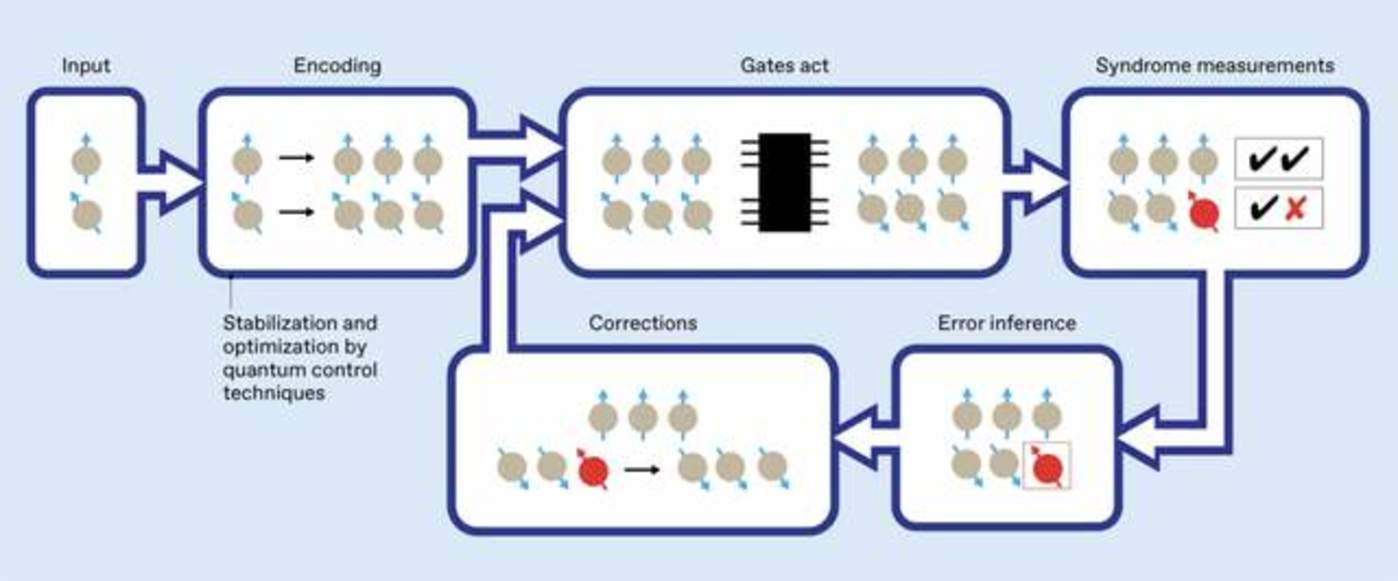

Jared: Let me briefly go over the basic principles of quantum error correction. As we know, one of the biggest obstacles to using quantum computers is noise. Noise in quantum computers can also be understood by comparison with classical computers.

Classical computers are actually very noisy too. We don’t notice this noise when we use electronics, phones, and computers in daily life because classical computing developed underlying error-correction mechanisms long ago, built into the hardware and software layers, so these noise-induced errors get corrected.

Source: Q-CTRL

Take a GPU running at 1.2 volts: ideally, 1.2 volts represents logic 1 and 0 volts represents logic 0. In practice, however, mass-produced GPU chips are never exactly identical, and the voltage also varies from operation to operation. So the voltage is not always exactly 1.2 V or 0 V; it varies across transistors, over time, and between outputs, and the lowest layer of the computer treats every value within a range as 0 or 1. For example, a measured 1 V is treated the same as 1.2 V, as logic 1, and 1.5 V is also classified as logic 1. This is a relatively simple form of error correction in classical computers.
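The snapping of noisy voltages to clean logic levels described above can be sketched in a few lines. This is a simplified illustration that assumes a 1.2 V supply and a single midpoint threshold; real logic families specify separate input-high and input-low voltage margins with a forbidden zone in between, and the function name is hypothetical.

```python
def to_logic_level(voltage: float, vdd: float = 1.2) -> int:
    """Snap a noisy analog voltage to a clean logic value.

    Anything above half the supply voltage counts as 1, anything below as 0.
    The 1.2 V supply and the single midpoint threshold are simplifying
    assumptions for illustration.
    """
    return 1 if voltage > vdd / 2 else 0


noisy_readings = [1.2, 1.0, 1.5, 0.05, -0.1, 0.3]
print([to_logic_level(v) for v in noisy_readings])  # [1, 1, 1, 0, 0, 0]
```

This only works because a classical bit has a large gap between its two voltage levels, which is exactly what the next paragraph says a qubit lacks.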

In quantum computing, the situation is very different. A classical signal can use a relatively large value, such as 1 volt, which makes errors easy to correct. A qubit, by contrast, carries very little energy; in the superconducting systems we use, it is just the energy of a single photon. Qubits are therefore very fragile and highly susceptible to environmental interference and internal errors, which causes quantum information to be lost.

There are further differences from classical bits. Classical bits only suffer flip errors between 0 and 1. As described earlier, a qubit can be in a superposition state, so besides flips between 0 and 1 it can also suffer phase errors. These also add noise to the computation and its results.
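The two kinds of error can be compared with the standard Pauli matrices: X is the bit flip and Z is the phase flip. The sketch below (plain Python, with a hypothetical `apply` helper for 2×2 matrices) shows that X turns |0⟩ into |1⟩ just like a classical flip, whereas Z leaves the 0/1 measurement statistics of the superposition |+⟩ = (|0⟩ + |1⟩)/√2 untouched but flips the sign of its |1⟩ amplitude, turning it into |−⟩. That sign change has no classical counterpart, yet it changes how the state interferes in later steps of a computation.

```python
import math

Gate = tuple[tuple[int, int], tuple[int, int]]


def apply(gate: Gate, state: list[float]) -> list[float]:
    """Multiply a 2x2 gate matrix by a single-qubit state vector [a0, a1]."""
    (g00, g01), (g10, g11) = gate
    a0, a1 = state
    return [g00 * a0 + g01 * a1, g10 * a0 + g11 * a1]


X: Gate = ((0, 1), (1, 0))   # bit flip: swaps the |0> and |1> amplitudes
Z: Gate = ((1, 0), (0, -1))  # phase flip: negates the |1> amplitude

s = 1 / math.sqrt(2)
plus = [s, s]  # (|0> + |1>) / sqrt(2)

print(apply(X, [1, 0]))                           # [0, 1]: |0> became |1>
print(apply(X, plus) == plus)                     # True: X leaves |+> unchanged
print([round(a, 3) for a in apply(Z, plus)])      # [0.707, -0.707]: |+> became |->
print([round(a * a, 3) for a in apply(Z, plus)])  # [0.5, 0.5]: 0/1 statistics unchanged
```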

And because qubits are entangled, they cannot be corrected one at a time the way classical bits are. To correct errors in a group of qubits, all the entangled qubits have to be handled together. This is why quantum error correction is considered the core technology for realizing quantum computing and making it truly practical.
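The standard trick behind this joint handling is to measure parities of groups of qubits (the “syndrome”) instead of the qubits themselves, which locates an error without revealing the encoded value. The classical sketch below uses the three-bit repetition code and handles bit flips only; in a real quantum code these parities are extracted with helper (ancilla) qubits so the superposition is not disturbed. The function names and lookup table are illustrative assumptions.

```python
def syndrome(bits: list[int]) -> tuple[int, int]:
    """Parities of neighbouring pairs: (bit0 xor bit1, bit1 xor bit2).

    In a quantum code these parities are measured with ancilla qubits,
    so the error is located without reading the data itself.
    """
    b0, b1, b2 = bits
    return (b0 ^ b1, b1 ^ b2)


# Which single position to flip back for each syndrome
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(bits: list[int]) -> list[int]:
    """Fix at most one flipped bit using only the syndrome."""
    fixed = list(bits)
    position = CORRECTION[syndrome(bits)]
    if position is not None:
        fixed[position] ^= 1
    return fixed


for codeword in ([0, 0, 0], [1, 1, 1]):
    for flipped in range(3):
        noisy = list(codeword)
        noisy[flipped] ^= 1
        # The syndrome depends only on where the error is, not on the encoded value
        print(codeword[0], flipped, syndrome(noisy), correct(noisy) == codeword)
```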

Hong Jun: So Google’s Willow chip has largely solved this problem.

Jared: I think Willow uses one of the most popular quantum error correction schemes today, called the surface code, which also originated in Caltech’s quantum error correction work. By using the surface code together with relatively optimized quantum hardware, Google has shown the world that this method works and that we can keep following the previously planned route.
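To give a sense of what a surface code costs in hardware: in the standard rotated surface code, one logical qubit of code distance d uses a d × d grid of data qubits plus d² − 1 measure qubits that repeatedly check parities of their neighbours, and it can correct up to (d − 1)/2 errors. Google reported Willow experiments at distances 3, 5, and 7. The sketch below only evaluates this textbook layout formula; real devices may add extra qubits (for example for leakage removal), and the restriction to odd distances is an assumption of the sketch.

```python
def rotated_surface_code_qubits(distance: int) -> dict[str, int]:
    """Qubit budget for one logical qubit in a standard rotated surface code.

    A distance-d patch is a d x d grid of data qubits plus d*d - 1 measure
    (ancilla) qubits that repeatedly check parities of neighbouring data qubits.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("this sketch assumes an odd code distance >= 3")
    data = distance * distance
    measure = distance * distance - 1
    return {
        "data": data,
        "measure": measure,
        "total": data + measure,
        "corrects": (distance - 1) // 2,  # max number of errors it can fix
    }


for d in (3, 5, 7):
    print(d, rotated_surface_code_qubits(d))
# 3 {'data': 9, 'measure': 8, 'total': 17, 'corrects': 1}
# 5 {'data': 25, 'measure': 24, 'total': 49, 'corrects': 2}
# 7 {'data': 49, 'measure': 48, 'total': 97, 'corrects': 3}
```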

As long as we stay on this path, we can steadily scale up the size and computing power of quantum computers without the error rate getting worse as the system grows. On the contrary, as the scale increases, the error rate goes down, which means overall computing power goes up. Continuing along this path, we will eventually reach the very useful quantum computing just described, what the industry calls a fully fault-tolerant quantum computer.
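The claim that a bigger code means fewer errors holds only when the physical error rate is below a threshold. The surface code’s decoding is more involved, so the sketch below uses the simpler classical repetition code with majority voting as an analogue (an assumption, not Willow’s actual scheme). Each of d copies flips independently with probability p, and decoding fails when more than half flip. Below the threshold (here p = 0.01) adding copies suppresses the logical error rate rapidly; above it (here p = 0.6) adding copies makes things worse.

```python
from math import comb


def logical_error_rate(p: float, distance: int) -> float:
    """Probability that majority voting over `distance` noisy copies fails.

    Each copy flips independently with probability p; decoding fails when
    more than half of the copies are flipped.
    """
    return sum(
        comb(distance, k) * p**k * (1 - p) ** (distance - k)
        for k in range(distance // 2 + 1, distance + 1)
    )


for p in (0.01, 0.6):
    rates = [logical_error_rate(p, d) for d in (1, 3, 5, 7)]
    print(p, [f"{r:.1e}" for r in rates])
# 0.01 ['1.0e-02', '3.0e-04', '9.9e-06', '3.4e-07']  below threshold: errors shrink
# 0.6 ['6.0e-01', '6.5e-01', '6.8e-01', '7.1e-01']   above threshold: errors grow
```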

04 The battle over technical paths among tech giants

Hong Jun: Now that Google has this chip, and given that you have done this kind of work with Amazon before, will it give Google a significant advantage over other companies doing quantum computing? For example, IBM, Microsoft, Amazon, and Intel, and startups such as D-Wave, IonQ, and Rigetti.

Roger: From this perspective, Google is actually a very good company. Why? Because they had a vision first and were willing to spend early time and energy, just as they did when they invented the Transformer, to prove to everyone that the general direction is right. Then everyone can follow that path, which clears away a lot of uncertainty.

Look at how other big companies have responded. IBM, for example, had never included quantum error correction in its roadmap before, at least not explicitly, but in the past few months it has. And Google’s result did not appear out of nowhere; to be honest, people in the field could see the papers long before, because they go through peer review and everyone can read them.

Hong Jun: So once the paper is published, the method is public and everyone may already know it.

Roger: First of all, Google’s roadmap has always been public. They aim to reach their goals through scaling plus quantum error correction. The error correction scheme comes from Caltech: it is called the surface code, developed by Alexei Kitaev of Caltech.

There is one big difference between hardware and software companies: in hardware, the general direction of the physical approach can be stated clearly, because the underlying physics is the same everywhere, so everyone can work toward that same general direction. IBM has not given up its own approach because it believes quantum error correction will take longer to implement, and that brute-force scaling of qubit counts can deliver commercial value, or the so-called “very useful quantum computer,” sooner.

From another angle, why does IBM, one of the earliest players in this industry, seem somewhat conservative here? Large companies tend to be more conservative and to stick more firmly to their early roadmaps. IBM has focused more on using existing technology that does not require error correction to build useful applications. That is its path, so it keeps exploring commercialization: it is developing the technology and commercializing at the same time. Google, by contrast, does not commercialize at all and concentrates entirely on error correction.

IBM’s strategy has evolved several times. By now everyone knows that IBM also believes quantum error correction is feasible, because the improvements it requires are achievable. The key point is that Google has far fewer people on this than IBM, which has been working on it all along; even industry pioneers are sometimes outpaced by smaller, more efficient teams. Another large company (this is non-public information, so I won’t say which one) is also shifting its roadmap toward quantum error correction, benchmarking itself against Google. As soon as they saw another big company break through, they wanted to switch sides and turn in this direction, because they used to think this approach would pay off late, and now it looks like the one that will pay off first.

D-Wave is in a harder position, because their path has always been quantum annealing, which runs completely counter to quantum error correction. There are historical reasons for this. Why was D-Wave the earliest pure-play quantum computing company? To be honest, back then people thought programmable, general-purpose quantum computers, the so-called digital quantum computers, did not exist or were very hard to build.

Therefore, the company’s original intention was to build a special-purpose quantum computer based on quantum annealing, which is simpler and easier to implement. It cannot be programmed to perform so-called quantum error correction, nor can it be programmed to run general quantum algorithms. But they believed that a special-purpose quantum computer would deliver commercial value sooner. Looking back now, this was the wrong choice.

Hong Jun: You don’t approve of this route? So are they changing now?

Roger: Since its founding, the company has been committed to quantum annealing, so changing course will take time; it amounts to a complete change of direction. It’s not that I disapprove of it entirely. They may well find many use cases, but they will discover that the general-purpose path once thought hard to implement may now arrive sooner, and that its impact, once the key milestones are reached, will be greater.

Hong Jun: So error correction now seems to be the more mainstream and widely recognized direction?

Roger: Yes, because it has already been demonstrated, and there are working examples out there. Huang Renxun and many others say it will take fifteen, twenty, or thirty years. But think about it carefully: at this stage, what technology has taken, or will take, humanity more than ten or twenty years to develop?

Hong Jun: Controlled nuclear fusion?

Roger: Controlled nuclear fusion is not purely a technical or experimental problem. Think back to the history of nuclear physics: the first fission chain reaction was demonstrated at a small, laboratory scale. Going from that to the atomic bomb, a working product, took literally about three years, and the next generation took less than another ten.

So controlled nuclear fusion will eventually be achieved. But the market demand for it is not that urgent, because humanity already has a large number of fission reactors. To put it bluntly, if you absolutely had to, there are hydrogen bombs, which in principle could also generate electricity; the Soviet Union had plans along those lines. Achieving controlled fusion is a very elegant and ideal goal, but frankly, given humanity’s current energy needs, its ROI may not be that high. So even if people say it will take a few decades, I think the technology will probably mature ahead of schedule.

Fusion, after all, already has the hydrogen bomb, so the fusion reaction itself has been realized; controlled fusion is a second-generation, more refined improvement on it. With quantum computing, we are talking about the first generation itself. I don’t think any technology like that takes more than ten or twenty years to develop.

Source: ResearchGate

Hong Jun: What is Amazon’s current route?

Roger: They are scaling up a relatively novel kind of superconducting qubit.

All the major companies, including Google, IBM, and Amazon, work on superconducting qubits, just different kinds of them. From what is public, Amazon is building a relatively new superconducting qubit called the cat qubit, but Google has clearly had the greater impact.

Hong Jun: What about Microsoft?

Roger: Microsoft felt that quantum computing was very far away. They actually started very early, no later than Google, but the path they chose, called the topological qubit, has still not been proven, and they have probably given up on it.

So now they want to cooperate more deeply with other quantum computing companies. For example, they previously collaborated with Quantinuum, Honeywell’s spin-off, on logical-qubit computations. More recently, they worked with a UC Berkeley spin-off on atom-based logical-qubit computations.

At the beginning, Microsoft actually placed as much importance on fault-tolerant quantum computing as Google did, but Google was more conservative in its choice of hardware. Why did Google choose superconducting qubits? Because it had long been proven that this approach could produce chips that actually work. So at the company level, you only needed to do so-called engineering optimization to gradually produce results.

Whether progress is fast or slow often depends directly on investment and market demand. In the Manhattan Project example I just mentioned, demand was enormous, so delivery took three years. If you have to wait for demand to materialize, or for the commercial case to be proven, it can drag on longer. But generally speaking, the superconducting path could deliver directly on expectations. Back then, however, Microsoft felt that this path might take ten to twenty years.

As I said, early on, people’s estimates of the future varied widely, and it was easy to say “thirty years,” so Microsoft chose the so-called topological qubit, whose advantage is that it is, in principle, inherently fault-tolerant. Microsoft also developed a lot of software around it and is regarded as an early quantum software company.

Now that its hardware effort has fallen behind, Microsoft is cooperating with other quantum computing companies to run logical qubits on their hardware. In that sense it is very similar to Google in the importance it places on fault-tolerant quantum computing. But when it came to actually implementing a path, Google chose a more hardware-verifiable approach that combines software and hardware, whereas, because of some decision-making mistakes, Microsoft now has only software and partners with hardware companies.

Hong Jun: So the whole process still depends on a few key decisions to steer the technical direction. What about the startups, like IonQ?

Roger: IonQ’s path is mainly the ion trap. For a long time, even as recently as 10 years ago, ion traps were considered more promising than superconducting qubits. Quantum experiments with trapped ions are among the earliest quantum experiments humans ever performed, and that line of work has won several Nobel Prizes.

For a long time, before around 2007 to 2009, superconducting circuits were considered a very poor platform, because the qubits made experimentally at the time were of very low quality. Around 2009, researchers at Yale began making better and better qubits, and by 2014 the error-correction threshold could be reached, which is also when Google entered the field.
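The error-correction threshold is the physical error rate below which adding more redundancy makes logical errors rarer rather than more frequent. The sketch below uses the commonly quoted surface-code heuristic p_L ≈ A·(p/p_th)^((d+1)/2), where d is the code distance; the constants A = 0.1 and p_th = 1%, and the function names, are illustrative assumptions rather than figures from this interview.

```python
# Illustrative surface-code heuristic; A and P_TH are assumed values, not measurements.
A = 0.1
P_TH = 0.01  # assumed threshold physical error rate (about 1%)


def logical_error_rate(p: float, d: int) -> float:
    """Heuristic logical error rate per round at physical error rate p and code distance d."""
    # Capped at 1.0 so the heuristic never returns an impossible probability.
    return min(1.0, A * (p / P_TH) ** ((d + 1) / 2))


def trend(p: float, distances=(3, 5, 7, 9)) -> list:
    """Logical error rates for increasing code distances at a fixed physical error rate."""
    return [logical_error_rate(p, d) for d in distances]


if __name__ == "__main__":
    for p in (0.005, 0.015):  # one rate below the assumed threshold, one above
        side = "below" if p < P_TH else "above"
        rates = ", ".join(f"{r:.2e}" for r in trend(p))
        print(f"p = {p} ({side} threshold): {rates}")
```

Below the threshold the logical error rate falls as the code grows; above it, bigger codes only make things worse, which is why reaching the threshold was a turning point.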

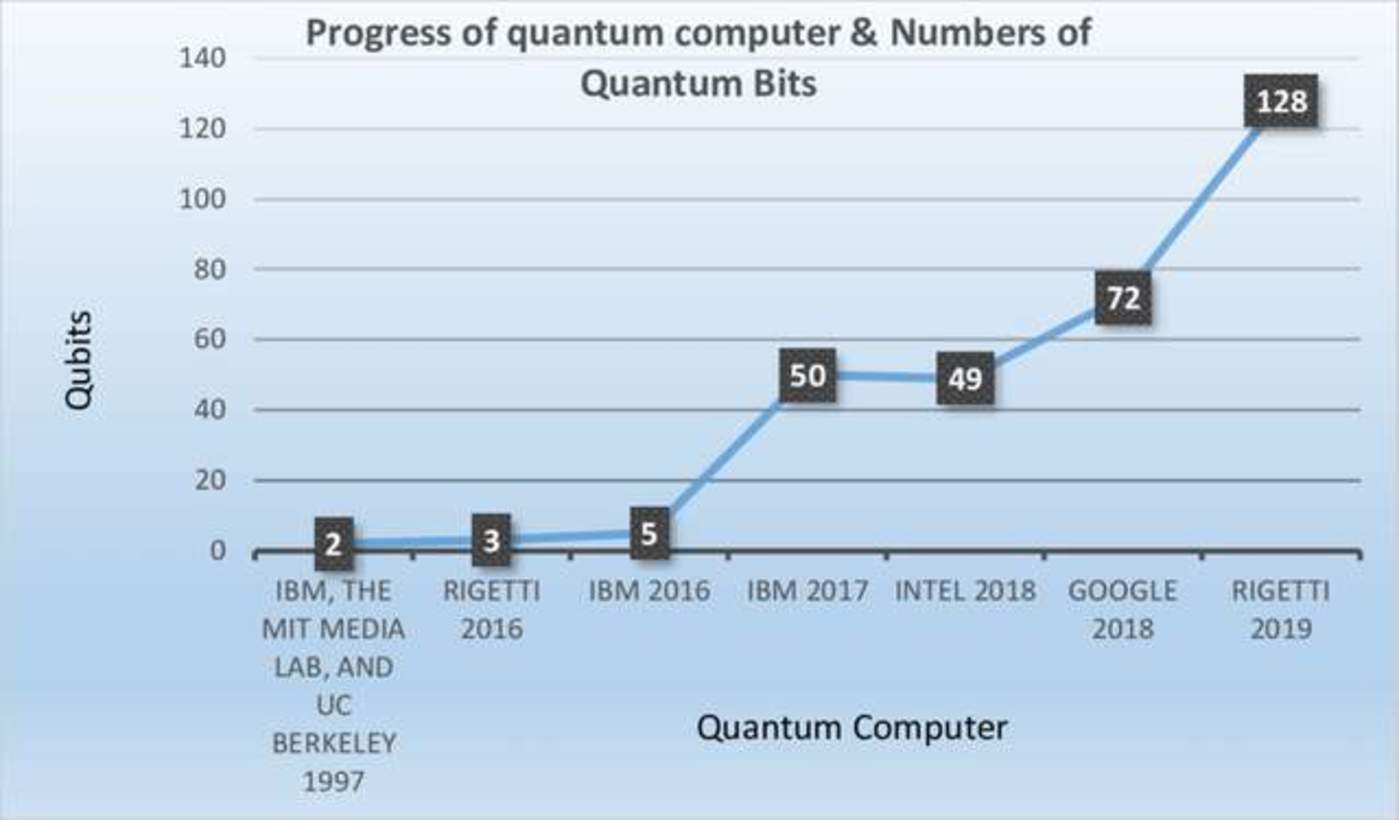

Why was the ion-trap path considered the best? Because it is the oldest quantum system, it is the easiest to control among small-scale quantum systems, and the ions can be moved around. So at the time, the company believed the ion-trap route might achieve commercial deployment, the so-called very useful quantum computer, sooner. In reality, though, the company’s qubit count has perhaps only doubled since its founding.

Hong Jun: How many qubits do they have now?

Roger: When they were founded in 2017, coming out of academia, the system they brought over from the university lab had 11 qubits that could be entangled and compute with one another. They published many papers that year, and it was genuinely good work. But it later turned out that on the ion-trap path, going beyond 11 qubits ran into huge challenges. Some are engineering challenges, which are hard for everyone but can be solved step by step. Others are scientific challenges.

Hong Jun: Scientific challenges are even harder; they require fundamental breakthroughs.

Roger: There is more uncertainty in them, and it takes more innovation to overcome them.

Hong Jun: So you just said they doubled it, which means about 22 qubits now.

Roger: Roughly, somewhere between twenty and thirty.

Hong Jun: What is the largest qubit count in the industry now?

Roger: For ion traps, the largest should be Honeywell’s 32. But the problem now is the trade-off that comes with more qubits: as the count grows, the operating performance of today’s ion traps, such as fidelity, gets closer and closer to that of superconducting qubits. Or rather, superconducting qubits are catching up with them. This has led to an interesting crossover: it was once thought that superconducting qubits could never exceed 99.9% fidelity, but now they can.
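Why does the gap between 99% and 99.9% fidelity matter so much? A rough back-of-the-envelope sketch, assuming every gate fails independently with probability 1 − F (real devices have correlated and non-uniform errors, so this is only an illustration), shows how quickly errors compound over a circuit. The function name is hypothetical.

```python
def circuit_success(fidelity: float, n_gates: int) -> float:
    """Probability that all n_gates succeed, assuming independent errors per gate."""
    return fidelity ** n_gates


if __name__ == "__main__":
    for f in (0.99, 0.999):
        print(
            f"F = {f}: 100 gates -> {circuit_success(f, 100):.3f}, "
            f"1000 gates -> {circuit_success(f, 1000):.2e}"
        )
```

Under this simplified model, a 1,000-gate circuit succeeds about 37% of the time at 99.9% fidelity but only about 0.004% of the time at 99%.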

Technological development is a very interesting process. You find that in the early stages there is no “overtaking on a curve,” no shortcut around the mainstream path. Take AI: on the way from the Transformer to GPT, you could not have sidestepped the Transformer by trial and error with a completely different architecture, right? Many people think they can overtake on the curve, but they roll over instead.

Hong Jun: A rollover on the curve.

Roger: So the problem with IonQ is that there is no clear path to reaching the scale superconducting qubits have already achieved. They will say they have a roadmap, but we need to see an actual demonstration.

Hong Jun: What about Rigetti?

Roger: Rigetti’s story is quite legendary. Chad Rigetti himself built early superconducting qubits at Yale; that was his doctoral thesis work. He then brought that setup to IBM, and IBM’s earliest roadmap was built on it. Later he founded his own company, and for various reasons some details of its superconducting qubits also changed.

I think Rigetti is a real pioneer among quantum computing startups. But he may have been a little too early: he founded the company in 2013. It is like founding an AI company before the Transformer: you may become a pioneer, but you may not be able to use the latest technology and information to make smarter decisions later. Rigetti as a company has fallen somewhat behind, both technically and commercially, and its founding CEO has since stepped down.

Cryptography in the post-quantum era: Banks and technology giants deploy a new generation of encryption technology

Hong Jun: Turning to VC investment in quantum computing this year, have you felt the market getting hotter, with money pouring in?

Roger: I think for now, because the rate cuts only began relatively recently, I don’t clearly sense traditional VC investment increasing yet.

Hong Jun: So what kind of investment will increase?

Roger: Strategic VC and sovereign (state-backed) funds. Take John Martinis, former head of quantum computing at Google: his new company, Qolab, raised US$16 million last year from the Development Bank of Japan and other institutions.

The three industries now at a sensitive stage are advanced semiconductors, AI, and quantum. You could sum it up this way: advanced semiconductors are the legendary past, AI is the booming present, and quantum computing is the inevitable future. During a rate-hike cycle, governments take more interest in this inevitable future. But as the rate-cut cycle begins, I think VCs will pay more attention to the whole industry, especially after Willow, though that will depend on the timing and length of the next rate-hike cycle.

Hong Jun: So the company you mentioned was funded by the Development Bank of Japan. My understanding is that once quantum computing arrives, the entire global cryptographic system will need to be upgraded for security. Are preparations already underway?

Roger: This has already begun. Two years ago, Biden issued an executive order requiring all federal agencies to move their encryption to so-called quantum-resistant encryption. Around last year, the National Institute of Standards and Technology (NIST) published three standards for post-quantum encryption, so the work actually started two or three years ago.

Last February, the Monetary Authority of Singapore recommended that all financial institutions in Singapore adopt post-quantum encryption and QKD (quantum key distribution) to keep application data from being leaked. This bears directly on the timeline question everyone raised earlier. Why have the HKMA and Singapore’s central bank been working on this since two years ago? HSBC has built a pilot network in the UK for post-quantum encryption and secure communications. Many other banks are doing the same; JPMorgan Chase, for example, is a very big player in this field. They have active infrastructure and development projects in post-quantum encryption and quantum communication, all of which is public information.

Hong Jun: So cryptography that resists quantum algorithms is booming as well.

Roger: This is actually very interesting. As I said, governments took the lead at first, which is understandable. But since last year the financial sector has made great progress here: basically every bank you have heard of, even central banks, has its own projects and joint projects in this area. So, back to the earlier question: I think you shouldn’t look at what people say, but at what they do. If a quantum computer able to break today’s encryption is still ten to fifteen years away, why is everyone in such a hurry to change their infrastructure now?

Hong Jun: So when do you think quantum computers will be able to break banks’ encryption? On the day Google released the Willow chip, I noticed the price of Bitcoin dropped sharply. In fact, there has long been a popular claim in the market that quantum computers could easily break Bitcoin’s cryptography.

Bitcoin’s cryptography actually has two parts: one is the hash algorithm behind its mining mechanism, and the other is its elliptic curve signature. Of the two, the elliptic curve signature is said to be the easiest to break, even easier than breaking the encryption of the traditional banking system. Is that your understanding?
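The mining half is a brute-force hash search rather than a number-theoretic problem, so Shor’s algorithm does not apply to it; the best known quantum speedup for this kind of search, Grover’s algorithm, is only quadratic. A toy proof-of-work sketch, assuming a simplified header and a “leading zero bits” difficulty rule (real Bitcoin uses an 80-byte header and a compactly encoded target); the function names and header bytes are hypothetical:

```python
import hashlib


def double_sha256(data: bytes) -> bytes:
    """SHA-256 applied twice, as Bitcoin does for block headers."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(header: bytes, difficulty_bits: int) -> int:
    """Toy proof of work: return the first nonce whose double-SHA-256 digest,
    read as a big-endian integer, has at least `difficulty_bits` leading zero bits."""
    target = 1 << (256 - difficulty_bits)
    nonce = 0
    while True:
        digest = double_sha256(header + nonce.to_bytes(8, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce
        nonce += 1


if __name__ == "__main__":
    nonce = mine(b"toy block header", 16)
    print("found nonce", nonce, "after", nonce + 1, "attempts")
```

Each extra difficulty bit doubles the expected classical work; a Grover-style search would roughly halve the exponent, not remove it.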

Jared: Yes, Bitcoin does have two cryptographic systems. Here we need to bring up an algorithm we discussed earlier: Shor’s algorithm.

It is a quantum algorithm designed for integer factorization and discrete logarithm problems, and it can break this kind of elliptic curve signature in polynomial time. The vulnerability is not specific to Bitcoin: any cryptographic system whose public key is exposed and whose security rests on these problems can be broken relatively easily by Shor’s algorithm.
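Shor’s algorithm breaks factoring by reducing it to finding the order r of a random number a modulo n; the quantum computer’s only job is that order-finding step. The sketch below keeps the classical reduction and swaps the quantum step for brute force, an illustrative substitution that only works for tiny n. The elliptic-curve attack uses the same period-finding idea on discrete logarithms, which this sketch does not implement. Function names are hypothetical.

```python
import math
import random


def find_order_classically(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).
    Shor's algorithm does this step on a quantum computer; brute force here
    is exponential in the bit length of n."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_order_finding(n: int, seed: int = 0) -> tuple:
    """Classical skeleton of Shor's reduction; n must be an odd composite, not a prime power."""
    rng = random.Random(seed)
    while True:
        a = rng.randrange(2, n - 1)
        g = math.gcd(a, n)
        if g > 1:  # the random guess already shares a factor with n
            return g, n // g
        r = find_order_classically(a, n)
        if r % 2 == 1:
            continue  # need an even order
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue  # a**(r/2) is -1 mod n, which gives only trivial factors
        p = math.gcd(y - 1, n)  # nontrivial because n divides (y-1)(y+1) but neither factor
        return p, n // p


if __name__ == "__main__":
    print(factor_via_order_finding(3233))
```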

This is different from the banking system, which does not expose a public key: the banking system’s keys are confidential and never revealed. But the public keys of Bitcoin users’ wallets are public and traceable on the chain; anyone can read them through the blockchain network.

Therefore, in the era without quantum computers, even if you obtained a public key, you could not realistically compute the private key from it. Strictly speaking, it is not absolutely impossible, but the cost is enormous: it could take tens of thousands of years. With a quantum computer running Shor’s algorithm, however, once the machine is powerful enough, computing the private key from the public key becomes entirely feasible.
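The asymmetry described here can be seen on a toy elliptic curve: the private key is an integer k, the public key is the point k·G, and computing k·G is fast (double-and-add), while recovering k from the public key is the elliptic-curve discrete logarithm problem. The sketch assumes the textbook curve y² = x³ + 2x + 2 over a 17-element field with generator (5, 1), not Bitcoin’s secp256k1; on this toy curve brute force is instant, whereas on a 256-bit curve the same search is infeasible classically. Function names are hypothetical.

```python
# Toy curve y^2 = x^3 + 2x + 2 over F_17 (a textbook example, far too small to be secure).
P_MOD, A_COEF = 17, 2
G = (5, 1)
INF = None  # point at infinity (group identity)


def add(p1, p2):
    """Elliptic-curve point addition on the toy curve."""
    if p1 is INF:
        return p2
    if p2 is INF:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return INF  # P + (-P) = identity
    if p1 == p2:
        lam = (3 * x1 * x1 + A_COEF) * pow(2 * y1 % P_MOD, -1, P_MOD) % P_MOD
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD) % P_MOD
    x3 = (lam * lam - x1 - x2) % P_MOD
    y3 = (lam * (x1 - x3) - y1) % P_MOD
    return (x3, y3)


def scalar_mult(k, point):
    """Compute k*point with double-and-add: fast even for huge k."""
    result, addend = INF, point
    while k:
        if k & 1:
            result = add(result, addend)
        addend = add(addend, addend)
        k >>= 1
    return result


def brute_force_private_key(public_key):
    """Recover k from k*G by trying k = 1, 2, 3, ... (the discrete-log search)."""
    current = G
    for k in range(1, 2 * P_MOD + 2):  # exceeds the group order bound for this curve
        if current == public_key:
            return k
        current = add(current, G)
    raise ValueError("public key is not a multiple of G")


if __name__ == "__main__":
    private_key = 7
    public_key = scalar_mult(private_key, G)
    print("public key:", public_key, "recovered:", brute_force_private_key(public_key))
```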

Hong Jun: How many qubits does it take to break it? Some people say 4,000 qubits are needed, and we are still a long way from 4,000 qubits.

Roger: Let me correct that first. Whether it is 4,000 or 3,000, Shor’s algorithm requires the qubits to be error-free, that is, a fully fault-tolerant quantum computer. You need 4,000 such qubits, a so-called large-scale fault-tolerant quantum computer, to run it. That is what Huang Renxun calls the “very useful quantum computer.”
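The 4,000 figure counts error-free logical qubits, each of which must be built from many physical qubits. A rough sketch of that overhead, assuming a rotated surface code (2d² − 1 physical qubits per logical qubit at distance d) and an illustrative heuristic for the logical error rate with A = 0.1 and a 1% threshold; these constants, the target error rate, and the function names are assumptions, not figures from the interview.

```python
# Assumptions: rotated surface code with 2*d*d - 1 physical qubits per logical qubit,
# and logical error per round of A * (p / P_TH) ** ((d + 1) / 2).
A = 0.1
P_TH = 0.01


def logical_error_rate(p: float, d: int) -> float:
    return A * (p / P_TH) ** ((d + 1) / 2)


def required_distance(p: float, target: float) -> int:
    """Smallest odd code distance whose heuristic logical error rate is below target."""
    if p >= P_TH:
        raise ValueError("physical error rate must be below the threshold")
    d = 3
    while logical_error_rate(p, d) >= target:
        d += 2
    return d


def physical_qubits(n_logical: int, p: float, target: float) -> int:
    """Total physical qubits for n_logical logical qubits under the assumptions above."""
    d = required_distance(p, target)
    return n_logical * (2 * d * d - 1)


if __name__ == "__main__":
    print(physical_qubits(4000, 2e-3, 1e-12))
```

With these assumed numbers, 4,000 logical qubits at a 0.2% physical error rate and a 10⁻¹² logical target need distance 31, or roughly 7.7 million physical qubits, which is why logical and physical qubit counts should not be confused.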

By his prediction, that is about fifteen years away. But judging by what everyone is actually doing, that is clearly not what they believe. After all, once something starts to threaten their wallets, people become much more sensitive. So I think a quantum computer able to break today’s encryption, the so-called large-scale fault-tolerant quantum computer, may be about ten years away. Many institutions and companies, especially banks, as well as players in the Bitcoin space, have already begun deploying this kind of encryption themselves. The reason is simple: what if?

This is not a certainty; it is an estimate, right? Take the GPT moment: honestly, in 2021 and early 2022, people didn’t see that progress coming. At the time, it was generally believed that AI like GPT that could pass the Turing test was 8 to 10 years away. In fact, it took only a few months.

So after that experience, many people realized that a technological breakthrough is a bit like an explosion: you can’t predict when it will happen. I can only say that my reasonable guess is about 10 years. But if it came in five years, or even next year, I wouldn’t be terribly surprised. No law of physics says it is impossible; it is essentially an engineering problem.

Hong Jun: You just said a fully fault-tolerant quantum computer could appear quickly, though the timing is uncertain. Can I think of it as a race to see which comes first? If such powerful quantum computing arrives first, it will pose a very big threat to cryptographic systems that have not yet been upgraded. On the other hand, if we have already made many upgrades to quantum-resistant cryptography, it may be a very smooth transition.

Roger: First, replacing the cryptography itself is relatively simple. Post-quantum encryption is a replacement of the encryption mechanism, which is largely a software upgrade or a hardware replacement; for example, high-speed encryption may need a dedicated piece of hardware. And since NIST published three standard post-quantum algorithms last year, commercially you just need to comply with the standards and configure them. HSBC, for example, released its own crypto token in Hong Kong last year, and it comes with post-quantum encryption.
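One of the three NIST standards, SLH-DSA (FIPS 205), is a hash-based signature scheme: its security rests on hash functions rather than on factoring or discrete logarithms, so Shor’s algorithm does not apply. The sketch below is a Lamport one-time signature, the simplest ancestor of that family; it is not SLH-DSA itself, each key pair must sign only one message, and the function names are hypothetical.

```python
import hashlib
import secrets


def keygen():
    """256 pairs of random 32-byte secrets; the public key is their SHA-256 hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(hashlib.sha256(a).digest(), hashlib.sha256(b).digest()) for a, b in sk]
    return sk, pk


def message_bits(message: bytes):
    """The 256 bits of SHA-256(message), most significant bit first."""
    digest = hashlib.sha256(message).digest()
    return [(byte >> (7 - i)) & 1 for byte in digest for i in range(8)]


def sign(sk, message: bytes):
    """Reveal one secret from each pair, selected by the corresponding message-hash bit."""
    return [pair[bit] for pair, bit in zip(sk, message_bits(message))]


def verify(pk, message: bytes, signature) -> bool:
    """Check that every revealed secret hashes to the matching public-key entry."""
    return len(signature) == 256 and all(
        hashlib.sha256(s).digest() == pair[bit]
        for s, pair, bit in zip(signature, pk, message_bits(message))
    )


if __name__ == "__main__":
    sk, pk = keygen()
    sig = sign(sk, b"transfer 100")
    print(verify(pk, b"transfer 100", sig), verify(pk, b"transfer 999", sig))
```

Standardized schemes combine many such one-time keys in hash trees so that one public key can sign many messages.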

Post-quantum encryption is not a slow process in itself; you just have to do it. What is more complicated is the communications side, called QKD. Many banks are working on QKD too. It does not rely on the encryption algorithm itself to resist quantum attacks, but on physics. That is another way to do it.
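“Relying on physics” means that measuring a quantum state in the wrong basis disturbs it, so an eavesdropper leaves detectable errors. The sketch below is a classical simulation of the sifting step of BB84, the best-known QKD protocol, with an optional intercept-resend eavesdropper; it is an illustration only, omits the error correction and privacy amplification real systems perform, and its function names are hypothetical.

```python
import random


def bb84(n: int, eavesdrop: bool, seed: int = 1):
    """Simulate BB84 sifting; returns (sifted_alice_bits, sifted_bob_bits)."""
    rng = random.Random(seed)
    alice_bits = [rng.randint(0, 1) for _ in range(n)]
    alice_bases = [rng.randint(0, 1) for _ in range(n)]  # 0 = rectilinear, 1 = diagonal
    bob_bases = [rng.randint(0, 1) for _ in range(n)]

    bob_bits = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        photon_bit, photon_basis = bit, a_basis
        if eavesdrop:
            # Intercept-resend: Eve measures in a random basis and re-sends her result.
            e_basis = rng.randint(0, 1)
            if e_basis != photon_basis:
                photon_bit = rng.randint(0, 1)  # wrong basis: her result is random
            photon_basis = e_basis
        # Bob gets the encoded bit only if he measures in the photon's basis.
        bob_bits.append(photon_bit if b_basis == photon_basis else rng.randint(0, 1))

    # Alice and Bob publicly compare bases (not bits) and keep only matching positions.
    keep = [i for i in range(n) if alice_bases[i] == bob_bases[i]]
    return [alice_bits[i] for i in keep], [bob_bits[i] for i in keep]


def error_rate(a, b):
    return sum(x != y for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    for eve in (False, True):
        a, b = bb84(20000, eve)
        print(f"eavesdropper={eve}: sifted {len(a)} bits, error rate {error_rate(a, b):.3f}")
```

Without an eavesdropper the sifted keys agree exactly; with intercept-resend, about a quarter of the sifted bits disagree, which is how the parties detect the attack.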

But this is slower, because it involves things like fiber-optic networks. Many banks are pushing it themselves, though. A bank partner of ours even told us that, interestingly, banks are now asking telecom companies such as AT&T to deploy fiber QKD networks to help them communicate securely on Wall Street.

That is quite interesting: the situation is reversed, with the banks pushing the telecom companies, because the telecom companies couldn’t do it on their own and the big banks were doing it themselves. So, from a technical perspective, I’m not very worried about the financial system or virtual currency systems collapsing because of improved decryption capabilities. There is a great line in The Three-Body Problem: arrogance is the biggest problem.

Hong Jun: And “I destroy you, and it has nothing to do with you.”

Roger: I think everyone, including banks and financial institutions, is doing a good job of building defenses now. You might think they are arrogant, but they actually want to play it safe. So I think even cryptocurrencies can iterate their encryption methods to make them more secure. This can be done; it isn’t rocket science.

We are at a very interesting stage now. Many big companies and countries think there must be a first-mover dividend somewhere, and they would rather capture it than let others get it. That has brought us a relatively big opportunity: we can supply quantum computing equipment, or add equipment, to large companies and infrastructure projects. In essence, we have become a server provider, and that is mainly how our current customers see us. Our largest revenue comes from supplying our quantum computers to these data centers.

Hong Jun: Will quantum computing affect ordinary people’s daily lives, or will it just operate silently in high-tech fields?

Roger: This is a bit like the early days of computers. What did they serve? The infrastructure needs of large multinational institutions, or even national governments. Quantum computers will mostly meet back-end needs and support future chemical, financial, or AI products that serve ordinary people.

It’s a bit like the GPU. For many years GPUs were mostly the domain of gamers, and for a while they were used for mining. Now GPUs are gradually reaching the stage where you deal with them whether you play games or not, because they serve the public from the data center.

I think quantum computing will go through the same process. It will first serve specific high-value customers, as early computers did, such as infrastructure projects and large institutions, and through them indirectly serve ordinary people. And by the time the “very useful quantum computer” Huang Renxun describes arrives, it won’t just mean that quantum computers have reached a mature form. I even think that by then the industrial chain will be more mature and production more efficient, so costs will keep falling.

Computers were very expensive in the early days. IBM was one of the first companies to make computer products, and its chairman at the time, Thomas Watson, made the classic misjudgment that the world needed only about five computers. He reckoned that the only users would be governments, the military, and banks. Later it became clear this was an obvious mistake: as more people used computers, they found more uses for them, which created more demand. More demand drives more production, and more production lowers costs.