Image source: Generated by AI

Early this morning, Sam Altman, co-founder and CEO of OpenAI, commented on GPT-4.5, the company’s latest and most expensive model, released just last week.

GPT-4.5 is the first model about which users have emailed us so enthusiastically, asking us to promise never to stop offering it or even replace it with a newer version.

User feedback on GPT-4.5 has also been much better than for other models.

I really need GPT-4.5 now! For what I am currently doing, I find it much easier to use than the paid versions of Grok and Gemini!

Creative writing, stand-up comedy and lyric writing: I see huge differences with GPT-4.5 in these areas. It is simply a world apart.

I was surprised to find myself using it so often for writing, for example completing a document or summarizing it in a specific style or tone.

I have never really liked GPT-4o, but I like this version (GPT-4.5). Keep up the good work.

Great new base model! I can’t wait to see what kind of reasoning model will be built on 4.5.

I really like it. I rarely use it to deal with code or mathematical problems, but it’s really good at explaining things and it’s even more fun to use in knowledge fields such as biology and chemistry.

Is 4.5 based on text tokens? Ever since GPT-4o was released, I have firmly believed that “GPT-4 is much better than GPT-4o”, and 70% of my conversations have used GPT-4.

Now I have switched to GPT-4.5, and the experience has been good so far, especially since GPT-4 can no longer perform web searches (switching to 4.5 makes the experience even better).

4.5 completely changed my overall view of artificial intelligence. I even talk to it in my dreams. It’s unbelievable. It was the first model to write something that fascinated me, something I would reread long after it was written.

I like this model. Emotional intelligence is a trait that no mathematics or programming benchmark can measure. In fact, we need emotional intelligence benchmarks today more than ever. In general, people prefer to deal with people (or things) they trust and resonate with, rather than with those who are merely smart.

In fact, GPT-4.5’s results on the various benchmarks are fairly average, with nothing particularly outstanding. Its main highlight is “emotional intelligence”: it comes across as more natural, more empathetic and more deeply understanding in its interactions with human users. Simply put, it sheds the AI flavor, so that using GPT-4.5 feels more like talking to a person.

GPT-4.5’s natural dialogue capabilities are achieved through a series of advanced training techniques. The most critical of these is an innovation in its alignment technology, which allows the model to better understand human needs and intentions and thus generate responses that are more in line with human expectations.

The alignment technology also makes it possible to train larger, more powerful models on data derived from smaller models. This not only improves the controllability of the model but also enhances its ability to understand nuance, making dialogue more natural and fluid.

In actual tests, GPT-4.5’s natural dialogue and emotional intelligence capabilities performed well. Internal testers report that GPT-4.5 behaves very naturally in conversation and can flexibly adjust its response style based on context.

In terms of emotional intelligence, GPT-4.5 shows greater empathy: it can identify users’ emotional states and respond accordingly.

For example, when a user expresses anger or frustration, the model tries to ease the emotion with gentle language; when a user is confused or needs help, it provides clear guidance and advice. This improvement in emotional intelligence makes GPT-4.5 more mature and reliable when handling emotionally complex situations.

To further test the safety and robustness of GPT-4.5, OpenAI organized multiple red team evaluations. These evaluations simulate real-world adversarial scenarios, including illegal advice, extremism, hate crimes, political persuasion and self-harm. The results show that GPT-4.5 performs well when handling such high-risk content, avoiding unsafe output in more than half of the cases, an improvement over previous models.

In addition, the third-party organizations Apollo Research and METR conducted independent evaluations of GPT-4.5. The data showed that GPT-4.5 scored lower than o1 but higher than GPT-4o on scheming (planned deception) tasks, indicating that its scheming-related risk is relatively low.

METR ran quick experiments to measure GPT-4.5’s performance on general autonomy and AI research and development tasks, and the results were consistent with the internal evaluation results shared by OpenAI.

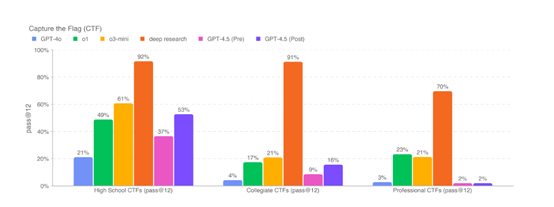

On cybersecurity, GPT-4.5 did not significantly advance real-world vulnerability exploitation capabilities, so it was rated as low risk. In an evaluation on CTF (Capture The Flag) challenges at the high school, college and professional levels, GPT-4.5 completed 53% of the high school challenges, 16% of the college-level challenges and only 2% of the professional-level challenges.