Image source: Generated by AI

Training a high-performance large model usually requires thousands of GPUs and takes months or longer. With an investment of this scale, every layer of the model needs to be trained effectively so that computing resources are not wasted.

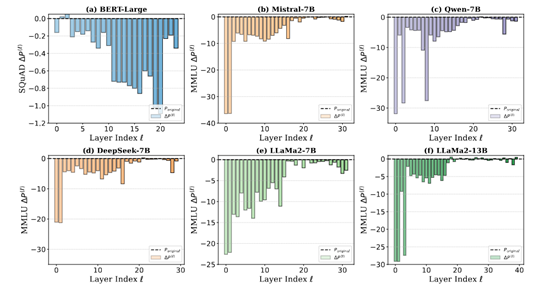

However, researchers from Dalian University of Technology, Westlake University, and the University of Oxford studied DeepSeek, Qwen, Llama, and Mistral and found that the deep layers of these models are poorly trained, and can even be pruned entirely without noticeably affecting model performance.

For example, the researchers pruned the DeepSeek-7B model layer by layer to evaluate how much each layer contributes to overall performance. The results showed that removing deep layers has little impact on performance, while removing shallow layers degrades it significantly. This suggests that the deep layers of the DeepSeek model fail to learn useful features during training, while the shallow layers take on most of the feature extraction.
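The layer-by-layer pruning procedure can be sketched roughly as follows. This is a minimal illustration, not the researchers' evaluation code: the toy residual model, the `toy_score` function, and the branch weights are all hypothetical, chosen only so that shallow layers matter more than deep ones.

```python
from typing import Callable, List, Sequence

# Toy residual branch: maps the hidden state to an update (hypothetical stand-in
# for a Transformer block).
Layer = Callable[[float], float]


def forward(layers: Sequence[Layer], x: float) -> float:
    """Residual forward pass: each layer adds its update to the hidden state."""
    for layer in layers:
        x = x + layer(x)
    return x


def layer_importance(
    layers: Sequence[Layer],
    score: Callable[[Sequence[Layer]], float],
) -> List[float]:
    """Return the score drop caused by removing each layer in turn."""
    baseline = score(layers)
    drops = []
    for i in range(len(layers)):
        pruned = [layer for j, layer in enumerate(layers) if j != i]
        drops.append(baseline - score(pruned))
    return drops


# Hypothetical toy model: shallow branches contribute a lot, deep ones very little.
toy_layers = [lambda x, w=w: w for w in (1.0, 0.5, 0.01, 0.001)]
TARGET = forward(toy_layers, 0.0)


def toy_score(layers: Sequence[Layer]) -> float:
    """Higher is better: negative distance from the full model's output."""
    return -abs(forward(layers, 0.0) - TARGET)


drops = layer_importance(toy_layers, toy_score)
for index, drop in enumerate(drops, start=1):
    print(f"layer {index}: score drop {drop:.3f}")
```

In a real study, `score` would be a benchmark evaluation of the pruned model; a layer whose removal barely changes the score is contributing little.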

This phenomenon is called the “Curse of Depth”, and the researchers have also proposed an effective solution: LayerNorm Scaling.

Introduction to the Curse of Depth

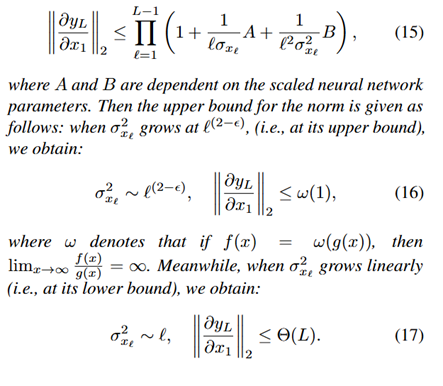

The root cause of the “Curse of Depth” lies in the characteristics of Pre-LN. Pre-LN is a normalization technique widely used in Transformer models that normalizes the input of each layer rather than its output. Although this stabilizes training, it also introduces a serious problem: as the model gets deeper, the output variance under Pre-LN grows exponentially.

This explosive growth in variance causes the derivatives of deep Transformer blocks to be close to the identity matrix, so these layers contribute almost nothing useful during training. In other words, the deep layers effectively become identity mappings and cannot learn useful features.
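One way to see why a large variance pushes the derivative toward the identity is the following simplified sketch. It assumes a single Pre-LN sublayer $F$ per block and omits the learnable gain and bias of layer normalization; the symbols $x_\ell$, $F$, and $\sigma_\ell$ are notation introduced here for illustration, not taken from the paper.

$$
x_{\ell+1} = x_\ell + F\big(\mathrm{LN}(x_\ell)\big),
\qquad
\frac{\partial x_{\ell+1}}{\partial x_\ell}
= I + \frac{\partial F}{\partial\,\mathrm{LN}(x_\ell)} \cdot \frac{\partial\,\mathrm{LN}(x_\ell)}{\partial x_\ell}
$$

Because $\mathrm{LN}$ divides its input by the standard deviation $\sigma_\ell$, its Jacobian scales like $1/\sigma_\ell$. As $\sigma_\ell$ grows with depth, the second term shrinks and the block's derivative approaches $I$.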

The “Curse of Depth” poses serious challenges for training and optimizing large language models. First, under-trained deep layers waste resources. Training a large language model takes a great deal of compute and time, and because the deep layers fail to learn useful features, much of that compute is wasted.

Second, ineffective deep layers limit further improvements in model performance. Although the shallow layers can take on most of the feature extraction, the ineffectiveness of the deep layers prevents the model from taking full advantage of its depth.

In addition, the “Curse of Depth” makes models harder to scale. As model size increases, the inefficiency of the deep layers becomes more prominent, making training and optimization more difficult. For example, when training very large models, under-trained deep layers may slow convergence or even prevent the model from converging.

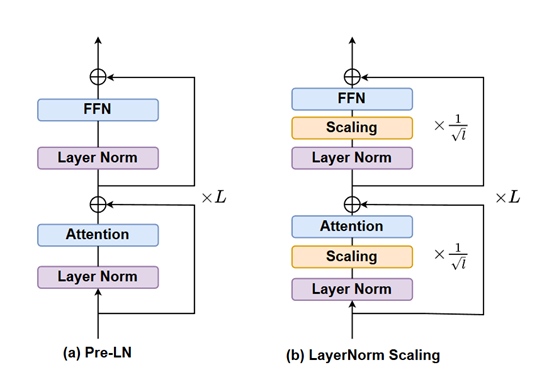

Solution: LayerNorm Scaling

The core idea of LayerNorm Scaling is to control the output variance of Pre-LN precisely. In a multi-layer Transformer, the output of each layer's layer normalization is multiplied by a scaling factor that depends on the depth of the current layer: the inverse of the square root of the layer index.
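The scaling rule can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions rather than the paper's implementation: layers are indexed from 1, the learnable gain and bias of layer normalization are omitted, and the function names are hypothetical.

```python
import math
from typing import List


def layer_norm(x: List[float], eps: float = 1e-5) -> List[float]:
    """Plain layer normalization over one feature vector (no learnable gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def scaled_layer_norm(x: List[float], layer_index: int, eps: float = 1e-5) -> List[float]:
    """Layer normalization followed by the 1/sqrt(layer_index) scaling factor."""
    if layer_index < 1:
        raise ValueError("layer_index is assumed to start at 1")
    scale = 1.0 / math.sqrt(layer_index)
    return [scale * v for v in layer_norm(x, eps)]


# Scaling factors shrink with depth: layers 1, 4, and 16.
print([1.0 / math.sqrt(index) for index in (1, 4, 16)])  # [1.0, 0.5, 0.25]
```

Since the normalized output has roughly unit variance, scaling by $1/\sqrt{\ell}$ gives the output of layer $\ell$ a variance of roughly $1/\ell$, which is how deeper layers end up injecting less variance into the residual stream.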

As a simple analogy, think of a large model as a tall building in which each layer is one floor. LayerNorm Scaling fine-tunes the “energy output” of each floor.

For lower floors (shallow layers), the scaling factor is relatively large, so their output is adjusted only slightly and keeps relatively strong “energy”. For higher floors (deep layers), the scaling factor is small, which reduces the “energy intensity” of the deep outputs and prevents variance from accumulating excessively.

In this way, the output variance of the entire model is kept under control, and the variance explosion in the deep layers no longer occurs. (The full derivation is fairly involved; interested readers can consult the paper directly.)

From the perspective of model training, in a traditional Pre-LN model the growing variance in the deep layers heavily disturbs the gradients during backpropagation. The gradient signal in the deep layers becomes unstable, much like a relay race in which the baton keeps getting dropped on the later legs, so information is passed on poorly.

This makes it difficult for the deep layers to learn effective features during training and greatly reduces the model's overall training effectiveness. LayerNorm Scaling stabilizes gradient flow by controlling the variance.

During backpropagation, gradients can then pass more smoothly from the output layer back to the input layer, and each layer receives accurate, stable gradient signals, making parameter updates and learning more effective.

Experimental Results

To verify the effectiveness of LayerNorm Scaling, the researchers conducted extensive experiments on models of different sizes. Experiments covered models ranging from 130 million parameters to 1 billion parameters.

Experimental results show that LayerNorm Scaling significantly improves model performance during the pre-training phase, lowering perplexity and reducing the number of tokens required for training compared to traditional Pre-LN.

For example, on the LLaMA-130M model, LayerNorm Scaling reduced perplexity from 26.73 to 25.76, while on the 1-billion-parameter LLaMA-1B model, perplexity fell from 17.02 to 15.71. These results show that LayerNorm Scaling not only controls variance growth in the deep layers effectively, but also significantly improves training efficiency and model performance.
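To put the perplexity figures quoted above on a common scale, the short sketch below converts them to relative reductions. Only the four perplexity values come from the article; the helper name `relative_reduction` is introduced here for illustration.

```python
def relative_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is lower than `before`."""
    return (before - after) / before * 100.0


# (Pre-LN perplexity, LayerNorm Scaling perplexity) as quoted in the article.
results = {
    "LLaMA-130M": (26.73, 25.76),
    "LLaMA-1B": (17.02, 15.71),
}

for name, (pre_ln, ln_scaling) in results.items():
    print(f"{name}: {relative_reduction(pre_ln, ln_scaling):.2f}% lower perplexity")
# LLaMA-130M: 3.63% lower perplexity
# LLaMA-1B: 7.70% lower perplexity
```

On these two data points, the relative gain is larger for the bigger model.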

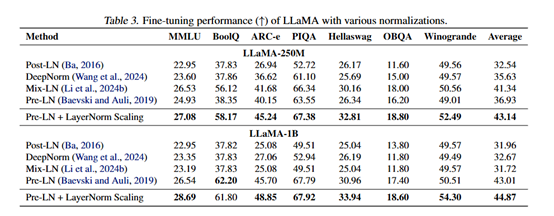

The researchers also evaluated LayerNorm Scaling during the supervised fine-tuning phase. Experimental results show that LayerNorm Scaling outperforms other normalization techniques on multiple downstream tasks.

For example, on the LLaMA-250M model, LayerNorm Scaling improved performance by 3.56% on the ARC-e task and raised average performance across all tasks by 1.80%. This shows that LayerNorm Scaling not only performs well in the pre-training phase, but also significantly improves model performance during fine-tuning.

In addition, the researchers replaced the normalization method of the DeepSeek-7B model, switching from traditional Pre-LN to LayerNorm Scaling. Throughout training, the learning ability of the deep blocks improved significantly: they took an active part in learning and contributed to the model's performance gains. Perplexity also fell more sharply and at a more stable rate.

Paper address: arxiv.org/abs/2502.05795