By Musical Herald

Korean entertainment companies are fighting back against the abuse of AI.

Last week, South Korean entertainment company HYBE announced that it would work with the Northern Gyeonggi Provincial Police Agency (NGPPA) to crack down on deepfake content targeting HYBE artists.

HYBE and the NGPPA signed an agreement setting out the details of their cooperation, including speeding up investigations of related cases and setting up a reporting hotline in South Korea so that the public can report related crimes. HYBE also announced that it will step up its monitoring and handling of deepfake content, especially pornographic content, and will set up a dedicated response mechanism so that it can act as quickly as possible.

In September last year, South Korean entertainment companies JYP, YG and Cube also declared war on deepfake visual content created from images of their K-pop artists. YG pointed out that well-known female K-pop acts, including (G)I-dle, NewJeans, Twice, Kwon Eun-bi and BlackPink, are among the victims of deepfake content.

In recent years, the proliferation of deepfake content has drawn global attention, especially malicious attacks on the entertainment industry. So why has deepfake technology zeroed in on K-pop? What ugly appetites lie behind it?

Female stars trapped in AI porn videos

Deepfake is an artificial intelligence technology that relies on deep learning, especially generative adversarial networks (GANs), to generate or tamper with images and videos. A GAN consists of a generator and a discriminator. Through a continuous contest between the two, the generator gradually learns to produce more realistic fake content until it can pass for the real thing.
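
The contest between generator and discriminator is commonly written as a minimax objective. As a sketch in the standard notation of the GAN literature (the symbols $G$, $D$, $x$, $z$, $p_{\text{data}}$ and $p_z$ do not appear in the reports cited here and are introduced only for illustration):

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Here $D(x)$ is the discriminator’s estimate of the probability that a sample is real, and $G(z)$ is the fake sample the generator produces from random noise $z$. The discriminator tries to push $V$ up by telling real from fake, while the generator tries to push it down by making fakes that the discriminator accepts as real.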

With deepfakes, a person’s face, voice and movements can be swapped into another video or picture to make it seem as if something really happened, for example by making someone appear to say something they never said or do something they never did.

In the South Korean entertainment industry, the images of many female stars are used by criminals to produce harmful content such as parodies and pornography, seriously infringing on the artists’ privacy and reputation.

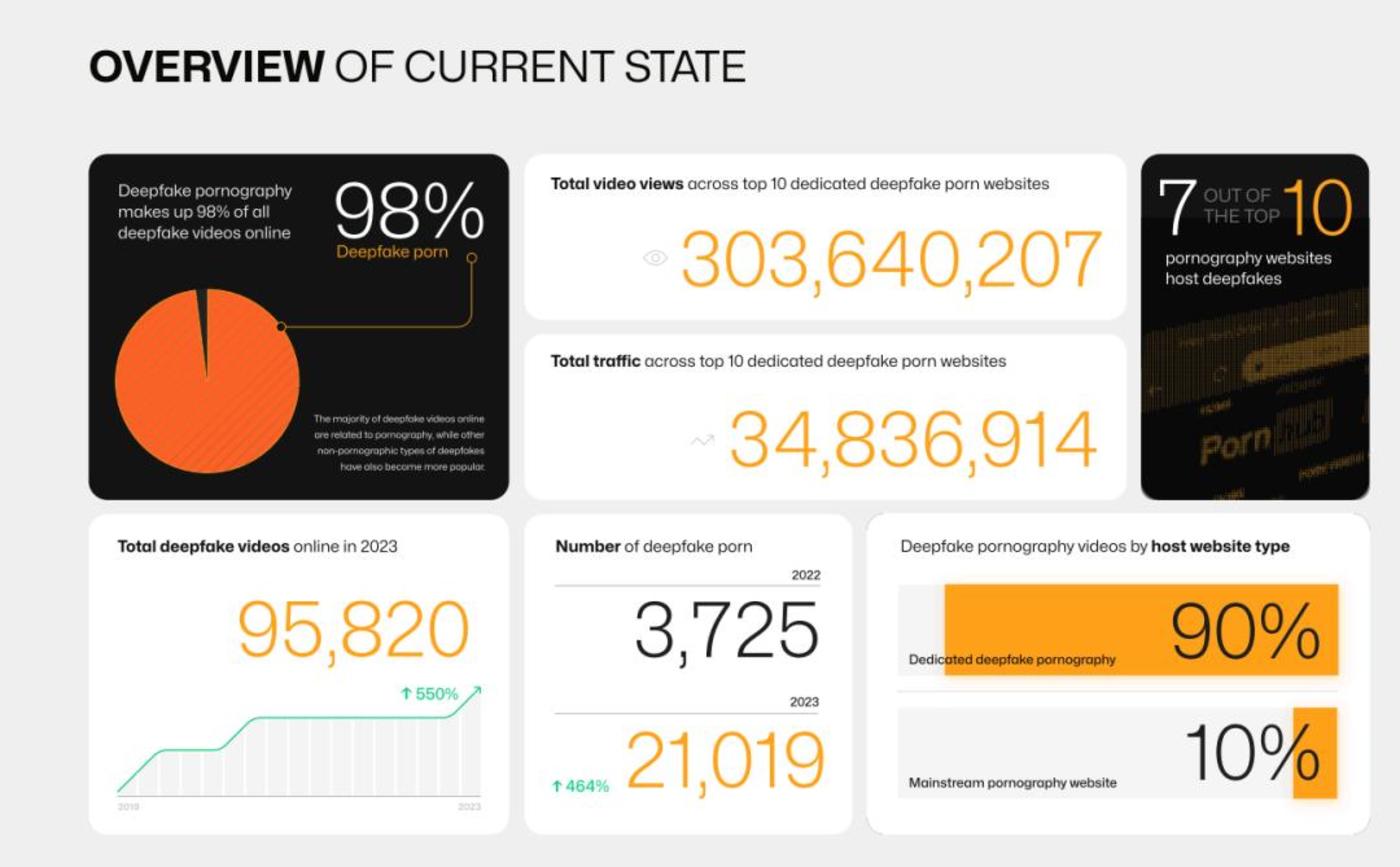

A report by Home Security Heroes, a US cybersecurity company, shows that almost 98% of all deepfake videos on the Internet are pornographic. On the top 10 websites dedicated to deepfake pornography, the videos have been played more than 300 million times in total, and visits exceed 30 million.

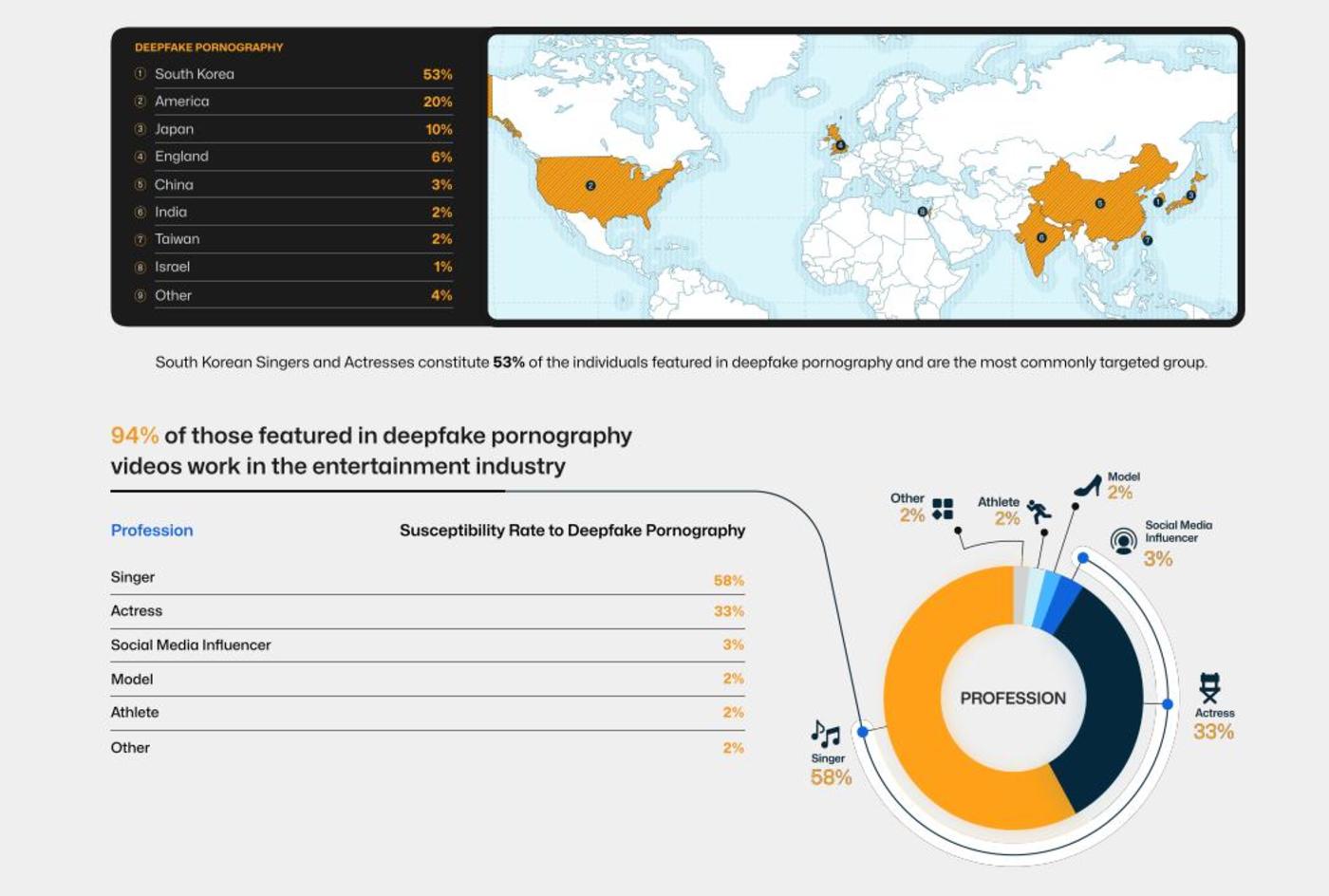

The report also pointed out that 94% of the people featured in deepfake pornography work in the entertainment industry, with singers and actors accounting for 58% and 33% respectively. Among the victims of deepfake pornography, 99% are women and only 1% are men.

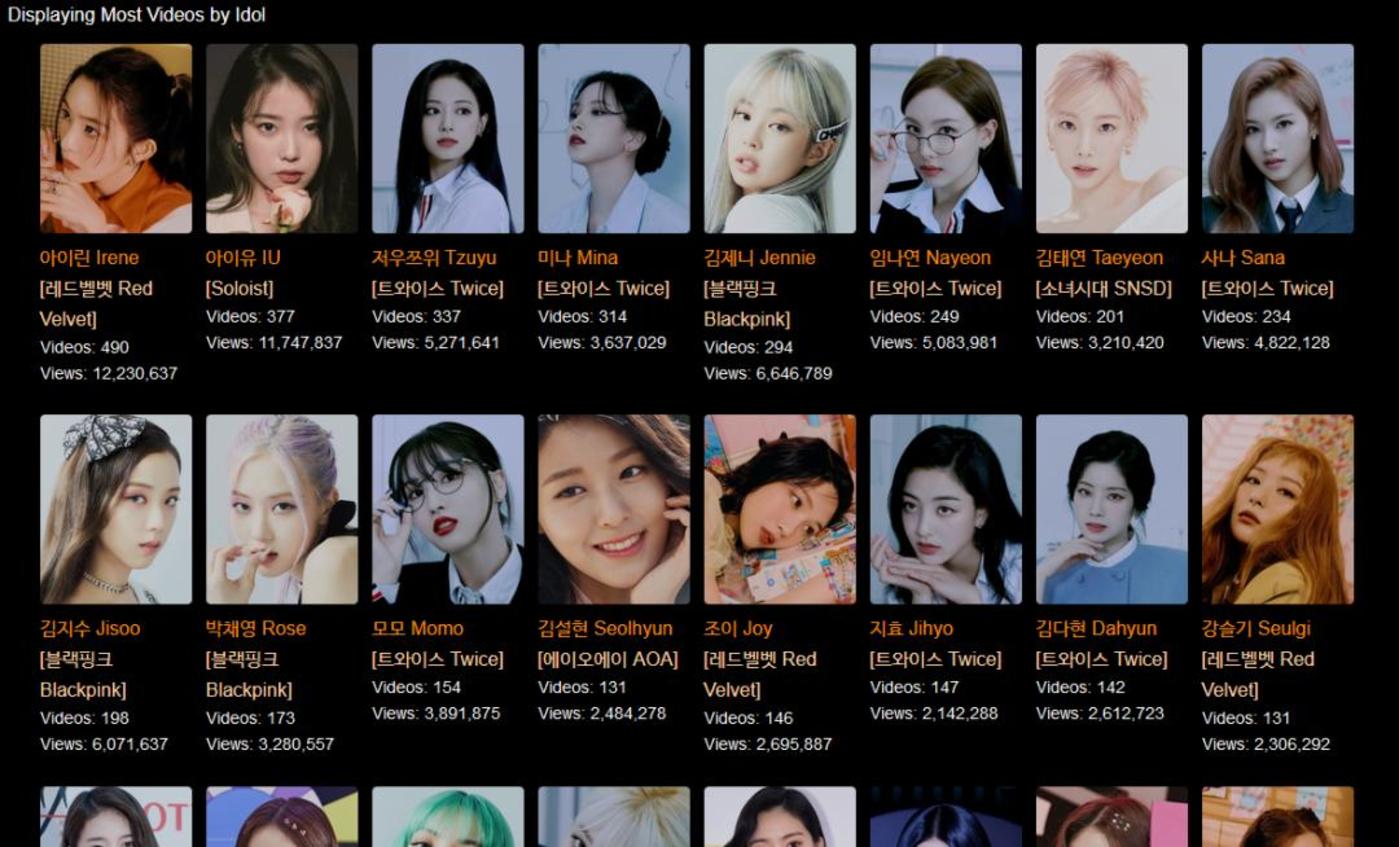

Of the nearly 96,000 videos posted on 10 deepfake pornography websites and 85 video-sharing platforms, 53% featured South Korean singers and actors.

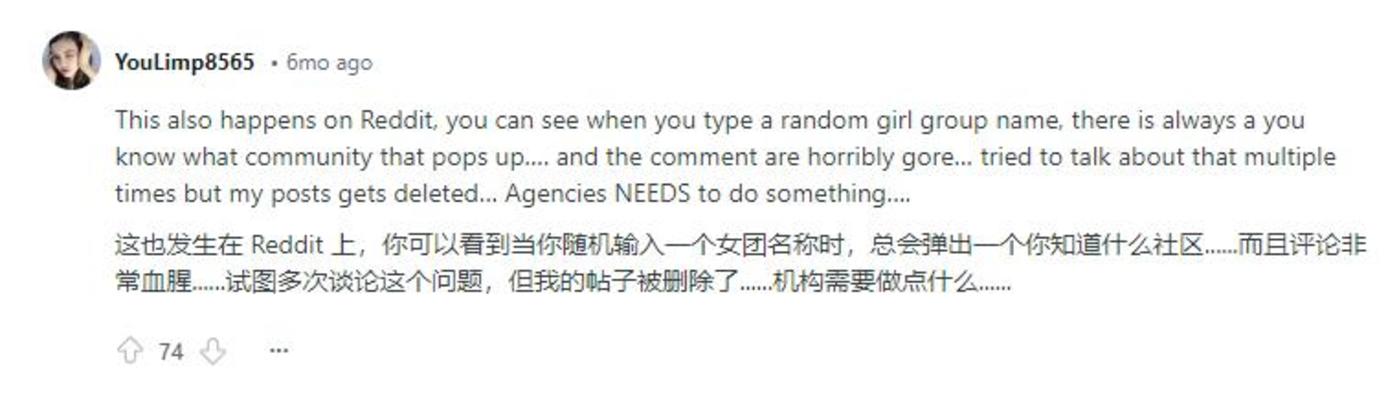

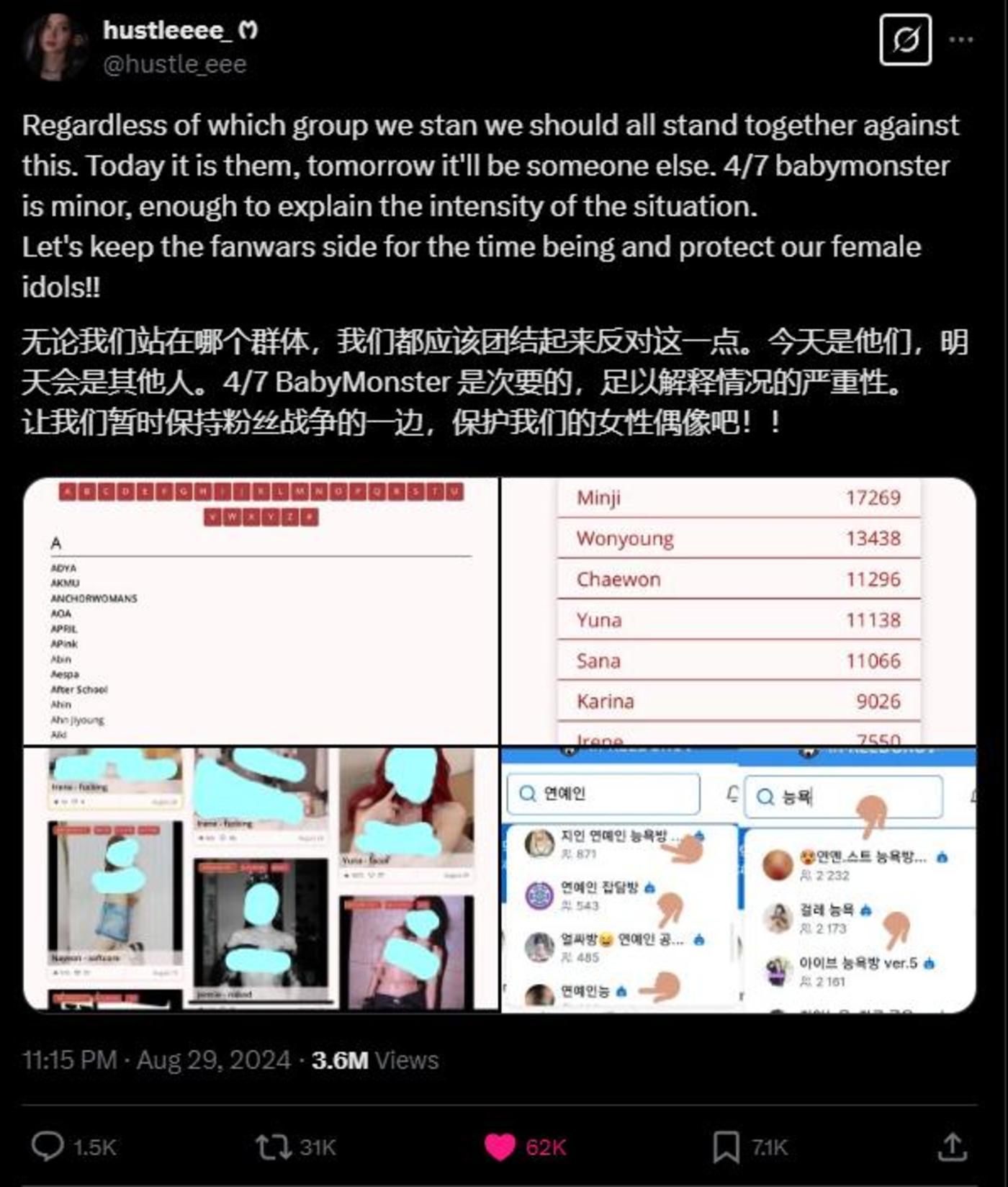

On Reddit, some users have pointed out that searching for K-pop-related keywords brings up popular subreddits (discussion communities) that consist almost entirely of prohibited content, with comments that are simply disgusting.

Deepfake pornographic content has now spread across online social platforms. Telegram, the platform behind the vicious collective crime known in South Korea as the Nth Room case, has been hit hardest, and some have even dubbed the situation the “new Nth Room” case.

In August last year, the Korean Women’s Friends Association released a set of reports stating that in chat groups on Telegram, users only need to upload photos of acquaintances and pay to receive an indecent composite image within 5 seconds. Shockingly, one such chat group had as many as 227,000 participants.

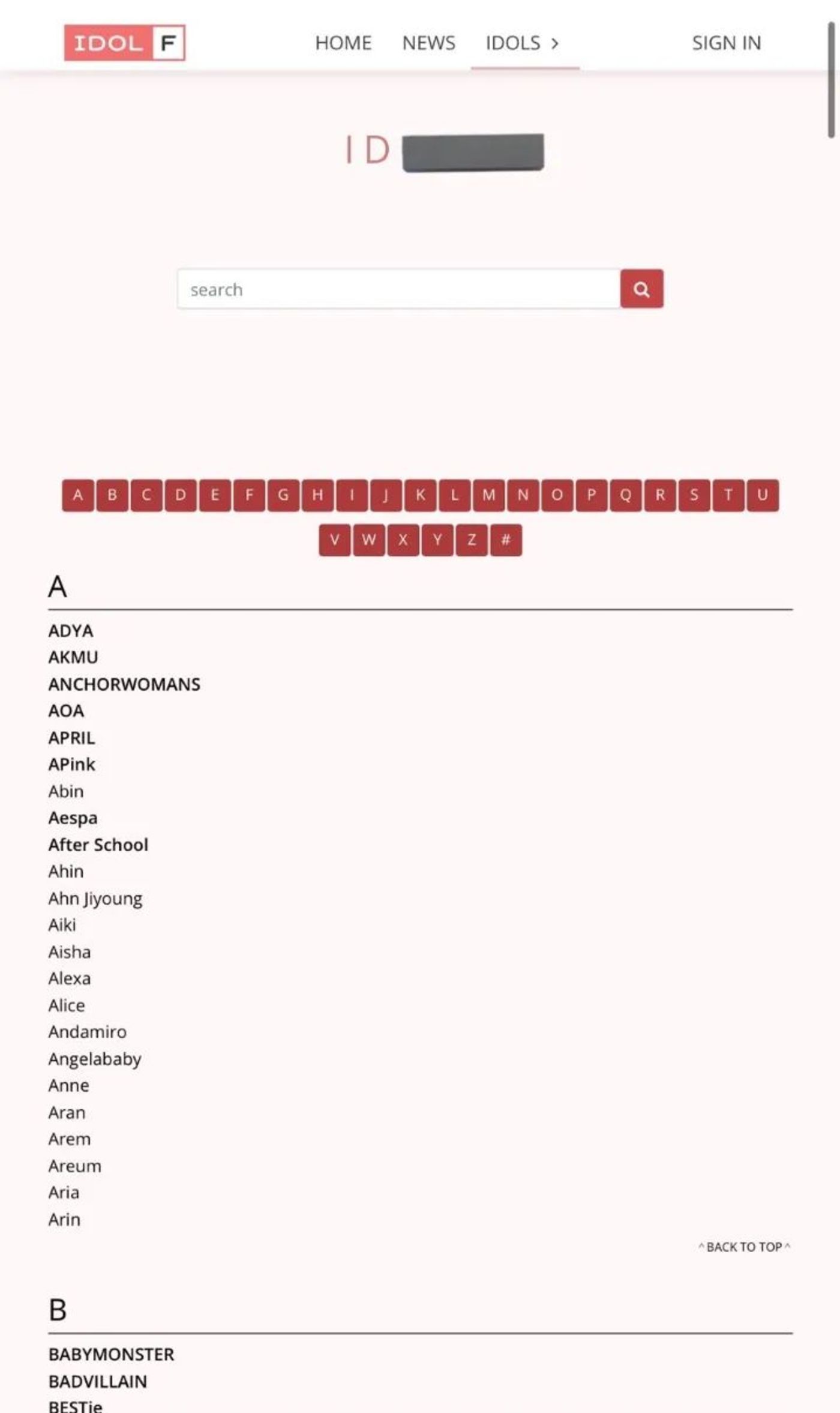

Even more disgusting, some deepfake websites list the names of more than 200 female idols from almost every generation of K-pop, including members of several fifth-generation K-pop groups.

In January this year, a serious staff error led the official Weibo account of South Korean entertainment company Starship to surface deepfake content of Jang Won-young and An Yu-jin, members of the company’s K-pop girl group IVE.

Although agencies, fan groups and Internet users have all denounced deepfake content and urged the authorities to take swift legal action to protect victims and hold those responsible accountable, the harm that deepfake content does to female artists is difficult to curb completely. It is not only the result of technology abuse, but also a microcosm of the long-standing problems of gender-based violence and privacy violations in the digital space.

Under pressure from public opinion, the South Korean government and relevant agencies have now accelerated the legislative process in order to crack down on the production and dissemination of deepfake pornographic content with stricter laws.

Why do deepfakes target female K-pop stars?

Several factors are intertwined here: the low technical threshold, the high exposure of artists, society’s objectification of female idols, and the lag of the law. Together they have given rise to digital sexual abuse in the Korean entertainment ecosystem.

Reports show that in South Korea, cases of deepfake pornography surged from 3,725 in 2022 to 21,019 in 2023.

Under the Act on Special Cases Concerning the Punishment of Sexual Crimes, individuals who edit, synthesize or process deepfake pornographic content face up to five years in prison or a fine of 50 million won, and those who disseminate such content for profit can be sentenced to up to seven years in prison.

However, of the 71 rulings that South Korean courts issued on deepfake crimes from 2020 to 2023, only 4 resulted in prison sentences for deepfake crimes alone. There is currently no law against downloading or viewing deepfake pornography.

This means that current law penalizes only those who produce and disseminate deepfake pornographic content, while those who watch or possess it face no legal consequences. This loophole allows viewers to browse such content without hesitation, further fueling demand in the illegal market and making it harder for victims to obtain effective legal protection.

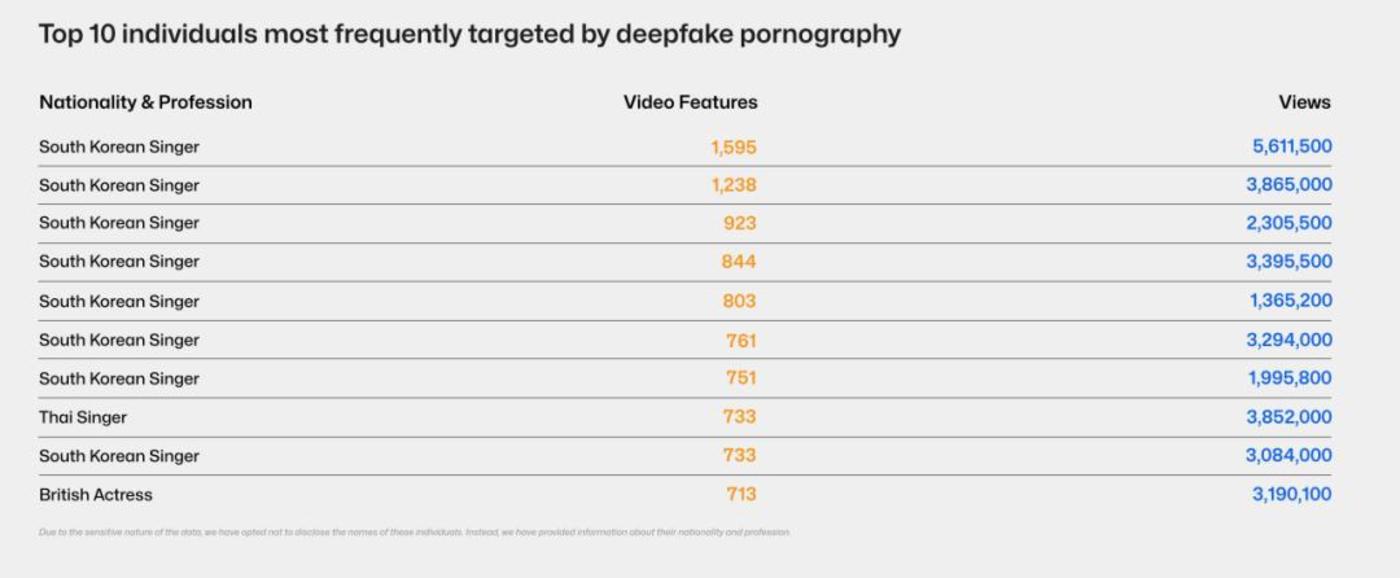

According to statistics from Home Security Heroes, most of the 10 individuals most frequently targeted by deepfake pornography are South Korean singers. One singer appears in 1,595 videos, while deepfake videos of another South Korean singer have been viewed more than 5.61 million times.

In 2017, a Reddit user going by the name “deepfakes” appeared and forged a series of pornographic videos featuring female celebrities. The meaning of the term later expanded to cover not only the face-swapped videos that user made, but also all kinds of synthetic content generated with AI. Open-source tools such as DeepFaceLab and FaceSwap were subsequently released, making it easier for users to create deepfake content.

Today, creating deepfake content is no longer a high-threshold technical challenge, and almost anyone can produce realistic fake content easily and efficiently. In practical terms, a single mobile phone is now enough to produce AI face-swapped content. Users only need to supply the source material and make simple adjustments, and the technology does most of the work.

The global reach of the K-pop industry, and especially the close interaction between Korean female singers and their fans on social media, has given these artists extremely high exposure. That visibility makes their images ideal raw material for deepfake content.

In March last year, Hwang Ye-ji, a member of the K-pop girl group ITZY, unexpectedly came across a deepfake dance video of herself during a fan video-call event. She was visibly at a loss, caught between laughter and confusion, and could only awkwardly imitate the dance moves in the video. Although the video seemed harmless on the surface, many people said afterwards that watching it was creepy.

Today, an idol’s every action and expression may be maliciously exploited. Anyone can take publicly available images and footage of an artist and use technical tools to create extremely realistic fake videos. These videos may not only be pushed to public platforms but may also circulate in private settings, causing unforeseen trouble and harm to idols.

At the same time, South Korean society’s intense attention to, and objectification of, female idols makes this kind of violation all the more pervasive.

In traditional gender culture, women are often regarded as objects of visual consumption, especially young female singers. Their looks and figures are constantly magnified, scrutinized and judged, which makes these female stars a frequent target of deepfake content. Deepfakes of them not only attract attention quickly; the stars’ social influence also gives the content greater viral value.

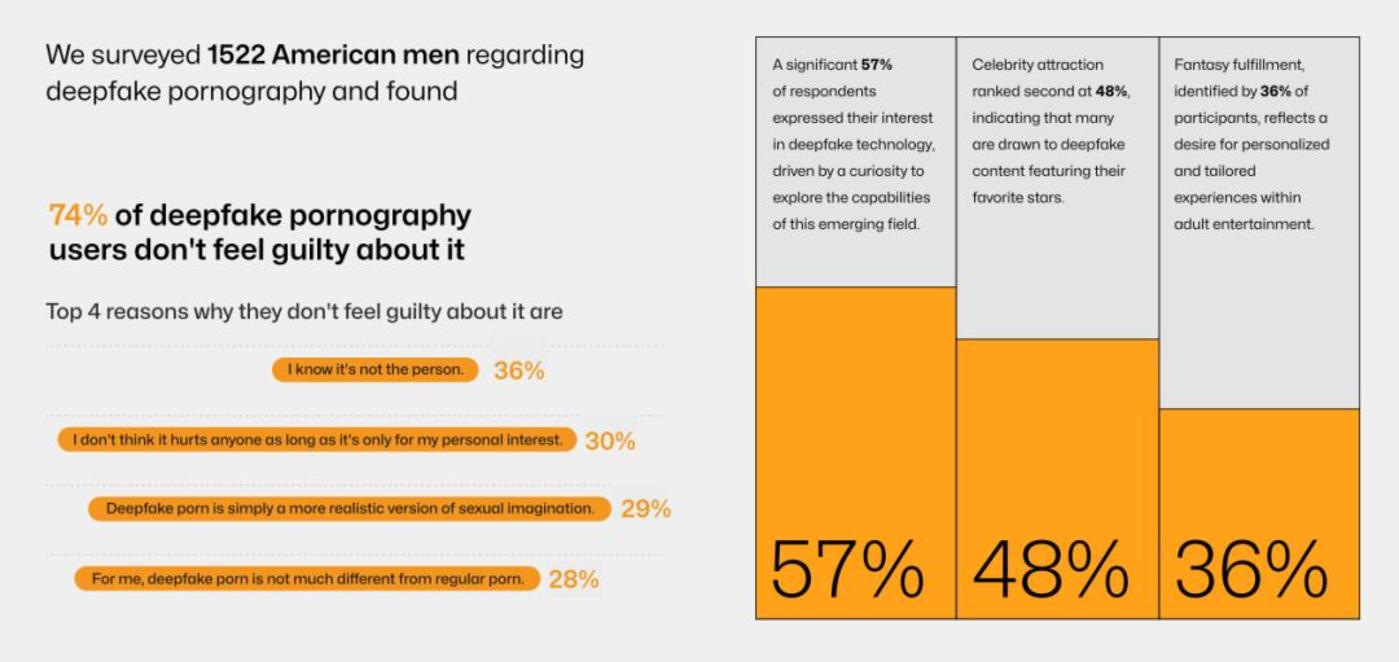

Moreover, most men who watch deepfake pornography do not believe that doing so is morally problematic.

Take the 1,522 American male respondents surveyed by Home Security Heroes as an example: 74% did not feel guilty about consuming deepfake pornography. The reasons they gave included “I know it’s not the real person” (36%), “It’s just my personal interest; it doesn’t hurt anyone” (30%), “Deepfake pornography is just a more realistic sexual fantasy” (29%), and “There isn’t much difference between deepfake pornography and ordinary pornography” (28%).

The logic behind this is that they are driven either by the pull of celebrity or by the satisfaction of fantasy, hoping for a more personalized and customized adult entertainment experience, while ignoring basic moral boundaries and holding women’s dignity in contempt. This allows deepfake pornography to spread unchecked in the gaps left by morality and law, further deepening the harm to, and objectification of, female artists.

For agencies, the concern is that images linger online and the imprint of public opinion is hard to erase, and that either may trigger a second wave of online abuse. As a result, they usually cannot disclose the specific details of deepfake pornography cases, which in turn makes it difficult for the outside world to fully grasp how serious the cases are and what the victims have gone through.

Female K-pop stars have thus become a high-risk group for deepfake content. Behind this lie the concealment and low threshold of deepfake technology, as well as the contempt that some men have for women.

The K-pop industry’s high global exposure and meticulous crafting of idol images make female artists not only commercial symbols but also objects onto which the public projects its desires. Add the legal system’s lag in responding to this emerging problem, and female artists have become puppets on the Internet who can be manipulated, belittled or even wantonly consumed by anyone at any time.

When technology becomes a “cyber demon-revealing mirror”

More frightening still, the problem is not confined to South Korea. According to a report in the British newspaper The Guardian in March last year, nearly 4,000 celebrities had been face-swapped by AI into pornographic content on the five most visited deepfake websites in Europe. The five websites received 100 million page views in three months. Victims include actresses, TV stars, musicians and YouTubers.

At the beginning of last year, deepfake pornographic images of Taylor Swift also spread widely on X (formerly Twitter) and were viewed more than 47 million times on the platform. According to related statistics, more than 9,500 websites at that time specialized in creating non-consensual intimate images. X eventually blocked searches for Swift for a time to curb the spread of the fake content.

China has also seen deepfake cases, and the victims are not limited to female celebrities but include ordinary people like you and me.

In 2020, a defendant surnamed Yu made tens of thousands of yuan in less than half a year by spreading obscene face-swapped videos while also selling AI face-swapping software and offering custom AI face-swapping services. In November 2023, the People’s Court of Xiaoshan District, Hangzhou, convicted Yu of producing and disseminating obscene materials for profit, sentencing him to seven years and three months in prison and a fine of 60,000 yuan. It was also China’s first court judgment in a public interest lawsuit involving AI face-swapping that infringed citizens’ personal information.

In China’s handling of such cases, convictions are handed down and penalties imposed where they are due, but interests and desires always seem to run ahead of the rules, while the law often lags behind technological change. Faced with new forms of technology abuse, fighting technology with technology is becoming an important response.

For example, the Honor Magic7 series released last year introduced what was billed as the mobile phone industry’s first on-device AI anti-fraud detection technology, which can identify whether content is at risk of being an AI face swap. The technology also won the Most Investment Value Award at the 2024 China Cybersecurity Innovation and Entrepreneurship Competition.

Of course, a platform’s responsibility should not be limited to mending the fold after the sheep are lost. Otherwise, no matter how complete the rules are, they will be a dead letter that trails behind the weaknesses of human nature, neither stopping profit-seeking underground operations nor protecting the dignity of victims.

On social platforms, large numbers of lawbreakers still direct users to deepfake videos of female artists uploaded to illegal websites. Some sellers on trading platforms have merely changed their guise and continue to slip through the cracks in the rules.

Although China has addressed this issue in its legislation in recent years, the rules and regulations introduced so far lack workable details and clear penalty standards. At the National Two Sessions in March this year, Li Dongsheng, a deputy to the National People’s Congress and the founder and chairman of TCL, also put forward proposals for strengthening the governance of AI deepfake fraud.

In recent years, lines such as “technology is neither good nor evil, but it does have boundaries” have become a slogan in almost every report on technology abuse. However, technology cannot make up for moral failings. Every frame is an active choice by the perpetrator, not a decision made by an algorithm on its own.

Now that technology has become a cyber “demon-revealing mirror” for some people, what we need is perhaps not only a sharper regulatory blade but also a moral awakening across society. At its core, shamelessness in the digital world is the complete decay of one’s character in the real world.