In the large-model melee, capability is one battleground and cost is the other.

How are the costs of DeepSeek calculated?

Author | Wang Lu, Fixed Focus One

DeepSeek has left the whole world unable to sit still.

Yesterday, Musk livestreamed the launch of Grok 3, which he billed as “the smartest AI on Earth,” claiming that its reasoning ability surpasses every currently known model and that its reasoning score with test-time compute beat DeepSeek R1 and OpenAI o1. Not long before, the national-level app WeChat announced that it was integrating DeepSeek R1, a feature now in grayscale (limited-rollout) testing. Outside observers believe this blockbuster pairing will reshape the AI search field.

Today, many major technology companies around the world, including Microsoft, Nvidia, Huawei Cloud, and Tencent Cloud, have integrated DeepSeek. Netizens have also come up with novel uses such as fortune-telling and lottery prediction. The buzz has translated directly into real money, driving up DeepSeek’s valuation, which by the highest estimates has reached US$100 billion.

DeepSeek broke into the mainstream not only because it is free and works well, but also because it trained DeepSeek R1, a model comparable to OpenAI o1, at a GPU cost of only US$5.576 million. In the “hundred-model war” of the past few years, by contrast, AI model companies at home and abroad have spent billions or even tens of billions of dollars. Grok 3’s claim to be the world’s smartest AI also came at a high price: Musk said that training Grok 3 consumed a total of 200,000 Nvidia GPUs (at roughly US$30,000 apiece), while industry insiders estimate that DeepSeek has only a little over 10,000.

But some are already trying to undercut DeepSeek on cost. Recently, Fei-Fei Li’s team claimed to have trained a reasoning model, s1, for less than US$50 in cloud computing costs, with performance on math and coding tests comparable to OpenAI’s o1 and DeepSeek’s R1. It should be noted, however, that s1 is a medium-sized model, far from the hundreds of billions of parameters of DeepSeek R1.

Even so, a spread in training costs that runs from US$50 to tens of billions of dollars leaves everyone curious. On the one hand, people want to know how strong DeepSeek really is and why everyone is trying to catch up with or even surpass it; on the other, how much does it actually cost to train a large model? What steps are involved? And can training costs fall further in the future?

DeepSeek, judged by one of its parts

In the eyes of practitioners, before answering these questions, a few concepts must be clarified.

The first is that the common picture of DeepSeek is an overgeneralization. What amazed everyone is just one of its many large models, the reasoning model DeepSeek-R1. DeepSeek has other large models too, and different models serve different purposes. The US$5.576 million figure is the GPU cost of training its general-purpose model DeepSeek-V3, which can be understood as the net cost of compute.

A simple comparison:

- General model:

It needs explicit instructions with the steps broken down: the user must describe the task clearly, including the order of the answer. For example, the user has to say whether to summarize first and then give a title, or the other way around.

It replies quickly because it works by probability prediction (fast response), predicting the answer from a large amount of data.

- Reasoning model:

It takes simple, clear, goal-focused tasks: the user states what they want, and the model makes its own plan.

It replies slowly because it works by chain-of-thought (slow thinking), reasoning through the problem step by step to reach the answer.

The main technical difference between the two lies in the training data. A general model is trained on question + answer pairs, while a reasoning model is trained on question + thinking process + answer.
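To make the difference in training data concrete, here is a minimal sketch of the two record shapes. The field names, the `<think>` delimiters, and the `to_training_text` helper are illustrative assumptions, not DeepSeek’s actual data schema.

```python
# Hypothetical training records; field names are illustrative only.
general_sample = {
    "question": "What is 17 * 6?",
    "answer": "102",
}

reasoning_sample = {
    "question": "What is 17 * 6?",
    "thinking": "17 * 6 = 10 * 6 + 7 * 6 = 60 + 42 = 102.",
    "answer": "102",
}


def to_training_text(sample: dict) -> str:
    """Flatten one record into a single text target for supervised training."""
    parts = [f"Question: {sample['question']}"]
    # Only reasoning-style records carry an explicit thinking process.
    if "thinking" in sample:
        parts.append(f"<think>{sample['thinking']}</think>")
    parts.append(f"Answer: {sample['answer']}")
    return "\n".join(parts)


print(to_training_text(general_sample))
print(to_training_text(reasoning_sample))
```

The only structural change is the extra thinking field, which is why a reasoning model produces (and bills for) many more output tokens per answer.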

Second, because DeepSeek’s reasoning model DeepSeek-R1 has drawn more attention, many people mistakenly believe that a reasoning model must be more advanced than a general-purpose model.

It is true that reasoning models are a frontier model type. They are a new paradigm, introduced by OpenAI, that adds compute at the inference stage after the pre-training paradigm hit a wall. Compared with general-purpose models, reasoning models cost more and take longer to train.

But that does not mean a reasoning model is always better than a general-purpose model. For certain types of problems, a reasoning model can even be a poor fit.

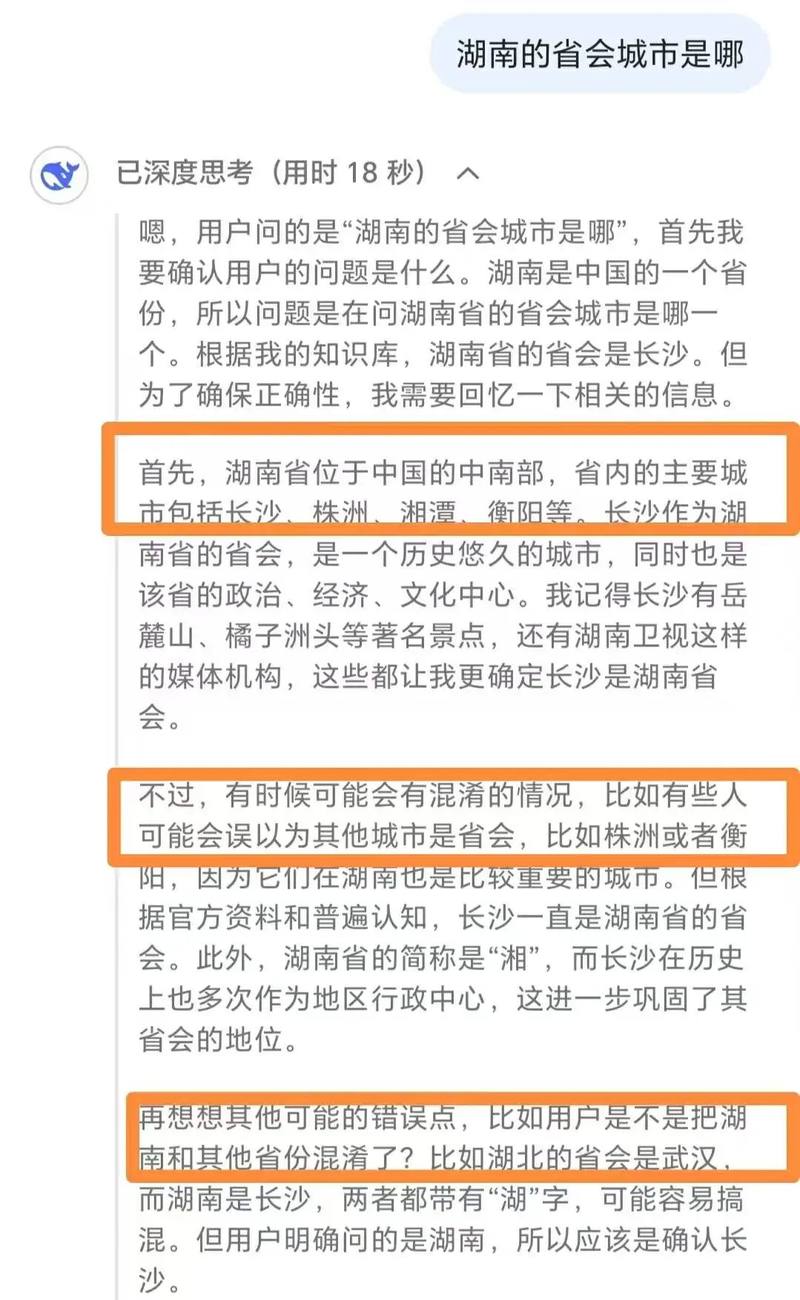

Liu Cong, a well-known expert in the large-model field, explained to “Fixed Focus One” that if you ask for the capital of a country or the provincial capital of a region, for example, a reasoning model is less handy than a general-purpose model.

DeepSeek-R1 Overthinking when Faced with Simple Questions

He said that on such relatively simple questions, a reasoning model not only answers less efficiently than a general-purpose model but also consumes more compute and money. It may even overthink and end up giving a wrong answer.

He suggested using reasoning models for complex tasks such as math problems and challenging coding, and general-purpose models for simple tasks such as summarization, translation, and basic question answering.

The third is where DeepSeek’s real strength stands.

Drawing on authoritative leaderboards and practitioners’ opinions, “Fixed Focus One” placed DeepSeek within the reasoning-model and general-model fields respectively.

The first echelon of reasoning models has four main players: abroad, OpenAI’s o-series models (such as o3-mini) and Google’s Gemini 2.0; in China, DeepSeek-R1 and Alibaba’s QwQ.

More than one practitioner believes that, although there is public talk of DeepSeek-R1 being China’s top model and surpassing OpenAI, from a technical perspective it still trails OpenAI’s latest o3 by some distance.

Its greater significance is that it has sharply narrowed the gap between the top tier at home and abroad. “If the previous gap was 2-3 generations, it has narrowed to 0.5 generations since DeepSeek-R1 arrived,” said Jiang Shu, a senior practitioner in the AI industry.

Based on his own experience, he introduced the advantages and disadvantages of the four companies:

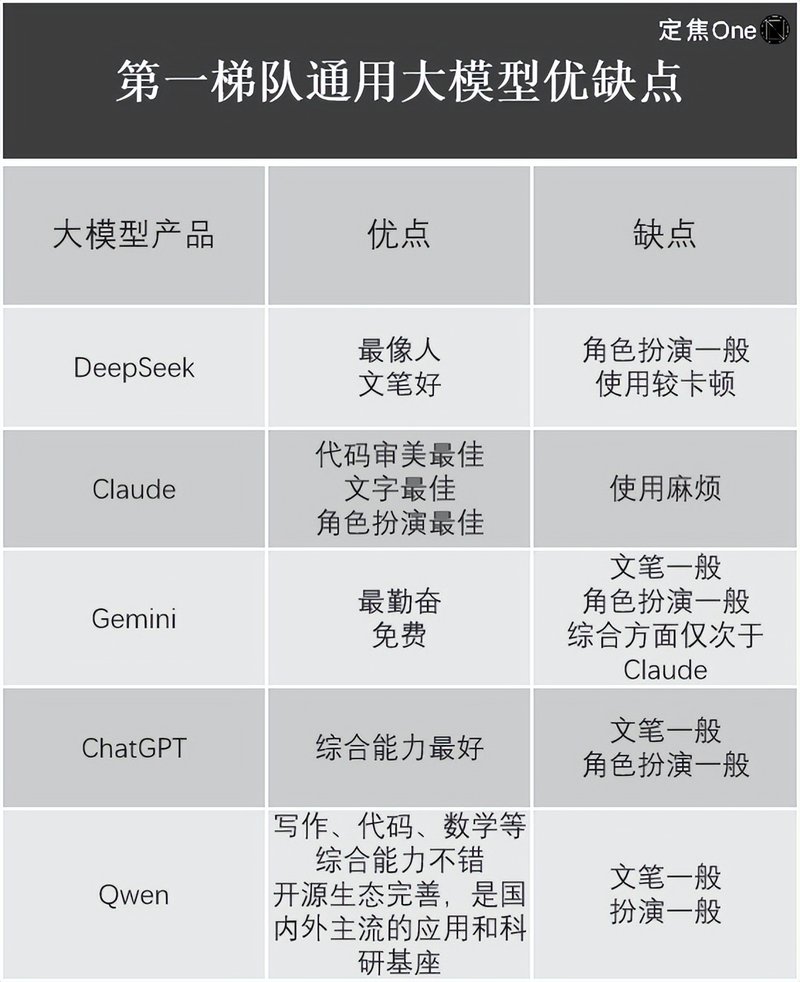

In the general-model field, according to the leaderboard of LM Arena (an open-source platform for evaluating and comparing the performance of large language models, or LLMs), five players rank in the first tier: abroad, Google’s Gemini (closed-source), OpenAI’s ChatGPT, and Anthropic’s Claude; in China, DeepSeek and Alibaba’s Qwen.

Jiang Shu also described his experience of using them.

It is not hard to see that, although DeepSeek-R1 has shaken the global tech community and its value is beyond doubt, every large model has its strengths and weaknesses, and not every DeepSeek model is flawless. Liu Cong found, for example, that DeepSeek’s latest multimodal model, Janus-Pro, which focuses on image understanding and generation, delivers only average results in use.

How much does it cost to train a large model?

Returning to the cost of training a large model: how is a large model born?

Liu Cong said that the birth of a large model falls into two main stages, pre-training and post-training. If the model is compared to a child, pre-training and post-training take the child from being able only to cry at birth, to understanding what adults say, and then to speaking to adults on its own initiative.

Pre-training mainly means training on a corpus. A large amount of text is fed to the model so that the child takes in knowledge, but at this point it has only learned the knowledge and cannot yet use it.

Post-training teaches the child how to use the knowledge it has learned. There are two methods: supervised fine-tuning (SFT) and reinforcement learning (such as RLHF).

Liu Cong said that everyone follows this process, whether the model is general-purpose or reasoning, domestic or foreign. Jiang Shu also told “Fixed Focus One” that every company uses the Transformer architecture, so there is no essential difference in the underlying model composition or training steps.

Many practitioners said that training costs vary greatly from one large model to another and are concentrated in three areas: hardware, data, and labor. Each area can be handled in different ways, with correspondingly different costs.

Liu Cong gave an example for each. Hardware can be bought or rented, and the price difference is very large. Buying requires a big one-time investment up front, but later costs fall sharply and basically only the electricity bill remains. Renting may cost less up front, but the expense never goes away. For training data, there is a big difference between purchasing ready-made data and crawling it yourself. The cost also differs from one training run to the next: the first time, you have to write crawlers and filter the data, but the next version costs less because the previous version’s work can be reused. Finally, the number of versions iterated before the model is released also determines the cost, but large-model companies are very secretive about this.

In short, every step involves substantial hidden costs.

Estimated from GPU usage, the training cost of GPT-4 among top models is about US$78 million, Llama 3.1 exceeds US$60 million, and Claude 3.5 is about US$100 million. But because these top models are largely closed-source, and it is unclear whether each company wastes compute, outsiders have had little way of knowing the real figures, until DeepSeek, in the same echelon, appeared with US$5.576 million. It should be noted that US$5.576 million is the training cost of the base model DeepSeek-V3 as stated in the DeepSeek technical report. “The training cost of the V3 version represents only the cost of the final successful training run; the earlier costs of research and of trial and error on architecture and algorithms are not included. The specific training cost of R1 is not mentioned in the paper,” Liu Cong said. In other words, US$5.576 million is only a small fraction of the model’s total cost.

SemiAnalysis, a semiconductor market analysis and forecasting firm, pointed out that, once factors such as server capital expenditure and operating costs are included, DeepSeek’s total costs could reach US$2.573 billion over four years.

Practitioners believe that, compared with the tens of billions of dollars other large-model companies have invested, DeepSeek’s costs are low even at US$2.573 billion.

Moreover, training DeepSeek-V3 required only 2,048 Nvidia GPUs and 2.788 million GPU hours. By comparison, OpenAI has used tens of thousands of GPUs, and Meta’s Llama-3.1-405B took 30.84 million GPU hours to train.
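The arithmetic behind these figures can be checked with a short sketch. The GPU-hour and GPU counts come from the article; the rental rate of US$2 per GPU hour is an assumption that does not appear in this article (it is the rate the DeepSeek-V3 technical report assumes), and the wall-clock estimate idealizes every GPU as busy for the entire run.

```python
# Figures quoted in the article.
V3_GPU_HOURS = 2_788_000            # DeepSeek-V3 training, GPU hours
V3_GPU_COUNT = 2_048                # Nvidia GPUs used
LLAMA_405B_GPU_HOURS = 30_840_000   # Llama-3.1-405B training, GPU hours

# Assumption (not stated in the article): rental price per GPU hour, in USD.
ASSUMED_USD_PER_GPU_HOUR = 2.0


def training_cost_usd(gpu_hours: int, usd_per_gpu_hour: float) -> float:
    """Net compute cost: GPU hours multiplied by an hourly rental price."""
    return gpu_hours * usd_per_gpu_hour


def ideal_wall_clock_days(gpu_hours: int, gpu_count: int) -> float:
    """Days of training if every GPU ran the whole time (an idealization)."""
    return gpu_hours / gpu_count / 24


v3_cost = training_cost_usd(V3_GPU_HOURS, ASSUMED_USD_PER_GPU_HOUR)
v3_days = ideal_wall_clock_days(V3_GPU_HOURS, V3_GPU_COUNT)
gpu_hour_ratio = LLAMA_405B_GPU_HOURS / V3_GPU_HOURS

print(f"V3 compute cost: ${v3_cost:,.0f}")
print(f"V3 idealized duration: {v3_days:.1f} days")
print(f"Llama-3.1-405B / V3 GPU hours: {gpu_hour_ratio:.1f}x")
```

Under that assumed rate the product reproduces the US$5.576 million headline figure, which shows the number covers rented compute for one run and nothing else.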

DeepSeek is more efficient not only in the training phase but also in the inference phase, when the model is actually called, where it is again cheaper.

DeepSeek’s published API pricing for its models (developers can call large models through the API for text generation, dialogue, code generation, and other functions) shows that its costs are lower than OpenAI’s. It is generally assumed that an API with high development costs needs higher pricing to recoup them.

The API pricing of DeepSeek-R1 is 1 yuan per million input tokens (cache hits) and 16 yuan per million output tokens. In contrast, OpenAI’s o3-mini is priced at US$0.55 (RMB 4) and US$4.4 (RMB 31) respectively.
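As a rough illustration of what these list prices mean for a bill, the sketch below prices one hypothetical workload under both rate cards, using the RMB figures quoted above. The workload size (10 million cached input tokens and 2 million output tokens) and the `Pricing` and `workload_cost` names are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """List price in RMB per million tokens, as quoted in the article."""
    input_per_million: float   # input tokens, cache hit
    output_per_million: float  # output tokens


DEEPSEEK_R1 = Pricing(input_per_million=1.0, output_per_million=16.0)
OPENAI_O3_MINI = Pricing(input_per_million=4.0, output_per_million=31.0)


def workload_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Total cost in RMB for a given number of input and output tokens."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


# Hypothetical workload: 10M cached input tokens, 2M output tokens.
r1_cost = workload_cost(DEEPSEEK_R1, 10_000_000, 2_000_000)
o3_cost = workload_cost(OPENAI_O3_MINI, 10_000_000, 2_000_000)

print(f"DeepSeek-R1: {r1_cost:.2f} RMB")
print(f"o3-mini:     {o3_cost:.2f} RMB")
```

For this made-up workload the totals are 42 RMB and 102 RMB. The ratio depends on the mix of input and output tokens, so a real comparison should use measured token counts.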

A cache hit means reading data from the cache instead of recomputing it or calling the model to generate the result, which cuts processing time and cost. The industry makes API pricing more competitive by distinguishing cache hits from cache misses, and low prices also make access easier for small and medium-sized enterprises.

DeepSeek-V3 recently ended its promotional period, and its price rose from 0.1 yuan per million input tokens (cache hits) and 2 yuan per million output tokens to 0.5 yuan and 8 yuan respectively, but that is still lower than other mainstream models.

Although the total training cost of a large model is hard to estimate, practitioners agree that DeepSeek probably represents the lowest cost among today’s first-class large models, and that other companies are likely to follow DeepSeek in bringing costs down.

Lessons from DeepSeek’s cost cutting

Where does DeepSeek save its money? According to practitioners, every stage has been optimized, from model architecture through pre-training to post-training.

For example, to keep answers professional, many large-model companies use the Mixture of Experts (MoE) architecture: when faced with a complex problem, the model breaks it into multiple sub-tasks and hands the different sub-tasks to different experts to answer. Although many large-model companies have discussed this architecture, DeepSeek has pushed expert specialization to the extreme.

The secret is fine-grained expert segmentation (further subdividing experts within the same category by sub-task) and shared expert isolation (setting aside some experts as shared ones to reduce knowledge redundancy). The benefit is a large gain in the parameter efficiency and performance of the MoE, so that answers come faster and more accurately.

Some practitioners estimate that DeepSeekMoE achieves results comparable to LLaMA2-7B with only about 40% of the computation.
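The routing idea can be sketched in a few lines. This is a simplified illustration of top-k routing with always-on shared experts, not DeepSeek’s implementation; the function names, the expert counts, and the choice to give shared experts a fixed weight of 1.0 are all assumptions for the example.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over router scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits: list[float], top_k: int, num_shared: int) -> dict[str, float]:
    """Pick experts for one token.

    Shared experts are always active; routed experts are the top_k
    highest-scoring entries of the router's softmax output.
    """
    probs = softmax(router_logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    selected = {f"shared_{i}": 1.0 for i in range(num_shared)}
    for i in ranked[:top_k]:
        selected[f"routed_{i}"] = probs[i]
    return selected


def active_fraction(num_routed: int, top_k: int, num_shared: int) -> float:
    """Share of experts that actually run for a single token."""
    return (num_shared + top_k) / (num_shared + num_routed)


# Hypothetical layer: 4 small routed experts, 1 shared expert, top-2 routing.
print(route_token([0.1, 2.0, -1.0, 1.5], top_k=2, num_shared=1))
# Hypothetical fine-grained layer: 64 routed experts, 2 shared, 6 routed per token.
print(f"{active_fraction(64, 6, 2):.1%} of experts active per token")
```

Because only a small fraction of experts runs per token, total parameter count can grow without a matching growth in per-token computation, which is the source of the efficiency gain described above.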

Data processing is another hurdle in large-model training. Everyone wants to raise computing efficiency while lowering hardware demands such as memory and bandwidth. DeepSeek’s answer was to use FP8 low-precision training when processing data, which speeds up deep learning training. “This is a step ahead of known open-source models. After all, most large models use FP16 or BF16 mixed-precision training, and FP8 trains much faster than they do,” Liu Cong said.

In the reinforcement-learning part of post-training, policy optimization is a major difficulty; it can be understood as getting the model to make better decisions. AlphaGo, for example, learned to choose the optimal strategy in Go through policy optimization.

DeepSeek chose the GRPO (Group Relative Policy Optimization) algorithm rather than PPO (Proximal Policy Optimization). The main difference between the two is whether a value model is used during optimization: GRPO estimates the advantage function from relative rewards within a group, while PPO uses a separate value model. With one fewer model, compute requirements are naturally smaller and costs are saved.
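The group-relative estimate can be illustrated with a minimal sketch: several answers are sampled for the same prompt, each gets a reward, and every answer’s advantage is its reward standardized against the group. The function name, the use of the population standard deviation, and the small `eps` guard are assumptions for this example, and the rest of the policy update is omitted.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Standardize each reward against its own sampling group.

    No learned value model is needed: the group mean serves as the baseline.
    eps avoids division by zero when every answer gets the same reward.
    """
    baseline = mean(rewards)
    spread = pstdev(rewards)
    return [(r - baseline) / (spread + eps) for r in rewards]


# Hypothetical group: four sampled answers to one prompt, scored 1 if correct.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(advantages)
```

Correct answers end up with positive advantages and incorrect ones with negative advantages, using only rewards that were already computed for the group.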

At the inference level, replacing traditional multi-head attention (MHA) with multi-head latent attention (MLA) significantly reduces memory use and computational complexity. The most direct benefit is a lower API cost.

However, the biggest lesson Liu Cong took from DeepSeek this time is that the reasoning ability of large models can be improved from different angles: both pure supervised fine-tuning (SFT) and pure reinforcement learning can produce good reasoning models.

In other words, there are currently four ways to build a reasoning model:

First: pure reinforcement learning (DeepSeek-R1-Zero)

Second: SFT + reinforcement learning (DeepSeek-R1)

Third: pure SFT (the DeepSeek distilled models)

Fourth: pure prompting (low-cost small models)

“Previously, SFT + reinforcement learning was the standard recipe in the field. No one expected that pure SFT and pure reinforcement learning could each produce good results as well,” Liu Cong said.

DeepSeek’s cost reduction not only brings technical inspiration to practitioners, but also affects the development path of AI companies.

Wang Sheng, a partner at Yingno Angel Fund, said that the AI industry tends to choose between two paths on the road to AGI. One is the “compute arms race” paradigm: pile up technology, money, and compute, push model performance to a high point first, and only then consider industrial deployment. The other is the “algorithmic efficiency” paradigm: target industrial deployment from the start and deliver low-cost, high-performance models through architectural innovation and engineering capability.

“DeepSeek’s series of models demonstrates that, when the ceiling cannot be raised, a paradigm focused on optimizing efficiency rather than growing capability is feasible,” Wang Sheng said.

Practitioners believe that as algorithms evolve in the future, the training cost of large models will be further reduced.

Cathie Wood, founder and CEO of ARK Investment Management, once pointed out that before DeepSeek, the cost of AI training was falling by 75% per year and the cost of inference by as much as 85% to 90%. Wang Sheng also said that if the same model were released at the end of a year rather than at the beginning, its cost would drop significantly, possibly to as little as one-tenth.
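To see how quickly such rates compound, here is a small sketch. It assumes the quoted annual decline holds constant year after year, which is a simplification for illustration rather than a forecast; the function names are made up for this example.

```python
import math


def remaining_cost_fraction(annual_decline: float, years: float) -> float:
    """Fraction of the original cost left after compounding a yearly decline."""
    return (1.0 - annual_decline) ** years


def years_to_reach(target_fraction: float, annual_decline: float) -> float:
    """Years needed for cost to fall to target_fraction of its starting value."""
    return math.log(target_fraction) / math.log(1.0 - annual_decline)


# Training cost falling 75% per year, as quoted above.
print(f"After 2 years: {remaining_cost_fraction(0.75, 2):.4f} of the original cost")
print(f"Years to reach one-tenth: {years_to_reach(0.1, 0.75):.2f}")

# Inference cost falling 85% to 90% per year, as quoted above.
for decline in (0.85, 0.90):
    print(f"{decline:.0%} decline leaves {remaining_cost_fraction(decline, 1):.2f} after 1 year")
```

At a 90% annual decline, the cost after one year is one-tenth of the starting value, which lines up with the upper end of Wang Sheng’s estimate.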

Independent research firm SemiAnalysis pointed out in a recent report that falling inference costs are one of the signs of AI’s continuing progress. Performance at the GPT-3 level, which once required a supercomputer and many GPUs, can now be matched by some small models running on a laptop, and costs have dropped sharply as well. Anthropic CEO Dario Amodei believes that, measured by the price of GPT-3-level quality, costs have fallen roughly 1,200-fold.

In the future, the pace of cost reduction for large models will only get faster.
