Compiled by | Ask nextquestion

▷ Figure 1. Source: Pedreschi, Dino, et al. “Human-AI coevolution.” Artificial Intelligence (2024): 104244.

01 An example of co-evolution between humans and artificial intelligence

Human history is a history of co-evolution: between humans and other species, between humans and industrial machines, and between humans and digital technology. Today, the co-evolution between humans and artificial intelligence is also deepening.

Take recommendation systems and smart assistants as examples. Recommendation algorithms on social platforms invisibly affect the decisions and interactions of the general public; personalized suggestions on shopping platforms guide our consumption habits; navigation services suggest routes to destinations; and generative AI creates content according to users’ wishes, with a profound impact on education, medical care, employment and many other areas.

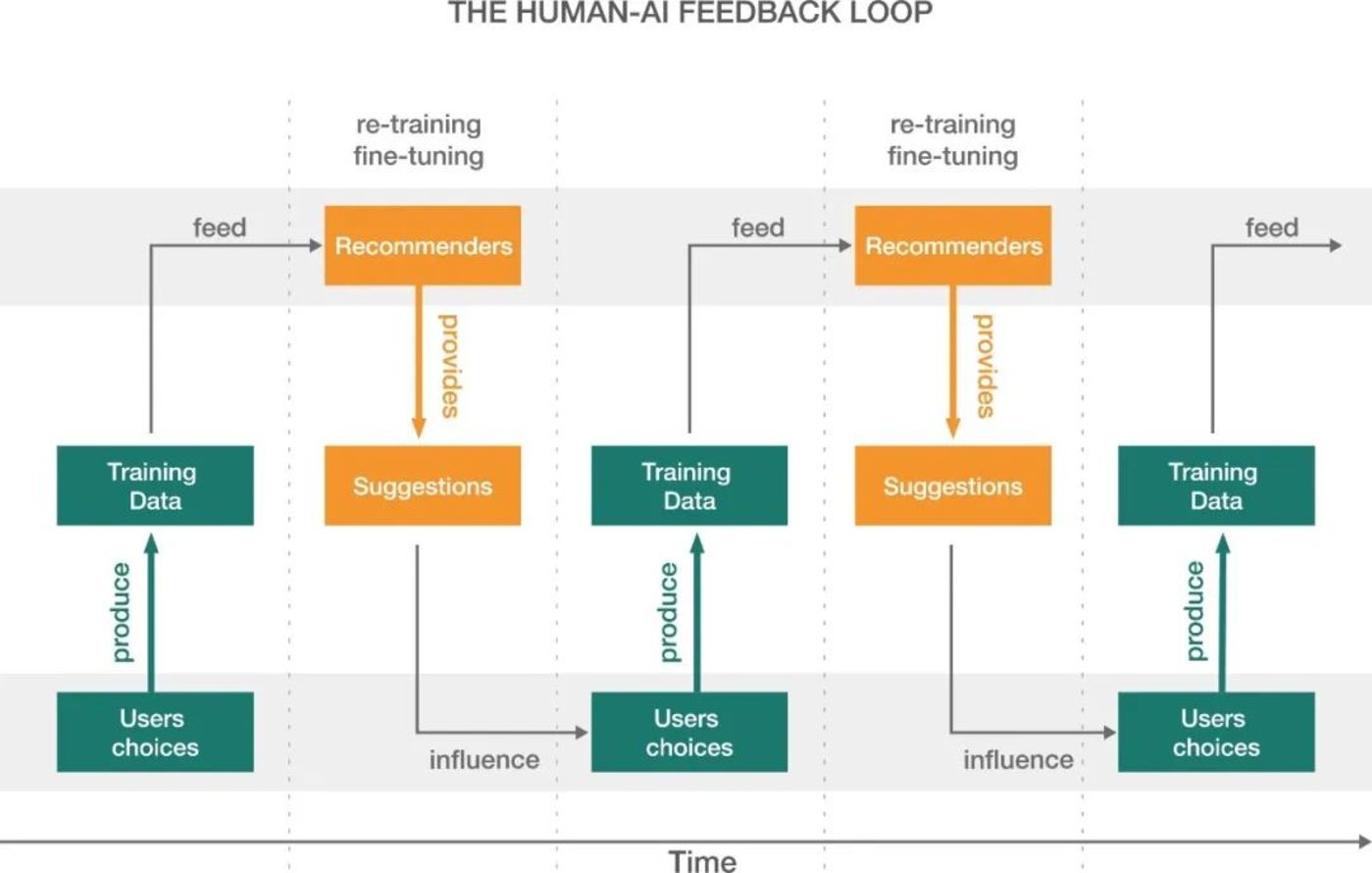

One thing that all these systems have in common is feedback loops. This is the core of human-machine co-evolution. Taking the recommendation system as an example, we can describe the feedback loop as follows: the user’s decisions determine the dataset on which the recommendation system is trained; the trained recommendation system then affects the user’s subsequent choices, which in turn affect the next round of training, creating a potentially endless cycle (Figure 2).

▷ Figure 2. Feedback loop between humans and artificial intelligence
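The loop in Figure 2 can be sketched as a toy simulation. This is only an illustration under stated assumptions, not the model used in the paper: the "recommender" is simply the `top_k` most chosen items so far, and `follow_prob` (the chance a user accepts a recommendation) and all other names and defaults are hypothetical.

```python
import random
from collections import Counter


def run_feedback_loop(n_items=20, n_users=200, rounds=10, top_k=5,
                      follow_prob=0.8, seed=0):
    """Toy loop: retrain -> recommend -> users choose -> retrain.

    Returns, for each round, the share of choices captured by the
    top_k most chosen items of that round.
    """
    rng = random.Random(seed)
    items = list(range(n_items))
    # Initial training data: users choose uniformly at random.
    history = Counter(rng.choice(items) for _ in range(n_users))
    shares = []
    for _ in range(rounds):
        # "Training" (assumed): recommend the most chosen items so far.
        recommended = [item for item, _ in history.most_common(top_k)]
        choices = Counter()
        for _ in range(n_users):
            if rng.random() < follow_prob:
                choices[rng.choice(recommended)] += 1  # accepts a recommendation
            else:
                choices[rng.choice(items)] += 1        # explores independently
        top_count = sum(count for _, count in choices.most_common(top_k))
        shares.append(top_count / n_users)
        # The new choices become training data for the next round.
        history.update(choices)
    return shares
```

Comparing `run_feedback_loop(follow_prob=0.8)` with `run_feedback_loop(follow_prob=0.0)` gives a rough sense of how much of the concentration comes from the loop rather than from chance.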

Co-evolution between humans and technology is not new, but the human-machine feedback loop gives it an unprecedented form. Throughout history, technology and society have always influenced each other and developed together. For example, the birth of printing, radio and television profoundly changed the course of history. However, at least in terms of popularity, persuasion, traceability, speed and complexity, AI-based recommendations have a significant amplifying effect.

In terms of popularity, recommendation systems are everywhere from social media to online retail and mapping services. Thanks to complex algorithms, rich data, and widespread acceptance of recommendations by users, recommendation systems have become a regular part of online interactions. By collecting and analyzing data on personal choices, recommendation systems can provide highly personalized suggestions, further improving the accuracy and persuasiveness of recommendations.

Unlike traditional technologies, the human-machine ecosystem leaves an indelible trace of recommended content and user choices (often referred to as big data). Therefore, AI-based recommendation systems have a comprehensive view of individual choices and an unprecedented ability to change human behavior at scale. In this context, human-machine co-evolution is faster than ever before, as AI can be retrained with little human supervision and can provide advice at an unprecedented rate. The human-machine ecosystem also promotes an unprecedented amount of interaction between users and the huge space of products, increasing system complexity.

02 Research significance of interaction between humans and artificial intelligence

Artificial intelligence is already capable of matching human performance in many complex tasks, and is becoming increasingly interpretable and attentive to human needs. However, current research methods often treat machines as independent individuals rather than studying them from the perspective of co-evolution. Little is known about the impact of feedback loops on the human-artificial intelligence ecosystem; therefore, we need to delve into how recommendation systems affect society, especially whether they exacerbate or mitigate unwanted collective effects.

Complexity science shows that social interaction networks are heterogeneous, giving rise to highly connected hubs and modular structures. This structural heterogeneity interacts with social effects, such as inequality and isolation, and affects the processes that unfold on networks, such as epidemics; the spread of information and ideas; the success of products, ideas and people; and urban dynamics. The human-artificial intelligence feedback loop affects these network processes differently than anything before the advent of artificial intelligence. Adopting a co-evolution perspective helps reveal the rules of complex interactions between humans and recommenders. We know very little about the parameters of the network dynamics that control human-AI co-evolution, which makes such a complex system difficult to predict.

To this end, research on the co-evolution of humans and artificial intelligence requires new methodologies. Science fiction writer Isaac Asimov once said that change, continuous change, inevitable change, is the decisive factor in today’s society, and that no wise decision can any longer ignore either the world as it is or the world as it will become. With the widespread use of today’s artificial intelligence systems, greater and greater power is being held by fewer and fewer people in ways that are increasingly difficult to detect. What a person is is no longer defined by gods, authorities, or the public, but by an ecosystem created by the interaction of humans and technology.

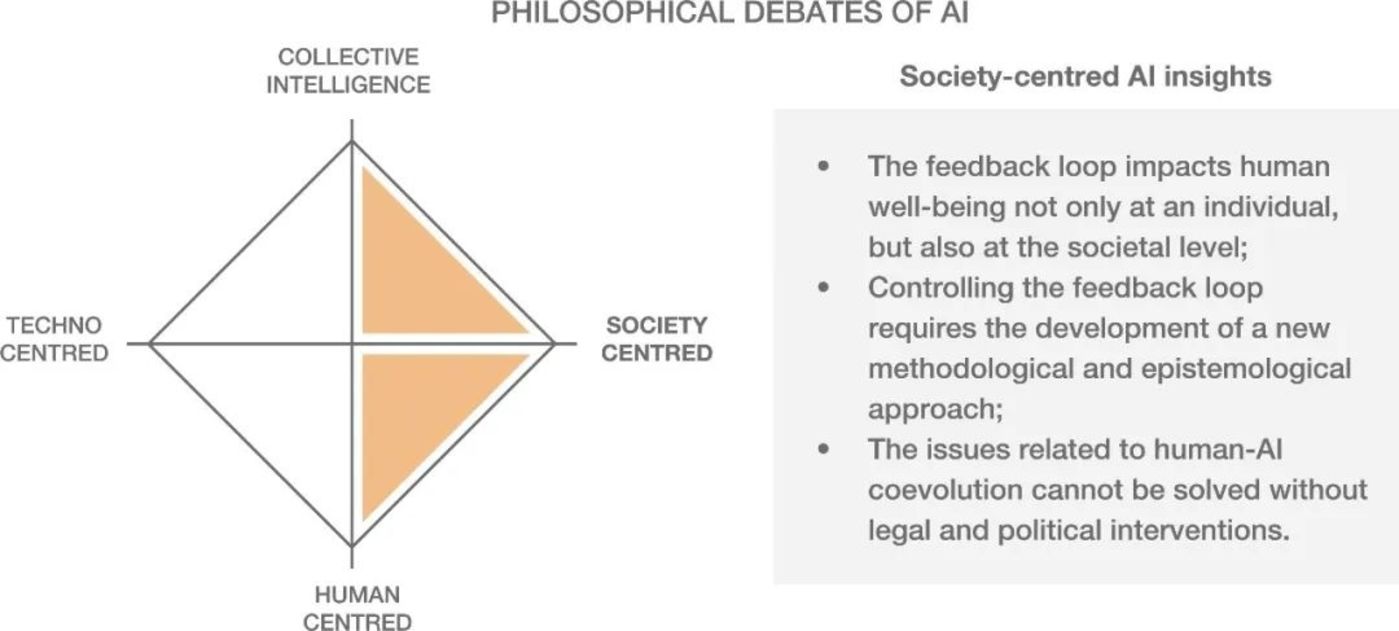

Current discussions on artificial intelligence are either technology-centered or human-centered. The feedback loop introduces a new dimension: thinking about artificial intelligence in a socially centered manner. Unlike the traditional technology-centered view, this perspective holds that the impact of feedback loops cannot be addressed through more technical means alone. It also incorporates insights from human-centered artificial intelligence and collective intelligence. On the one hand, from a human-centered perspective, feedback loops can harm human well-being. On the other hand, from a collective intelligence perspective, feedback loops have the potential to push human interaction with recommendation systems in a desirable direction.

Socially centered artificial intelligence brings three additional elements to the debate on the social impact of artificial intelligence (see Figure 3): (i) feedback loops affect not only individual well-being but also social well-being; (ii) managing feedback loops requires the development of new scientific methods; and (iii) problems related to human-artificial intelligence co-evolution cannot be solved without legal and political intervention.

▷ Figure 3. Socially centered artificial intelligence brings three additional elements to the debate.

03 Methodology for co-evolution research on human and artificial intelligence

There are many methods for studying the co-evolution of humans and artificial intelligence, each with its own advantages and disadvantages.

Most existing studies adopt either empirical or simulation methods. Empirical research is based on data about user behavior on real platforms, generated by dynamic interactions between humans and recommendation systems or smart assistants. These studies provide evidence about specific phenomena and an empirical basis for understanding them. If the sample is large and diverse, empirical research also allows researchers to generalize to a wider population. However, the limitation of empirical research is that its conclusions are often bound to the time frame and conditions of the study. In addition, because the data is mainly owned by large technology companies and rarely made public, these studies are almost impossible to replicate.

Simulation research is based on data generated by mechanistic models, artificial intelligence, or digital twins. Simulation studies provide a cost-effective alternative to empirical research, especially when dealing with large-scale ecosystems or when data is not readily available. A simulation can be repeated under the same initial conditions to verify the results. Scholars can adjust parameters to observe the impact on the human-artificial intelligence ecosystem, which helps them understand the complex relationships between variables. However, because simulations rest on a large number of assumptions, they do not necessarily reflect real-world dynamics, so they are limited in their ability to reveal unexpected or unforeseen effects. Fixing specific parameters in advance may also rule out unexpected results.

Both empirical and simulation methods can be observational or controlled. Controlled studies, known as experiments in the social sciences, include quasi-experiments, randomized controlled trials, and A/B tests. These studies divide the sample into a control group and one or more experimental groups, with each group receiving different recommendations, and measure the impact of the recommendations by comparing how the groups respond to the different interventions. Controlled experiments allow researchers to control a variety of factors and conditions, which makes it easier to isolate the effect of a specific intervention. Their advantages lie in being able to establish causal relationships, reduce selection bias, and ensure balance between groups. However, controlled studies also have important shortcomings: inclusion and exclusion criteria in the controlled environment may limit the generalizability of results, and the design makes it difficult to adapt to changes that occur during the experiment. In addition, they are difficult to set up because they require direct access to platform users and algorithms.
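As a rough illustration of the control-versus-experimental comparison described above, the sketch below randomly assigns users to two groups and compares an outcome with a permutation test. The function names, the outcome being compared, and the choice of a permutation test are assumptions made for illustration; none of them comes from a specific study.

```python
import random
from statistics import mean


def assign_groups(user_ids, seed=0):
    """Randomly split users into a control group and an experimental group."""
    rng = random.Random(seed)
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def permutation_p_value(control, treatment, n_permutations=2000, seed=0):
    """Two-sided permutation test on the difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(treatment) - mean(control))
    pooled = list(control) + list(treatment)
    n_control = len(control)
    extreme = 0
    for _ in range(n_permutations):
        # Shuffle the group labels and recompute the difference.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[n_control:]) - mean(pooled[:n_control]))
        if diff >= observed:
            extreme += 1
    return extreme / n_permutations
```

Here `control` and `treatment` would hold one outcome value per user, for example the number of items each user clicked under each recommendation policy.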

Some examples of controlled studies help clarify the characteristics of these methods. For example, Huszár et al. conducted an experiment to study the impact of Twitter’s personalized timeline on the dissemination of political content. The platform selected a control group of users and exposed them to tweets from the accounts they followed in reverse chronological order, while the experimental group was exposed to AI-based personalized recommendations. Personalized recommendations led to significant amplification of political content, and mainstream right-wing parties benefited more from algorithmic personalization than left-wing parties. Cornacchia et al. used a traffic simulator to estimate the impact of real-time navigation services on the urban environment. The study found that when adoption rates exceed a threshold, navigation services may increase travel time and carbon dioxide emissions.

Observational studies involve no controlled factors and rely solely on naturally occurring data. Examples include analyzing Facebook user behavior, providing drivers with advice from Google Maps, data collected from browser logs, robots that simulate human behavior, and experiments that ask volunteers to behave in specific ways. An advantage of observational research, whether based on empirical data or simulations, is that when the data is large and representative, it allows researchers to generalize to a wider population, enhance the applicability of findings, and highlight potential biases across different segments of the population. However, a significant limitation is the difficulty of establishing causality, which requires additional evidence to support any causal claim. In addition, findings from observational studies may be affected by selection bias, measurement errors, or confounding variables, which may compromise their accuracy and reliability.

To illustrate the characteristics of observational research, two examples are given here. Cho et al. analyzed the factors that lead to political polarization on YouTube and found that recommendation systems can contribute to this polarization, but mainly through users’ channel preferences. Fleder et al. investigated the impact of recommendation systems on user purchasing behavior on music streaming platforms. They found that users who were exposed to recommendations purchased more products and became more similar to each other in the mix of products they purchased.

Some studies have gone beyond static investigations of how recommendation algorithms affect user behavior and analyzed feedback loop mechanisms from a theoretical and/or empirical perspective. Some of this work introduces mathematical models that show how the impact of recommenders depends on the parameters of the model. For example, one theoretical study explored whether feedback loops can cause user interests to degenerate: an oracle recommendation algorithm with perfect accuracy can lead users quickly into a filter bubble, while injecting randomness and widening the set of options offered to users slows the process down. This study therefore offers both insight into the feedback loop that leads to information cocoons and potential remedies for it.
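The qualitative claim (perfect accuracy narrows interests quickly, randomness slows the narrowing) can be reproduced in a few lines. The sketch below is a toy model written for this article, not the model from the cited theoretical study: the multiplicative `reinforcement` rule, the `explore_prob` parameter, and the use of Shannon entropy as the measure of breadth are all assumptions.

```python
import math
import random


def interest_entropy(weights):
    """Shannon entropy of a user's normalized interest weights."""
    total = sum(weights)
    return -sum((w / total) * math.log(w / total) for w in weights if w > 0)


def simulate_interest_drift(n_topics=10, steps=200, explore_prob=0.0,
                            reinforcement=0.1, seed=0):
    """Return the entropy of user interests after `steps` recommendations.

    With explore_prob = 0 the recommender always shows the user's current
    favourite topic (an "oracle"); otherwise it sometimes shows a random one.
    """
    rng = random.Random(seed)
    weights = [1.0 + 0.01 * i for i in range(n_topics)]  # nearly uniform start
    for _ in range(steps):
        if rng.random() < explore_prob:
            shown = rng.randrange(n_topics)               # injected randomness
        else:
            shown = max(range(n_topics), key=lambda t: weights[t])
        # Assumed dynamics: consuming a topic strengthens interest in it.
        weights[shown] *= 1.0 + reinforcement
    return interest_entropy(weights)
```

Lower entropy means narrower interests; calling the function with `explore_prob=0.0` and then with larger values shows how exploration keeps the interest distribution broader for longer.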

There is also research that combines theoretical and empirical evaluation. For example, one study investigated how a predictive policing recommendation system based on historical crime data affects the allocation of police forces in urban areas. The authors simulated the following feedback loop: police officers are deployed daily to the areas with the highest predicted crime rates, the crimes discovered by those officers are reported, and the reported crime data is then fed back into the recommendation algorithm. This process iterates continuously. Given this feedback loop, the recommendation algorithm repeatedly directs police attention to the areas that report more crime. Because more officers are present, more crimes are detected in these areas, and in the long run the simulations produce a crime distribution that is unrealistic compared with the observed historical crime data. The authors propose a correction mechanism that reduces the likelihood of deploying police to an area as discovered crime data is incorporated into the recommendation algorithm. A Pólya urn model was used to simulate both the feedback loop and the correction mechanism. The study can be read as showing that small changes in the recommendation system can have a real impact on the human-machine ecosystem.
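A two-region urn makes the runaway effect easy to see. The sketch below is a simplified illustration and not the authors' code: the daily discovery probabilities in `true_rates` are invented, and the `corrected` branch implements one assumed form of correction, namely accepting a discovered crime into the urn only with probability equal to the other region's current share.

```python
import random


def simulate_policing_urn(true_rates=(0.6, 0.2), days=5000,
                          corrected=False, seed=0):
    """Two-region urn; returns the final share of patrols sent to region 0."""
    rng = random.Random(seed)
    urn = [1.0, 1.0]  # "balls" = crimes recorded so far in each region
    for _ in range(days):
        total = urn[0] + urn[1]
        # Deploy today's patrol in proportion to recorded crime.
        region = 0 if rng.random() < urn[0] / total else 1
        crime_discovered = rng.random() < true_rates[region]
        if not crime_discovered:
            continue
        if corrected:
            # Assumed correction: discount reports from the region that is
            # already patrolled more often.
            other_share = urn[1 - region] / total
            if rng.random() < other_share:
                urn[region] += 1.0
        else:
            urn[region] += 1.0  # every discovery reinforces the same region
    return urn[0] / (urn[0] + urn[1])
```

With these illustrative rates, region 0 accounts for 0.6 / (0.6 + 0.2) = 75% of discoverable crime. The uncorrected urn drifts well above that share, while the corrected one stays close to it.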

Although these groundbreaking studies provide valuable insights into the feedback loop mechanism, there is still considerable room for improvement in analyzing human-machine co-evolution. At the empirical level, the data used in these studies paints only an incomplete picture of the interaction between humans and recommenders. Typically, it describes only the user’s choices over a specific period of time, without recording which recommendations were shown and may have influenced those choices.

In addition, we have no information on how often platforms retrain their recommendation algorithms to update what they know about users’ preferences based on users’ choices. As a result, existing studies use the available data only to validate theoretical models of the feedback loop mechanism. To overcome this limitation, we need empirical research based on longitudinal data that describes each iteration of the feedback loop: (i) the recommendations provided to users; (ii) the users’ responses to those recommendations; and (iii) the retraining process, which is shaped by the choices users made in response to earlier recommendations. Such research would allow causality to be observed in both directions rather than one.
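One way to picture such longitudinal data is as a record per loop iteration holding the three elements listed above. The structure below is a hypothetical sketch, not a format proposed in the paper; every field name is an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LoopIteration:
    """One pass through the feedback loop for a set of users (hypothetical)."""
    model_version: str                      # model that produced the recommendations
    recommendations: Dict[str, List[str]]   # (i) user id -> items shown
    responses: Dict[str, List[str]]         # (ii) user id -> items actually chosen
    retrained_on: List[str] = field(default_factory=list)  # (iii) earlier versions whose logs fed retraining


def acceptance_rate(iteration: LoopIteration) -> float:
    """Fraction of shown items that the same user went on to choose."""
    shown = 0
    accepted = 0
    for user, items in iteration.recommendations.items():
        chosen = set(iteration.responses.get(user, []))
        shown += len(items)
        accepted += sum(1 for item in items if item in chosen)
    return accepted / shown if shown else 0.0
```

A sequence of such records, one per retraining cycle, would make it possible to follow how recommendations and choices shape each other over time.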

04 Types of impacts of co-evolution between humans and artificial intelligence

The impact of artificial intelligence on humans is in fact a by-product of the feedback loop generated by the interaction between recommendation algorithms and users. We can measure this impact at different levels: the individual level, the item level, the model level, and the system level. Individual-level impact refers to the influence that recommenders have on users, such as sellers and buyers in the online retail ecosystem and drivers and passengers in the urban mapping ecosystem. Item-level impact refers to how recommendation systems and user choices affect the characteristics of specific objects, such as posts on social media, products on online retail platforms, trips in urban services, and text or images in a content-generation ecosystem. Model-level impact concerns the effect of user choices on the characteristics of recommendation algorithms and large models, including whether the recommendation algorithm changes its behavior and the nature of its recommendations in response to user choices. Finally, system-level impact refers to the collective impact of interactions between humans and recommendation algorithms on a larger scale.

The relevant literature shows that these effects may manifest themselves at the system level as polarization, echo chamber effects, inequality, concentration and isolation. Polarization refers to the sharp division of users or items into different groups based on certain attributes (such as opinions or beliefs). The echo chamber effect refers to an environment in which opinions and choices are confirmed and reinforced within a group. Inequality denotes the uneven distribution of resources among members of a group, while concentration refers to the close clustering of users. In an urban context, concentration often takes the form of congestion. Isolation refers to the separation of user groups from each other. Some effects occur only at the individual or model level: for example, information cocoons (individual level), where users are exposed only to information consistent with their existing beliefs, and model collapse (model level), where the performance of the recommendation system gradually declines as the system and its users continue to co-evolve.

Ultimately, outcomes at all levels are reflected in changes in quantity (i.e., the quantity that measures certain user or item attributes) and diversity (i.e., the diversity of items and user behaviors), and all these changes are natural by-products of the co-evolution of humans and artificial intelligence.

In social media, recommendation systems have achieved considerable success by suggesting new posts to read and new accounts to follow. This co-evolution between users and recommendation systems produces two interrelated feedback loops. First, the interactions between users and posts affect the content that is recommended, and these recommendations affect subsequent interactions between users and future posts. Second, the accounts that users choose to follow affect which accounts are recommended to them, and these recommendations affect subsequent interactions between users and the accounts they follow. These feedback loops may have multiple effects at the individual level (e.g., information cocoons, homogenization) and the system level (e.g., polarization, fragmentation, echo chamber effects). While recommendation systems help users access content and connect with like-minded people, these algorithms can also confine them to information cocoons. Such confinement can lead to significant polarization of opinions and users, contributing to a tendency toward radicalization. For example, as mentioned earlier, personalized recommendations on Twitter excessively expose users to certain political content.

In online retail, recommendation systems play a key role in the success of e-commerce and streaming giants such as Amazon, eBay and Netflix. The co-evolution of these recommendation systems with consumers can create complex feedback loops. Recommended products (e.g., consumer goods, songs, movies) are based on previous purchases, which in turn were influenced by previous recommendations. In this human-machine ecosystem, a key distinction is between collaborative filtering and personalized recommendations. Collaborative filtering is based on the principle that people who buy one item also buy another, and relies on collective user behavior, while personalized recommendations tailor suggestions to individual user tastes. For example, collaborative filtering may increase sales and individual consumption diversity, while at the same time reducing overall consumption diversity and amplifying the success of popular products. On the one hand, recommendation systems help users navigate the huge space of products, reduce choice overload, quickly locate the products they need, and increase platform revenue. On the other hand, at the aggregate level, they may reduce diversity in product purchases (i.e., create filter bubbles around users) and increase concentration, benefiting certain brands and reducing competition.
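The "people who buy one item also buy another" principle can be shown with a co-purchase count. This is a bare-bones sketch of item-to-item collaborative filtering for illustration only; production recommenders are far more elaborate, and the basket data and function names are made up.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_co_purchase_counts(baskets):
    """Count how often each pair of items appears in the same basket."""
    co_counts = defaultdict(Counter)
    for basket in baskets:
        for a, b in permutations(set(basket), 2):
            co_counts[a][b] += 1
    return co_counts


def recommend(co_counts, item, k=3):
    """Return up to k items most often bought together with `item`."""
    return [other for other, _ in co_counts[item].most_common(k)]


baskets = [
    ["bread", "butter"],
    ["bread", "butter", "jam"],
    ["bread", "milk"],
]
co_counts = build_co_purchase_counts(baskets)
top_for_bread = recommend(co_counts, "bread", k=1)  # ["butter"]
```

Because the counts come from collective behavior, items that are already popular appear in more baskets and so get recommended more often, which is one route to the concentration described above.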

Navigation services recommend a route to a destination, taking into account changing traffic conditions and assisting users in exploring unfamiliar areas. As a result, users with the same starting point and destination receive similar recommendations. The impact of navigation services on cities is unclear: while they are designed to optimize individual travel times, they can also cause congestion, leading to longer travel times and higher carbon dioxide emissions. For example, in 2017, Google Maps, Waze, and Apple Maps redirected drivers from congested highways to the narrow, hilly streets of Leonia, New Jersey, causing even more severe congestion. These problems are exacerbated by the co-evolution between drivers and algorithm updates, which creates a feedback loop: the actual travel time on a recommended route depends on the route choices of drivers who were influenced by previous recommendations, and driver behavior in turn changes travel times, shaping subsequent recommendations. In this case, if too many drivers choose the same environmentally friendly route, the route will no longer be environmentally friendly.
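A two-route toy model shows why rerouting helps at low adoption and hurts at high adoption. It uses the standard Bureau of Public Roads (BPR) travel-time function; the two routes, their free-flow times and capacities, and the assumption that every app user is sent to the side street are invented for illustration and do not describe Leonia or any real network.

```python
def bpr_time(free_flow_minutes, volume, capacity, alpha=0.15, beta=4):
    """BPR function: travel time grows steeply once volume nears capacity."""
    return free_flow_minutes * (1 + alpha * (volume / capacity) ** beta)


def average_travel_time(adoption_rate, n_drivers=1000):
    """Mean travel time when a fraction of drivers is rerouted by the app.

    Assumed setup: non-users stay on the highway (20 min free flow,
    capacity 1000); app users take a side street (15 min, capacity 200).
    """
    rerouted = adoption_rate * n_drivers
    staying = n_drivers - rerouted
    highway = bpr_time(20.0, staying, capacity=1000)
    side_street = bpr_time(15.0, rerouted, capacity=200)
    return (rerouted * side_street + staying * highway) / n_drivers
```

Evaluating `average_travel_time` at adoption rates such as 0.0, 0.2 and 0.5 shows the average first falling and then rising sharply once the side street is saturated.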

Large language models, which have emerged recently, are rapidly penetrating areas such as education, politics, and the job market. The use of these models may compress linguistic diversity and thereby standardize the style of generated text. Recent research has shown that when content generated by a large model is used to fine-tune the model itself, regression to the mean may occur, with a loss of linguistic diversity in both the form and the content of the generated text. As more machine-generated web content is used as training data for models, this self-reinforcing process will become more common. At the analytical level, we need to understand this co-evolution in order to develop systems that balance the potential positive impact of feedback loops (language standardization) against the negative impact (compression of linguistic diversity).
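The loss of diversity under self-training has a simple statistical analogue. The sketch below repeatedly fits a normal distribution to samples drawn from the previous fit. It is a toy stand-in for the dynamics described above, not a language model, and the sample size and number of generations are arbitrary assumptions.

```python
import random
from statistics import fmean, pstdev


def simulate_self_training(generations=200, sample_size=20, seed=0):
    """Refit a Gaussian on its own samples; return the spread per generation."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for human-written data
    spreads = [sigma]
    for _ in range(generations):
        # The next "model" sees only data generated by the current one.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu, sigma = fmean(samples), pstdev(samples)
        spreads.append(sigma)
    return spreads
```

The first entry of the returned list is the original spread and the last is the spread after many rounds of self-training; with small samples the spread tends to shrink generation after generation, which is the toy counterpart of losing linguistic diversity.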

05 The social impact of the co-evolution of humans and artificial intelligence

Recommendation algorithms have a significant and often unexpected impact on these social phenomena, which are at the core of human-machine co-evolution because they can amplify trends that already exist in society.

Recommendation systems are often designed to maximize utility and profits for companies and individuals (for example, in urban mapping and online retail ecosystems), but such designs ignore considerations of public utility. A useful starting point for understanding this limitation is the debate around rational choice theory and methodological individualism. Rational choice theory is based on the idea that individuals in society act through rational reasoning and thereby maximize their utility. Methodological individualism interprets social outcomes as the sum of the relational behaviors of all people in society. Recommendation systems designed on the basis of methodological individualism may not take collective utility into account.

Historically, there have been many examples of damage to society caused by pervasive individualism. For example, Thomas More’s account of the enclosure movement illustrates a paradox that may also arise in the co-evolution of humans and recommendation algorithms. The enclosure movement was a large-scale movement to privatize land that had originally been used collectively. Similarly, recommendation algorithms driven by individual utility may reduce the potential collective utility brought about by the development of artificial intelligence, thereby strengthening polarization mechanisms that already exist in society.

The co-evolution of humans and artificial intelligence is closely related to the operation of capitalism: major technological developments in recommendation algorithms and artificial intelligence occurred during a period when rational choice theory, methodological individualism, and neoliberal economics dominated. As in Kean Birch’s concept of automated neoliberalism (which holds that digital platforms shape markets and that the accumulation of personal data changes individual lives), algorithms have the potential to automate social relationships.

However, solutions that may help improve collective utility are often difficult to achieve because of the role recommendation algorithms play. The co-evolution of artificial intelligence and society takes place against the backdrop of an imbalance between the platforms that own the dominant recommendation algorithms and the users who use these algorithms uncritically. The ancient question of who owns the means of production is particularly prominent today and is taking on new forms in an environment of human-machine co-evolution. In this regard, by owning the dominant recommendation algorithms and thus generating the most interactions, platforms can have a transformative impact on the economy and society.

This article does not elaborate on the political economy of the co-evolution of humans and artificial intelligence, that is, a systematic analysis of the interrelationship between asset ownership and the influence of recommenders. However, this aspect is an essential background element for discussing, at least theoretically, the potential unintended consequences of co-evolution between humans and recommendation algorithms. If recommendation algorithms strongly shape the individual choices that in turn shape collective outcomes, we must reflect on how those choices are made.

In addition, co-evolution can further amplify these social effects and increasingly undermine collective utility. The opposite reasoning also holds: in different socio-economic contexts, if recommendation algorithms aim to achieve common collective goals, they can promote positive social outcomes through co-evolution. This underscores that solutions based solely on technology, that is, on the belief that technology is the answer to any challenge we face, carry higher risks. We need more research on the co-evolution of humans and artificial intelligence to guide it toward positive results. A review of recent research shows that artificial intelligence tends to exacerbate social divisions, especially for historically marginalized groups (for example, research on the United States points to the impact of race and gender). These patterns are more evident in low- and middle-income countries. It is reasonable to assume that, because we lack research that measures human-machine co-evolution and feedback mechanisms, the unequal impact of artificial intelligence has been underestimated or overestimated.

The co-evolution of humans and artificial intelligence may exacerbate inequality and concentration in systems of different kinds. For example, in social media, recommendation systems may exacerbate exposure inequality at the individual level, intensifying the rich-get-richer effect regardless of user attributes or network characteristics. In online retail, purchase-based collaborative filtering may encourage users to buy more products, but it may also increase concentration by prompting users to buy the same products. In urban mapping, ride-hailing services (such as Uber and Lyft) can misread low ride volumes in poor and Black communities as a sign of low user demand, exacerbating existing racial and socioeconomic inequalities. In content generation ecosystems, the self-reinforcement of such algorithms can lead to a loss of diversity in the generated content.
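Exposure inequality of the kind described above is often summarized with a Gini coefficient. The sketch below uses the standard rank-based formula; applying it to per-account exposure counts is an assumption made for illustration, and the text does not say which inequality measure the cited studies use.

```python
def gini(values):
    """Gini coefficient of non-negative values.

    0 means perfectly equal; values near 1 mean exposure is concentrated
    on very few accounts or items.
    """
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    weighted = sum(rank * x for rank, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n
```

Tracking this number across retraining cycles is one way to check whether a rich-get-richer effect is growing over time.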

06 Challenges faced by the research on co-evolution of humans and artificial intelligence

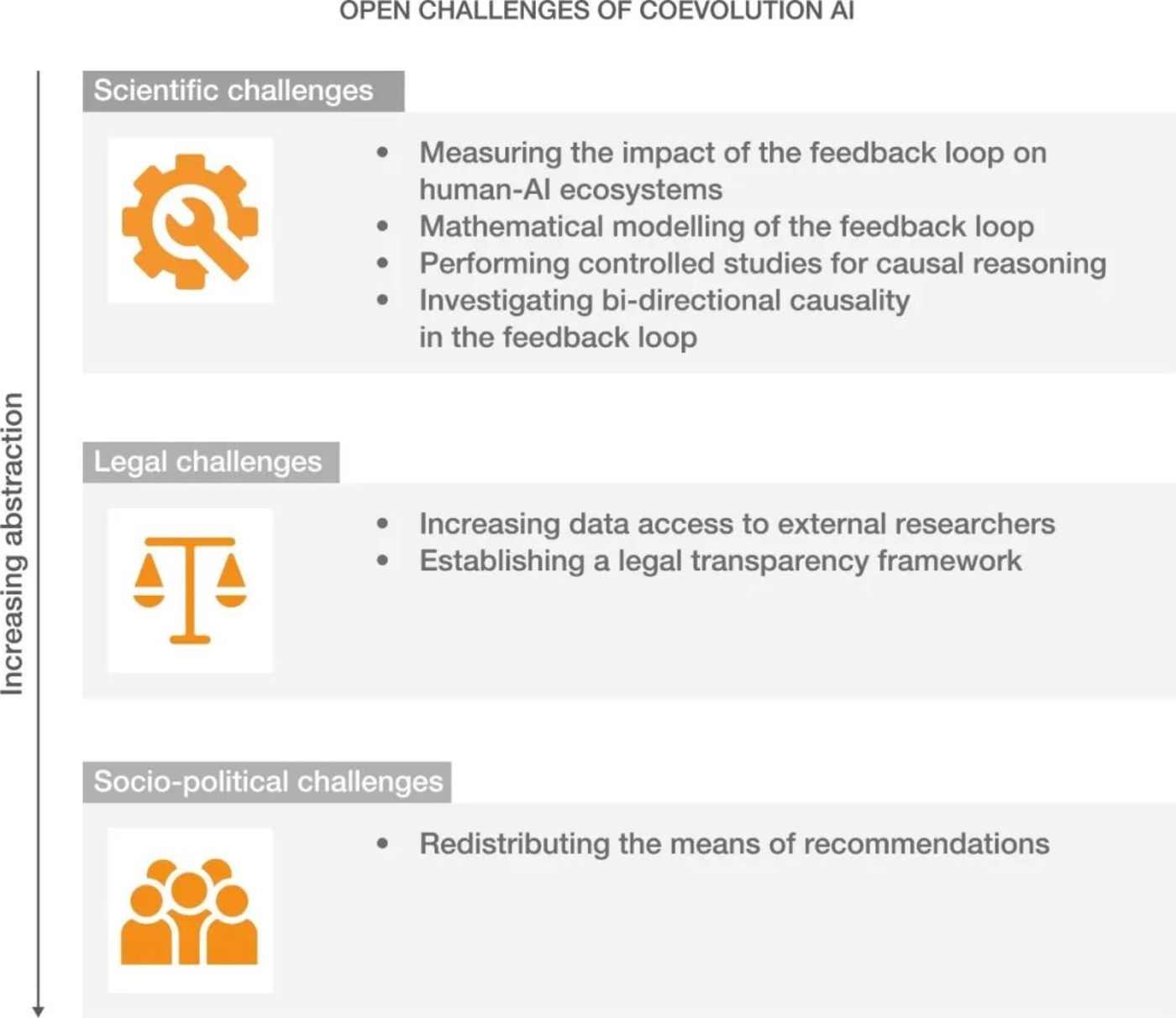

Research on the co-evolution of humans and artificial intelligence poses important challenges for the future. These challenges can be divided into scientific, legal, and sociopolitical aspects (see Figure 4).

▷ Figure 4. Three important challenges posed by human-machine co-evolution for the future

From a scientific perspective, we need a way to continuously measure the impact of feedback loops on the behavior of humans and recommenders. This can be achieved by tracking how the impact changes each time the recommendation algorithm is retrained. For example, one can measure the variety of products a user purchases over a specific period and ask to what extent the feedback loop amplifies or reduces this diversity over a longer time scale, or how many consecutive iterations of the loop are needed to change it. Similarly, we can track how recommenders change after retraining: for example, how long does it take for a large generative model to collapse and lose linguistic diversity?
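As a concrete, hedged example of such a measurement, purchase diversity can be computed per retraining period and compared over time. Shannon entropy is only one possible diversity measure, chosen here as an assumption, and the per-period purchase lists are hypothetical input.

```python
import math
from collections import Counter


def shannon_diversity(purchases):
    """Shannon entropy (in nats) of the product types in one period."""
    counts = Counter(purchases)
    total = sum(counts.values())
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def diversity_trajectory(purchases_by_retraining):
    """Diversity after each retraining cycle, in chronological order."""
    return [shannon_diversity(period) for period in purchases_by_retraining]


periods = [
    ["book", "music", "film", "game"],  # before the first retraining
    ["book", "book", "music", "film"],
    ["book", "book", "book", "music"],
]
trajectory = diversity_trajectory(periods)  # strictly decreasing in this example
```

Counting how many consecutive periods it takes for the trajectory to drop by a chosen amount is one way to quantify the number of loop iterations needed to change purchasing diversity.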

An important technical factor in studying human-machine co-evolution is the set of biases that arise at different stages of the feedback loop: collecting data from users may introduce user selection bias and exposure bias; inductive bias may arise when recommendation systems learn from data; and popularity bias and unfairness may arise when recommendation results are fed back to users. These biases, together with other issues such as data pollution, can shape feedback loops and their impacts at the individual, item, model and system levels. The interaction between biases at different stages of the feedback loop has not been fully measured in the current literature.

Another fundamental issue worthy of attention is the balance between conformity and diversity. From a mathematical modeling perspective, we need to make a conscious effort to better capture feedback loops and their impact on the human-artificial intelligence ecosystem. At an epistemological level, understanding the causal interactions between humans and recommenders is crucial; however, the co-evolutionary perspective is ignored in much of the existing literature. There are steps we can take to alleviate this problem, such as developing controlled studies that take feedback loop mechanisms into account. In addition, we must move beyond a one-way view of cause and effect and explore the continuous influence that humans and recommendation algorithms exert on each other in both directions, which requires a comprehensive study of the dynamics of their co-evolution.

In addition to scientific challenges, other obstacles may hinder our ability to study the emerging phenomenon of human-machine co-evolution: researchers outside the platforms have no access to data, and the design and application of recommendation systems on different platforms are not sufficiently transparent. This seriously undermines the repeatability and replicability of potential research on human-machine co-evolution. Initiatives like the European Union’s Digital Services Act could ease this obstacle, but how vetted researchers will be allowed to access private platforms remains unclear. In addition to new legal transparency frameworks, one potential way to overcome these obstacles could be to develop specialized APIs that encourage external researchers to interact with the platform and conduct controlled experiments by changing recommenders’ parameters or establishing experimental and control groups of users. Governments should encourage platforms to establish a culture of continuous evaluation of the impact of feedback loops, drawing on existing experience such as emission reduction standards and the regulation of drug side effects.

Increasing transparency also requires addressing other fundamental challenges at the social and political level. The degree of concentration of recommendation algorithms is crucial here. In an environment where large technology companies enjoy oligopolistic status, recommendation algorithms are tuned to generate high profits for a few. In this regard, without political intervention to redistribute the channels and means of recommendation, human-centered research on human-AI co-evolution may neglect many small user markets and broader social impacts. Such interventions may help develop more transparent data access rules and a fairer allocation of the means of recommendation. In the long run, small changes in recommendation algorithms or human behavior can have a significant impact on social outcomes, whether positive or negative. This may introduce a butterfly effect into the feedback loop, which we must study and understand.

The challenges discussed in this article will persist even if future online platforms undergo profound changes, such as the emergence of decentralized platform architectures, user ownership of data, the maturing of AI agents, and new models of platform supervision and management. These challenges are not only related to scholars’ ability to conduct research; they go far beyond it and affect the social and political spheres. Only by accurately measuring and understanding the impact of recommendation algorithms on human behavior can we give policymakers the information they need to make informed decisions that take into account not only the world as it is, but also the world as it will become. This understanding is critical to designing appropriate policies that avoid the potential negative externalities of uncontrolled co-evolution between humans and recommendation algorithms. We are committed to building a socially centered artificial intelligence for the future that will be part of the solution to long-term social problems, not part of the problem.

07 Compiler’s afterword

First TikTok refugees flocked to Xiaohongshu (Little Red Book), and now DeepSeek is turning out liberal-arts writing. The co-evolution of humans and artificial intelligence discussed in this article has become closely relevant to you and me. The article focuses on recommendation systems because they are relatively mature and there is enough relevant research. Large models and the agents built on them may prove more far-reaching: they can be used not only for recommendations but also in education, and they influence the job market. However, because there is not yet enough relevant research, the article does not discuss them at length. In addition, the article does not address the impact of artificial intelligence on human culture and politics.

After translating this article, I feel that the article mainly discusses the negative impact of the co-evolution of humans and artificial intelligence. The title of the article predicts that in the ecosystem composed of humans and artificial intelligence, humans are like mitochondria, providing data for artificial intelligence as nourishment. However, in a more ideal state, artificial intelligence is responsible for providing automation under human supervision to ensure the bottom line of human nature, and those that cannot be defined by automation are the upper limit of human nature.

Source for this article: Pedreschi, Dino, et al. “Human-AI coevolution.” Artificial Intelligence (2024): 104244.