Article source: Fixed Focus One

Image source: Generated by AI

The last time the AI industry caused a nationwide sensation was when ChatGPT launched in November 2022. Since then, every major shift in the AI industry has been called a “ChatGPT moment.”

That phrase was rewritten at the end of 2024, when the “DeepSeek moment” emerged and came to be seen as a new turning point in the history of AI.

In the run-up to the 2025 Spring Festival, DeepSeek, an AI company based in Hangzhou, China, released two open-source models in succession: V3 (December 26, 2024) and R1 (January 20, 2025).

DeepSeek claims that V3 performs close to closed-source models such as OpenAI’s GPT-4o and Anthropic’s Claude-3.5-Sonnet, outperforms Meta’s open-source Llama 3, and cost only US$5.576 million in total to train. The reasoning model R1 performs close to OpenAI o1, while its API (Application Programming Interface) price is only 3.7% of o1’s.

DeepSeek is a startup founded on July 17, 2023, yet it holds tens of thousands of Nvidia chips and has trained a well-performing large model at about 7% of the cost incurred by overseas AI giants. As early as May 2024, the company formally set off the price war among China’s large models, drawing the attention of major players such as ByteDance, Alibaba, and Baidu. By the end of the year, it had carried that price war overseas.

DeepSeek’s emergence sent computing-power stocks tumbling worldwide. Amid a broad decline in U.S. technology stocks, Nvidia’s share price fell nearly 17% and its market value shrank by nearly US$600 billion, the largest single-day loss of market value in U.S. stock market history. OpenAI and Google have also rushed out their latest models in recent days, and the AI industry is white-hot.

After DeepSeek took off, Silicon Valley giants began to flip the table. OpenAI said it had found evidence that DeepSeek was “distilling” OpenAI’s models. Anthropic co-founder and CEO Dario Amodei published an essay downplaying the breakthrough made by R1 and calling for tighter export controls on computing power to China.

Setting aside the emotions behind this frenzy, this article attempts to clarify whether DeepSeek is “overvalued” and what ripple effects it will have on the AI industry in China and abroad.

If you want to wear its crown, you must bear its weight

DeepSeek-R1 has been out for more than 20 days, and it has come under as much pressure as it has received praise.

Lin Zhi, an AI industry practitioner, summarized for “Fixed Focus One” where DeepSeek’s reputation comes from: 1. It is completely free to use. 2. It shows its thought process when chatting with users, which in turn helps users refine how they phrase questions and improves the dialogue experience. By contrast, o1 does not publish its thinking process, possibly for fear that competitors will copy it to train their own models. 3. Its technical papers and models are open-sourced without reservation, whereas some makers of large open-source models still keep their best versions to themselves.

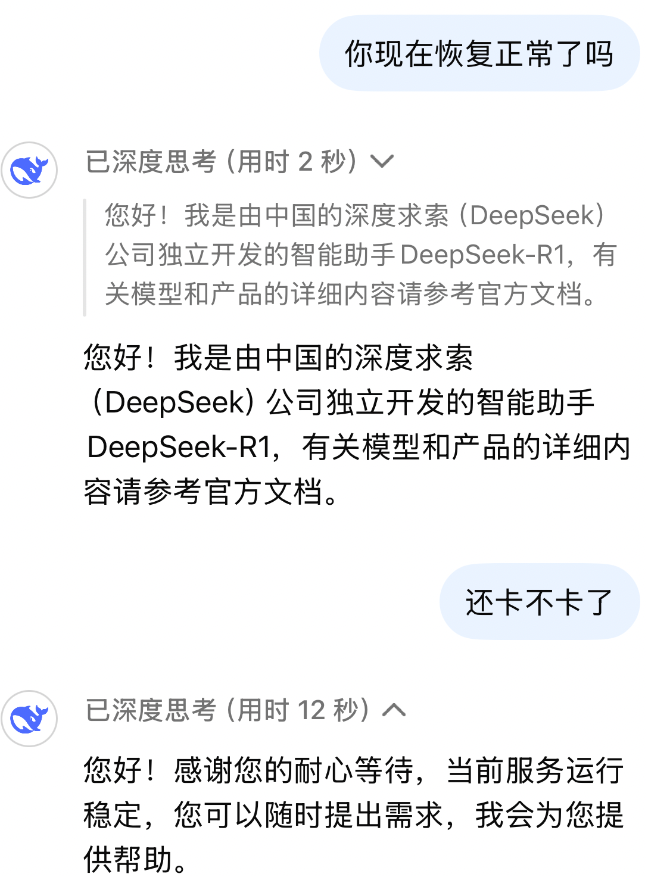

However, users drawn in by the hype found a few days ago that DeepSeek suffered frequent outages and was almost unusable. The reason was that the company’s servers had come under large-scale malicious DDoS attacks. As of press time, DeepSeek had returned to normal service.

DeepSeek said the service is running stably

Of course, these characteristics only gave DeepSeek a foundation for spreading by word of mouth. Part of the reason it broke out so widely is that it rattled overseas AI giants into “losing their composure” and “flipping the table.”

To the many people who doubt whether DeepSeek is innovative, DeepSeek has responded in the technical papers it published on V3 and R1: 1. The V3 model makes architectural innovations using a number of self-developed technologies, including the DeepSeekMoE + DeepSeekMLA architecture and MTP (multi-token prediction), which make low-cost training possible; 2. The R1 model drops the HF part of traditional RLHF (Reinforcement Learning from Human Feedback) and trains directly with pure reinforcement learning (RL), which validates the primacy and effectiveness of RL and further improves training efficiency.
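
To illustrate what training without the “HF part” can look like, here is a minimal sketch of a rule-based reward function of the kind a pure-RL setup might use in place of human preference labels. The `<think>` tag format, the reward weight, and all function names are illustrative assumptions; this is not a reproduction of DeepSeek’s training code.

```python
import re

# Assumed (hypothetical) output format: reasoning inside <think>...</think>,
# followed by the final answer.
THINK_PATTERN = re.compile(r"^<think>(.+?)</think>\s*(.+)$", re.DOTALL)


def format_reward(completion: str) -> float:
    """Return 1.0 if the completion follows the assumed format, else 0.0."""
    return 1.0 if THINK_PATTERN.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference_answer: str) -> float:
    """Return 1.0 if the text after </think> exactly matches the reference."""
    match = THINK_PATTERN.match(completion.strip())
    if match is None:
        return 0.0
    final_answer = match.group(2).strip()
    return 1.0 if final_answer == reference_answer.strip() else 0.0


def total_reward(completion: str, reference_answer: str,
                 format_weight: float = 0.2) -> float:
    """Combine both rule-based signals; no human rater is involved."""
    return (accuracy_reward(completion, reference_answer)
            + format_weight * format_reward(completion))


if __name__ == "__main__":
    print(total_reward("<think>2 + 2 is 4</think> 4", "4"))
    print(total_reward("4", "4"))
```

The point of the design is that both signals can be checked automatically, so the reward scales with compute rather than with the number of human annotators.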

This also means DeepSeek has shown that it can indeed “build a model whose performance approaches that of the giants at a training cost of less than US$6 million (which can be understood as the net cost of computing power).”

However, SemiAnalysis, a semiconductor market analysis and forecasting firm, pointed out that the US$5.576 million figure mainly refers to the GPU cost of pre-training the model. Once factors such as server capital expenditure and operating costs are taken into account, DeepSeek’s total costs could reach US$2.573 billion over four years.

What cannot be ignored is that the cost of innovation was already falling, and DeepSeek has only accelerated the process. Cathie Wood, founder and CEO of Ark Investment Management, pointed out that even before DeepSeek, the cost of AI training was falling by 75% per year, and the cost of inference was falling by as much as 85% to 90%.

Wang Sheng, a partner at Innoangel Fund, shares this view. For example, a model released at the end of the year would cost far less to produce than the same model released at the beginning of the year, possibly as little as one-tenth. Moreover, as a closed-source company, OpenAI may be overstating the computing costs it discloses, because it needs to preserve part of its profit margin and keep reinforcing the story of high costs in the capital market in order to raise more investment.
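
The decline rates quoted above compound quickly. As a rough sketch (the function names are hypothetical, and a constant annual rate is a simplifying assumption), the following shows how a steady percentage decline translates into remaining cost, and what annual rate a drop to one-tenth within a year implies.

```python
def remaining_cost_fraction(annual_decline: float, years: float) -> float:
    """Fraction of the original cost left after `years` of a constant
    annual decline (0.75 means costs fall 75% per year)."""
    if not 0.0 <= annual_decline < 1.0:
        raise ValueError("annual_decline must be in [0, 1)")
    return (1.0 - annual_decline) ** years


def implied_annual_decline(remaining_fraction: float, years: float) -> float:
    """Constant annual decline implied by reaching `remaining_fraction`
    of the original cost after `years`."""
    if not 0.0 < remaining_fraction <= 1.0 or years <= 0:
        raise ValueError("need 0 < remaining_fraction <= 1 and years > 0")
    return 1.0 - remaining_fraction ** (1.0 / years)


if __name__ == "__main__":
    # Training at a 75% annual decline: cost left after two years.
    print(remaining_cost_fraction(0.75, 2))   # 0.0625
    # A drop to one-tenth within a single year, as an annual rate.
    print(round(implied_annual_decline(0.1, 1), 4))
```

Under these assumptions, a 75% annual decline leaves about 6% of the original cost after two years, and a fall to one-tenth within one year corresponds to a 90% annual decline, the upper end of the inference range cited above.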

However, DeepSeek’s value lies not only in being “cheap” but also in its story as a “dragon-slaying youth.”

Before ChatGPT was born, and before China faced restrictions on computing power, DeepSeek already had a reserve of more than 10,000 GPUs. This goes back to the quantitative trading that DeepSeek founder Liang Wenfeng began exploring in 2008: applying deep learning models to live trading requires a large reserve of computing power. From 2019 to 2021, Liang Wenfeng’s other company, Magic Square (High-Flyer), independently built the “Firefly One” and “Firefly Two” AI clusters, stockpiling a large number of chips and a deep bench of technical talent.

Magic Square gave Liang Wenfeng a great deal: enough GPUs, a feel for AI, and engineering capability at the model level. Liang Wenfeng in turn gave DeepSeek a great deal: an orientation that is not profit-driven, pure curiosity about and a desire to explore AGI, and a sufficiently open mindset. Some industry insiders say Magic Square once supplied GPUs to algorithm research institutions at very low prices.

Such a story cannot be replicated and has an aesthetic appeal of its own, which has also helped DeepSeek win nationwide popularity.

Who has DeepSeek panicked?

DeepSeek’s breakout was a single stone that stirred up a thousand waves, affecting companies up and down the AI industry chain in both China and the United States.

The first to be hit are chatbot AI applications. According to data from the AI Product List, DeepSeek had more than 20 million daily active users around Lunar New Year’s Eve 2025, surpassing Doubao and Kimi to rank first in China. DeepSeek also passed 100 million users in just one week, whereas ChatGPT took two months.

In fact, on almost the same day that DeepSeek released R1, Moonshot AI (Dark Side of the Moon) launched its own Kimi k1.5 reasoning model, free to use in Kimi. The Doubao app also rolled out real-time voice calls to all users. Both announcements were drowned out, however, and both products’ daily active users took a hit.

Lin Zhi believes the episode fully demonstrates that user loyalty to chatbot models is very low. Once a more powerful, cheaper, and faster model appears, everyone migrates.

In terms of product form, however, Doubao has already integrated multimodal models into its product, while DeepSeek currently offers only dialogue, and the experience is unstable. Although DeepSeek released Janus-Pro 7B, an open-source text-to-image model, on Lunar New Year’s Eve (January 28), it has not yet been integrated into DeepSeek’s website or app.

Top: Doubao; bottom: DeepSeek

Until a true killer application emerges, the competition still comes down to the underlying large-model capabilities. At that level, the second group of companies directly affected by DeepSeek are those developing their own large models.

From an investor’s perspective, Wang Sheng pointed out that since May 2024, when DeepSeek released its V2 model and set off the price war among China’s large models, the industry has largely reached a consensus. Among the domestic giants, the best model to use is Alibaba’s Qwen, while Doubao was not good enough in 2023 but improved rapidly in the second half of 2024. Among the startups, DeepSeek and Kimi are growing fastest, while the other five “little dragons” (01.AI, MiniMax, Baichuan Intelligence, Zhipu AI, and StepFun) have variously pivoted, given up, or taken backing from state-owned capital, and their growth has gradually slowed. The “six little dragons” lineup has essentially fallen apart.

To some extent, these closed-source large-model companies face the same questions as the foreign giants: Can training costs be reduced? Is there a more efficient way to train? Will the API price war flare up again?

As for whether DeepSeek will reshape the chip market, many industry insiders say the contest over computing power will not disappear but has entered a stage of reassessment. Nvidia had been overheated, and its share price has now merely returned to a reasonable range; in the end, Nvidia’s value will still rise. In other words, Nvidia is not a victim of DeepSeek. On the contrary, as model application scenarios expand, the more models are “democratized,” the greater the demand for computing power becomes.

DeepSeek has pulled everyone back from the feverish pursuit of AGI’s upper limit to the reality of deploying AI in industry. It offers relatively high capability at very low cost, can spur innovation across the industry chain, and will benefit AI-native applications and the development of AI hardware. “2025 will be the first year of AI commercialization,” Lin Zhi said.

Meanwhile, DeepSeek has shown that the domestic AI industry can partially achieve domestic substitution all the way from chips to models, which has boosted industry confidence. During the Spring Festival, domestic cloud service vendors and GPU manufacturers all deployed DeepSeek.

However, as it is pushed step by step onto the “altar,” the biggest impact on DeepSeek may come from its own choices.

Some sources said that Alibaba was planning to invest US$1 billion for a 10% stake in DeepSeek at a valuation of US$10 billion. That valuation would exceed those of Kimi developer Moonshot AI (US$3.3 billion) and Zhipu AI (US$2 billion). Alibaba denied the report, and some pointed out that DeepSeek, backed by Magic Square, has never sought financing, but the market still worries that other strategic partners are approaching DeepSeek.
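
The rumored terms can be checked with simple arithmetic. The sketch below is illustrative only: the function name is hypothetical, and it assumes the reported valuation is post-money (that is, it already includes the new investment), which the report does not specify.

```python
def implied_post_money_valuation(investment_bn: float,
                                 stake_fraction: float) -> float:
    """Valuation (in US$ billions) implied by buying `stake_fraction`
    of a company for `investment_bn`, assuming a post-money figure."""
    if not 0.0 < stake_fraction <= 1.0:
        raise ValueError("stake_fraction must be in (0, 1]")
    return investment_bn / stake_fraction


if __name__ == "__main__":
    rumored = implied_post_money_valuation(1.0, 0.10)
    print(f"Implied valuation: US${rumored:.1f} billion")
    # Valuations of the two peers cited in the text, in US$ billions.
    for name, valuation_bn in [("Moonshot AI", 3.3), ("Zhipu AI", 2.0)]:
        print(f"{name}: {rumored / valuation_bn:.1f}x")
```

On these figures, US$1 billion for 10% is consistent with the US$10 billion valuation, roughly three times Moonshot AI’s and five times Zhipu AI’s.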

This may be the outcome the market least wants to see. DeepSeek, which landed a “windfall of fame and fortune” this Spring Festival, started out as a company with no strings attached. Liang Wenfeng has told the media that the biggest difference from big tech companies’ models is that “big tech companies are tied to their platforms or ecosystems, and we are completely free.” Some worry that if DeepSeek takes money from any strategic investor this time, the story of the AI “six little dragons” may repeat itself with DeepSeek.

DeepSeek’s new paradigm still has room to grow

From a broader perspective, overseas giants take DeepSeek’s rise so seriously because of the contrast between two paths.

Wang Sheng explained that on the road to AGI, the AI industry tends to choose between two paths. One is the “computing power arms race” paradigm: pile on technology, money, and computing power, push large-model performance to a high point first, keep raising the upper limit of AGI’s capabilities, and only then think about deployment in industry. The other is the “algorithmic efficiency” paradigm: aim at industrial deployment from the start, and deliver low-cost, high-performance models through architectural innovation and engineering capability.

In the past, competition among large-model companies was essentially a bet on the “computing power arms race” paradigm. Under it, OpenAI, Anthropic, and Google, as well as China’s AI “six little dragons,” are all capital-intensive companies.

Because the capital required is so large, the capital market can support only a handful of companies, and market concentration among AI giants is far higher than in other industries.

Around the time DeepSeek-R1 was released, U.S. President Trump announced “Stargate,” a US$500 billion AI infrastructure project in which OpenAI, SoftBank, and Oracle have all pledged to participate. Earlier, Microsoft said it would invest US$80 billion in AI infrastructure in 2025, while Meta’s Mark Zuckerberg plans to invest more than US$60 billion in the company’s AI strategy in 2025.

One piece of market context cannot be ignored. In the past, everyone chased continuous growth in AGI capabilities, on the logic that as long as model performance grew fast enough, competitors would never catch up with the leaders no matter how they later optimized their data engineering. But around November 2024, the argument that “high-quality text training data is about to run out” sounded the alarm across the industry. If the data supply stagnates, model training may stagnate too. Everyone realized that the earlier brute-force training approach may indeed hit a bottleneck: even with more computing power, longer training time, and more data, capability growth would nearly stall.

Photo source/ Unsplash

By this point, some companies had in fact already concluded that the “algorithmic efficiency” paradigm was the feasible one for now, but DeepSeek was the first to deliver on it. “Its series of models also demonstrates that, when the ceiling cannot be raised, a paradigm focused on optimizing efficiency rather than growing capability is feasible,” Wang Sheng said.

Against this backdrop, DeepSeek has appeared as a “spoiler,” and the American AI giants’ capital-market story that “spending big on models is worth it” is gradually losing its hold.

DeepSeek has entered the market with open-source models and is seen as relying on the power of an ecosystem to challenge the leaders, while leaders tend to become more and more closed for fear of being disrupted.

“In fact, the mainstream routes in China and the United States are now exact opposites,” Lin Zhi said. Before Alibaba’s Qwen caught up in performance, the world’s most mainstream open-source model was Meta’s Llama. In overseas markets, Llama at one point fell behind closed-source models such as those from OpenAI and Anthropic’s Claude. In China, however, it is open-source models that currently hold up the large-model landscape.

However, many industry insiders believe there is no cause for excessive optimism: DeepSeek can only be said to have given 2025 a good start. Competition continues, and gaps still exist.

Several overseas giants have launched new models recently. On February 1, OpenAI released its latest reasoning model series, o3-mini, the first OpenAI reasoning model open to free users. On February 6, Google officially announced an update to the Gemini 2.0 family; the Gemini 2.0 Flash-Lite version is billed as Google’s most cost-effective model so far.

As Liang Wenfeng himself has said, although specific technical directions keep changing, the combination of models, data, and computing power remains constant. Data engineering is also a very important part of this. Although OpenAI faces infringement disputes, it has built up its own database. Doubao has also stated that, in the wake of the TikTok incident, it will not carry out data distillation. A “self-built database” has become one of the moats of the big tech companies.

In addition, Wang Sheng noted that in terms of trade-off curves, the path DeepSeek has chosen puts its focus on engineering optimization, which makes it hard to break through the upper limit of capability. “If it keeps iterating new versions with its existing methods, how much can capabilities improve? That is an open question.”

Since his student days, Liang Wenfeng has shown enthusiasm for exploring AGI and a drive for continuous innovation. So far, DeepSeek has merely sidestepped ineffective or failed attempts. It should not be denied that on the former path, the giants have spared no expense and tried all kinds of unproven approaches to push out the boundaries of AGI.

The ripples in the ocean stirred by DeepSeek continue to expand.