Text | Mirror Studio, Author | Ye Mei, Editor | Lu Zhen

The turmoil DeepSeek has brought to Silicon Valley is far from over, and OpenAI’s recent moves prove it.

Gone is the drip-feed approach of its earlier "12 days, 12 launches" campaign, which doled out news a little at a time to keep audiences waiting. Instead, OpenAI rolled out a batch of new products, features and concessions in one go: it released the new reasoning model o3-mini and opened a reasoning model to free users for the first time; it opened ChatGPT search to all users without requiring registration; it launched Deep Research, an agent product for in-depth research; and it let users view o3-mini’s chain of thought in more detail.

Even OpenAI CEO Sam Altman admitted for the first time that OpenAI’s closed-source strategy has been on the wrong side of history. He said that although it is not currently the top priority, OpenAI still needs to figure out a different open source strategy.

● Picture source: Internet screenshot

With rivals catching up and its lead shrinking, what is OpenAI’s top priority?

It may be to enter the larger market of ordinary consumers. According to media reports, OpenAI will soon air its first TV commercial during Sunday’s Super Bowl, the American equivalent of the Spring Festival Gala. The company has rarely bought advertising in the past.

It may also be to accelerate the transformation of its corporate structure. At the end of last year, OpenAI officially announced that it would reorganize its for-profit arm, currently controlled by a non-profit organization, into a Delaware public benefit corporation (hereinafter referred to as PBC).

In a public statement in December, OpenAI said that to compete with everything from commercial products like ChatGPT to open source LLMs, and to keep innovating on safety, the company will need to raise more capital than in the past. The original, complex structure no longer works, so it has turned to a conventional equity structure in the form of a PBC.

AI competition has long gone beyond pure technical competition. It is also related to how a company builds new organizational forms and management methods, not only to obtain enough funds to promote technological development, but also to ensure that development is universally beneficial, responsible and safe.

Now DeepSeek has quickly attracted a large number of small and medium-sized enterprises and developers with its open source model and extremely low training costs, directly threatening OpenAI’s paid API business. OpenAI is caught in a dilemma: the cost of staying ahead keeps rising while latecomers can catch up easily, yet as the leader it has no other path to take.

OpenAI needs more investment to buy time for itself. First, it must remove the shackles that bind its hands and feet.

Shaking off the "non-profit" shackles

As its name suggests, OpenAI had a grand vision when it was founded: to build an open and shared AGI, to counter the monopolization of artificial intelligence by large companies or a handful of people, and to ensure that the benefits of artificial intelligence are distributed widely and evenly among all of humanity.

Under this vision, OpenAI has two goals: one is to promote the development of artificial intelligence while ensuring safety, and the other is to ensure that the results of development are not occupied by a few people.

But these two goals conflict to a certain extent. Developing artificial intelligence requires huge amounts of money, and that money comes from a few rich people or large companies. In 2018, after a disagreement over direction, Musk, the main early funder, withdrew his funding, plunging OpenAI into a major crisis. Altman left his position at a venture capital institution to become OpenAI’s full-time CEO, and one of his main tasks was to find money.

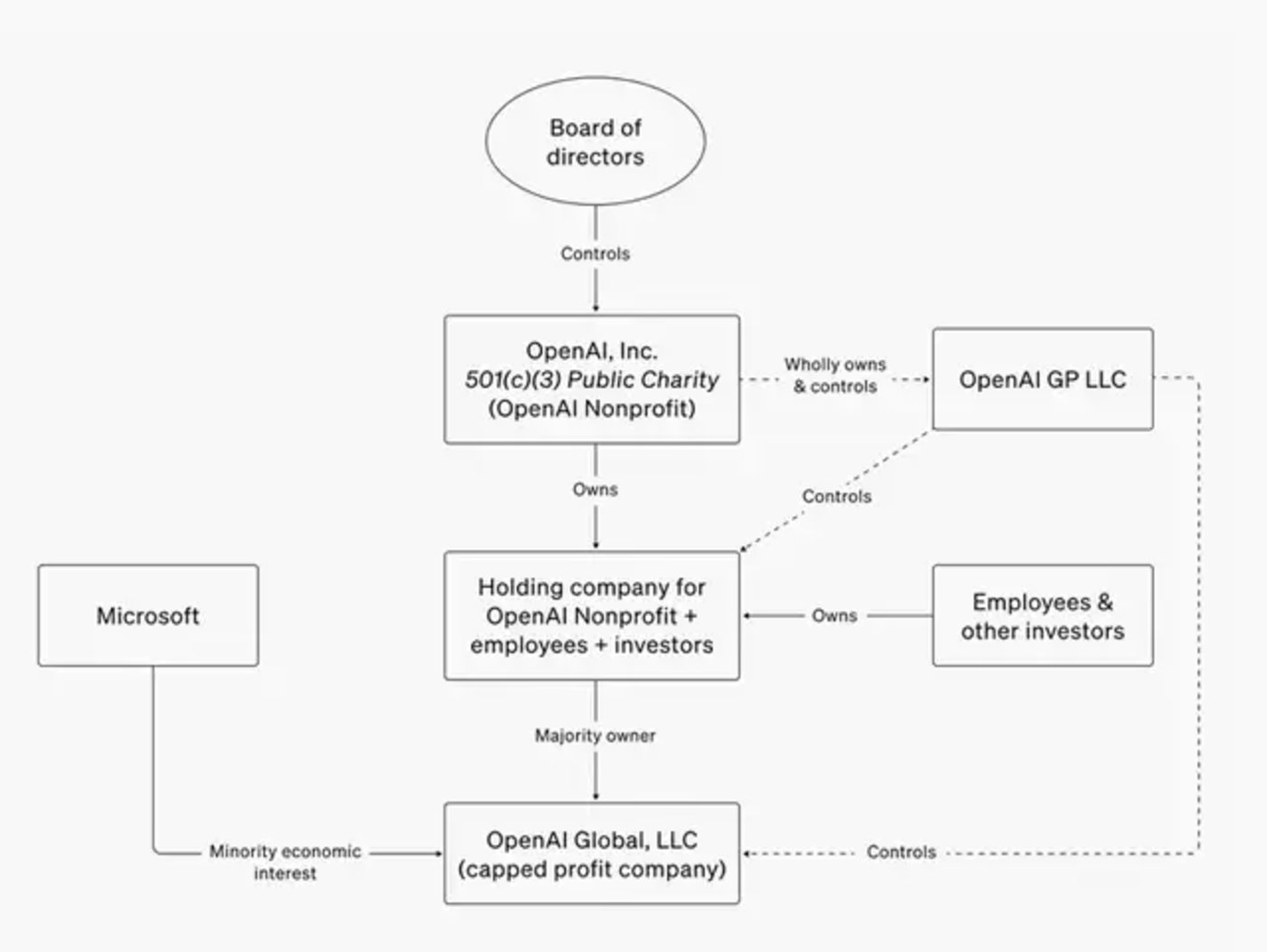

To raise more funds without abandoning OpenAI’s original mission, in 2019 Altman designed a hybrid structure, turning the non-profit organization founded in 2015 into a for-profit entity controlled by a non-profit organization. Under this structure, the non-profit organization is responsible for steering research and development and for ensuring that research results are safe, open and shared, while the for-profit entity can raise funds, carry out the actual AI research, development and commercial operations, and distribute profits to investors.

This structure expresses a clear requirement to all potential investors: You can invest in me, and I will distribute profits to you, but you cannot interfere with my specific decisions, let alone take my research and development results for yourself.

Microsoft was the first to take the plunge. Over the past few years it has invested more than US$10 billion in OpenAI, yet it cannot hold a seat on the OpenAI board of directors or influence OpenAI’s decisions. All it gets is priority access to OpenAI’s technical achievements and a share of the profits. Even that return is capped, and once OpenAI declares that AGI has been achieved, Microsoft will no longer be able to benefit from it.

Under this structure, anyone with a commercial interest, whether an external investor like Microsoft or an employee holding shares in the for-profit entity, is barred from the OpenAI board of directors and from OpenAI’s major decisions. Members of the non-profit organization and its board must disclose their income, hold no equity in the for-profit entity, and stay free of commercial influence, so that the AGI mission is pursued fairly and impartially.

Even Altman himself lost his job because of the structure he designed. The OpenAI board of directors felt that he was too aggressive on commercialization and product development, in violation of OpenAI’s safety principles, and fired him without consulting him.

● OpenAI’s organizational structure

The ouster crisis of November 2023 quickly exposed the problems in this seemingly reasonable structure. Because board members of the non-profit organization cannot hold shares in the company, they represent only the public-interest side, and the voices of major stakeholders such as Microsoft and employees are missing. When the ouster happened, Microsoft, the largest investor, was not informed right away and was forced to bear huge risk.

At this rate, no one will be willing to continue to spend money on OpenAI’s dream.

In addition, the board’s decision-making was opaque and lacked adequate consultation, which made the company extremely unstable. The internal division also weakened OpenAI’s ability to compete in artificial intelligence.

During the turmoil, Satya Nadella, CEO of Microsoft, the largest investor, began to question OpenAI’s corporate structure and argued that it needed to become more stable. Investors and Altman also began to push for structural change. Over this period the board was largely replaced and talent kept leaving. OpenAI gradually turned from an organization bound by shared ideals and values into a commercial company held together by interests and money.

One last step remains: cementing this transformation in the company’s structure and rules.

Last year, when OpenAI raised $6.5 billion from Microsoft, Nvidia and venture capital firms, a key term in the negotiations was that OpenAI must become a for-profit public benefit corporation within two years. If it fails, investors may reclaim their money plus the interest accrued over the period.

This conventional corporate form would let OpenAI return to a board of directors formed by shareholders, and it would make it easier for investors to earn more. With investors given a say and returns no longer capped, the company can attract more investment.

OpenAI also wants to gain more autonomy through architectural changes.

Its relationship with Microsoft is becoming delicate, with tension beneath the surface. Microsoft has so far been OpenAI’s only cloud service provider, insisting on an exclusive cloud arrangement and on extending the term of its intellectual property rights. But as OpenAI’s demand for computing power grows, this restriction has slowed product development and hurt product stability. OpenAI, for its part, hopes to reduce the revenue share (it offers API services through Azure, from which Microsoft takes a cut) and is trying to bring in other cloud service providers. Recently, OpenAI also announced a partnership with SoftBank and Oracle to develop Stargate, a $500 billion data center project.

The two parties also have an agreement under which OpenAI must keep sharing technology and profits with Microsoft until AGI is achieved. The quantifiable threshold they set for achieving AGI is US$100 billion in profit. Last year OpenAI took in only US$4 billion in revenue and was still losing money. For now the US$100 billion profit target looks far away, which means OpenAI will remain bound for a long time.

The agreement also states that the declaration that AGI has been achieved is at the reasonable discretion of the OpenAI board of directors. There is speculation that OpenAI may declare AGI early to free itself from its obligations to Microsoft.

No matter which way you look at it, the transformation to a for-profit company is a reform that OpenAI has to carry out.

Would PBC be a better solution?

A public benefit corporation (PBC) is essentially a type of for-profit company. It carries the "public benefit" label because Delaware corporate law requires PBC directors to balance the economic interests of shareholders, the public benefit purposes specified in the company’s certificate of incorporation, and the interests of stakeholders materially affected by the company’s conduct.

According to the plan, OpenAI will reorganize its for-profit subsidiary OpenAI Global LLC into a PBC and issue common shares. After that, the PBC will no longer be fully controlled by the non-profit board of directors. It will operate in a more independent, market-oriented manner and be overseen by a wider range of shareholders and by the public.

The non-profit organization, OpenAI Nonprofit, will continue to exist, holding a minority stake in the PBC and pursuing charitable work in areas such as health care, education and science.

However, choosing PBC does not mean that OpenAI’s problem of balancing shareholder interests and public interests will be solved.

Anthropic, which was created by former OpenAI employees and also chose the PBC architecture, wrote in an official statement that while the PBC architecture legally allows directors to balance the public interest with maximizing shareholder value, it does not make the board directly accountable to other stakeholders, nor does it align their incentive mechanisms with the public interest.

Anthropic’s general counsel also stated that if PBC board members do not pursue their public welfare mission firmly enough, the public cannot rely on a certain mechanism to sue the company and demand enforcement.

To put it bluntly, under the previous structure the people making decisions and the people making profits were not the same group, so they could at least check and balance each other. Once OpenAI becomes a conventional for-profit company, shareholders will hold both the decision-making power and the right to profits, making it even harder to monitor whether they are abusing that power for private gain.

Therefore, PBC is not the ultimate answer.

Anthropic offers another approach. In a corporate governance experiment, it worked with professors from Yale University and Harvard Law School and with several lawyers to design a complement to the PBC that no one had tried before: the Long-Term Benefit Trust (LTBT).

The LTBT is an independent body of five trustees, all with backgrounds and expertise in artificial intelligence safety, national security, public policy and social enterprise. They are kept free of any financial interest in Anthropic and are charged with independently balancing the public interest against shareholder interests, much like the independent directors on OpenAI’s earlier board. The first five trustees have been selected for one-year terms, and future trustees will be elected by the sitting trustees.

To give the trustees real power, Anthropic created a class of shares (Class T shares) held exclusively by the trust. These shares let the trustees elect and remove a certain number of board members, and within four years the trust will elect a majority of the board. In other words, the trustees have become a new kind of shareholder, one that represents the public interest.

At the same time, Anthropic has established a new board seat, elected by Series C and later investors, to ensure that investors’ views are also represented on the board.

In this way, most of the board answers to both the trust and the investors, striking a better balance between the public interest and the interests of shareholders. Trustees with deeper knowledge of areas such as AI safety and social impact can also help the board make better decisions.

Anthropic has also allowed for the possibility that the structure fails or needs to be unwound. It designed an amendment process in which most changes require agreement between the trustees and Anthropic’s board of directors, or between the trustees and the other shareholders.

In a statement on its website, Anthropic wrote that the public interest is not at odds with business success or shareholder returns, and that in its experience the two often have strong synergies. The company described itself as empiricist and said it wants to see how the LTBT works in practice.

However, the PBC plus Long-Term Benefit Trust model is not necessarily suitable for OpenAI. Unlike Anthropic, OpenAI did not start out as a PBC and also has a non-profit organization, so changing its structure will mean dealing with more issues.

Who is the biggest obstacle on the road to transformation?

The transformation of OpenAI is not only an inevitable capital-driven choice, but also a microcosm of the collision between technical ethics and business logic. The difficulty in transformation lies in how to take into account the interests of all parties and find a balance between financing needs, partnerships and governance mechanisms.

The first step is reaching internal consensus. OpenAI is still controlled by the board of directors of the non-profit organization. In a statement on December 27, 2024, OpenAI said it plans to complete the transformation in 2025, which suggests the plan has support at the top.

But this is only the beginning. The plan will then face rigorous legal and compliance reviews and a valuation of the non-profit organization’s assets.

U.S. law requires that the assets a nonprofit organization ends up holding (including any cash and securities) be worth at least as much as what it gives up.

Although the non-profit organization and the for-profit subsidiary both belong to OpenAI, the former would be giving up control over a world-leading technology. The profits that the for-profit subsidiary would otherwise have left to the non-profit organization, after paying off its investors and employees, would be enormous.

OpenAI’s announcement stated that the non-profit organization’s significant interest in the existing for-profit subsidiary will take the form of shares in the PBC, at a fair valuation determined by independent financial advisers. According to media reports, the restructured non-profit organization is expected to hold at least 25% of the PBC, with a book value of approximately US$40 billion.

As for who will manage the non-profit organization once it has been compensated, what will become of the original board of directors, what work the new non-profit organization will actually do, and how the PBC’s board of directors will be formed, no detailed arrangements have been announced.

A longtime policy researcher who left OpenAI in October last year worried that the non-profit organization, which originally carried the safety and openness mission, would become a mere appendage of the company and abandon its more important work: studying the long-term risks AI may bring, taking part in and promoting the development of policies and standards on AI ethics, safety and social impact, and guiding the healthy development of the industry.

Secondly, OpenAI also needs to resolve its relationship with Microsoft, the largest existing investor.

Since October last year, the two companies have been negotiating the terms that will apply after the restructuring, including: (1) Microsoft’s stake in the new for-profit entity; (2) whether Microsoft will remain OpenAI’s exclusive cloud provider; (3) how long Microsoft will retain the right to use OpenAI’s intellectual property freely in its own products; and (4) whether Microsoft will continue to take 20% of OpenAI’s revenue.

Before OpenAI can bring in new investors, it must settle terms with Microsoft, compensate it adequately, and set new investment rules.

● Musk, one of the co-founders of OpenAI, has filed for an injunction to prevent OpenAI’s transformation into a for-profit company. Source: Video screenshot

The transformation from a non-profit organization into a for-profit public benefit corporation also involves complex legal and tax issues. Altman is also facing a series of lawsuits and injunction requests from former colleagues and industry peers.

Musk, one of the co-founders of OpenAI, has filed for an injunction to prevent OpenAI’s transformation into a for-profit company. He said that when he co-founded the organization and provided seed funding, he was deceived into believing that OpenAI would remain a nonprofit organization. OpenAI called Musk’s complaints unfounded and published eight years of correspondence, dating back to 2015, on its official website. A California judge has not yet decided whether to issue an injunction.

Facebook’s parent company Meta also supports preventing OpenAI’s transformation into a for-profit company. In December last year, Meta sent a letter to California Attorney General Rob Bonta, saying that allowing such a shift would have a significant impact on Silicon Valley and set a very bad precedent.

Meta wrote in the letter: "If OpenAI’s new business model is effective, non-profit investors will receive the same profit-making benefits as investors who invest in for-profit companies in traditional ways, while also receiving tax exemptions granted by the government."

But these are still small problems. What OpenAI needs to worry about most is the continuous loss of internal talent.

Since the ouster crisis in 2023, OpenAI has been losing talent rapidly, with employees worried that it will prioritize commercial products at the expense of safety. When the company takes in capital faster than sound governance mechanisms can keep up, the departure of employees who believe in OpenAI’s original mission will cause even greater chaos.

In a statement late last year, OpenAI said that in 2025 it would need to be more than just a laboratory and a startup and must become an enduring company. How to keep the company growing amid this competition while staying true to the ideal of AGI that benefits all of humanity is the question this structural transformation needs to answer.

[Copyright Statement] The copyright of all content belongs to Mirror Studio and may not be reproduced, excerpted or used in other forms without written permission, unless otherwise stated.