Article source: AI Fan Er

Image source: Generated by AI

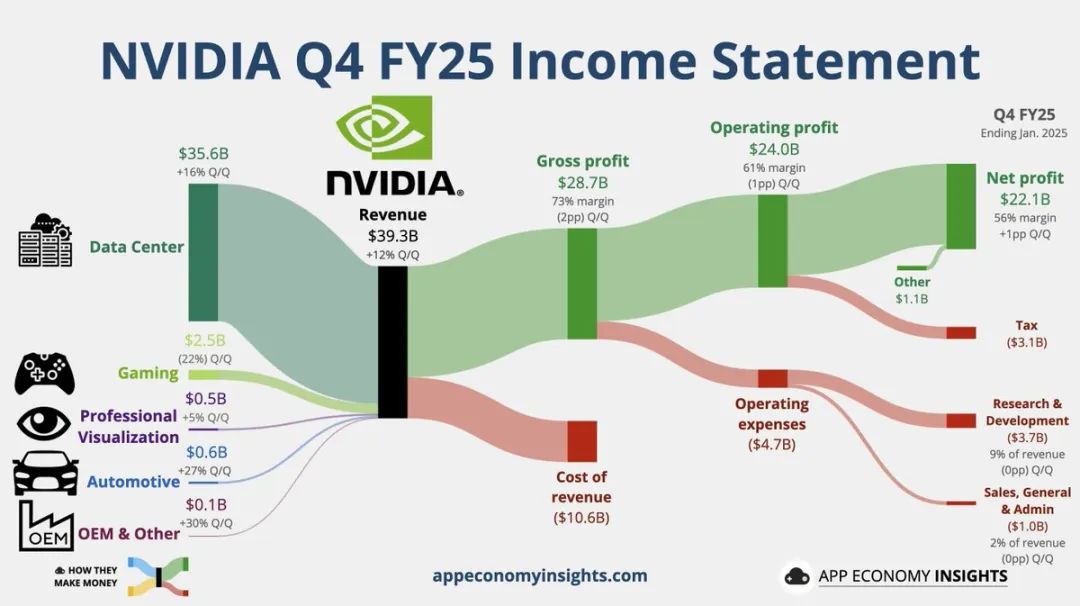

Explosive financials

– Single-quarter revenue hit $39.3 billion (about 284 billion yuan), up 78% year on year, surpassing Meta’s annual revenue

– Single-quarter data center revenue almost doubled (up 93%) to a record US$35.6 billion (approximately 250 billion yuan)

– The data center business has grown tenfold in two years and now accounts for more than 90% of revenue (US$35.6 billion)

– $33.7 billion spent on share buybacks, a rare feat in the history of the tech industry

🚀 Technological hegemony

– Blackwell chips sold out before mass production: $11 billion in orders in the fourth quarter (more than XPeng’s annual revenue), with Microsoft and Google accounting for half

– Reasoning AI is a compute black hole: demand from models such as DeepSeek R1, xAI Grok 3, and GPT-4 has surged a hundredfold, and training efficiency on thousand-GPU clusters is five times that of the previous generation

– A widening technology gap: the U.S. version of the GB200 is 60 times more efficient at AI generation than the China-specific version

Hidden worries emerge

– Pressure on the China front: the chip ban has roughly halved revenue there, and Huawei’s Ascend 910B now reaches 80% of the H100’s performance

– In-house chips diverting orders: Microsoft’s Maia and Google’s TPU v5 have eroded 15% of orders, and yield issues have cut Blackwell’s gross margin by 3%

– Consumer market stalls: the gaming graphics card business unexpectedly declined 11%, and networking equipment sales failed to grow

Huang’s playbook for breaking the deadlock

– “Software breaks the shackles of hardware”: developers can work around export restrictions through algorithmic optimization, and the CUDA ecosystem may be the key to breaking the impasse

– “Reasoning models are demand catalysts”: DeepSeek R1’s long chain-of-thought reasoning needs 100,000 servers running at full load, pushing companies to invest in more compute

– The geopolitical card: global tech giants invest hundreds of billions of dollars in AI infrastructure every year, and that spending remains Nvidia’s anchor

(Note: On January 27, DeepSeek’s “compute efficiency revolution” triggered a stock market shock. Jensen Huang moved quickly to put out the fire, saying that “such models will generate a hundredfold demand for chips.” Blackwell’s single-card compute is five times that of the previous generation, but hidden heat-dissipation risks may become a bottleneck for mass production.)

This earnings call reads like a real-world test of Huang’s Law: as AI enters the era of “deep thinking,” Nvidia’s compute empire is still absorbing global capital. But Huawei’s rise and the wave of in-house chips are, for the first time, putting cracks in the chip king’s crown.